Captive Portal is broken?

-

Yep, that was this one (see above):

@gertjan said in Captive Portal is broken?:

See here: "FreeRadius and quotas, doesn't work since 22.05", and if you use multiple portals (thus multiple interfaces): "Problem with multiple Interfaces since Version 22.05".

For the last issue, there is a Redmine issue open, and there isn't even a patch proposed: just edit the file (add a dot somewhere ;)).

Is there a patch available?

-

No official patches as of today.

If needed, do the editing yourself.

What do I have to edit? I can't find it :=)

-

@opit-gmbh said in Captive Portal is broken?:

i cant find it :=)

Scroll up and see the second post of this thread for the 'bad switch!' patch.

See this one for another patch. Also look at post number 12.

You will have to edit /etc/inc/captiveportal.inc to apply it. If you do not use (FreeRadius) quotas, don't bother patching.

-

And which one should I use? I'm not using any RADIUS setup, just bandwidth limitations...

-

Open this file: /usr/local/captiveportal/index.php and locate this line (around line 251):

$pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, true);

Change true to false:

$pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, false);

Save.

Now the pipes work per user, as they should.

Btw: this is my quick and dirty hack. I've been using this 'false' for several weeks now: it works.

I now see limiters and schedulers per user under Diagnostics > Limiter Info.
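If you would rather script the one-word change than edit by hand, here is a minimal Python sketch. It is not an official patch: it assumes the call appears exactly once in index.php, spelled exactly as quoted above, and that you run it somewhere a Python 3 interpreter is available (for example, on a copy of the file that you then put back). The names `apply_pipe_hack` and `patch_file` are made up for this example.

```python
from pathlib import Path

# File named in the post above.
INDEX_PHP = Path("/usr/local/captiveportal/index.php")

# The exact call from the post; only the last argument changes.
OLD = "captiveportal_get_next_dn_ruleno('auth', 2000, 64500, true);"
NEW = "captiveportal_get_next_dn_ruleno('auth', 2000, 64500, false);"


def apply_pipe_hack(source: str) -> str:
    """Return `source` with the 'true' argument switched to 'false'.

    Refuses to guess: the original call must appear exactly once.
    """
    count = source.count(OLD)
    if count != 1:
        raise ValueError(f"expected exactly one occurrence of the call, found {count}")
    return source.replace(OLD, NEW, 1)


def patch_file(path: Path = INDEX_PHP) -> None:
    """Rewrite `path` in place, keeping the untouched text as <name>.orig."""
    original = path.read_text()
    path.with_name(path.name + ".orig").write_text(original)
    path.write_text(apply_pipe_hack(original))
```

Calling `patch_file()` leaves a backup next to the original (index.php.orig), so the hack is easy to revert if an official fix arrives.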

-

Thanks, it seems to work.

Limiters:

02002: 2.048 Mbit/s 0 ms burst 0

q133074 100 sl. 0 flows (1 buckets) sched 67538 weight 0 lmax 0 pri 0 droptail

sched 67538 type FIFO flags 0x0 16 buckets 0 active

02003: 2.048 Mbit/s 0 ms burst 0

q133075 100 sl. 0 flows (1 buckets) sched 67539 weight 0 lmax 0 pri 0 droptail

sched 67539 type FIFO flags 0x0 16 buckets 0 active

02000: 2.048 Mbit/s 0 ms burst 0

q133072 100 sl. 0 flows (1 buckets) sched 67536 weight 0 lmax 0 pri 0 droptail

sched 67536 type FIFO flags 0x0 16 buckets 0 active

02001: 2.048 Mbit/s 0 ms burst 0

q133073 100 sl. 0 flows (1 buckets) sched 67537 weight 0 lmax 0 pri 0 droptail

sched 67537 type FIFO flags 0x0 16 buckets 0 active

I see 4 limiters here, but I only have 2 captive portal users. Is this OK? Is it because one is for upload and one for download?

-

@opit-gmbh

For each user, there is one download and one upload limiter.
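Following that rule of thumb, a quick sanity check is to count the pipes listed under Diagnostics > Limiter Info and halve the count. This is a minimal Python sketch that assumes the text format shown in the output above (each pipe line starts with its number and a colon, e.g. `02002: 2.048 Mbit/s ...`). The function names are hypothetical, and the two-pipes-per-user assumption comes from this thread, not from pfSense documentation. It does not try to tell which pipe of a pair is upload and which is download.

```python
import re
from typing import List

# Pipe header lines look like "02002: 2.048 Mbit/s 0 ms burst 0";
# queue ("q133074 ...") and scheduler ("sched ...") lines do not match.
PIPE_RE = re.compile(r"^(\d+):")

# Sample copied from the Limiter Info output above (two portal users).
SAMPLE = """\
02002: 2.048 Mbit/s 0 ms burst 0
q133074 100 sl. 0 flows (1 buckets) sched 67538 weight 0 lmax 0 pri 0 droptail
sched 67538 type FIFO flags 0x0 16 buckets 0 active
02003: 2.048 Mbit/s 0 ms burst 0
q133075 100 sl. 0 flows (1 buckets) sched 67539 weight 0 lmax 0 pri 0 droptail
sched 67539 type FIFO flags 0x0 16 buckets 0 active
02000: 2.048 Mbit/s 0 ms burst 0
q133072 100 sl. 0 flows (1 buckets) sched 67536 weight 0 lmax 0 pri 0 droptail
sched 67536 type FIFO flags 0x0 16 buckets 0 active
02001: 2.048 Mbit/s 0 ms burst 0
q133073 100 sl. 0 flows (1 buckets) sched 67537 weight 0 lmax 0 pri 0 droptail
sched 67537 type FIFO flags 0x0 16 buckets 0 active
"""


def pipe_numbers(limiter_info: str) -> List[int]:
    """Return the sorted pipe numbers found in the Limiter Info text."""
    pipes = []
    for line in limiter_info.splitlines():
        match = PIPE_RE.match(line.strip())
        if match:
            pipes.append(int(match.group(1)))
    return sorted(pipes)


def estimated_portal_users(limiter_info: str) -> int:
    # Assumption taken from this thread: one download and one upload
    # limiter per logged-in user, so two pipes per user.
    return len(pipe_numbers(limiter_info)) // 2


print(pipe_numbers(SAMPLE))            # [2000, 2001, 2002, 2003]
print(estimated_portal_users(SAMPLE))  # 2
```

With the output above, the four pipes 2000 to 2003 map to the two logged-in users, which matches the answer given here.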

Cool, thanks.

Here is the patch I'm using under System > Patches:

diff --git a/opit/usr/local/captiveportal/index.php b/opit/usr/local/captiveportal/index.php

--- a/opit/usr/local/captiveportal/index.php

+++ b/opit/usr/local/captiveportal/index.php

@@ -249,7 +249,7 @@

$context = 'first';

}

- $pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, true);

+ $pipeno = captiveportal_get_next_dn_ruleno('auth', 2000, 64500, false);

/* if the pool is empty, return appropriate message and exit */

if (is_null($pipeno)) {

$replymsg = gettext("System reached maximum login capacity");

-

@gertjan said in Captive Portal is broken?:

Hi, I'm using 22.05 on a SG 4100. In the past, I was using 2.6.0 on a home made pfSense device.

If my captive portal wasn't working, I would lose my job - sort-of, as I use the portal for a hotel.

Last time I looked, it worked.

I'm using a dedicated interface for my portal, and as LAN has already 192.168.1.1/24, my portal uses 192.168.2.1/24 - DHCP pool 192.168.2.5 -> 254.

I use the Resolver, nearly default settings.

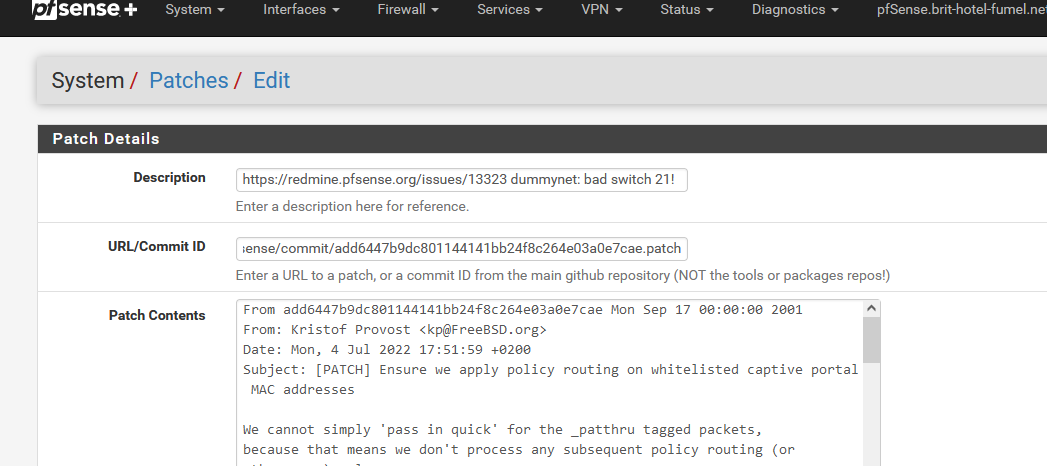

The pfSense Patches package has one patch for the portal:

This is the patch ID to be used : https://github.com/pfsense/pfsense/commit/add6447b9dc801144141bb24f8c264e03a0e7cae.patch

After installing the patch: same error.

dummynet: bad switch 21!

dummynet: bad switch 21!

OpIT GmbH referenced this topic.