IPsec is very slow between two pfSense routers

-

@cool_corona Yes I tested with SMB, but also Plex cannot stream any video over the IPSEC tunnel without buffering every 2 min

-

@kevingoos And the funny part is, hardware doesn't matter. No matter how much power the FW has, it makes no difference.

It's much better on pfSense 2.2.6. There it works quickly and without issues.

Food for thought.

-

@kevingoos Have you tried the iPerf tests I suggested?

Until you do, we cannot really say what is what. We need to see whether the tunnel can carry the “up to 30 Mbit” possible in your scenario.

After that test, if iPerf cannot reach that throughput either, look into avoiding fragmentation by using MSS clamping on the tunnel, as I suggested. I don’t think that is your issue though, so…

My guess is your tunnel is fine when you run an iPerf test with 3 or 4 streams (it will pass about 30 Mbit).

The issue is very likely the asymmetric speeds at both ends combined with the latency introduced. That’s a real throughput killer. It can very quickly kill single-stream interactive protocols like SMB.

-
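
To make the latency point above concrete: a single TCP stream can have at most one window of data in flight per round trip, so its throughput is bounded by window size divided by round-trip time. The sketch below only does that arithmetic. The 64 KiB window and the round-trip times are illustrative assumptions, not measurements from this thread, and the function name is made up for the example.

```python
def max_single_stream_mbit(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP stream's throughput in Mbit/s (window / RTT)."""
    if rtt_ms <= 0:
        raise ValueError("rtt_ms must be positive")
    bits_per_second = window_bytes * 8 / (rtt_ms / 1000.0)
    return bits_per_second / 1_000_000


if __name__ == "__main__":
    window = 64 * 1024  # assumed effective window of 64 KiB (hypothetical)
    for rtt in (3, 20, 50):  # assumed round-trip times in milliseconds
        bound = max_single_stream_mbit(window, rtt)
        print(f"RTT {rtt:>2} ms -> at most {bound:.1f} Mbit/s per stream")
```

Each parallel stream gets its own window, which is why a multi-stream iPerf run can fill a link that a single SMB transfer cannot.

-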

@keyser Sorry, I currently have a broken VPN because I updated the 7100 to the latest version, but the update for the 2100 is not ready yet...

I will get back to you the moment I get this updated.

-

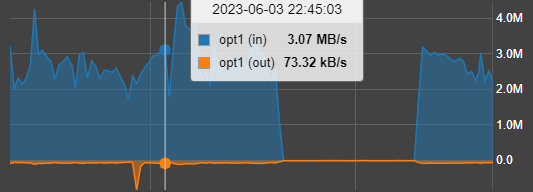

So in the end I took some time off from this problem, and today I switched to WireGuard site-to-site.

And now I am very happy with the performance (this is with SMB, so not yet the full potential).

So for me this problem is solved.

-

The ARM GCM Problem is gone. It was in 22.05 and after 23.01 GCM runs again.

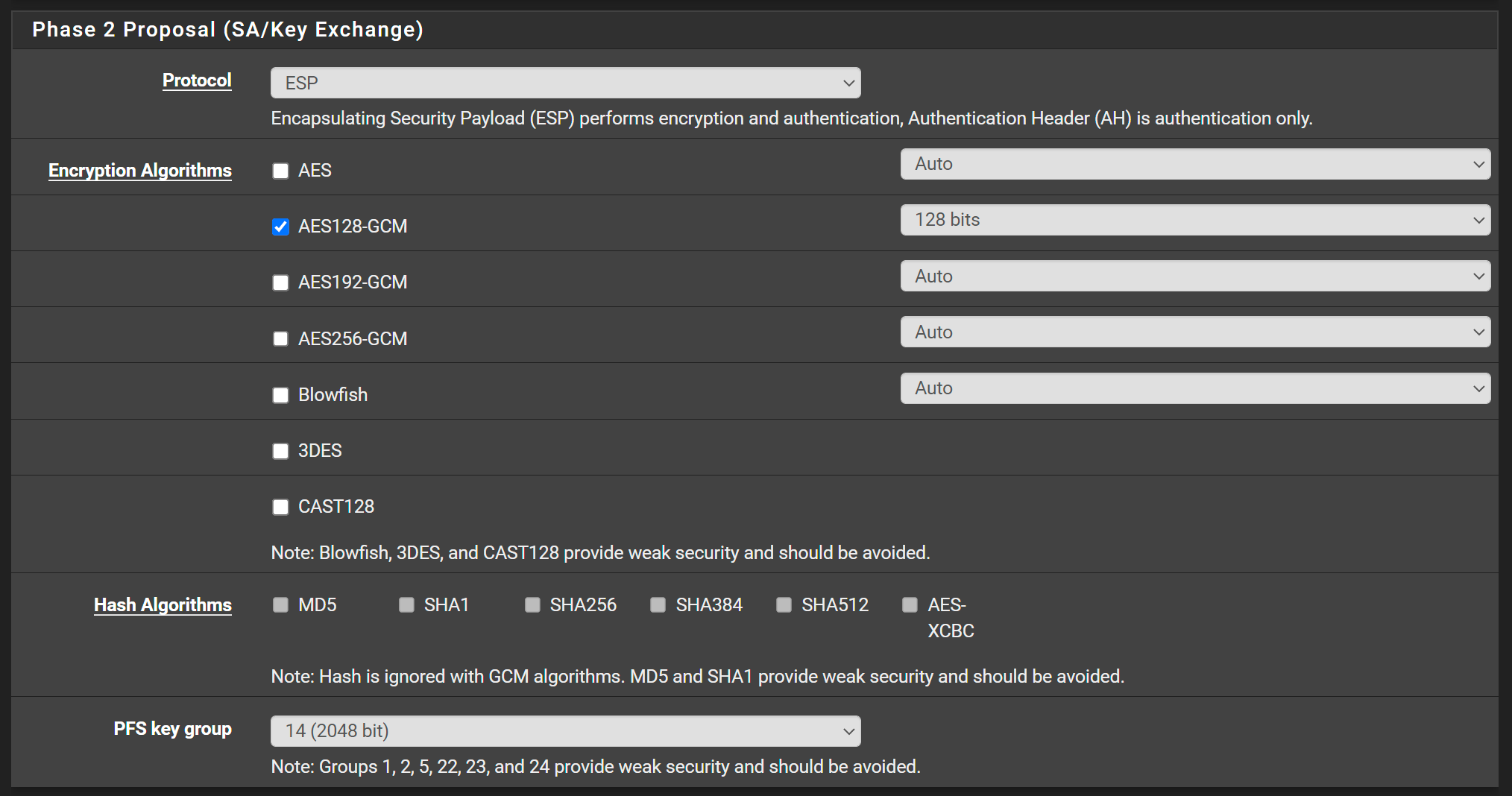

I run my S2S with AES-GCM 128, SHA256, DH19, and upstream is the limit.

I run my NAS backup over it, and if the DOCSIS 3.1 modem is stable, it runs for days at max upstream speed.

-

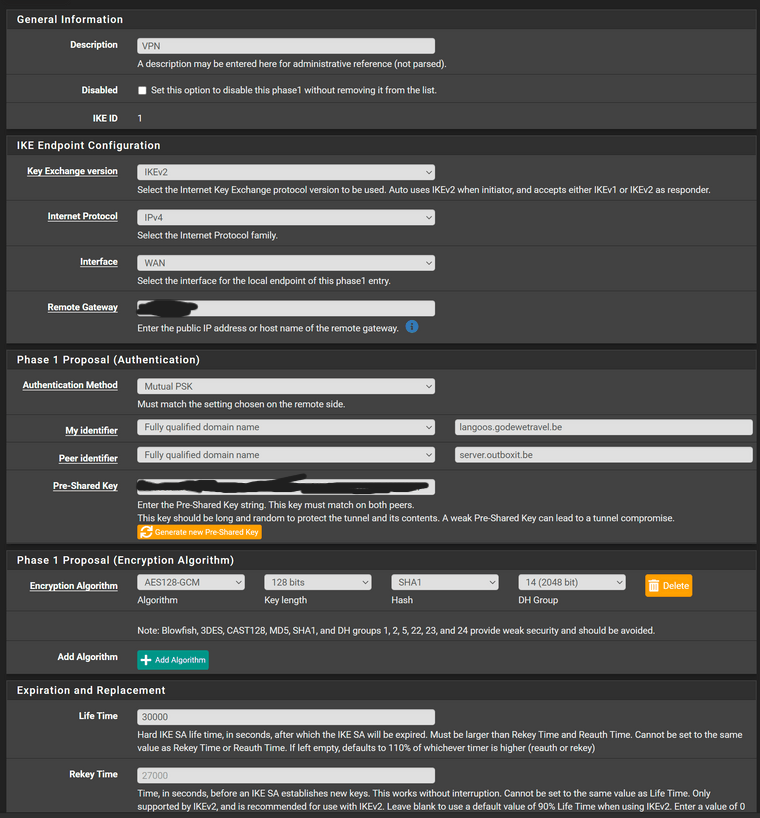

Hello.

I have two pfSense 2.6.0 boxes configured with IPsec, with AES-NI on the hardware. The latency between the two links is 3 ms.

With IPsec, the transfer does not exceed 5 MB/s, whereas with OpenVPN the transfer reaches 50 MB/s, which is the maximum bandwidth of the internet link.

I have already tested all protocols in phase 1 and 2 and the rate does not change.

What could be "blocking" IPSEC?

-

@patrick-pesegodinski Could be fragmentation. Try setting MSS to 1350 or 1300 to start and test.

How are you performing the speedtest? On a client behind the pfsense?

Also, please post the specs of your system.

-

The test is carried out by transferring files from the server at one end to computers at the other end.

Should I reduce the MSS at both ends?

Main Pfsense Configuration:

Intel(R) Core(TM) i5-8400 CPU @ 2.80GHz

Current: 2800MHz, Max: 2801MHz

6 CPUs: 1 package(s) x 6 core(s)

AES-NI CPU Crypto: Yes (active)

QAT Crypto: No

Secondary Pfsense Configuration:

Intel(R) Core(TM) i3-8100 CPU @ 3.60GHz

4 CPUs: 1 package(s) x 4 core(s)

AES-NI CPU Crypto: Yes (active)

QAT Crypto: No

-

@patrick-pesegodinski

What NICs are used?

Try reducing MSS on both.

Are these SMB file transfers?

-

- The NICs are TP-LINK TG-3468.

- Yes, these are SMB file transfers.

-

@michmoor Should the IPsec tunnel be restarted after MSS modification?

-

@patrick-pesegodinski It doesn't have to be, I believe.

-

@patrick-pesegodinski I know this sounds like BS, but you need to try and benchmark the VPN connection with something other than SMB. SMB is NOTORIOUSLY bad on “less than 1500 bytes” MTU links like a VPN. It’s all over the place if any fragmentation is involved.

So try clamping down your MSS and benchmark it with an iPerf3 TCP test.

-

@keyser I understand your thinking, but with OpenVPN I get transfer rates 10x higher than IPSEC.

-

@patrick-pesegodinski I know, and that’s likely because OpenVPN knows how to participate in MTU discovery, so the SMB client knows the proper packet sizes to use.

IPsec VPN on pfSense does not play nice in this area. It’s a known bug and has been for years, but unfortunately IPsec VPN sees very little developer love, so we have to work around it.

That’s why we need MSS clamping and an iPerf3 test. Then we really know where the culprit is buried.

-
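
For picking an MSS value to clamp to, a rough estimate is the WAN MTU minus the tunnel overhead minus the inner TCP/IP headers. The sketch below assumes ESP in tunnel mode over IPv4 with AES-GCM and a 16-byte ICV. The overhead table and the function name are illustrative assumptions, and the real overhead depends on the cipher, IP version, and whether NAT traversal is in use.

```python
# Assumed per-packet overhead for ESP tunnel mode over IPv4 with AES-GCM.
# Illustrative figures only; other ciphers and IPv6 give different totals.
ESP_TUNNEL_OVERHEAD = {
    "outer_ipv4_header": 20,
    "esp_header": 8,       # SPI + sequence number
    "aes_gcm_iv": 8,
    "esp_trailer_max": 5,  # up to 3 bytes of padding + pad length + next header
    "aes_gcm_icv": 16,
}
NAT_T_UDP_HEADER = 8       # extra UDP encapsulation when NAT traversal is active
INNER_TCP_IP_HEADERS = 40  # inner IPv4 (20) + TCP (20), without options


def estimate_mss(wan_mtu: int = 1500, nat_t: bool = False) -> int:
    """Estimate the largest TCP MSS that avoids fragmenting the ESP packet."""
    overhead = sum(ESP_TUNNEL_OVERHEAD.values())
    if nat_t:
        overhead += NAT_T_UDP_HEADER
    return wan_mtu - overhead - INNER_TCP_IP_HEADERS


if __name__ == "__main__":
    print("1500-byte WAN MTU:", estimate_mss(1500))
    print("1500-byte WAN MTU with NAT-T:", estimate_mss(1500, nat_t=True))
    print("1492-byte WAN MTU (PPPoE):", estimate_mss(1492))
```

Under these assumptions the estimate for a 1500-byte WAN MTU comes out close to 1400, so the 1350 or 1300 suggested earlier in the thread simply leaves more headroom, for example on a PPPoE link with a 1492-byte MTU.

-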

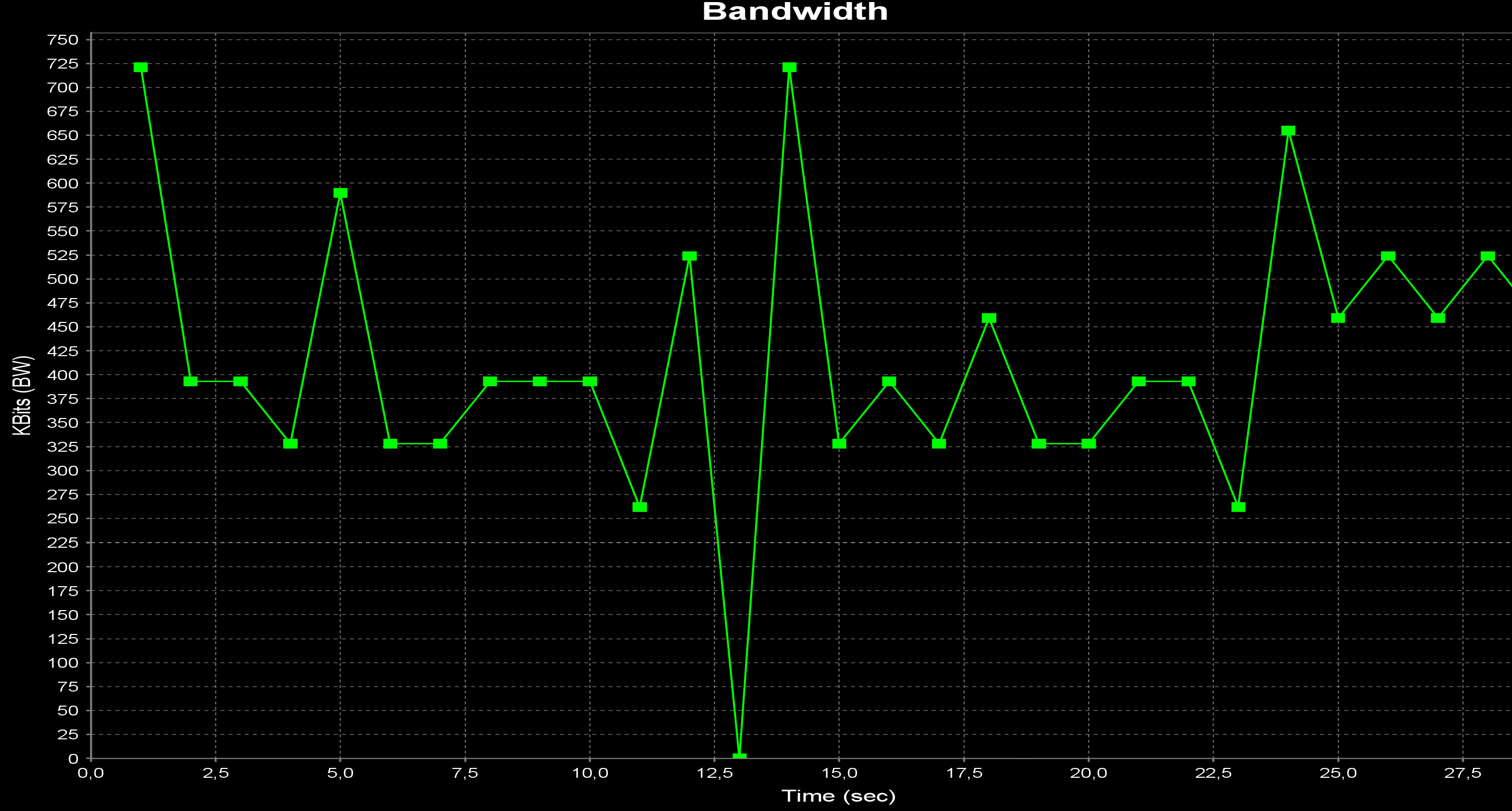

@keyser Hello, friend.

Here is the iperf test:

Connecting to host 172.29.0.1, port 5201

[ 5] local 10.0.0.1 port 45903 connected to 172.29.0.1 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 26.3 MBytes 221 Mbits/sec 19 64.1 KBytes

[ 5] 1.00-2.00 sec 27.1 MBytes 227 Mbits/sec 15 61.8 KBytes

[ 5] 2.00-3.00 sec 26.7 MBytes 224 Mbits/sec 15 82.0 KBytes

[ 5] 3.00-4.00 sec 27.1 MBytes 228 Mbits/sec 28 57.6 KBytes

[ 5] 4.00-5.00 sec 26.1 MBytes 219 Mbits/sec 16 71.2 KBytes

[ 5] 5.00-6.00 sec 26.6 MBytes 224 Mbits/sec 19 67.0 KBytes

[ 5] 6.00-7.00 sec 27.1 MBytes 227 Mbits/sec 15 27.4 KBytes

[ 5] 7.00-8.00 sec 26.5 MBytes 223 Mbits/sec 36 68.6 KBytes

[ 5] 8.00-9.00 sec 26.8 MBytes 225 Mbits/sec 18 81.6 KBytes

[ 5] 9.00-10.00 sec 21.0 MBytes 176 Mbits/sec 67 81.7 KBytes

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 261 MBytes 219 Mbits/sec 248 sender

[ 5] 0.00-10.00 sec 261 MBytes 219 Mbits/sec receiver

iperf Done.

-

@patrick-pesegodinski Is this before or after clamping down MSS?

You are seeing about 220 Mbit/s, which is around 27 MB/s (roughly half your OpenVPN throughput). But I cannot tell whether you ran a single-stream iPerf test or one with multiple parallel streams.

-
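
The unit conversion behind that comparison is easy to get wrong, so here is the arithmetic spelled out. The three input figures are the ones reported in this thread (219 Mbit/s from iPerf, 5 MB/s for SMB over IPsec, 50 MB/s over OpenVPN). The helper name is made up for the example.

```python
def mbit_to_mbyte(mbit_per_s: float) -> float:
    """Convert Mbit/s to MB/s (decimal megabytes, 8 bits per byte)."""
    return mbit_per_s / 8.0


iperf_mbit = 219.0      # sender average from the iPerf output above
smb_ipsec_mbyte = 5.0   # SMB over IPsec, as reported earlier in the thread
openvpn_mbyte = 50.0    # file transfer over OpenVPN, as reported earlier

iperf_mbyte = mbit_to_mbyte(iperf_mbit)
print(f"iPerf over IPsec: {iperf_mbyte:.1f} MB/s")
print(f"  vs SMB over IPsec: {iperf_mbyte / smb_ipsec_mbyte:.1f}x")
print(f"  vs OpenVPN transfer: {iperf_mbyte / openvpn_mbyte:.2f}x")
```

So the tunnel itself carries several times what the SMB transfer achieves, which points at the protocol and packet sizing more than at raw IPsec throughput.

-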

@keyser Test performed without changing the MSS.

I used the iperf client on pfSense itself with the default settings.

-

@patrick-pesegodinski

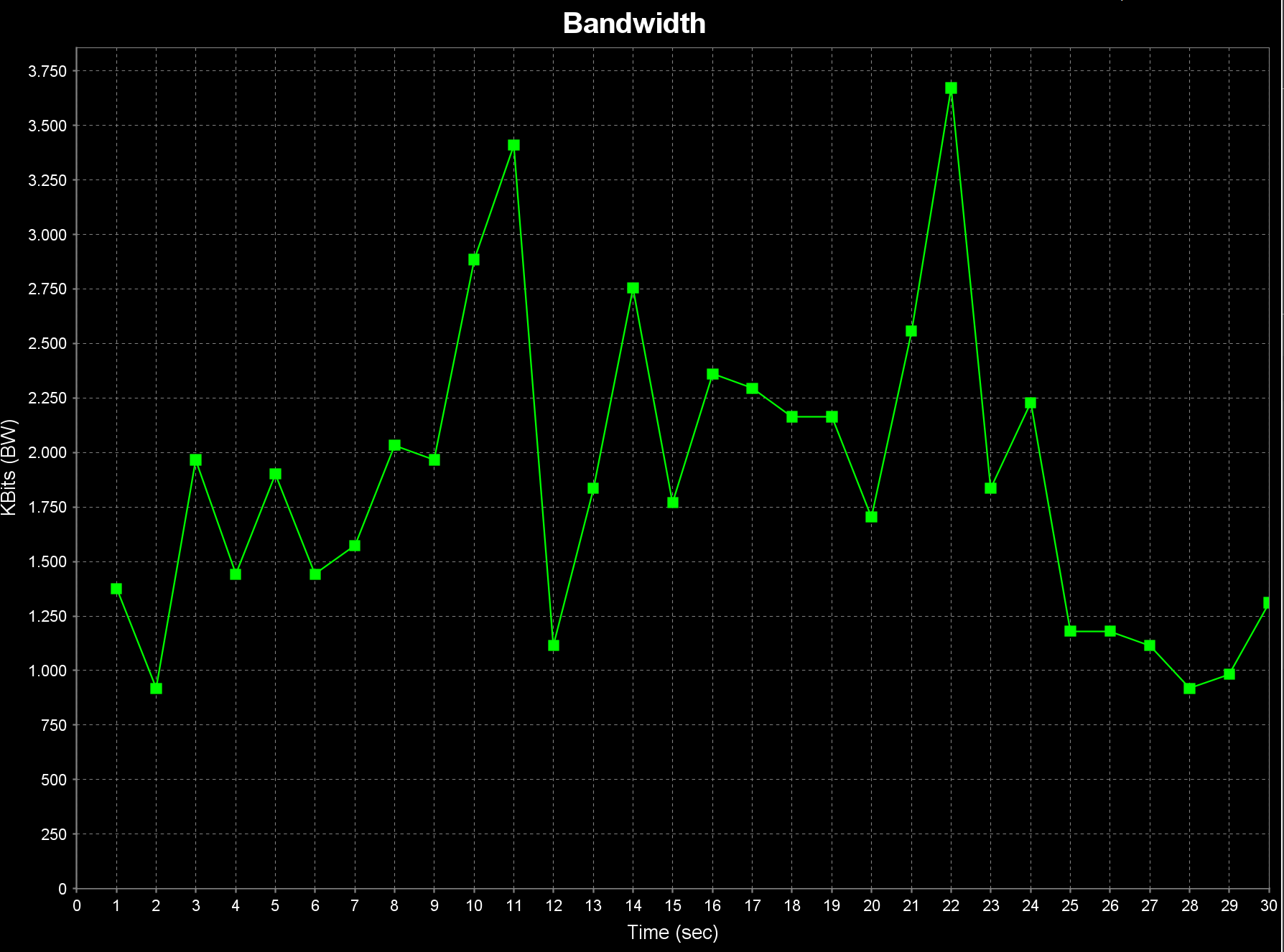

Post your iPerf syntax here so we can understand what you are doing.

Something along the lines of

iperf3 -c 192.168.70.26 -P 50 -t 30

Also post the results from before and after the MSS change.
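
To make the before/after comparison less error-prone, iperf3 can emit its report as JSON with `-J`, which is easy to summarize. The following is only a sketch: the helper names are made up for the example, the server address is the placeholder from the command above, and it assumes a TCP test, where the report contains `end.sum_sent` and `end.sum_received`.

```python
import json
import subprocess


def run_iperf3(server: str, streams: int = 1, seconds: int = 30) -> dict:
    """Run an iperf3 TCP client test and return its parsed JSON report."""
    cmd = ["iperf3", "-c", server, "-P", str(streams), "-t", str(seconds), "-J"]
    completed = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(completed.stdout)


def summarize(report: dict) -> dict:
    """Extract sender/receiver throughput and retransmits from a TCP report."""
    end = report["end"]
    return {
        "sent_mbit": end["sum_sent"]["bits_per_second"] / 1e6,
        "received_mbit": end["sum_received"]["bits_per_second"] / 1e6,
        "retransmits": end["sum_sent"].get("retransmits", 0),
    }


def compare(before: dict, after: dict) -> str:
    """Format a one-line before/after comparison of two iperf3 reports."""
    b, a = summarize(before), summarize(after)
    return (
        f"before: {b['received_mbit']:.0f} Mbit/s, {b['retransmits']} retr | "
        f"after: {a['received_mbit']:.0f} Mbit/s, {a['retransmits']} retr"
    )
```

One way to use it: call `run_iperf3("192.168.70.26", streams=1)` once before changing the MSS and once after, then pass both reports to `compare`. Running it with `streams=1` and again with a higher count also shows whether a single stream is the bottleneck.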