OpenVPN interfering with CARP Failover

-

Hello,

I have set up 2 identical pfSense instances and configured failover with CARP. I have 3 addresses available on all interfaces (including the WAN), so I have set up Outbound NAT for my CARP VIP and it works great. If I open a few connections on a client behind the firewalls (like a download) and then trigger the CARP maintenance mode, the firewall fails over correctly within 1-2 seconds and the download on the client does not even disconnect (which is amazing).

So far so good

However, as soon as I start the OpenVPN server, which I have set up on the CARP VIP of the WAN (so it also fails over), the regular failover no longer works as expected. When I then do the same download test on the client behind the firewalls, it disconnects and has to rebuild all connections.

Also the VPN Server correctly gets restarted on the second node and the client automatically reconnects (so expected behavior)

If I then disable the OpenVPN server again and try again, the failover works again as expected without any outage.

How are these two settings related and why are they interfering with each other?

pfSense version 2.7.2

Any Help is appreciated.

Thanks

-

EDIT:

It definitely has something to do with the OpenVPN server being on the WAN CARP VIP interface: when I change the OpenVPN interface to the WAN, the regular failover works again. (However, then there is obviously no failover of the OpenVPN server, as it is not on the WAN VIP.)

-

Is the OpenVPN server set up as a WAN with a gateway? If so, pfSense could be seeing it go down when the VIP switches and initiating the state killing that goes with a gateway going down.

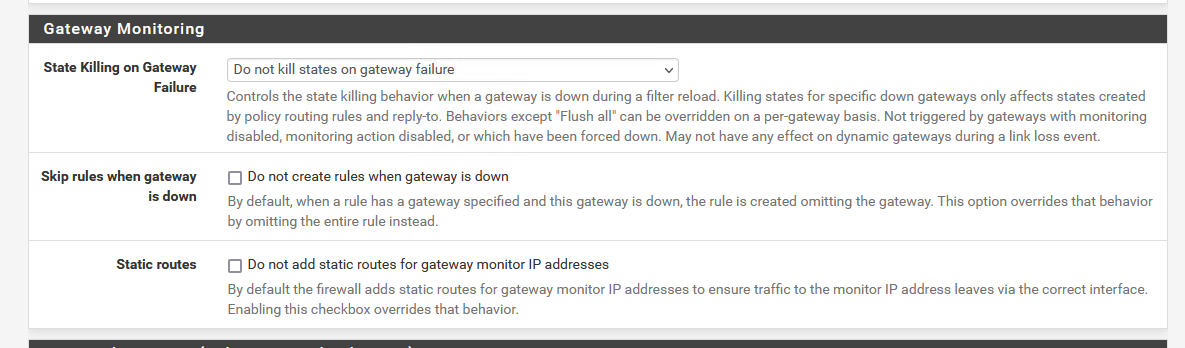

How do you have gateway state killing configured in Sys > Adv > Misc? If it's set to 'flush states' that would produce the behaviour you're seeing. All states would be killed which would break existing connections.

Steve

-

Thanks @stephenw10 for the answer. I have not enabled state killing in Sys -> Adv -> Misc.

Just to make sure I am not missing something obvious with the OpenVPN setup:

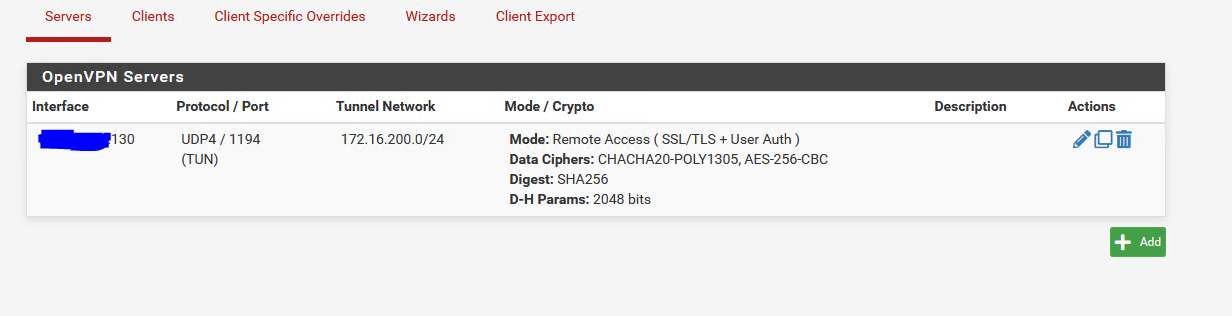

I have the interface of the OpenVPN server set to the public CARP VIP

Public CARP VIP is .130

FWL01 is .131

FWL02 is .132

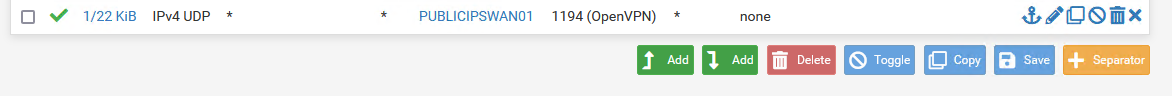

(not the real IPs)

I then have a firewall rule on the WAN to allow traffic to these 3 IPs (.130 .131 .132)

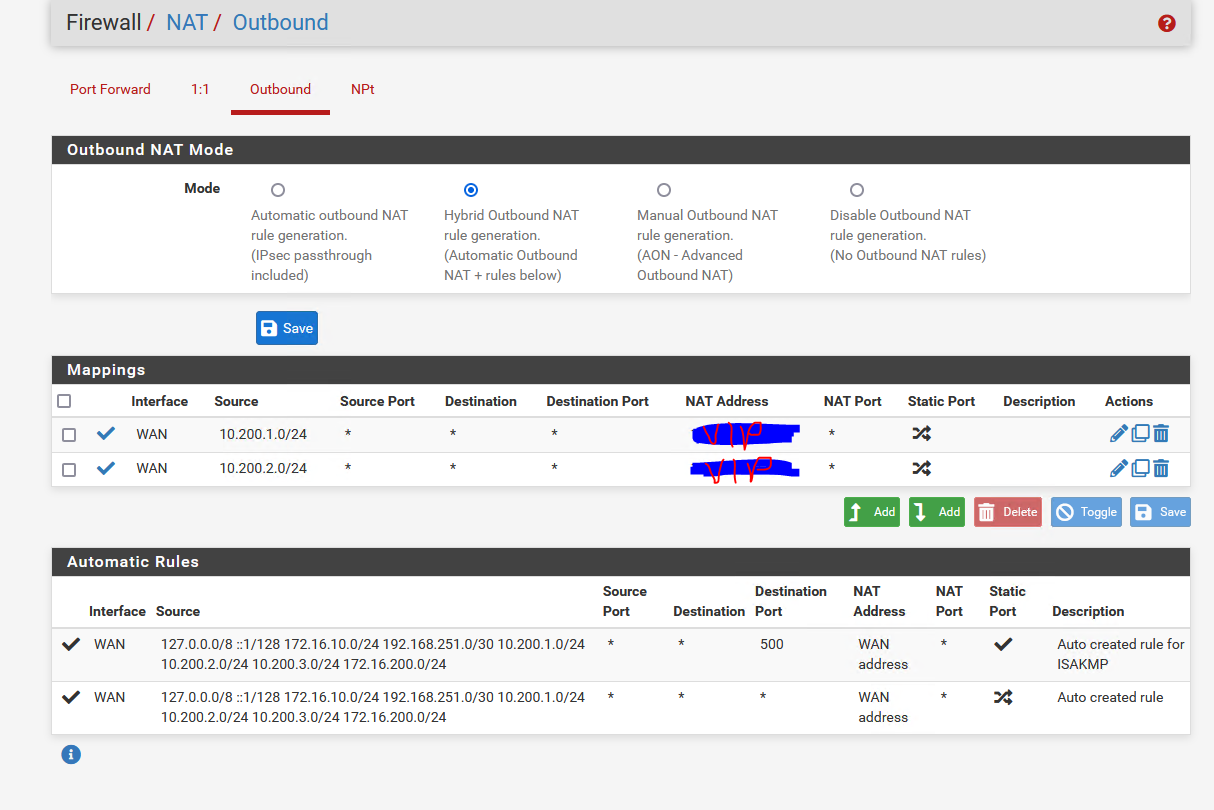

I have also setup Outbound NAT so the local Clients initiate connections with the public VIP

How I tested

Without OpenVPN Server Running

FWL01 is master (default)

OpenVPN server set to disabled on FWL01

clients behind the Firewall can reach the internet.

I trigger the "enter persistent carp maintenance mode on FWL01 (the master)" and the Firewall fails over

FWL02 becomes the master and the Clients behind the firewalls retain all their states and no connection gets killed (downloads are not interrupted, RDP session to Clients also does not get interrupted)

With OpenVPN Server Running

FWL01 is master (default)

OpenVPN server running on FWL01

Everything works as expected: Clients can connect to the OpenVPN Server and clients behind the Firewall can reach the internet.

I trigger the "enter persistent carp maintenance mode on FWL01 (the master)" and the Firewall fails over

FWL02 becomes the master and the OpenVPN clients get disconnected and reconnect after 5 seconds automatically (expected behavior)

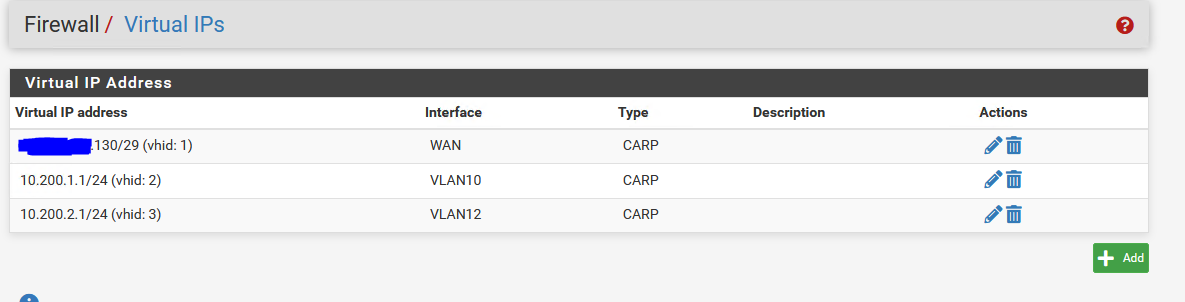

The Clients behind the firewalls get all states killed and need to rebuild them (NOT seamless anymore)

Here is my VIP config

Also, the state creator IDs are the same on both nodes

Can anyone with a HA cluster and OpenVPN reproduce this?

I have tried this on pfSense 2.6.0, 2.7.0 and 2.7.2, always with the same issue.

Any help is appreciated

Thanks

-

@UserCo We can reproduce this issue with our ha cluster running version 23.09.1

Some process is killing states it should not touch when bringing the openvpn server up on the active node.

We are currently discussing this issue with the Netgate Support.

-

Hmm, the OpenVPN tunnel network is shown in the auto outbound NAT rules, which means pfSense sees it as a LAN. That should mean it doesn't run any of the WAN IP scripts when it comes up.

However, what is logged when it does?

If you restart the OpenVPN server without failing over does that also break existing connections?

-

@stephenw10 It does not break connections consistently when just restarting the ovpns.

It does, for example, if you fail over to your secondary node and then restart the ovpns on the primary (inactive) node, but only on the first restart of the service.

Here are the logs for that specific scenario:

OpenVPN Log

Feb 2 17:59:04 openvpn 49241 Initialization Sequence Completed
Feb 2 17:59:04 openvpn 49241 UDPv4 link remote: [AF_UNSPEC]
Feb 2 17:59:04 openvpn 49241 UDPv4 link local (bound): [AF_INET] x.x.x.181:1201
Feb 2 17:59:04 openvpn 49241 /usr/local/sbin/ovpn-linkup ovpns2 1500 0 10.150.11.1 255.255.255.0 init
Feb 2 17:59:04 openvpn 49241 /sbin/ifconfig ovpns2 10.150.11.1/24 mtu 1500 up
Feb 2 17:59:04 openvpn 49241 TUN/TAP device /dev/tun2 opened
Feb 2 17:59:04 openvpn 49241 TUN/TAP device ovpns2 exists previously, keep at program end
Feb 2 17:59:04 openvpn 49241 WARNING: experimental option --capath /var/etc/openvpn/server2/ca
Feb 2 17:59:04 openvpn 49241 Note: OpenSSL hardware crypto engine functionality is not available
Feb 2 17:59:04 openvpn 49241 NOTE: the current --script-security setting may allow this configuration to call user-defined scripts
Feb 2 17:59:04 openvpn 49120 DCO version: FreeBSD 14.0-CURRENT amd64 1400094 #1 plus-RELENG_23_09_1-n256200-3de1e293f3a: Wed Dec 6 21:00:32 UTC 2023 root@freebsd:/var/jenkins/workspace/pfSense-Plus-snapshots-23_09_1-main/obj/amd64/Obhu6gXB/var/jenkins/workspace/pfSense-Plus-snapshots-23_09_1
Feb 2 17:59:04 openvpn 49120 library versions: OpenSSL 3.0.12 24 Oct 2023, LZO 2.10
Feb 2 17:59:04 openvpn 49120 OpenVPN 2.6.8 amd64-portbld-freebsd14.0 [SSL (OpenSSL)] [LZO] [LZ4] [PKCS11] [MH/RECVDA] [AEAD] [DCO]
Feb 2 17:59:04 openvpn 13686 SIGTERM[hard,] received, process exiting
Feb 2 17:59:04 openvpn 35804 Flushing states on OpenVPN interface ovpns2 (Link Down)
Feb 2 17:59:04 openvpn 13686 /usr/local/sbin/ovpn-linkdown ovpns2 1500 0 10.150.11.1 255.255.255.0 init
Feb 2 17:59:04 openvpn 13686 /sbin/ifconfig ovpns2 10.150.11.1 -alias
Feb 2 17:59:02 openvpn 13686 event_wait : Interrupted system call (fd=-1,code=4)

Syslog General

Feb 2 17:59:05 php-fpm 35457 /rc.newwanip: Interface is disabled, nothing to do.
Feb 2 17:59:05 php-fpm 35457 /rc.newwanip: rc.newwanip: Info: starting on ovpns2.
Feb 2 17:59:04 check_reload_status 467 rc.newwanip starting ovpns2
Feb 2 17:59:04 kernel ovpns2: link state changed to UP
Feb 2 17:59:04 check_reload_status 467 Reloading filter
Feb 2 17:59:04 php-fpm 43717 OpenVPN PID written: 49241
Feb 2 17:59:04 check_reload_status 467 Reloading filter
Feb 2 17:59:04 kernel ovpns2: link state changed to DOWN

-

Hmm, I expect the server to be down already if it's running on the CARP VIP since that will be unavailable on the backup node.

Does your tunnel subnet also appear on auto OBN rules?

Is the server interface assigned?

-

@stephenw10 In my scenario described above ovpns is not running on a carp ip but native wan, if we bind it to a carp ip states clear on each failover no matter what.

If ovpns is bound to native wan ip states do not reset with each failover and ovpns server will not stop and start based on carp ip status.

Tunnel subnet is in auto OBN rules.

Ovpn server interface is not assigned currently for debugging.

Here are some clarifications:

- If ovpns is disabled, no states clear and everything works perfectly

- If ovpns is active and bound to the carp wan ip, each failover clears states (ovpns starts and stops depending on carp state)

- If ovpns is active and bound to the native wan ip, a carp failover does not usually trigger a state reset, but does in some cases (if you restarted ovpns on the passive node)

- The first restart of ovpns often resets states; following restarts do not, until you fall back or make interface changes

- Disabling ovpns on the primary node and syncing the config to the secondary node will cause a state reset every time carp is active on the secondary node (until you enable and disable ovpns again on the secondary node)

- We do not have any issues with xmlrpc or state sync, they work perfectly fine

To me it looks like this is some wrapper handling bs but i cant find the script or function causing it.
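One rough way to narrow this down: the OpenVPN log excerpt earlier in the thread contains the message "Flushing states on OpenVPN interface ovpns2 (Link Down)", so counting those messages per interface shows whether a flush lines up with each state reset. This is only a sketch; the helper name `flush_counts` and the log path in the usage comment are assumptions, not something confirmed in this thread.

```shell
#!/bin/sh
# Sketch: count "Flushing states" log messages per OpenVPN interface.
# flush_counts is a hypothetical helper name; the log file is passed in by
# the caller because its location is an assumption.

flush_counts() {
    # Pull out the interface name that follows the message text, then
    # print "<interface> <count>" for every interface found.
    sed -n 's/.*Flushing states on OpenVPN interface \([^ ]*\).*/\1/p' "$1" |
        sort | uniq -c | awk '{ print $2, $1 }'
}

# Example (path is an assumption, adjust to your system):
#   flush_counts /var/log/openvpn.log
```

If the count goes up by one on every failover where connections drop, the link-down handling is a good candidate for the culprit.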

-

Ah, OK that's not the same then.

So do you have the server assigned as an interface?

Do you see the tunnel subnet in auto outbound NAT rules?

Which states are cleared?

If the server is running on the WAN directly I assume it's there only for access to the firewall itself. Rules passing traffic there should be set to not sync states since they would not be valid on the other node.

-

@stephenw10 Not the same as what? It is exactly the same issue @UserCo is experiencing.

If an OpenVPN server is bound to a wan interface, wan states are cleared if the service starts or stops after an interface change.

@stephenw10 said in OpenVPN interfering with CARP Failover:

So do you have the server assigned as an interface?

As stated before, there is no interface assigned for the ovpns currently for debugging, but this does not seem to make a difference.

@stephenw10 said in OpenVPN interfering with CARP Failover:

Do you see the tunnel subnet in auto outbound NAT rules?

As stated before, we see the tunnel network on outbound NAT rules.

@stephenw10 said in OpenVPN interfering with CARP Failover:

Which states are cleared?

At least all WAN states are cleared, we have not verified if really all states are cleared since it does not matter that much in our case.
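For anyone who wants to verify exactly which states disappear, here is a minimal sketch that compares two `pfctl -ss` snapshots taken before and after the event. The helper names `normalize_states` and `lost_states` are made up for illustration, and the script assumes one state per line with the connection status (for example ESTABLISHED:ESTABLISHED) as the last field.

```shell
#!/bin/sh
# Sketch: show which states disappeared between two `pfctl -ss` snapshots.
# normalize_states and lost_states are hypothetical helpers; they assume one
# state per line with the connection status as the last field.

# Print each snapshot line without its last field, sorted and unique, so a
# state that merely changed status is not reported as lost.
normalize_states() {
    awk 'NF > 1 { $NF = ""; sub(/ +$/, ""); print }' "$1" | sort -u
}

lost_states() {
    before=$(mktemp) || return 1
    after=$(mktemp) || { rm -f "$before"; return 1; }
    normalize_states "$1" > "$before"
    normalize_states "$2" > "$after"
    # comm -23 keeps only the lines that appear in the first file.
    comm -23 "$before" "$after"
    rm -f "$before" "$after"
}

# Example on the firewall:
#   pfctl -ss > /tmp/states_before.txt
#   ... trigger the CARP failover or restart the OpenVPN server ...
#   pfctl -ss > /tmp/states_after.txt
#   lost_states /tmp/states_before.txt /tmp/states_after.txt
```

Short-lived connections will also show up as lost simply because they ended in between, so the output is a hint rather than proof.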

@stephenw10 said in OpenVPN interfering with CARP Failover:

If the server is running on the WAN directly I assume it's there only for access to the firewall itself. Rules passing traffic there should be set to not sync states since they would not be valid on the other node.

We have multiple OpenVPN servers for different purposes; the ones that are directly bound to the native WAN interface are only for accessing the firewall itself.

We also have OpenVPN servers for other purposes that need to be on a carp ip. I am not worried about invalid states and that is not the issue here.

Support suggested to bind the OpenVPN servers to localhost and then NAT from the carp ip/wan interface to localhost.

This "resolves" the issue that states are cleared during failover, but creates other unwanted side effects.

-

@dkoruga @stephenw10 Thank you for the inputs. Yes, for me it behaves exactly as @dkoruga describes. Is this a known bug in pfSense? What can I do about it? When I try the workaround you suggested, @dkoruga, with the OpenVPN server on localhost and a port forward, the failover no longer breaks the states, but the OpenVPN server also does not send an exit notify to the clients, so they don't try to reconnect. How do I get the clients to reconnect? If that worked, I would be satisfied with this workaround as it ticks all the boxes.

What are the mentioned "other unwanted side effects"?

Thanks

-

@UserCo Netgate Support confirmed the issue we are seeing here is https://redmine.pfsense.org/issues/13569

First unwanted side effect is the missing exit notify during shutdown of the server as you mentioned.

As a result you have to reduce the client ping timeout to a low value to make the client reconnect after some seconds.

Even if you put this as low as 1 or 2 seconds, with exit notification the failover is way more seamless for the client.

Second is that ovpns will not see the real client ip without additional magic.

Third, there could be additional side effects if any packets are received by your inactive firewall node, since this node will have the tunnel network in its routing table.

We are considering "commenting out the line /sbin/pfctl -i $1 -Fs in /usr/local/sbin/ovpn-linkdown", which is mentioned as a workaround in the bug tracker. I cannot imagine an unwanted state on this interface in our configuration, and if there is one, I will make sure those states are not synced by our firewall rules in the first place.
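As a rough sketch of that workaround, the edit could be scripted so it is repeatable on both nodes. The helper name `comment_out_flush` is hypothetical, and the match assumes the line looks exactly like the one quoted from the bug tracker (/sbin/pfctl -i $1 -Fs); check the actual file before running anything like this.

```shell
#!/bin/sh
# Sketch: comment out the state-flush line in an ovpn-linkdown style script.
# comment_out_flush is a hypothetical helper; it assumes the line looks like
# the one quoted in this thread: /sbin/pfctl -i $1 -Fs

comment_out_flush() {
    script=$1
    # Keep one untouched copy so the change can be reverted.
    [ -e "$script.bak" ] || cp -p "$script" "$script.bak" || return 1
    tmp=$(mktemp) || return 1
    # Prefix '#' to an uncommented /sbin/pfctl ... -Fs line. Lines that are
    # already commented do not match, so running this twice is harmless.
    sed 's|^\([[:space:]]*\)\(/sbin/pfctl .* -Fs[[:space:]]*\)$|\1#\2|' \
        "$script" > "$tmp" || { rm -f "$tmp"; return 1; }
    # Write back through the existing file to preserve mode and ownership.
    cat "$tmp" > "$script"
    rm -f "$tmp"
}

# Example (run as root on each node):
#   comment_out_flush /usr/local/sbin/ovpn-linkdown
```

A system update may well restore the original script, so it is probably worth re-checking the file after each upgrade.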

@dkoruga said in OpenVPN interfering with CARP Failover:

To me it looks like this is some wrapper handling bs but i cant find the script or function causing it.

It is funny how the line "/sbin/pfctl -i $1 -Fs in /usr/local/sbin/ovpn-linkdown" was my first suspicion 10 minutes into debugging. I then scrapped it in my head because the variable is logged and, when I tried to execute the command by hand, 0 states were cleared, as described in the bug tracker conversation.

-

@dkoruga Thanks a lot. This workaround worked for me.

-

@dkoruga said in OpenVPN interfering with CARP Failover:

https://redmine.pfsense.org/issues/13569

Hmm, interesting. Since you're not running it on the CARP VIP I wouldn't expect that to apply to you. I wouldn't have expected exit notification to apply either, since the server running on the WAN IP would not shut down. Unless it loses link entirely.

The server still sees the real source IP when you forward to localhost. There's no source NAT there.

Steve

-

@dkoruga I can confirm it still happens in 2.7.2 and the /usr/local/sbin/ovpn-linkdown fix worked for me.

Normal HA with OpenVPN on the WAN CARP VIP. Commenting out the line did the trick.

-

I have a similar problem with CARP and VPN. However, my OpenVPN server uses a gateway group as its interface. On the gateway where CARP is running the VPN does not connect, while the other one, which is outside CARP, works normally. Is this the same case as above? Could commenting out the line solve this?

-

OpenVPN is part of a gateway group? On the gateway group? Unclear exactly how you have that set up.

-

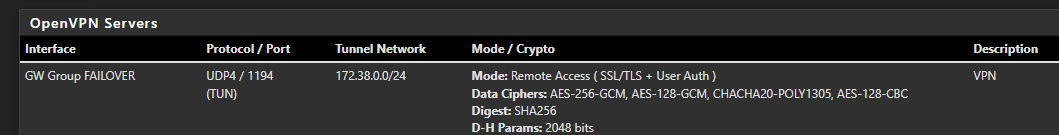

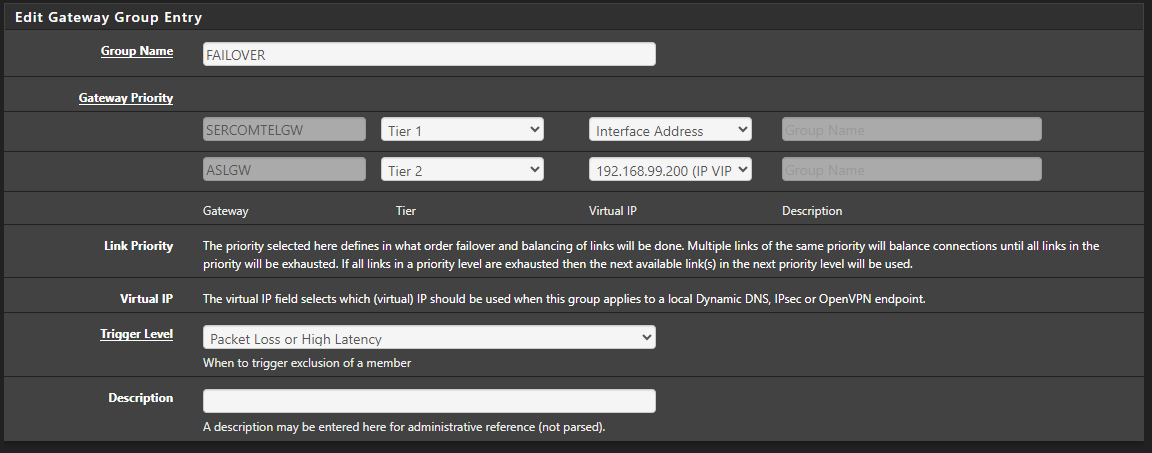

@stephenw10 Good afternoon, I have a VPN in the following configuration.

In the System - Routing part it looks like this

(I don't know whether the ASLGW entry with the CARP IP is working, as it was added during some testing.)

In the export part, I put the IP of 1 interface as default and then in Additional configuration options (still in export) I put remote ip port udp4

How it works: the VPN tries to connect to the first IP and, if it is down, it goes to the other IP. This configuration may not be the best and there are better ones, but without the CARP part it has always worked for me.

-

Hmm, OK. So what exactly are you seeing happen?