Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory

-

I'm running a Netgate 1100 with pfSense+ 24.03.

Installed packages are:

Cron

mailreport

pfBlockerNG

Service_Watchdog

Status_Traffic_Totals

System PatchesI have tried to run without service watchdog, as some here recommend, but repeated failures of unbound shut down my Internet service without service watchdog being there to restart unbound.

The typical error messages posted to the system log are:

Sep 11 05:37:49 php-cgi[87477]: servicewatchdog_cron.php: Service Watchdog detected service unbound stopped. Restarting unbound (DNS Resolver) Sep 11 05:37:49 php-cgi[87477]: servicewatchdog_cron.php: Suppressing repeat e-mail notification message. Sep 11 05:46:08 kernel: pid 33748 (unbound), jid 0, uid 59, was killed: failed to reclaim memory Sep 11 05:46:11 php-cgi[38533]: servicewatchdog_cron.php: Service Watchdog detected service unbound stopped. Restarting unbound (DNS Resolver) Sep 11 05:46:11 [router name] php-cgi[38533]: servicewatchdog_cron.php: Suppressing repeat e-mail notification message. ... Sep 11 14:21:17 php-cgi 89910 servicewatchdog_cron.php: Suppressing repeat e-mail notification message. Sep 11 14:21:17 php-cgi 89910 servicewatchdog_cron.php: Service Watchdog detected service unbound stopped. Restarting unbound (DNS Resolver) Sep 11 14:21:11 kernel pid 17260 (unbound), jid 0, uid 59, was killed: a thread waited too long to allocate a page Sep 11 14:19:00 sshguard 87226 Now monitoring attacks. Sep 11 14:19:00 sshguard 30287 Exiting on signal. Sep 11 14:18:14 php-cgi 58192 servicewatchdog_cron.php: Suppressing repeat e-mail notification message. Sep 11 14:18:14 php-cgi 58192 servicewatchdog_cron.php: Service Watchdog detected service unbound stopped. Restarting unbound (DNS Resolver) Sep 11 14:18:08 kernel pid 88715 (unbound), jid 0, uid 59, was killed: failed to reclaim memoryThe times this occurs varies. In the above example, there is/was no cron jobs or other router system software change activity going on around 05:37-46 in the morning.

I have searched this forum and read of reports of this kind of error going back several years but without finding a clear indication of the cause, fix or patch to the product.

I have also attempted to pare down any optional settings to the minimum to see if turning them off would cause the errors to cease, but they have not.

It's possible turning the unbound optional settings off reduced the frequency of the errors, but it's not a clear cause-effect relationship given the random nature of the errors.

Since this kind of problem can kill network services for an installation (absent running service watchdog) and it appears to have being going on off-and-on for years, it seems like something Netgate would want to fix in the name of stability and software quality.

The error message suggests it's a memory management, pointer, garbage collection or similar error or defect.

I'm happy to supply additional details to help run this to ground and get it resolved.

-

reason unbound could be restarting is because you are using the Kea DHCP server. There are numerous threads on why in the current release causes unbound to restart. For now switch to ISC DHCP, and stop the Kea release from restarting unbound every time a client adds a DHCP lease. if that is your caseApparently the wording here is not correct, although there are numerous posts on the forum that suggest turning off Kea and using ISC instead is the way to go in 24.03. I stand corrected.another reason you may find that unbound is restarting because of a pfBlockerNG configuration issue.

There are other reasons of course, but unbound generally runs for days, before pfBlocker tells it to reload. This will depend a lot on the lists you are loading, the frequency of running pfblocker updates (the cron timing) and some other factors.Running servicewatchdog against unbound is not a good idea. Generally what happens is that when unbound is restarting as part of the normal pfBlocker cycle, the watchdog sees it is down, then tries to restart it, but the system in the case of pfblocker restarting it is likely already doing that as part of the normal operation.

The issue will likely go away, or at least you can narrow down why unbound is restarting

it will likely be one or the other or combination of both. -

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Sep 11 14:18:08 kernel pid 88715 (unbound), jid 0, uid 59, was killed: failed to reclaim memory

This error message means your box ran out of memory completely and the OOM (out-of-memory) process killer was tasked with killing the process currently consuming the largest amount of memory. At that instant it was the

unboundprocess. The OOM killer is a self-preservation mechanism for the kernel. It kills something in user space to free up RAM needed by something deemed more critical in an attempt to not become totally unresponsive (due to not having any free RAM).You can learn more about the OOM killer here: https://klarasystems.com/articles/exploring-swap-on-freebsd/. Scroll down to the section heading Why is the kernel killing my processes? on that page.

Sep 11 14:21:11 kernel pid 17260 (unbound), jid 0, uid 59, was killed: a thread waited too long to allocate a pageThe error message above means

unbound(or something else such as PHP executing the pfBlockerNG code) has likely consumed all the available RAM and stalled out waiting for the system to give it some more.How can this happen? For starters, the SG-1100 is very RAM limited. It has only 1GB of RAM. It also does not have the speediest of CPUs. Secondly, if you have large DNSBL lists or other IP lists feeding into pfBlockerNG, that can eat up memory and lead to the OOM killer taking action. Another side effect of very large IP lists is it takes

unboundquite a long time to load and process them. During that timeunboundwill not be answering DNS queries from clients on your network and for all and intents and purposes your Internet will seem to be "down".Large IP lists and the SG-1100 are not good friends because the SG-1100 can quickly run out of RAM. This situation is exacerbated if you have enabled ZFS on the 1100 hardware as ZFS consumes a lot of RAM for the ARC (cache).

To troubleshoot this, begin by stopping (or even better, removing) the pfBlockerNG package for a test. If you remove it, it will remember your current configuration and everything will come back when you reinstall the package.

Restore the

unboundsettings to the out-of-the-box defaults for pfSense.Remove

unboundfrom being monitored by the Service Watchdog package. That is only going to make a bad situation worse in a RAM-limited situation. If you want large IP lists with pfBlockerNG, then you should obtain more capable hardware with at least 4 GB of RAM. Or revisit your choice of block lists and trim those down to something much smaller (to conserve RAM usage). -

@jrey said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

reason unbound could be restarting is because you are using the Kea DHCP server. There are numerous threads on why in the current release causes unbound to restart. For now switch to ISC DHCP, and stop the Kea release from restarting unbound every time a client adds a DHCP lease. if that is your case

This it not accurate. We have only introduced Kea integration with Unbound in the upcoming 24.08 release.

-

Thanks for the clarity regarding Kea. Seems I may have read this (quoted below, and many other threads) incorrectly. That is, those suggesting that the issue occurs with Kea and DHCP client registration, and that switching to ICP DHCP resolves their issues. Personally I wouldn't be able to verify either way as I run all my DHCP services and DNS client registration on another platform, not on the Netgate. I should likely not have commented specifically on KEA on this platform in the first place. Appreciate your time providing the correct information.

@Gertjan said in Netgate 2100 blocking? Spotify issue:

If presume you use KEA as the DHCP server (is that so ?), so it's not the dreaded dhcp_leases process that restarts unbound on every incoming DHCP lease or lease renew.

@bmeeks said in Netgate 2100 blocking? Spotify issue:

Is perhaps DHCP client registration in DNS enabled on the firewall? If so, that can result in unbound restarts each time a DHCP client obtains or renews its lease. If enabled, that can contribute to a bunch of unbound DNS Resolver restarts. Combine that with large DNSBL lists and you could have a perfect storm essentially killing DNS resolution on the network for large intervals of time.

@SteveITS said in Netgate 2100 blocking? Spotify issue:

FWIW to all, this was/will be finally changed, in Kea, in 24.08.

https://www.netgate.com/blog/improvements-to-kea-dhcp@stephenw10 said in Netgate 2100 blocking? Spotify issue:

Yup, going to be so much better!

-

Thank you to everyone who has taken the time to respond. Here is some detail:

The crash hasn't happened in a couple of days. This is typical. It's seems to occur on a more or less random basis.

^This suggests that servicewatchdog isn't typically jumping on the pfBlocker initiated restart. I would, I suspect, see this in the logs if it happened at the designated pfBlocker update time, which I do not.

Kea is my DHCP server.

DHCP client registration in DNS is not enabled. I don't need this function.

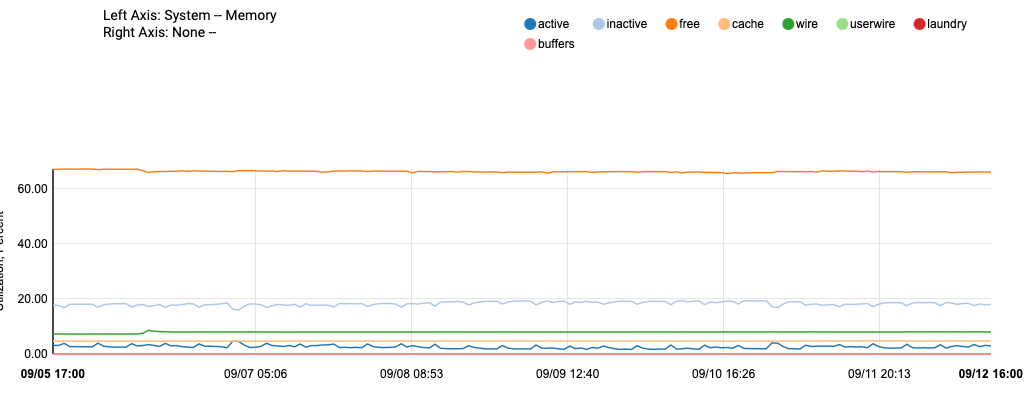

Memory usage is at about 65%, which is typical. Seeing higher is uncommon and I have never seen full memory usage. How can I find any other evidence of what free memory was at the times these process killings occurred to test the hypothesis that memory is filling up?

pfBlocker has been a worthwhile part of my security profile. While it may be a contributing factor, I would like to find supporting evidence of this in logs rather than experimenting with shutting it off. I don't have vast numbers of block lists.

I set incoming and outgoing TCP buffers to 20 from the default of 10.

I set the Message Cache to 10mb from 4mb.

The only Advanced check boxes I have in the DNS Resolver (unbound) are Prefetch Support and Keep Probing.

Questions:

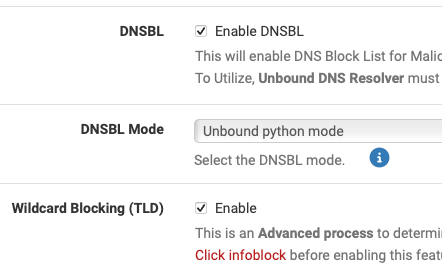

Could using the Python module be a factor?

Could Prefetch Support and/or Keep Probing be a factor?

Plan:

I'll try setting TCP buffers and message caches back down to the defaults and see how that affects it.

I'm looking forward to the improved Kea and Unbound integration.

-

open the pfblockerng.log

Firewall -> pfBlockerNG -> Logs. (log type = Log Files, Log/file selection: pfblockerng.log)

scroll near the end of the file -- look for the section

pfSense Table Statslet us know what values you see below that heading.

there should be two lines

table-entries hard limit (with a value)

Table Usage Count (with a value)but your recent post suggests you don't think the issue it is related to pfBlockerNG based on timestamps..

-

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

^This suggests that servicewatchdog isn't typically jumping on the pfBlocker initiated restart. I would, I suspect, see this in the logs if it happened at the designated pfBlocker update time, which I do not.

Service Watchdog is a troubleshooting/workaround package. It was not designed to be a regular production feature. It simply periodically checks if the specified daemon is running, and restarts it immediately if it's not. With daemons that periodically either restart themselves, or are restarted by third-party packages, ignorance of such an automatic restart by Service Watchdog can result in it gumming up the works. That's why we suggest not running that package as a normal production feature.

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Memory usage is at about 65%, which is typical. Seeing higher is uncommon and I have never seen full memory usage. How can I find any other evidence of what free memory was at the times these process killings occurred to test the hypothesis that memory is filling up?

This will be a dynamic failure. That means you would need to be constantly watching the memory utilization on the box to notice. You can scan the logs for any messages such as "out of swap space" or similar low-memory log messages.

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Could using the Python module be a factor?

Very possible. There were some Python memory leak bugs in older versions of

unbound. Perhaps not all the bugs have been slain ???But I believe you need the Python Module enabled to fully utilize the DNSBL function (but I don't use that feature and thus am not an authority in this area).

-

@bmeeks said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Perhaps not all the bugs have been slain ???

seems he is running 24.03 which should be running

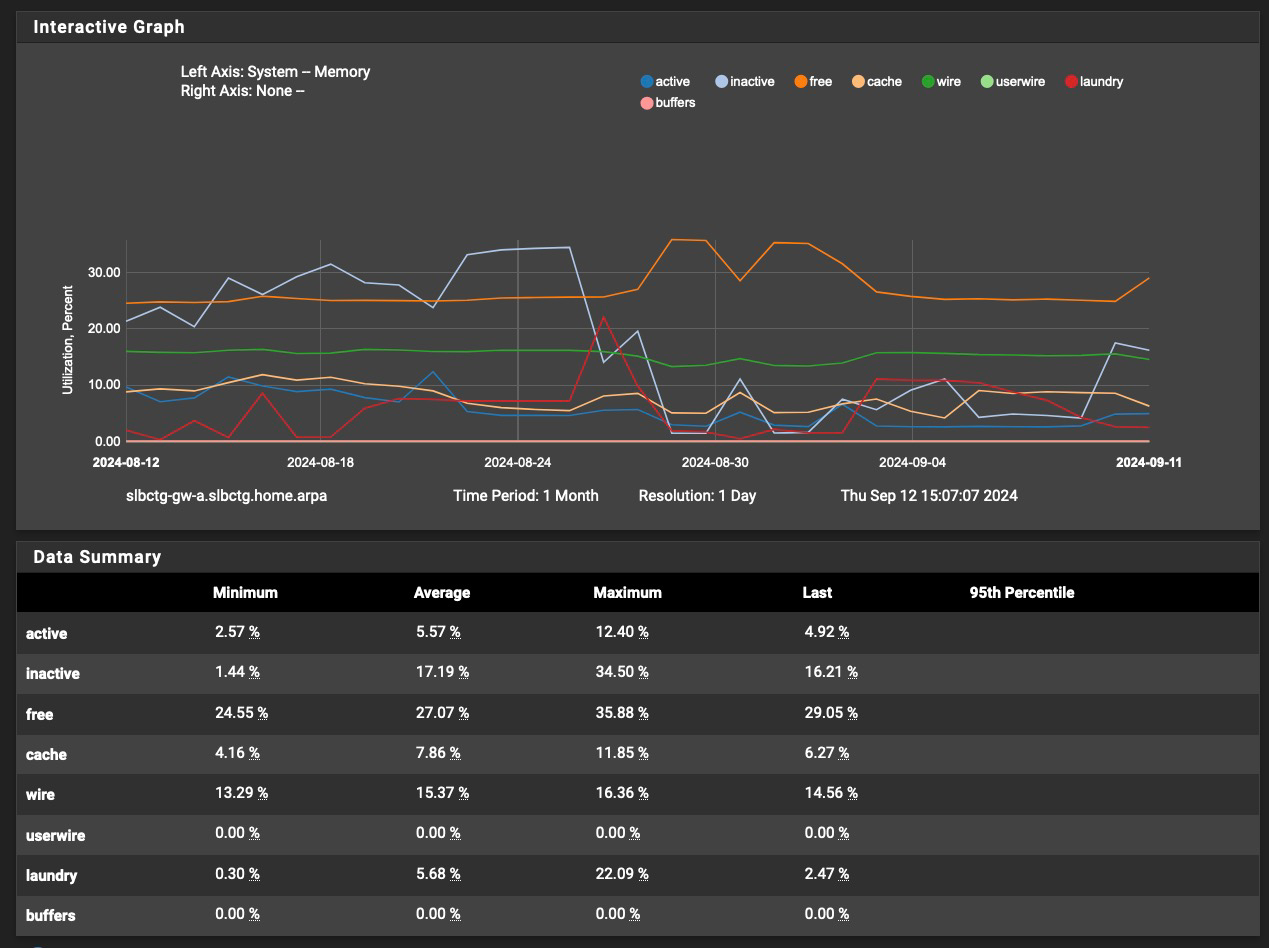

start of service (unbound 1.19.3). <- at least on my 2100Zero memory leaks here, system will stay pretty flat lined with regards to memory use for weeks. (about the only time it really fluctuates is when I reboot - then it drops to zero - LOL) so almost never..

During this timeframe (past week shown) unbound has restarted exactly one time, due to one of the lists being updated for DNSBL the lists I use refresh every 3 / 4 days. That's when unbound will restart, but It is pretty stable. Other lists may / may not update more often.

Some settings to confirm.

Might be a fair question, even though @Mission-Ghost doesn't think it is related to pfblocker based on time stamps of events, but that we also ask for the version of pfblocker that is installed on the system?

I still also say - turn the service watchdog off.

-

@jrey said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

open the pfblockerng.log

Firewall -> pfBlockerNG -> Logs. (log type = Log Files, Log/file selection: pfblockerng.log)

scroll near the end of the file -- look for the section

pfSense Table Statslet us know what values you see below that heading.

there should be two lines

table-entries hard limit (with a value)

Table Usage Count (with a value)pfSense Table Stats

table-entries hard limit 400000

Table Usage Count 17743but your recent post suggests you don't think the issue it is related to pfBlockerNG based on timestamps..

Correct. pfBlocker updates about 3am, last crash I reported here was in the 5am and 2pm hours.

-

seems to be a recurring issue for you going back to 23.05 and 23.09?

So has the problem ever gone away?

https://forum.netgate.com/topic/184130/23-09-unbound-killed-failing-to-reclaim-memory

-

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

table-entries hard limit 400000

Table Usage Count 17743those don't look like a problem -- pretty small list

-

@bmeeks said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

^This suggests that servicewatchdog isn't typically jumping on the pfBlocker initiated restart. I would, I suspect, see this in the logs if it happened at the designated pfBlocker update time, which I do not.

Service Watchdog is a troubleshooting/workaround package. It was not designed to be a regular production feature. It simply periodically checks if the specified daemon is running, and restarts it immediately if it's not. With daemons that periodically either restart themselves, or are restarted by third-party packages, ignorance of such an automatic restart by Service Watchdog can result in it gumming up the works. That's why we suggest not running that package as a normal production feature.

I understand. But this instability causes random downtime that doesn't correct itself, so the tradeoff is worth it. Until unbound is stable, I need something to keep the dns and by extension the network running so I'm not always running to restart unbound and fielding complaints.

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Memory usage is at about 65%, which is typical. Seeing higher is uncommon and I have never seen full memory usage. How can I find any other evidence of what free memory was at the times these process killings occurred to test the hypothesis that memory is filling up?

This will be a dynamic failure. That means you would need to be constantly watching the memory utilization on the box to notice. You can scan the logs for any messages such as "out of swap space" or similar low-memory log messages.

In the last 10,000 system/general log messages, which takes me back to mid-August, there are only the unbound messages previously reported here and the following which only appeared on August 31 in the middle of many unbound failed to reclaim memory error messages before and after:

solisten_clone: pcb 0xffffa000297dc000: New socket allocation failure: limit reached or out of memoryI'll highlight again the unbound error is about reclaiming memory, not failing to allocate or running out of memory.

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Could using the Python module be a factor?

Very possible. There were some Python memory leak bugs in older versions of

unbound. Perhaps not all the bugs have been slain ???But I believe you need the Python Module enabled to fully utilize the DNSBL function (but I don't use that feature and thus am not an authority in this area).

I believe that's correct; I need Python Module to run pfBlocker.

-

@jrey said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

@bmeeks said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

Perhaps not all the bugs have been slain ???

seems he is running 24.03 which should be running

start of service (unbound 1.19.3). <- at least on my 2100Might be a fair question, even though @Mission-Ghost doesn't think it is related to pfblocker based on time stamps of events, but that we also ask for the version of pfblocker that is installed on the system?

Here's my memory chart for the last month; uptime now is 28 days:

I still also say - turn the service watchdog off.

I'd love to...once this stops killing production at random times and requiring manual intervention.

-

@jrey said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

seems to be a recurring issue for you going back to 23.05 and 23.09?

So has the problem ever gone away?

https://forum.netgate.com/topic/184130/23-09-unbound-killed-failing-to-reclaim-memory

No. It ebbs and flows. It seemed better enough for a while that I turned off service watchdog and then it recurred killing the network, so I turned service watchdog back on. So I'm trying again to figure it out with this forum's generous help.

So, after my last post I have changed in unbound the following:

- memory cache size down from 10 to 4mb

- in and out tcp buffers down from 20 to 10

- EDNS buffer to default from automatic

We'll see if this has a material effect on the errors posting to the log and unbound being killed for failing to reclaim memory.

-

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

We'll see if this has a material effect on the errors posting to the log and unbound being killed for failing to reclaim memory.

Do not get confused by the unfortunate poor wording of the kernel's error message. The OOM killer is a kernel process that is launched to unconditionally terminate the largest consumer of user space memory in order to prevent the system from becoming unstable. It's the kernel saying "

unboundhas continued to consume more and more memory and has not returned any back to the system pool". The fault here is withunboundconsuming memory. It isunboundconsuming memory and never releasing it back to the system. -

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

The only Advanced check boxes I have in the DNS Resolver (unbound) are Prefetch Support and Keep Probing.

just for fun try to Turn off Prefetch support and restart unbound

-

@jrey said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

The only Advanced check boxes I have in the DNS Resolver (unbound) are Prefetch Support and Keep Probing.

just for fun try to Turn off Prefetch support and restart unbound

This was my first experiment. Unfortunately it was ineffective, so I turned it back on.

So far no failures following the changes I mentioned a couple of posts ago. I may return them to original values one at a time until a failure is noted so I can isolate the issue.

Someday a project to substantially improve the log messages to aid in end-user understanding of what is happening and proper recovery procedures would add tremendous value to the product.

-

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

This was my first experiment.

Ah sorry, I missed that. How long did it run then ?

I just mentioned it because it was mentioned in an earlier bug on NLnet Labs

(that should have been fixed by 1.19.3 (the version I am running, as should you on 24.03).Do you keep a log of your setting and the runtime you get from each test before it fails, it may or may not prove helpful in trying to find the answer if you don't.

although it is manifesting as a unbound memory error, I wouldn't rule out anything else reducing the available memory over time or running past something at a given point in time. You have established (by lack of matching event times) that when it occurs not being consistent with say pfBlockerNG, what about say mailreport?. I've never used it but if it is doing something that requires lookups when generating the reports,(again I don't know that it does) on a system with limited memory it might stretch to the point the unbound isn't releasing fast enough in a limited pool and it gets wacked because that is what the kernel sees as the "bad guy" at that time.

at a glance mailreport doesn't really say much about what it is reporting on (or what you are reporting on) saying only that:

"Allows you to setup periodic e-mail reports containing command output, and log file contents"huge spikes in memory likely wouldn't show on a 28 day memory graph with a 1 day resolution in the sample.

when it happens try a narrow the graph with less time and more frequent resolution and see what is going on, maybe something like an hour/with 1 minute resolution.

OR

Since the data is still there, you could use the custom feature of the graph and specify the time range and graph resolution from a previous time it gave up. again not to small time slice, but lean toward more time leading up to the logged event.

Again may or may not be helpful or give some clues.really just trying to see why unbound is the unwilling victim getting killed so randomly. It should run for days and days, weeks and weeks without this and only restart when something like pfBlocker tell it to restart (those restart events will be listed in the pfblockerng.log. as well as the resolver log On most system that restart is usually pretty quick and you wouldn't normally notice. (but one would also assume at that point in time unbound would flush everything during the restart. The service is stopping and starting. So any memory it had would/should be cleared at that time.

It is at that point in time (when unbound is restarting) that if things are a little sluggish the watchdog may see it down and try to start another or worse multiple copies. things will usually go really bad, really fast. I understand that you believe the watchdog is in your best interest, but it is really not, in this case. Still up to you how to proceed, of course.

Edit: if you looking for the restarts in pfblockerng.log they will look like this (but may not be there every time pfblocker runs as it only restarts when it has to (ie a list has changed) )

Saving DNSBL statistics... completed Reloading Unbound Resolver (DNSBL python) Stopping Unbound Resolver. Unbound stopped in 2 sec. Additional mounts (DNSBL python): No changes required. Starting Unbound Resolver... completed [ 09/13/24 14:15:09 ] -

Thank you for your thoughtful reply. It's interesting and gave me some things to think about.

@jrey said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

@Mission-Ghost said in Repeating problem: (unbound), jid 0, uid 59, was killed: failed to reclaim memory:

This was my first experiment.

Ah sorry, I missed that. How long did it run then ?

Just a day or two before the next event occurred.

Do you keep a log of your setting and the runtime you get from each test before it fails, it may or may not prove helpful in trying to find the answer if you don't.

No. I imagine it might be helpful.

although it is manifesting as a unbound memory error, I wouldn't rule out anything else reducing the available memory over time or running past something at a given point in time.

An interesting idea. Without logs or symptoms pointing specifically to an item it seems it would be a bit of a fishing expedition.

at a glance mailreport doesn't really say much about what it is reporting on (or what you are reporting on) saying only that:

"Allows you to setup periodic e-mail reports containing command output, and log file contents"I use mailreport to email the last several lines of some logs to myself once a day to keep tabs on possible problems without having to sign in every day and review logs in detail. It's how I flagged this problem.

really just trying to see why unbound is the unwilling victim getting killed so randomly. It should run for days and days, weeks and weeks without this and only restart when something like pfBlocker tell it to restart

Indeed, I have not had a failure event since I lowered the memory cache size, in/out buffers and edns buffer three days ago.

So far the evidence supports the hypothesis that while these higher settings didn't precipitate an unbound failure right away, it did allow it to grow at a later time outside of the resources available on the 1100. Clearly the 1100 can't support more than the default settings in at least a couple of areas. I previously had an update fail because I'd used non-default settings in another part of the system. It was tricky to diagnose.

It would be helpful to have some more discussion in the manuals that recommend against adjusting the defaults upward on an 1100 due to the limitations.