pfSense not enabling port

-

@georgelza said in pfSense not enabling port:

explain.

I mean just link the two SFP ports on the Topton together to see whether they will link without flapping. You obviously won't be able to pass any traffic, but if both ports show a stable link in that situation, it's probably some low-level issue between the ix ports and the switch.

Though, thinking more, you could set that up in Proxmox so that you can pass traffic between them, by putting each port on a separate bridge.
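A minimal sketch of that two-bridge loop test, assuming the Topton's SFP+ ports are enp4s0f0 and enp4s0f1 (the names that show up in the Proxmox ip a output later in this thread) and using hypothetical bridge names vmbr91/vmbr92. The function only prints ifupdown2 stanzas of the kind Proxmox keeps in /etc/network/interfaces; attach one test VM to each bridge, give both VMs addresses in a spare subnet, and ping or run iperf3 between them so the traffic has to cross the DAC/fibre.

```shell
#!/bin/sh
# Sketch only: print one ifupdown2 bridge stanza per physical port so that
# each SFP+ port sits on its own bridge. Bridge and port names are assumptions.
loop_test_bridges() {
  # Arguments come in pairs: <bridge-name> <physical-port> ...
  while [ "$#" -ge 2 ]; do
    printf 'auto %s\n' "$1"
    printf 'iface %s inet manual\n' "$1"
    printf '    bridge-ports %s\n' "$2"
    printf '    bridge-stp off\n'
    printf '    bridge-fd 0\n\n'
    shift 2
  done
}

# Example: review the output, append it to /etc/network/interfaces,
# then apply with "ifreload -a" (ifupdown2, as used by Proxmox VE).
#   loop_test_bridges vmbr91 enp4s0f0 vmbr92 enp4s0f1
```

Keeping the two ports on separate bridges matters: on one bridge the host would simply switch frames between them instead of sending test traffic over the cable.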

-

@stephenw10 will try tomorrow.

Received a package about 30 min ago; haven't opened it yet, but I think it could be the DAC cable and the second transceiver.

G

-

Ran the following on the pfSense host:

    pciconf -lcv ix0
    ix0@pci0:4:0:0: class=0x020000 rev=0x01 hdr=0x00 vendor=0x8086 device=0x10fb subvendor=0xffff subdevice=0xffff
        vendor = 'Intel Corporation'
        device = '82599ES 10-Gigabit SFI/SFP+ Network Connection'
        class = network
        subclass = ethernet
        cap 01[40] = powerspec 3 supports D0 D3 current D0
        cap 05[50] = MSI supports 1 message, 64 bit, vector masks
        cap 11[70] = MSI-X supports 64 messages, enabled
                     Table in map 0x20[0x0], PBA in map 0x20[0x2000]
        cap 10[a0] = PCI-Express 2 endpoint max data 256(512) FLR RO NS
                     max read 512
                     link x4(x8) speed 5.0(5.0) ASPM disabled(L0s)
        cap 03[e0] = VPD
        ecap 0001[100] = AER 1 0 fatal 0 non-fatal 1 corrected
        ecap 0003[140] = Serial 1 a8b8e0ffff05ef51
        ecap 000e[150] = ARI 1
        ecap 0010[160] = SR-IOV 1 IOV disabled, Memory Space disabled, ARI disabled
                         0 VFs configured out of 64 supported
                         First VF RID Offset 0x0180, VF RID Stride 0x0002
                         VF Device ID 0x10ed
                         Page Sizes: 4096 (enabled), 8192, 65536, 262144, 1048576, 4194304

-
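A quick worked check on the "link x4(x8) speed 5.0(5.0)" line in the pciconf output above: the 82599ES has negotiated four of its eight possible PCIe 2.0 lanes. PCIe 2.0 runs at 5 GT/s per lane with 8b/10b encoding, so, before any packet/protocol overhead:

$$
\begin{aligned}
B_{\text{lane}} &= 5\,\text{GT/s} \times \tfrac{8}{10} = 4\,\text{Gbit/s per direction} \\
B_{\times 4} &= 4 \times 4\,\text{Gbit/s} = 16\,\text{Gbit/s per direction} \\
B_{\text{both ports}} &= 2 \times 10\,\text{Gbit/s} = 20\,\text{Gbit/s per direction}
\end{aligned}
$$

On this reading, one 10G port has headroom, but both SFP+ ports saturated at the same time could be limited by the x4 link (real PCIe throughput is somewhat lower still once TLP overhead is counted). This is arithmetic on the reported figures, not a measurement.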

Sweeeeeet... so yes, that delivery was 1 x DAC and 1 x SFP+ transceiver from another brand.

Plugged the DAC in between the switch and pfSense SFP+ port 0.

Plugged the new transceiver into pmox SFP+ port 0.

Both started flashing...

Went onto the UniFi Network controller: both SFP+ ports immediately changed colour to "hey, we've got something we recognise", vs grey or red. In the pmox console:

dmesg =>

    [78378.314597] ixgbe 0000:04:00.0 enp4s0f0: detected SFP+: 5
    [78433.681346] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
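To confirm the new link stays up rather than flapping, one option is to count ixgbe's link up/down messages for the port over time. A minimal sketch, assuming the message text matches the dmesg lines above ("NIC Link is Up" / "NIC Link is Down"). For the module itself, ethtool -m enp4s0f0 dumps the transceiver's vendor and part number on Linux, and on pfSense/FreeBSD ifconfig -v ix0 shows similar module information.

```shell
#!/bin/sh
# Sketch: summarise ixgbe link-state messages for one interface.
# Reads dmesg-style text on stdin; the message strings are taken from the
# log lines above and may differ for other drivers.
link_events() {
  awk -v ifc="$1:" '
    index($0, ifc " NIC Link is Up")   { up++ }
    index($0, ifc " NIC Link is Down") { down++ }
    END { printf "up=%d down=%d\n", up, down }'
}

# Example (Proxmox host):
#   dmesg | link_events enp4s0f0
```

A "down" count that keeps rising between runs would suggest the link is still flapping.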

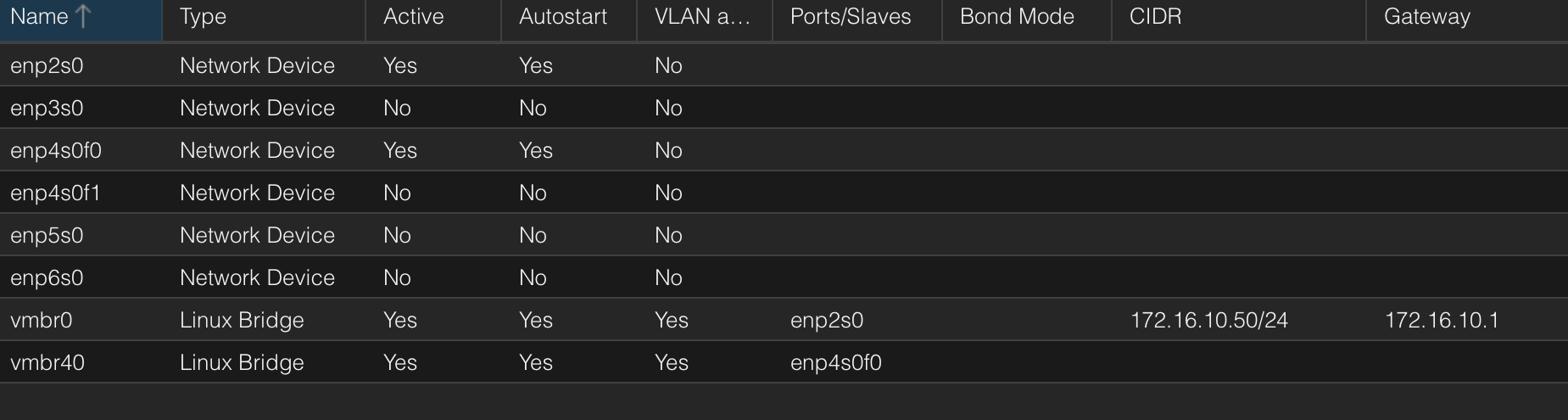

    ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host noprefixroute
           valid_lft forever preferred_lft forever
    2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
        link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
    3: enp3s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether a8:b8:e0:02:a3:72 brd ff:ff:ff:ff:ff:ff
    4: enp5s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether a8:b8:e0:02:a3:73 brd ff:ff:ff:ff:ff:ff
    5: enp6s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether a8:b8:e0:02:a3:74 brd ff:ff:ff:ff:ff:ff
    8: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
        inet 172.16.10.50/24 scope global vmbr0
           valid_lft forever preferred_lft forever
        inet6 fe80::aab8:e0ff:fe02:a371/64 scope link
           valid_lft forever preferred_lft forever
    9: vmbr40: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether a8:b8:e0:05:f0:91 brd ff:ff:ff:ff:ff:ff
        inet6 fe80::aab8:e0ff:fe05:f091/64 scope link
           valid_lft forever preferred_lft forever
    10: tap100i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i0 state UNKNOWN group default qlen 1000
        link/ether c6:2d:5a:7a:41:d7 brd ff:ff:ff:ff:ff:ff
    11: fwbr100i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether da:0c:52:e1:39:89 brd ff:ff:ff:ff:ff:ff
    12: fwpr100p0@fwln100i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
        link/ether 76:c4:56:d3:6a:93 brd ff:ff:ff:ff:ff:ff
    13: fwln100i0@fwpr100p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i0 state UP group default qlen 1000
        link/ether da:0c:52:e1:39:89 brd ff:ff:ff:ff:ff:ff
    14: tap100i1: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i1 state UNKNOWN group default qlen 1000
        link/ether 76:c9:86:22:cc:30 brd ff:ff:ff:ff:ff:ff
    15: fwbr100i1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether 52:9a:1c:4d:76:a6 brd ff:ff:ff:ff:ff:ff
    16: fwpr100p1@fwln100i1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
        link/ether 26:2d:af:5a:9a:b4 brd ff:ff:ff:ff:ff:ff
    17: fwln100i1@fwpr100p1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i1 state UP group default qlen 1000
        link/ether 52:9a:1c:4d:76:a6 brd ff:ff:ff:ff:ff:ff
    18: tap100i2: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i2 state UNKNOWN group default qlen 1000
        link/ether c2:9f:1f:4e:c6:2e brd ff:ff:ff:ff:ff:ff
    19: fwbr100i2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
        link/ether 7a:a8:67:a9:29:14 brd ff:ff:ff:ff:ff:ff
    20: fwpr100p2@fwln100i2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr40 state UP group default qlen 1000
        link/ether 72:43:cc:4b:b3:40 brd ff:ff:ff:ff:ff:ff
    21: fwln100i2@fwpr100p2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i2 state UP group default qlen 1000
        link/ether 7a:a8:67:a9:29:14 brd ff:ff:ff:ff:ff:ff
    39: enp4s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr40 state UP group default qlen 1000
        link/ether a8:b8:e0:05:f0:91 brd ff:ff:ff:ff:ff:ff
    40: enp4s0f1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
        link/ether a8:b8:e0:05:f0:92 brd ff:ff:ff:ff:ff:ff

Now to reconfigure vlan40 from igc0.40 onto ix0.

    ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: ens18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether bc:24:11:c0:e8:1e brd ff:ff:ff:ff:ff:ff
        inet 172.16.10.51/24 metric 100 brd 172.16.10.255 scope global dynamic ens18
           valid_lft 4206sec preferred_lft 4206sec
        inet6 fe80::be24:11ff:fec0:e81e/64 scope link
           valid_lft forever preferred_lft forever
    3: ens19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether bc:24:11:81:2c:cb brd ff:ff:ff:ff:ff:ff
        inet6 fe80::be24:11ff:fe81:2ccb/64 scope link
           valid_lft forever preferred_lft forever
    4: ens20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
        link/ether bc:24:11:1e:20:ac brd ff:ff:ff:ff:ff:ff
        inet6 fe80::be24:11ff:fe1e:20ac/64 scope link
           valid_lft forever preferred_lft forever
    5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:cb:d9:fb:26 brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever

Happiness, making progress in the right direction...

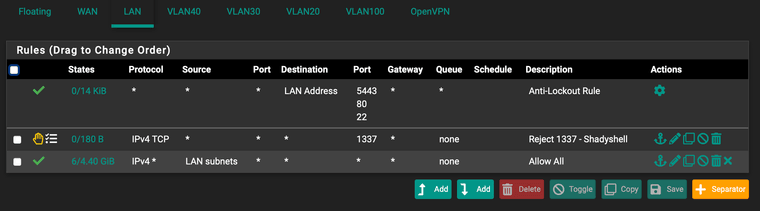

I configured my pfSense console to be accessible via a second address, 172.16.40.1. Via the primary 172.16.10.1 interface, I reconfigured that to be serviced/routed via ix0, then connected to the console via the new second address. It worked, so I have a back door ;)

Connected via the back door and reconfigured vlan0, vlan20 and vlan30 to also be routed via ix0. Hit refresh in my browser, which accesses the console via 172.16.10.1, and did the same for 172.16.40.1: still accessible.

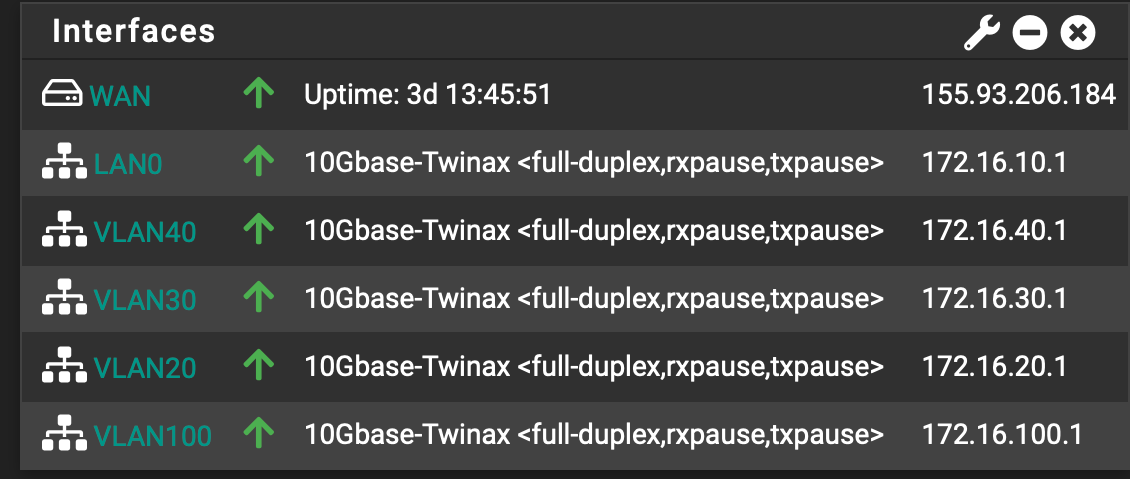

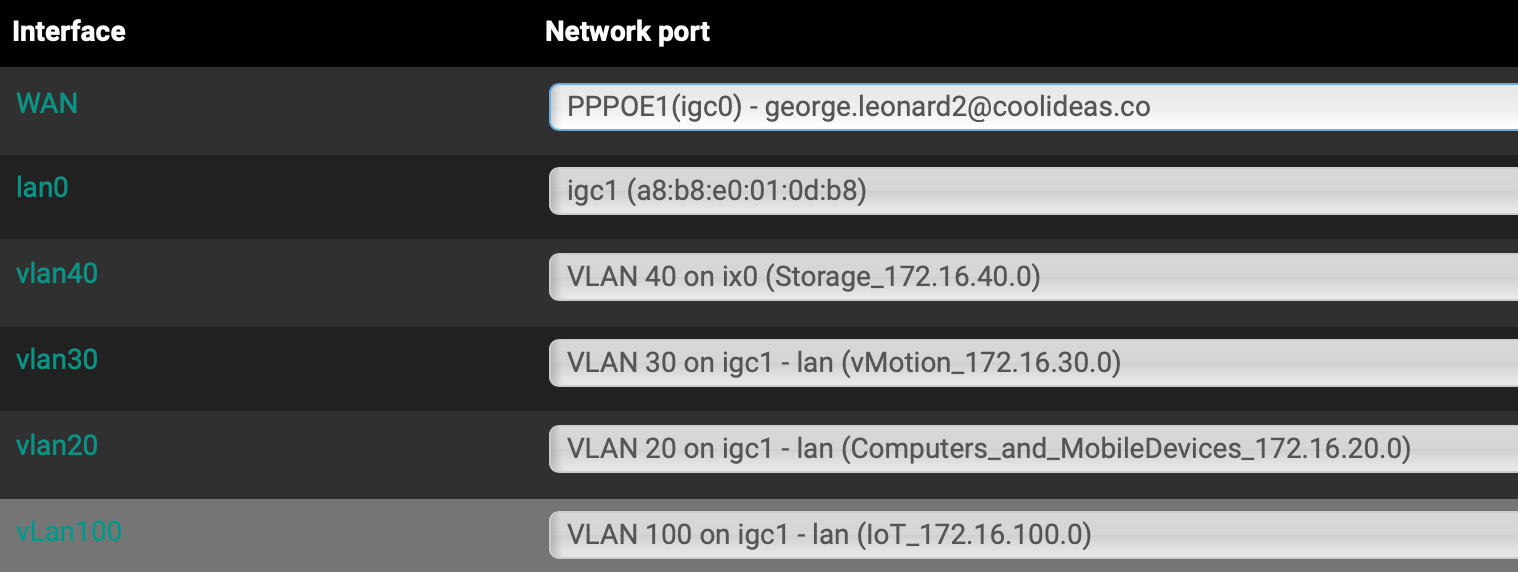

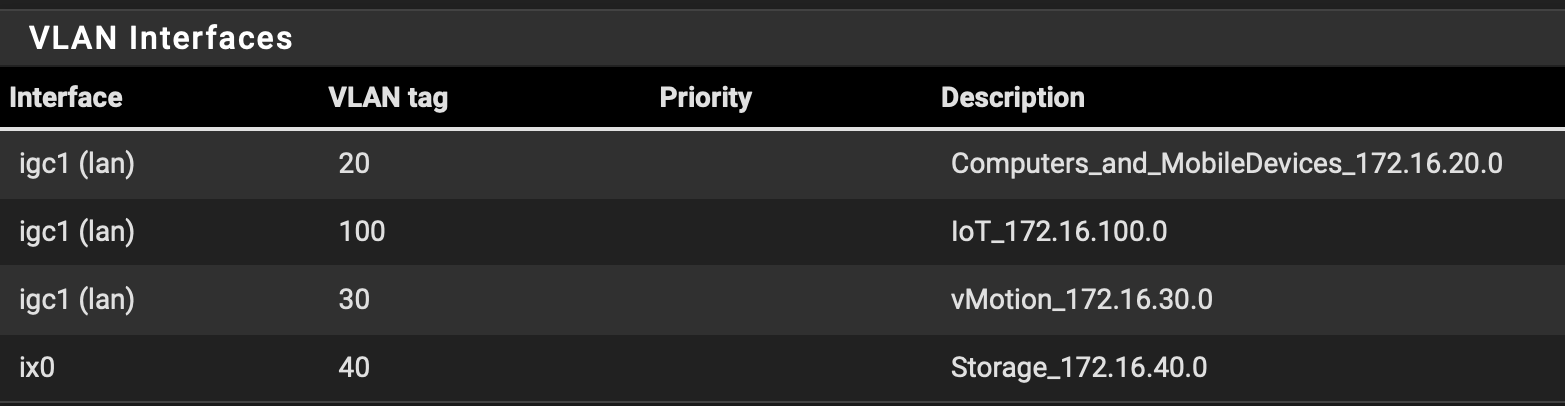

At this point, the picture below reflects the pfSense config.

OK, this seemed good, but once I unplugged the 2.5GbE igc1 Cat6 cable between ProMax port 24 and pfSense, I found I could not access anything else that sat on the 172.16.10.0/24 network.

At this point I'm wondering... what am I trying to get working, and what's the value of it... does it make sense...

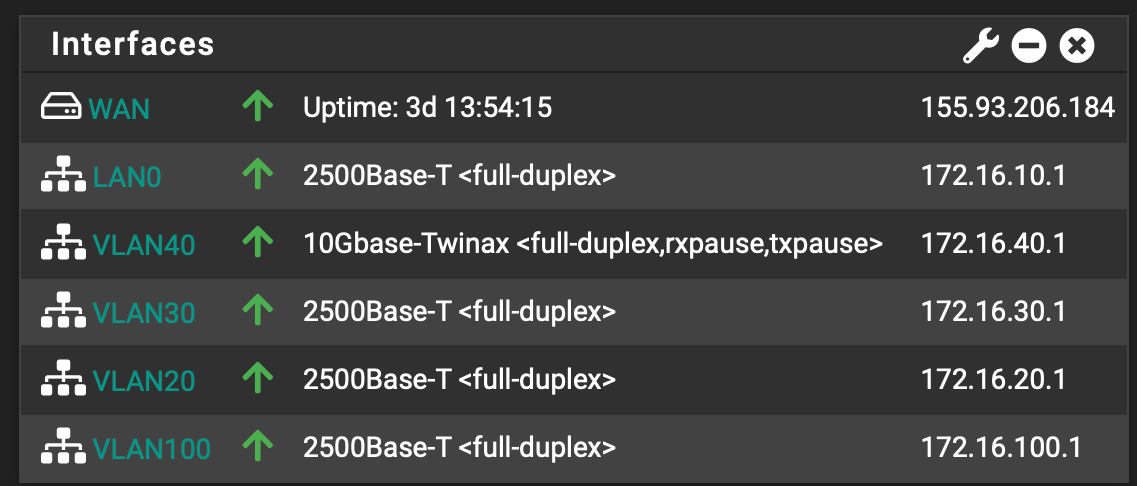

To fix the above, I reconfigured to this:

Maybe leave vlan0, vlan20, vlan30 and vlan100 on igc1, as all the devices that connect to those are themselves either Wi-Fi, 1GbE or 2.5GbE based.

Then leave vlan40 on ix0, as the devices that will sit on it are 10GbE based.

I've got an aggregation switch on the way anyhow, so SFP+ port 2 on pfSense will go into the aggregation switch, and the pmox and TrueNAS hosts will go into that.

Some comments, views?

Note: on ubuntu-01 I still don't have an IP assigned to the vlan30 and vlan40 interfaces... so that's, I guess, the last thing to be fixed...
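One way to get addresses onto those extra interfaces persistently on Ubuntu is a netplan file. A minimal sketch, assuming ens19 is the vlan30 NIC and ens20 the vlan40 NIC (inferred from the VM's ip a output above, so check before using) and that pfSense DHCP reservations hand out the addresses; the file name 60-extra-nics.yaml is hypothetical. The use-routes: false overrides stop the extra leases from adding competing default routes, so the default route stays on ens18.

```shell
#!/bin/sh
# Sketch: print a netplan config enabling DHCPv4 on the two extra NICs.
# Interface names are assumptions; confirm them with "ip a" inside the VM.
netplan_extra_nics() {
  cat <<'EOF'
network:
  version: 2
  ethernets:
    ens19:
      dhcp4: true
      dhcp4-overrides:
        use-routes: false   # keep the default route on ens18
    ens20:
      dhcp4: true
      dhcp4-overrides:
        use-routes: false
EOF
}

# Example (inside ubuntu-01):
#   netplan_extra_nics | sudo tee /etc/netplan/60-extra-nics.yaml
#   sudo chmod 600 /etc/netplan/60-extra-nics.yaml
#   sudo netplan try    # rolls back automatically unless confirmed
```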

G

-

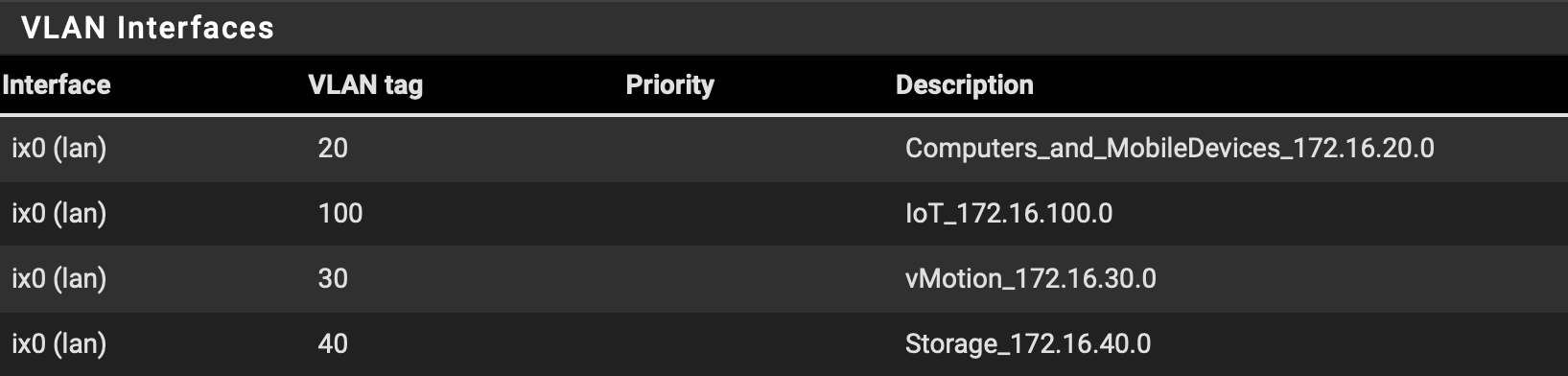

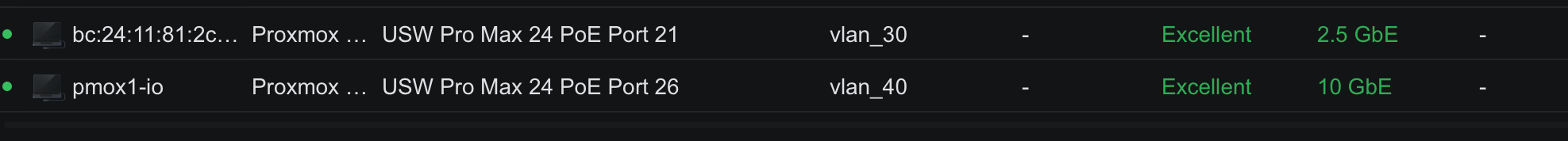

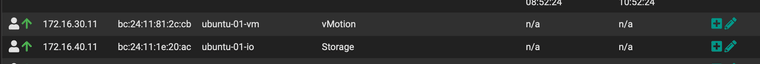

for reference, here is my pmox datacenter

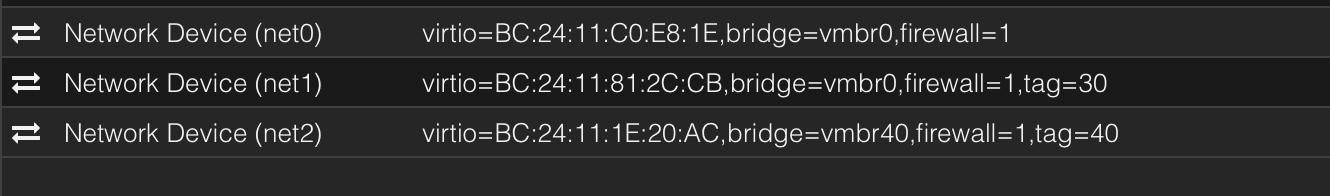

and pmox1 server network configuration.

interesting enough, without telling my unifi environment, this is what it's showing.

Note: the MAC addresses assigned to net1 and net2 have been DHCP-reserved in pfSense on the respective VLANs as 172.16.30.11 and 172.16.40.11.
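For anyone repeating this, the MAC addresses to reserve can be read from the VM config on the Proxmox host instead of from inside the guest. A small sketch, assuming qm config lines of the form net1: virtio=BC:24:11:81:2C:CB,bridge=...; the VM ID 101 in the example is hypothetical.

```shell
#!/bin/sh
# Sketch: list "<netX> <MAC>" pairs from "qm config <vmid>" output on stdin.
vm_macs() {
  awk '/^net[0-9]+:/ {
    split($2, f, /[=,]/)   # f[1] = NIC model, f[2] = MAC address
    sub(/:$/, "", $1)      # "net1:" -> "net1"
    print $1, f[2]
  }'
}

# Example (Proxmox host):
#   qm config 101 | vm_macs
```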

Interesting takeaway: the UniFi switch has no problem with the Dell/EMC SFP+ transceivers (dell emc ftlx8574d3bnl-fc); it's on the Topton side where they are not liked. I have found a thread on lawrenceIT that points to the following transceivers also working:

10Gtek 10GBase-SR SFP+ LC Transceiver, 10G 850nm Multimode SFP Module

dell emc ftlx8574d3bnl-fc

-

@georgelza said in pfSense not enabling port:

OK, this seemed good, but once I unplugged the 2.5GbE igc1 Cat6 cable between ProMax port 24 and pfSense, I found I could not access anything else that sat on the 172.16.10.0/24 network.

Unclear exactly what you are testing to and from in that scenario. Just disconnecting the cable won't start routing via some other subnet if it still has an IP address directly in the subnet.
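To see this in practice, you can ask each kernel which interface it would use for a given destination: route -n get <address> on pfSense (FreeBSD), or ip route get <address> on Linux. A minimal sketch of two parsers for that output, assuming the usual "interface: igc1" and "... dev vmbr0 ..." formats. As long as an address in 172.16.10.0/24 is configured on an interface, the lookup for 172.16.10.x will normally keep returning that directly connected interface.

```shell
#!/bin/sh
# Sketch: extract the outgoing interface from a route lookup.

# FreeBSD/pfSense:  route -n get 172.16.10.51 | freebsd_route_iface
freebsd_route_iface() {
  awk '$1 == "interface:" { print $2; exit }'
}

# Linux (Proxmox, Ubuntu):  ip route get 172.16.10.1 | linux_route_dev
linux_route_dev() {
  awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}
```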

-

I wanted all traffic between the ProMax & pfSense to flow over the DAC.

That's why I reconfigured all the VLAN gateways to sit on the ix0 interface.

In the end, what I've got is vlan0, vlan20, vlan30 and vlan100, which carry "low" bandwidth traffic, reaching pfSense via igc1 / 2.5GbE, and all traffic from vlan40 sitting on ix0.

G

-

Strange bit...

On my pfSense:

On ubuntu-01:

    ifconfig -a
    docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
        ether 02:42:79:4c:b3:b2 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    ens18: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 172.16.10.51 netmask 255.255.255.0 broadcast 172.16.10.255
        inet6 fe80::be24:11ff:fec0:e81e prefixlen 64 scopeid 0x20<link>
        ether bc:24:11:c0:e8:1e txqueuelen 1000 (Ethernet)
        RX packets 30289 bytes 4984073 (4.9 MB)
        RX errors 0 dropped 32 overruns 0 frame 0
        TX packets 15485 bytes 3129562 (3.1 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    ens19: flags=4098<BROADCAST,MULTICAST> mtu 1500
        ether bc:24:11:81:2c:cb txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    ens20: flags=4098<BROADCAST,MULTICAST> mtu 1500
        ether bc:24:11:1e:20:ac txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

    lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 96 bytes 7756 (7.7 KB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 96 bytes 7756 (7.7 KB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

-

Got 172.16.30.11 working; did a manual configuration inside Ubuntu.

Battling with 172.16.40.11, which is the one on the fiber.

G

-

figured i'd try re-install ubuntu with the interfaces now "working"

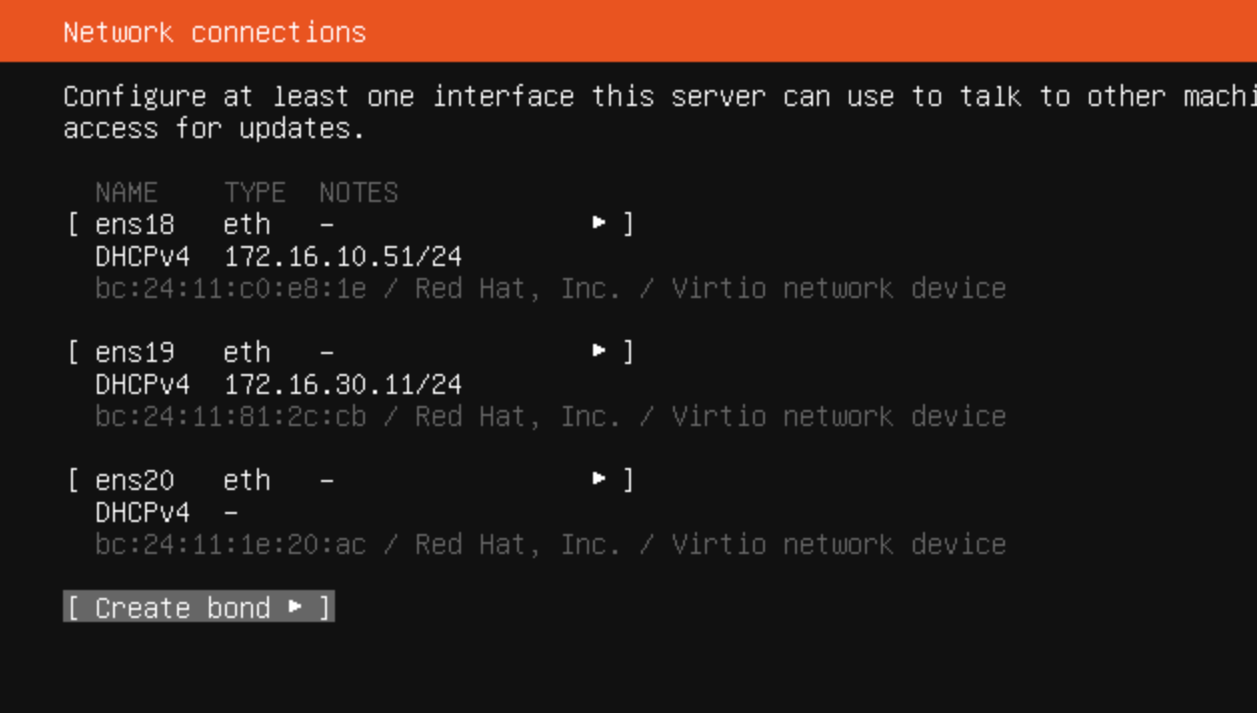

assigning the multiple networks at configuration time,as soon as ubuntu installer started, immediately picked up the intended 172.16.10.51 and 172.16.30.11 address. 172.16.40.11 did not want to get picked.

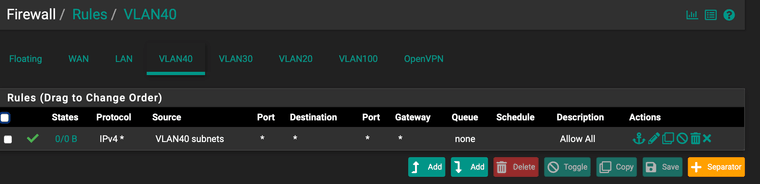

implying i have some rule problem between pfsense and the Proxmox.
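Before chasing firewall rules, it may be worth checking whether the VM's DHCP requests reach ix0 at all, and whether they arrive VLAN-tagged or untagged: a mismatch between a tag set on the Proxmox side and an untagged interface on pfSense (or the reverse) looks exactly like "DHCP doesn't work". A sketch, assuming tcpdump's -e output marks tagged frames with "ethertype 802.1Q ... vlan N"; the capture filter needs the vlan keyword to match tagged frames, and whether tags are visible on the parent interface can depend on driver offloads.

```shell
#!/bin/sh
# Sketch: summarise tagged vs untagged frames in "tcpdump -e" text output.
# Capture example on pfSense (interface name from this thread):
#   tcpdump -eni ix0 -c 20 '(port 67 or port 68) or (vlan and (port 67 or port 68))' | tag_summary
tag_summary() {
  awk '
    /ethertype 802\.1Q/ {
      if (match($0, /vlan [0-9]+/))
        tagged[substr($0, RSTART + 5, RLENGTH - 5)]++
      next
    }
    /ethertype IPv4/ { untagged++ }
    END {
      printf "untagged=%d\n", untagged
      for (v in tagged) printf "vlan%s=%d\n", v, tagged[v]
    }'
}
```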

This is also the interface that, even when configuring it manually, I could not get working on the guest VM.

G

Ubuntu installer

-

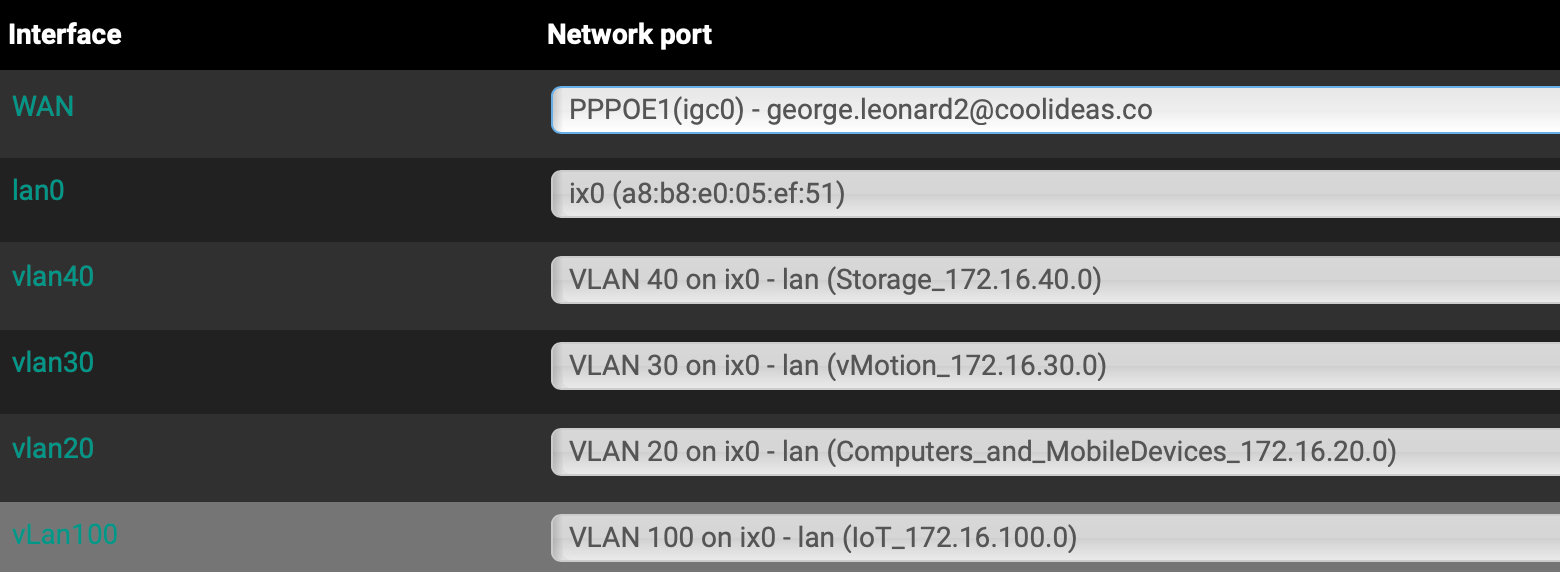

I changed the interface from a VLAN to a LAN... as it's not a VLAN anymore.

Scratching head.

G

-

Press enough buttons and things get working, hehehehe

;)

Now to unpack a bit what is what, then update the diagrams for my own understanding... then on to the next phase...

G

-

@georgelza said in pfSense not enabling port:

Now to unpack a bit what is what, then update the diagrams for my own understanding...

Yup, a diagram here would definitely help a lot!

-

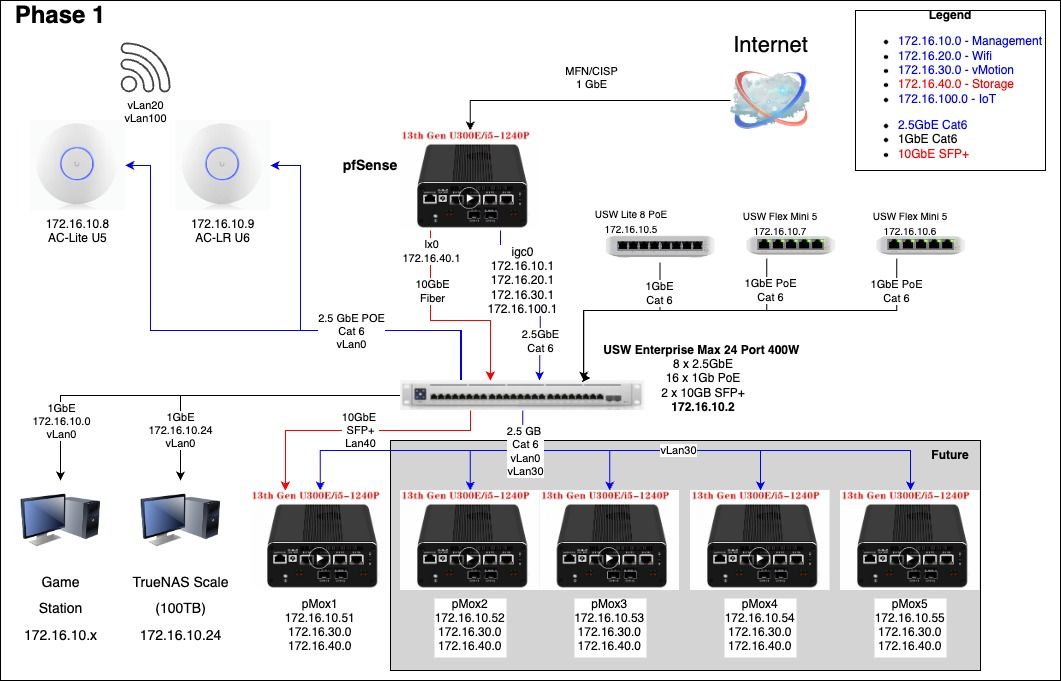

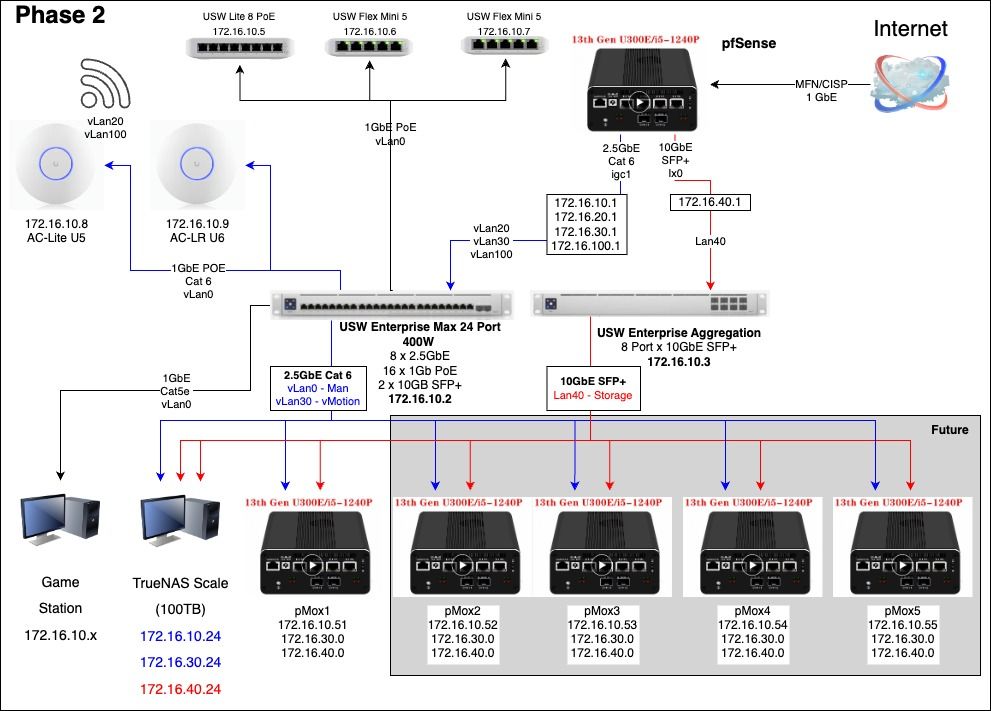

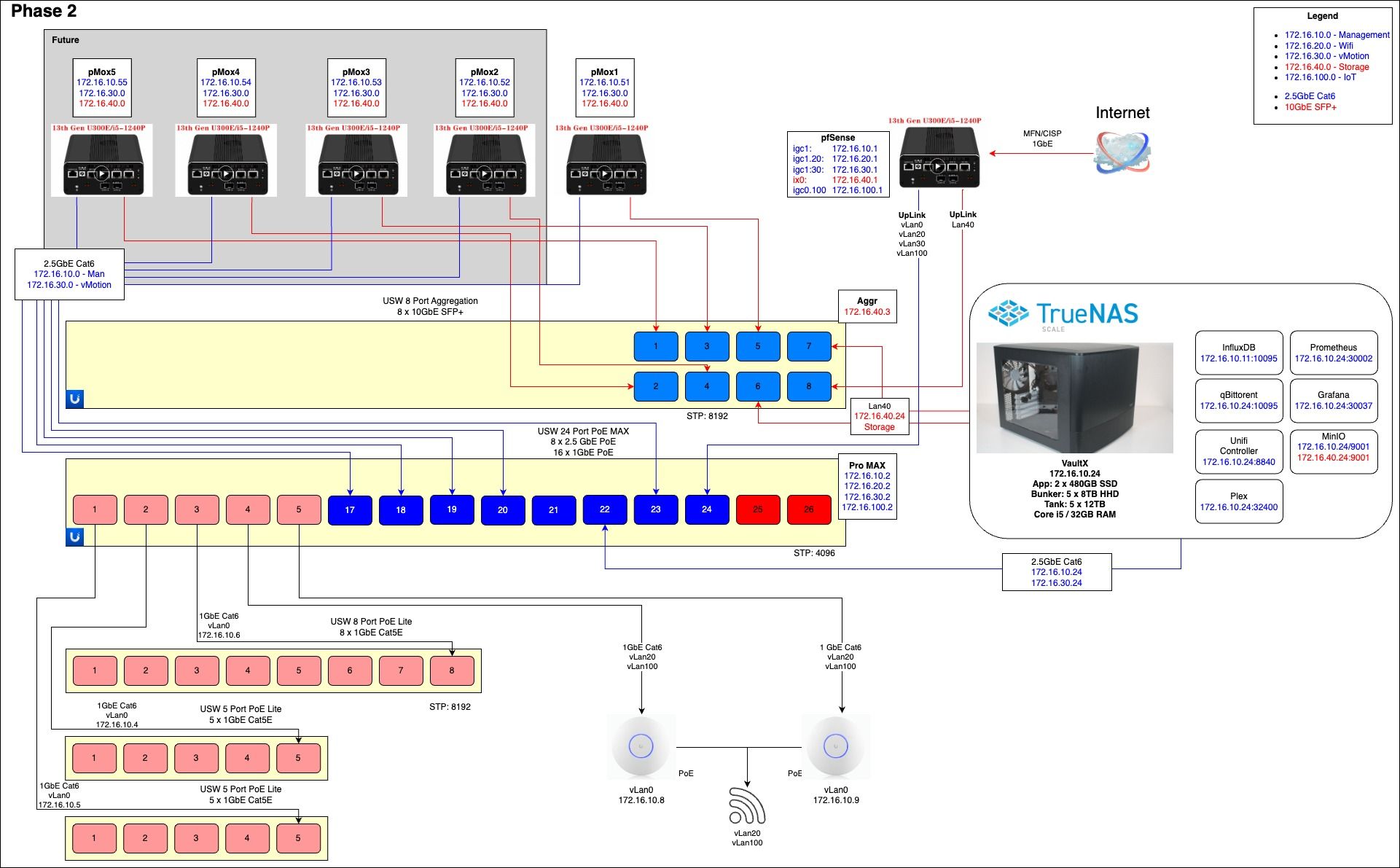

Da pictures... The planning. Phase 1 is completed.

The aggregation switch is on its way, so that will be phase 2, cabling-wise, by next weekend. Planning on getting another Topton on Black Friday; will then also get the dual-port 10GbE X520 fiber card for the TrueNAS.

NetworkPhases 2.1-Day 1.jpg, NetworkPhases 2.1-Day 2.jpg, NetworkPhases 2.1-Cabling 1.0.jpg

-

@georgelza said in pfSense not enabling port:

Press enough buttons and things get working, hehehehe

;)

Now to unpack a bit what is what, then update the diagrams for my own understanding... then on to the next phase...

G

Glad to see that you finally got it to work... it's a great feeling!


And now you know which cables and modules to use going forward. And as you already found out, there are likely others that will work as well. I suppose you were just unlucky with the ones you had... And who knows, there might be a way to make the Topton NIC accept those Dell modules as well. I guess the thing is that there is firmware in those modules (DACs as well), and even though the protocols are "standardized", it's still a matter of interpretation sometimes... And some vendors, like Intel, choose to reject modules that aren't on their supported list in some of their cards... Hence the command you tried earlier to "allow_unsupported device".
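For reference, the Intel 10G drivers on both platforms in this thread expose such a switch. A sketch that prints the relevant line, assuming the FreeBSD ix(4) loader tunable hw.ix.unsupported_sfp (pfSense keeps custom tunables in /boot/loader.conf.local) and the Linux ixgbe module parameter allow_unsupported_sfp. Both are use-at-your-own-risk and only take effect after a reboot or driver reload.

```shell
#!/bin/sh
# Sketch: print the setting that lets Intel ix/ixgbe accept SFP+ modules
# that are not on Intel's supported list.
unsupported_sfp_setting() {
  case "$1" in
    pfsense|freebsd)
      # Append to /boot/loader.conf.local, then reboot.
      echo 'hw.ix.unsupported_sfp="1"' ;;
    proxmox|linux)
      # Put in /etc/modprobe.d/ixgbe.conf; if ixgbe loads from the
      # initramfs, also run "update-initramfs -u", then reboot.
      echo 'options ixgbe allow_unsupported_sfp=1' ;;
    *)
      echo "unknown platform: $1" >&2
      return 1 ;;
  esac
}
```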

One thought about your issue with the Docker application not "finding" VLAN 40...

You can of course add a specific VLAN tag to each interface you assign to a VM, and you don't have to set the bridge or anything to be "VLAN aware". But in this case Proxmox will untag all traffic towards the VM and tag it going out of the bridge towards the switch.

And since Docker can only "see" what the host VM is receiving (untagged traffic), neither Docker nor the containers will be able to attach to any specific VLAN.
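The per-NIC tag described above is the tag= option on a Proxmox VM network device, which can also be set from the host shell with qm set. A sketch that builds the option string; the VM ID, bridge, VLAN and MAC in the example are hypothetical. As far as I know, leaving out the MAC makes Proxmox generate a new one, which would break an existing DHCP reservation, so the optional third argument keeps it.

```shell
#!/bin/sh
# Sketch: build a Proxmox VM NIC spec. With tag= set, Proxmox tags/untags
# that VLAN for the VM, so the guest only ever sees untagged frames.
nic_spec() {
  # $1 = bridge, $2 = VLAN ID (may be empty), $3 = MAC to keep (may be empty)
  model="virtio"
  if [ -n "${3:-}" ]; then model="virtio=$3"; fi
  spec="$model,bridge=$1"
  if [ -n "${2:-}" ]; then spec="$spec,tag=$2"; fi
  printf '%s\n' "$spec"
}

# Example (Proxmox host, hypothetical VM 101):
#   qm set 101 --net1 "$(nic_spec vmbr0 30 BC:24:11:81:2C:CB)"
```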

To change the bridge interface (vmbr0, vmbr40) into a trunk interface, simply make it VLAN aware from the UI.

Then it will pass any and all VLANs from 2-4094, or something like that. And then in the VM OS, you have to handle the VLANs yourself. I have never tried that myself, but basically in Docker you will have to create an interface, which you can then assign to the container. Something like this, I suppose...

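    # macvlan network on VLAN 40; Docker creates the eth0.40 subinterface if it doesn't exist.
    # eth0 is a placeholder: use the VM's actual NIC name.
    docker network create -d macvlan \
      --subnet=192.168.40.0/24 \
      --gateway=192.168.40.1 \
      -o parent=eth0.40 \
      vlan40_net

    # Attach a container to that network
    docker run -d --name mycontainername --network vlan40_net some_image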
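For reference, ticking "VLAN aware" on a Linux bridge in the Proxmox UI corresponds to bridge-vlan-aware/bridge-vids lines in /etc/network/interfaces. A sketch that prints such a stanza, with vmbr40/enp4s0f0 taken from this thread as example values. Inside the VM, the tagged traffic then needs a VLAN subinterface, e.g. ip link add link ens20 name ens20.40 type vlan id 40 (non-persistent; the interface name is an assumption).

```shell
#!/bin/sh
# Sketch: print an ifupdown2 stanza for a VLAN-aware (trunk) Proxmox bridge.
vlan_aware_bridge() {
  # $1 = bridge name, $2 = physical port, $3 = allowed VLANs (default 2-4094)
  cat <<EOF
auto $1
iface $1 inet manual
    bridge-ports $2
    bridge-stp off
    bridge-fd 0
    bridge-vlan-aware yes
    bridge-vids ${3:-2-4094}
EOF
}

# Example: vlan_aware_bridge vmbr40 enp4s0f0
```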

-

@georgelza said in pfSense not enabling port:

Planning on getting another Topton on Black Friday; will then also get the dual-port 10GbE X520 fiber card for the TrueNAS.

Remember the issues you had here... so when looking for X520 cards, do some googling, and perhaps you can make use of your Dell links... When I got my X520s, I had read about how Intel is picky about which modules they accept. They actually whitelist (in the firmware of the card) which modules they accept, and anything that doesn't match is an "unsupported device". So I went for a Fujitsu-made Intel card and have had no trouble with it. There are plenty out there, and I hear Mellanox cards are great as well.

-

@Gblenn said in pfSense not enabling port:

One thought about your issue with the Docker application not "finding" VLAN 40...

This was not Docker; this was purely an Ubuntu VM on Proxmox. Trying to remember now whether it picked up an IP via DHCP automatically in the end...

I did end up rebuilding the VM, thinking that maybe, because the networks published internally via Proxmox were not 100% when I built it, that might have impacted the VM. So once I knew the networks were more "right", I redid the VM... Lessons learned about how I want to manage the IP ranges etc., as I built, broke down and rebuilt...

PS: I also ended up making vlan40 just lan40... as it's the only network sitting on that port on the pfSense, and it will have a dedicated port on the Proxmox hosts.

@Gblenn said in pfSense not enabling port:

Remember the issues you had here... so when looking for X520 cards, do some googling, and perhaps you can make use of your Dell links... When I got my X520s, I had read about how Intel is picky about which modules they accept. They actually whitelist (in the firmware of the card) which modules they accept, and anything that doesn't match is an "unsupported device". So I went for a Fujitsu-made Intel card and have had no trouble with it. There are plenty out there, and I hear Mellanox cards are great as well.

Will need to find exactly what I'm looking for before the day, and then hope it's there on the day to add to the basket at a Black Friday special price.

Luckily the Dell/EMC transceivers work happily in the UniFi switches, so that's at least half sorted. Looking at the prices... I now have a known workable solution available locally... so I might buy the transceivers locally as well.

He he he. This thread turned into a network/Proxmox/pfSense rabbit hole... hope it ends up being useful for others... as I've noticed a lot of people now deploy their pfSense as a guest on Proxmox... so maybe this will help them.

I'm seriously impressed with these little Topton devices; they pack a mean amount of power in a nicely packaged solution.

The i5 1335 and i5 1240 are two very good Proxmox CPU options, and I think the U300E is awesome as a physical pfSense host; it's got some serious single-thread capability.

Now to figure out how I want to do the storage... I know I know too little, and there's probably a lot to improve...

G

-

@georgelza said in pfSense not enabling port:

PS: I also ended up making vlan40 just lan40... as it's the only network sitting on that port on the pfSense, and it will have a dedicated port on the Proxmox hosts.

Re the above, this might flip back to being a VLAN... I'll have to see whether I patch the aggregation switch into the ix0 port on the pfSense or hang it off the Pro Max. I like the idea of the Pro Max, as I can then better expose this in my UniFi manager. Most of the traffic across it will stay on it... and the little that might come from another network will be low volume, so having to go up to the pfSense (as it traverses a network, and thus a FW rule) and then back down shouldn't be a major issue.

For those who've been following this thread: we're basically back at the phase 3a vs 3b discussion, which becomes more actionable now that we have known working components.

G

-

Ha ha ha... and there I thought I was finished with questions...

Guess this is more of a Proxmox question, but it might be of value to people.

(Sorry, the Proxmox forum seems to be dead, with very few sections; nowhere I can see where I could even ask this.)

So, with, let's say, limited 10GbE ports on the aggregation switch, and also not wanting to just eat up ports on my Pro Max, I do have to run some of the traffic over the same links. For Proxmox I see the following:

Main management interface

Main client connectivity

Proxmox cluster interconnect

Storage

Mapped to my network:

Main management => lan / 172.16.10.0/24

Main client connectivity => lan

Proxmox cluster => vlan30

Storage => vlan40

Currently I have lan and vlan30 on the 2.5GbE copper, and vlan40 on the 10GbE fiber.

The other side of the storage is the TrueNAS, which will have 2 x 10GbE fiber connections.

Comment: an alternative could be to move vlan30 onto the fiber as well.

At the moment, the Proxmox install just ate up the entire 1TB NVMe card...

Thinking a shared Ceph volume might be a good idea, to host the VM images and the ISOs on... so I'm thinking of redoing the pmox1 install, giving the Proxmox hypervisor, say, 200GB, and then making the balance available to Ceph.

For now it won't mean much, but when I start adding the other nodes it will become "valuable".

G

-

@georgelza What is your thinking re Ceph and splitting the drive for Proxmox?

Perhaps you could use that for backups of VMs and stuff, but I'm not so sure that is a good idea? You want to keep those disks alive for a long time, so I'd try to keep the disk activity low.

Perhaps it's a better idea to install a Proxmox Backup Server as a VM on one of the Proxmox machines, and then assign some disk space on your TrueNAS Scale to it for VM backups. You can always store a few backups directly on the Proxmox machines as well, for somewhat quicker restores.

Wrt the port mappings, I wouldn't necessarily limit any port on Proxmox to a single VLAN. I mean, you can have all this on a single port if you want:

Main management => lan / 172.16.10.0/24

Main client connectivity => lan

Proxmox cluster => vlan30

Storage => vlan40

I have two Proxmox machines with 2.5G ports and one with a 1G port on the mobo, and they all have 10G SFP+ added. I assign ports and VLANs to VMs freely, depending on need. So anything that may require high throughput is assigned one or more 10G ports. And if I want it to be in a particular VLAN, I set that in Proxmox (so it is as if it were hanging off an untagged port on a switch).

I also have some VLANs that only exist on my switches (no interface or DHCP on pfSense). They are used to create switches within the switches, so to say. One thing I use this for is to "tunnel" the LTE router LAN port from our top floor to my failover WAN on pfSense. This is assigned to the 2.5G port on Proxmox, the same port being used for a couple of other VMs as well... In this case I have two SFP+ ports passed through (IOMMU) to pfSense, so they can't be used for any other VMs.

I mean the logical connections, or rather assignments, don't have to define or limit the way you physically connect things. And I'm sort of liking 3A more in that regard, as it is cleaner and simpler on the pfSense side, and the trunk between your two switches is 10G.

And I'm wondering about the dual connection to the NAS. What disks are you using? Would you really expect throughput to reach even 10G?
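A back-of-the-envelope check on that question, converting line rate to bytes and ignoring Ethernet/TCP/SMB/NFS overhead (which lowers these further). The per-disk figure is a rough assumption, not a measurement: a large modern HDD is taken at about 250 MB/s sequential, while a SATA SSD tops out near 600 MB/s (6 Gbit/s with 8b/10b encoding).

$$
\begin{aligned}
10\,\text{Gbit/s} \div 8 &= 1.25\,\text{GB/s} \\
2 \times 10\,\text{Gbit/s} \div 8 &= 2.5\,\text{GB/s} \\
1.25\,\text{GB/s} \div 0.25\,\text{GB/s per HDD} &= 5\ \text{HDDs streaming in parallel (best case)}
\end{aligned}
$$

So filling even one 10G link would need several striped HDDs or SSD/NVMe storage; a second link would mainly add redundancy or help when several clients hit the NAS at once.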

I'm wondering if this discussion should continue in the Virtualization section, where perhaps we could also discuss the option of virtualizing pfSense, and the benefits and possible drawbacks of that...

I mean, the i5-P1240 seems pretty much on par with my i5-11400, which is simply killing it in my setup on Proxmox... Even the 10400 that I also have gives me similar performance on a 10G fiber connection: 8+ Gbps speedtest with Suricata running in legacy mode.