Unbound/DNS resolver with IPv6 unreliable finally solved

-

Are you sure you have disabled the auto rules?

The access_lists.conf does not look like that in my case with auto rules disabled. -

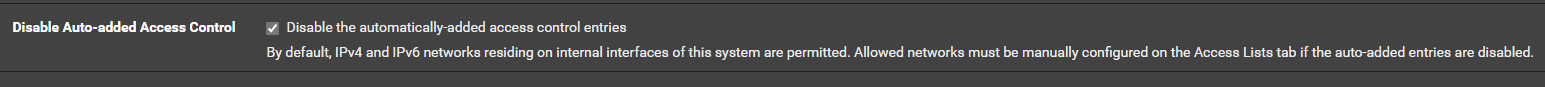

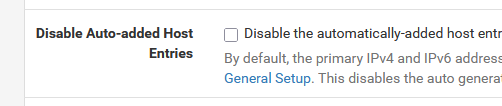

When I check this :

( which I don't have checked right now )

I have to create my own access list .... so more chances to f##k up.

I'm a "leave it to default" guy

-

@Gertjan said in Unbound/DNS resolver with IPv6 unreliable finally solved:

I wonder what happens if I delete these two lines :

![image](a9c12224-4af3-4fde-8015-2265b6b91de5-image.png)

I would delete `::1/128 allow`, add the `/128` CIDR notation to the `::1 allow_snoop` entry manually, and leave `127.0.0.1/32 allow_snoop` as is.

But I agree that neither may be necessary, as my auto-generated `/var/unbound/access_lists.conf` contains only the ACLs I've defined via the webGUI. No loopback addresses are present. -


@tinfoilmatt said in Unbound/DNS resolver with IPv6 unreliable finally solved:

127.0.0.1/128

Isn't that a 'syntax error' ?

127.0.0.1/32 is as far as it goes. -
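For anyone who wants to sanity-check a prefix before pasting it into a custom access list, here is a minimal Python sketch using the standard `ipaddress` module. It only checks that the CIDR notation is valid for the address family; it says nothing about how unbound itself parses the file. The helper name `is_valid_cidr` is made up for illustration.

```python
import ipaddress


def is_valid_cidr(text: str) -> bool:
    """Return True if text parses as an IPv4 or IPv6 network in CIDR notation."""
    try:
        # strict=False tolerates host bits being set (e.g. 127.0.0.1/8);
        # an out-of-range prefix length still raises ValueError.
        ipaddress.ip_network(text, strict=False)
        return True
    except ValueError:
        return False


# Prefixes mentioned in this thread.
for candidate in ("127.0.0.1/32", "127.0.0.1/128", "::1/128", "127.0.0.0/8"):
    print(candidate, is_valid_cidr(candidate))
```

An IPv4 prefix length tops out at 32 and an IPv6 one at 128, which is why `/128` only makes sense on `::1`.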

Over the weekend I tried adding this to my manual access list:

access-control: ::1/128 allow_snoop

The result was that both the primary and the secondary firewall had an unresponsive unbound service on Sunday. Today I removed the access rule above. We will see how this goes.

Does anybody know what this rule is for?

-

Yes, 127.0.0.1/128 is wrong and 127.0.0.1/32 is correct, but I see that the auto rule allows 127.0.0.0/8. Is that necessary? If it is, which other IP addresses in 127.0.0.0/8 are in use?

-

@strandte said in Unbound/DNS resolver with IPv6 unreliable finally solved:

but I see that the auto rule allows 127.0.0.0/8. Is that necessary? If it is, which other IP addresses in 127.0.0.0/8 are in use?

127.0.0.0/8 is a bit large, true.

Execute, for example, `sockstat -4 | grep '127'` to see who is using 127.a.b.c

-

I can't see any address in 127.0.0.0/8 in use other than 127.0.0.1, so I would assume it is OK to replace 127.0.0.0/8 with 127.0.0.1/32.
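If the `sockstat` output is long, the same check can be scripted. Below is a rough Python sketch that pulls every `address:port` pair out of some text and keeps the distinct addresses inside 127.0.0.0/8. The `SAMPLE` lines are invented to resemble `sockstat -4` output and are not taken from a real box, and the function name `loopback_addresses` is hypothetical.

```python
import ipaddress
import re

LOOPBACK_V4 = ipaddress.ip_network("127.0.0.0/8")
# Matches "a.b.c.d:port" and captures the dotted-quad part.
ADDR_RE = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):\d+")


def loopback_addresses(sockstat_text: str) -> set[str]:
    """Return the distinct 127.0.0.0/8 addresses found in the given text."""
    found = set()
    for match in ADDR_RE.finditer(sockstat_text):
        try:
            addr = ipaddress.ip_address(match.group(1))
        except ValueError:
            continue  # looked like an address but is not a valid one
        if addr in LOOPBACK_V4:
            found.add(str(addr))
    return found


# Invented sample lines, for illustration only.
SAMPLE = """\
unbound  unbound  1234 5 udp4 127.0.0.1:53   *:*
unbound  unbound  1234 6 tcp4 127.0.0.1:953  *:*
root     sshd     2345 3 tcp4 192.168.1.1:22 *:*
"""

print(sorted(loopback_addresses(SAMPLE)))
```

If the only address that comes back is 127.0.0.1, that matches what I saw by eye.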

-

Sure.

Will it make any difference ?

Not sure. -

@strandte said in Unbound/DNS resolver with IPv6 unreliable finally solved:

After I set up monitoring, I found that the DNS resolver on the pfSense boxes often stopped for a while and then automatically started responding to queries again, and that the problem seemed more pronounced when resolving via the IPv6 addresses of the pfSense boxes. Often unbound stopped responding to queries sent to the IPv6 address but still responded to queries sent to the IPv4 address. After a while both became unresponsive. When this was the case, restarting the service made it respond to queries again, but I think it might also have recovered on its own at some point if I had done nothing. When the service had actually stopped, this would of course not be the case.

I honestly don't think that the unbound control settings are related to this issue. Unless access control for unbound simply prevents its endless restarts and refreshes, which, in turn, solves one problem but clearly causes a thousand others. In fact, unbound was rock-stable for me on 24.11 and earlier. But it "broke" on the 23.05 beta because pfSense suddenly decided that now, every time it receives configuration packets (RA info) from the ISP, it needs to refresh and update all related settings, including unbound, even if no changes are detected in those settings received. When I started digging into this issue, I was surprised to see just how many requests there were to stop and restart the service — sometimes ending with it stopping and not starting again. Ideally, with proper Python module integration, everything should be much more stable, but sometimes it is not.

-

@Gertjan said in Unbound/DNS resolver with IPv6 unreliable finally solved:

Isn't that a 'syntax error' ?

Yes, typo. Post edited. Thanks for pointing that out.