New PPPoE backend, some feedback

-

@stephenw10 said in New PPPoE backend, some feedback:

There isn't anything like the same logging that mpd gives. Yet. I would run a pcap on the parent NIC and see what's actually happening. I would think it has to send from the CARP MAC since it clearly doesn't use the actual VIP IP.

Are you talking about the mpd backend or the new one? On the new one, when selecting the CARP VIP, the pcap on the parent interface naturally shows nothing — the new backend simply can't configure itself properly and doesn't start at all.

Interesting. I switched back to mpd, leaving the settings with the VIP that were configured for the new backend — and now PPPoE doesn't want to work even with mpd. Something is definitely wrong with the configuration conversion between the two backends.

In the log, it also looks like it's connecting through the wrong interface:

2025-04-08 18:55:45.407053+03:00 ppp 56619 [wan] Bundle: Interface ng0 created

2025-04-08 18:55:45.406382+03:00 ppp 56619 web: web is not running

2025-04-08 18:55:44.495307+03:00 ppp 36089 process 36089 terminated

2025-04-08 18:55:44.446476+03:00 ppp 36089 [wan] Bundle: Shutdown

2025-04-08 18:55:44.403502+03:00 ppp 56619 waiting for process 36089 to die...

2025-04-08 18:55:43.401537+03:00 ppp 56619 waiting for process 36089 to die...

2025-04-08 18:55:42.400289+03:00 ppp 36089 [wan] IPV6CP: Close event

2025-04-08 18:55:42.400259+03:00 ppp 36089 [wan] IPCP: Close event

2025-04-08 18:55:42.400219+03:00 ppp 36089 [wan] IFACE: Close event

2025-04-08 18:55:42.400117+03:00 ppp 36089 caught fatal signal TERM

2025-04-08 18:55:42.399979+03:00 ppp 56619 waiting for process 36089 to die...

2025-04-08 18:55:42.399687+03:00 ppp 56619 process 56619 started, version 5.9

2025-04-08 18:55:42.399135+03:00 ppp 56619 Multi-link PPP daemon for FreeBSD

2025-04-08 18:54:43.826132+03:00 ppp 36089 [wan] Bundle: Interface ng0 created

but nothing on LAGG

Ok next step...

I booted into the previous snapshot from February, launched PPPoE and pcap there —

Here’s an example of one of the packets:

b4:96:91:c9:77:84 is just the MAC of ixl0, the active Ethernet card in the LAGG (FAILOVER) that I used for the CARP VIP.

-

Hmm, well I can certainly see why that might fail. Setting it on a VIP really makes no sense for an L2 protocol. It seems like it worked 'by accident'. I'm not sure that will ever work with if_pppoe. I'll see if Kristof has any other opinion...

-

@stephenw10 I don't see how setting a carp IP on a PPPoE interface would make sense, no.

It doesn't make sense on the underlying Ethernet device (because it's not expected to have an address assigned at all), and also doesn't make sense on the PPPoE device itself, because there's no way to do the ARP dance that makes carp work.

-

@kprovost said in New PPPoE backend, some feedback:

It doesn't make sense on the underlying Ethernet device (because it's not expected to have an address assigned at all), and also doesn't make sense on the PPPoE device itself, because there's no way to do the ARP dance that makes carp work.

The whole point is to use the status of the parent interface to bring up the PPPoE interface. To determine the status of the parent (underlying) interface, the CARP VIP on the parent interface is exactly what's needed — to identify which node is the master and where to bring up PPPoE. Honestly, I have no idea why it even worked before. But if it's not supposed to work and never will, then of course I won't insist on this approach :)

Ideally, there would simply be a feature to bring up the PPPoE WAN session only if the firewall is the MASTER.
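
A minimal sketch of that idea, purely as an illustration: it assumes the CARP state is read from `ifconfig` output on the parent interface, where FreeBSD prints a line such as `carp: MASTER vhid 1 advbase 1 advskew 0`. The names `carp_states` and `should_run_pppoe` and the sample text are made up for this example; this is not an existing pfSense feature or API.

```python
import re

# Matches the CARP status line printed by FreeBSD ifconfig, e.g.
# "carp: MASTER vhid 1 advbase 1 advskew 0"
CARP_LINE = re.compile(r"^\s*carp:\s+(?P<state>\w+)\s+vhid\s+(?P<vhid>\d+)", re.MULTILINE)


def carp_states(ifconfig_output):
    """Return {vhid: state} for every CARP line found in the ifconfig text."""
    return {int(m.group("vhid")): m.group("state")
            for m in CARP_LINE.finditer(ifconfig_output)}


def should_run_pppoe(ifconfig_output, vhid):
    """Hypothetical policy: only the node that is CARP MASTER for `vhid` dials PPPoE."""
    return carp_states(ifconfig_output).get(vhid) == "MASTER"


# Invented sample output for a parent interface on the backup node.
sample = (
    "lagg0: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500\n"
    "\tcarp: BACKUP vhid 1 advbase 1 advskew 100\n"
)

print(should_run_pppoe(sample, vhid=1))  # False
```

Something would still have to run this check on every CARP state change and start or stop the PPPoE interface accordingly; that part is deliberately left out.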

I doubt I'm the only one whose ISP doesn't appreciate users trying to initiate more than one PPPoE session.

-

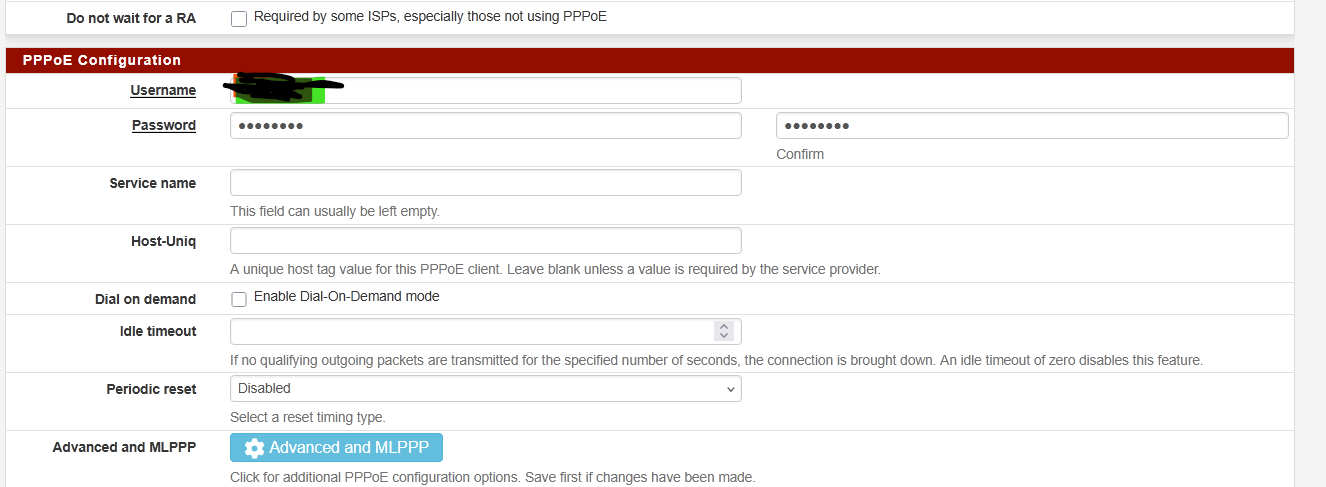

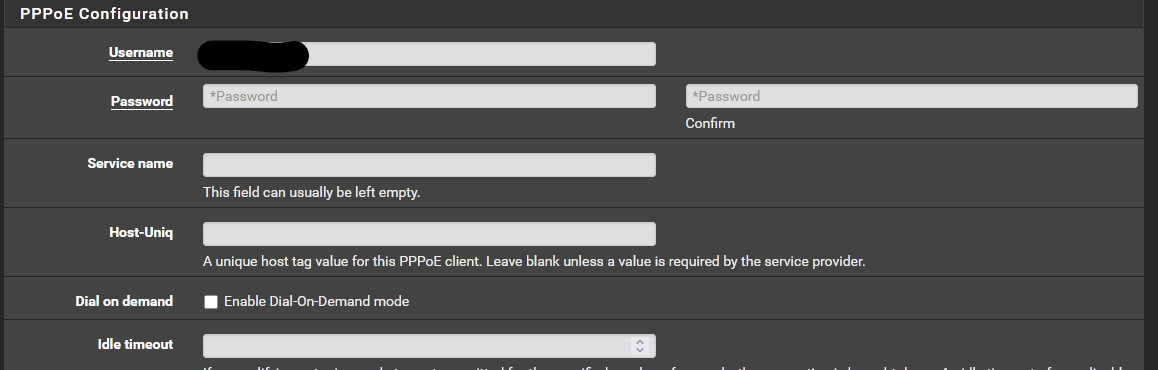

I've recently upgraded to the latest 2.8 beta and switched to the new PPPoE backend.

I really didn't have any issues with the previous one other than performance. I recently upgraded to 3 Gbps fiber and have been struggling to get full speed when using PPPoE on the pfSense box.

I have found no difference between the old and the new backend; performance still seems to be the same. The odd thing is that I get full speed on the upload, but only about half to two-thirds on the download, i.e. I get 3000-3200 Mbps upload, but download is usually around 1700-1900 Mbps.

I've tried it with an Intel X520 card and an X710 card. No difference.

What I have noticed, and I'm not sure if this is the reason for the performance hit, is that on the upload (tx) side it seems to use all the queues available to it, but on the rx side it only uses the first queue. I tried tweaking the queues on the X710 and it didn't make any difference.

Here's an example

[2.8.0-BETA][root@router]/root: sysctl -a | grep '.ixl..*xq0' | grep packets

dev.ixl.0.pf.txq07.packets: 2550054

dev.ixl.0.pf.txq06.packets: 2444906

dev.ixl.0.pf.txq05.packets: 542271

dev.ixl.0.pf.txq04.packets: 781264

dev.ixl.0.pf.txq03.packets: 2216896

dev.ixl.0.pf.txq02.packets: 2738515

dev.ixl.0.pf.txq01.packets: 5394

dev.ixl.0.pf.txq00.packets: 8645

dev.ixl.0.pf.rxq07.packets: 0

dev.ixl.0.pf.rxq06.packets: 0

dev.ixl.0.pf.rxq05.packets: 0

dev.ixl.0.pf.rxq04.packets: 0

dev.ixl.0.pf.rxq03.packets: 0

dev.ixl.0.pf.rxq02.packets: 0

dev.ixl.0.pf.rxq01.packets: 0

dev.ixl.0.pf.rxq00.packets: 6262688

At the moment I have this in my loader.conf.local file:

net.tcp.tso="0"

net.inet.tcp.lro="0"

hw.ixl.flow_control="0"

hw.ix1.num_queues="8"

dev.ixl.0.iflib.override_qs_enable=1

dev.ixl.0.iflib.override_nrxqs=8

dev.ixl.0.iflib.override_ntxqs=8

dev.ixl.1.iflib.override_qs_enable=1

dev.ixl.1.iflib.override_nrxqs=8

dev.ixl.1.iflib.override_ntxqs=8

dev.ixl.0.iflib.override_nrxds=4096

dev.ixl.0.iflib.override_ntxds=4096

dev.ixl.1.iflib.override_nrxds=4096

dev.ixl.1.iflib.override_ntxds=4096

If I could fix this issue the rest seems to be rock solid.
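
The per-queue packet counters quoted in this post can be summarised with a short script. This is only a sketch: the function name `queue_shares` is made up, and it assumes the `dev.<driver>.<unit>.pf.rxqNN.packets` / `txqNN.packets` line format shown in the sysctl output, with the text pasted in by hand.

```python
import re

# Matches lines such as "dev.ixl.0.pf.rxq00.packets: 6262688"
QUEUE_LINE = re.compile(r"^dev\.\w+\.\d+\.pf\.(rx|tx)q(\d+)\.packets:\s*(\d+)$")


def queue_shares(sysctl_text):
    """Return {"rx": {queue: share}, "tx": {queue: share}} from sysctl counter lines."""
    counts = {"rx": {}, "tx": {}}
    for line in sysctl_text.splitlines():
        m = QUEUE_LINE.match(line.strip())
        if m:
            direction, queue, packets = m.group(1), int(m.group(2)), int(m.group(3))
            counts[direction][queue] = packets
    shares = {}
    for direction, per_queue in counts.items():
        total = sum(per_queue.values())
        # Share of all packets in this direction handled by each queue.
        shares[direction] = {q: (n / total if total else 0.0)
                             for q, n in sorted(per_queue.items())}
    return shares


# A few of the counters from the post, pasted as sample input.
sample = """\
dev.ixl.0.pf.txq01.packets: 5394
dev.ixl.0.pf.txq00.packets: 8645
dev.ixl.0.pf.rxq01.packets: 0
dev.ixl.0.pf.rxq00.packets: 6262688
"""

shares = queue_shares(sample)
print(shares["rx"])  # {0: 1.0, 1: 0.0}
```

These counters are cumulative, so for a fair picture it is probably better to capture them before and after a speed test and compare the differences.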

-

How are you testing? What hardware are you running?

The upload speed is also unchanged from mpd5?

-

I'm running a Supermicro E300-8D with a Xeon D-1518 CPU and 16 GB of RAM.

The onboard 10 Gbps NICs are LAGG'd into my LAN, and the add-on slot currently holds an X710, though initially I had tried an X520.

The switch from mpd5 made very little if any difference. Maybe 100 Mbps, if that.

I'm testing from my PC, which has 10 Gbps fiber into my core switch, and the pfSense box is fed by the 10 Gbps LAGG group mentioned above.

When I run the speed test right from the modem itself using the provider interface it comes back with 3.2 Gbps up and down every time. When running it from my PC I'm using the provider's speed test site. Upload is always 3-3.2 Gbps, but the download always falls short.

The only difference I saw going from mpd5 to the new one is that the CPU usage dropped. Previously on a test I'd see upwards of 60% CPU; now it's 38-43%: 39% on the download and upwards of 43% on the upload tests.

The only thing I haven't tried that I can think of is to remove the PPPoE from pfSense and just set it up as DHCP to the provider modem and see if I get the full speed all the way through.

The part I find odd is that if it was the PPPoE, I wouldn't think I'd get full speed on the upload. That's why I started looking at other things, like the queues, to see if I could find something off.

-

Does that speedtest use multiple connections?

The reason you see a difference between up and down is that when you're downloading, Receive Side Scaling applies to the PPPoE directly, and that is what limits it.

However if_pppoe is RSS enabled so should be able to spread the load across the queues/cores much better. But only if there are multiple streams to spread.

And just to be clear the WAN here was either the X520 or X710 NIC?
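
To illustrate the point about multiple streams: flow-based distribution (RSS-style) hashes each connection's addresses and ports to pick a queue or core, so one connection always lands in the same place and only several connections can spread out. The sketch below is a toy model under loud assumptions: `zlib.crc32` stands in for the real RSS hash (normally a Toeplitz hash), and the addresses, ports and bucket count are invented for the example. It does not reproduce what if_pppoe or the NIC actually computes.

```python
import zlib


def bucket_for_flow(src_ip, src_port, dst_ip, dst_port, buckets=8):
    """Map a 4-tuple to a bucket. crc32 is a stand-in, NOT the real RSS hash."""
    key = f"{src_ip}:{src_port}->{dst_ip}:{dst_port}".encode()
    return zlib.crc32(key) % buckets


def buckets_used(flows, buckets=8):
    """Return the set of buckets hit by a list of flows."""
    return {bucket_for_flow(*flow, buckets=buckets) for flow in flows}


# One download stream: 1000 packets, all belonging to the same flow.
single_stream = [("203.0.113.10", 443, "198.51.100.7", 50000)] * 1000

# A multi-stream test: 64 flows that differ only in the client port.
multi_stream = [("203.0.113.10", 443, "198.51.100.7", 50000 + i) for i in range(64)]

print(len(buckets_used(single_stream)))  # 1
print(len(buckets_used(multi_stream)))
```

However many packets the single stream carries, it only ever touches one bucket, which is why a single-connection test cannot show the benefit of spreading.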

-

@dsl-ottawa

Did you remove the net.isr.dispatch=deferred? See PPPoE with multi-queue NICs

-

@w0w said in New PPPoE backend, some feedback:

@dsl-ottawa

Did you remove the net.isr.dispatch=deferred? See PPPoE with multi-queue NICs

@w0w I did remove this value, shouldn't I ? I didn't see any change without.

-

@mr_nets said in New PPPoE backend, some feedback:

@w0w I did remove this value, shouldn't I ? I didn't see any change without.

AFAIK it should be removed on the new backend.

Do you have any other tunes enabled, flow control, tcp segmentation offload, LRO, no?

Just guessing... I have never seen anything over 1Gig running PPPoE...

-

@w0w said in New PPPoE backend, some feedback:

@mr_nets said in New PPPoE backend, some feedback:

@w0w I did remove this value, shouldn't I ? I didn't see any change without.

AFAIK it should be removed on the new backend.

Do you have any other tunes enabled, flow control, tcp segmentation offload, LRO, no?

Just guessing... I have never seen anything over 1Gig running PPPoE...

Fine, every offload setting is disabled as well. The only thing I didn't remove is jumbo frames on PPPoE (MTU 1500), since my ISP supports that.

-

What CPU usage are you seeing when you test? What about per core usage? Is one core still pegged at 100%?

-

@stephenw10

The multiple connection question is one I can't really answer. All I can say is that it's my provider's tester and they sell up to 8 Gbps on residential, so I have to assume that it takes multiple streams into account. There is a comment on their page that mentions Ookla and Speedtest, so there may be a link to them for the testing. It's an interesting thought though. Is there a known good tester to test against? I'm betting it's going to be hard to get a full pipe test across the internet.

Yup, I've tried both cards for the WAN side of the connection.

I've even thought that maybe the problem isn't on the pfSense side but elsewhere, like what if it's my desktop that is having the issue (long shot, but the reality is that it is a device in the chain of the test).

-

@w0w

I double checked and yup, currently it is not there.

So on my tunables side, here are the ones I think I have changed (some have been there for a while to help the 10 Gbps on the LAN side and general performance, and some were attempts to fix this issue). I'm certainly not an expert when it comes to tuning, so it's very possible that something is messed up.

kern.ipc.somaxconn 4096

hw.ix.tx_process_limit -1

hw.ix.rx_process_limit -1

hw.ix.rxd 4096

hw.ix.txd 4096

hw.ix.flow_control 0

hw.intr_storm_threshold 10000

net.isr.bindthreads 1

net.isr.maxthreads 8

net.inet.rss.enabled 1

net.inet.rss.bits 2

net.inet.ip.intr_queue_maxlen 4000

net.isr.numthreads 8

hw.ixl.tx_process_limit -1

hw.ixl.rx_process_limit -1

hw.ixl.rxd 4096

hw.ixl.rxd 4096

dev.ixl.0.fc 0

dev.ixl.1.fc 0

And in my boot local file:

net.inet.tcp.lro="0"

hw.ixl.flow_control="0"

hw.ix1.num_queues="8"

dev.ixl.0.iflib.override_qs_enable=1

dev.ixl.0.iflib.override_nrxqs=8

dev.ixl.0.iflib.override_ntxqs=8

dev.ixl.1.iflib.override_qs_enable=1

dev.ixl.1.iflib.override_nrxqs=8

dev.ixl.1.iflib.override_ntxqs=8

dev.ixl.0.iflib.override_nrxds=4096

dev.ixl.0.iflib.override_ntxds=4096

dev.ixl.1.iflib.override_nrxds=4096

dev.ixl.1.iflib.override_ntxds=4096

And on the network interface side:

TCP Segmentation Offloading, Large Receive Offloading and of course use if_pppoe are checked.

As for more than 1 Gbps PPPoE, I'm not sure where else in the world uses it, but in Canada they seem to love their PPPoE; we can get it up to 8 Gbps from one of the providers. Honestly I still miss the old PVC-based ATM connections: no special configuration from the user's perspective, it's just a pipe and it worked lol. I know the reason they use PPPoE, it's just that in cases like this it's extra overhead to deal with.
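
As a side note on the `net.inet.rss.bits` value in the tunables list above, here is a small worked example of what that number implies. It assumes the usual FreeBSD relationship (buckets = 2^bits, mask = buckets - 1) and a simple round-robin bucket-to-CPU mapping, which is consistent with the `net.inet.rss.buckets`, `net.inet.rss.mask` and `net.inet.rss.bucket_mapping` values quoted elsewhere in this thread. The helper name `rss_layout` is invented, and whether any of this explains the download shortfall is not established here.

```python
def rss_layout(bits, ncpus):
    """Return (buckets, mask, {bucket: cpu}) for a given rss.bits value.

    Assumes buckets = 2**bits and bucket -> cpu assigned round-robin.
    """
    buckets = 1 << bits
    mask = buckets - 1
    mapping = {bucket: bucket % ncpus for bucket in range(buckets)}
    return buckets, mask, mapping


# bits=4 on 8 CPUs: 16 buckets, mask 15, buckets 8..15 wrap back to CPUs 0..7.
print(rss_layout(4, 8)[:2])  # (16, 15)

# bits=2 on 8 CPUs: only 4 buckets, so only CPUs 0..3 are ever selected.
print(sorted(set(rss_layout(2, 8)[2].values())))  # [0, 1, 2, 3]
```

Under these assumptions, `bits=2` with 8 threads would leave half the cores without a bucket, so it may be worth comparing against a setup where the bucket count is at least the number of queues.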

-

Yeah, if it's Ookla it's almost certainly multistream. Also at those speeds it would probably need to be.

I'm not sure I can recommend anything other than the ISP's test at that speed either. Anything that was routed further may be restricted there.

But check the per-core CPU usage. Is it just hitting a single core limit?

-

@stephenw10

The per-core load doesn't seem to be an issue, which would mean that the new backend is doing its job. I captured this in the middle of the download test.

CPU 0: 9.4% user, 0.0% nice, 43.1% system, 0.0% interrupt, 47.5% idle

CPU 1: 7.8% user, 0.0% nice, 1.6% system, 18.0% interrupt, 72.5% idle

CPU 2: 3.9% user, 0.0% nice, 3.1% system, 45.9% interrupt, 47.1% idle

CPU 3: 6.7% user, 0.0% nice, 3.5% system, 14.5% interrupt, 75.3% idle

CPU 4: 4.7% user, 0.0% nice, 14.5% system, 27.1% interrupt, 53.7% idle

CPU 5: 20.4% user, 0.0% nice, 7.1% system, 0.8% interrupt, 71.8% idle

CPU 6: 12.9% user, 0.0% nice, 28.6% system, 0.4% interrupt, 58.0% idle

CPU 7: 5.1% user, 0.0% nice, 2.7% system, 27.8% interrupt, 64.3% idle

-

Hmm, yeah that looks fine. Yet you still see all the traffic on one receive queue?
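
For anyone wanting to check the same thing on their own box, the per-CPU lines quoted above can be parsed to find the busiest core. This is a rough sketch that assumes the `CPU N: x% user, ... y% idle` layout shown in that capture; the function name `parse_cpu_lines` is made up.

```python
import re

# Matches lines such as "CPU 0:  9.4% user,  0.0% nice, ... 47.5% idle"
CPU_LINE = re.compile(r"CPU\s+(\d+):\s+(.*)")


def parse_cpu_lines(text):
    """Return {cpu: {"user": .., "nice": .., "system": .., "interrupt": .., "idle": ..}}."""
    result = {}
    for m in CPU_LINE.finditer(text):
        fields = {}
        for part in m.group(2).split(","):
            value, name = part.strip().split("%", 1)
            fields[name.strip()] = float(value)
        result[int(m.group(1))] = fields
    return result


# Three of the lines from the capture, pasted as sample input.
sample = """\
CPU 0: 9.4% user, 0.0% nice, 43.1% system, 0.0% interrupt, 47.5% idle
CPU 1: 7.8% user, 0.0% nice, 1.6% system, 18.0% interrupt, 72.5% idle
CPU 2: 3.9% user, 0.0% nice, 3.1% system, 45.9% interrupt, 47.1% idle
"""

cpus = parse_cpu_lines(sample)
# The busiest core is the one with the least idle time.
busiest = min(cpus, key=lambda cpu: cpus[cpu]["idle"])
print(busiest, round(100 - cpus[busiest]["idle"], 1))  # 2 52.9
```

A core sitting close to 0% idle during the test would point at a single-core limit; in the sample above the busiest core is only a little over half loaded.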

-

Just FYI, some parameters used on one of my firewalls with an X710 and a Xeon(R) CPU E3-1285L v4:

net.inet.rss.bucket_mapping: 0:0 1:1 2:2 3:3 4:4 5:5 6:6 7:7 8:0 9:1 10:2 11:3 12:4 13:5 14:6 15:7

net.inet.rss.debug: 0

net.inet.rss.basecpu: 0

net.inet.rss.buckets: 16

net.inet.rss.maxcpus: 64

net.inet.rss.ncpus: 8

net.inet.rss.maxbits: 7

net.inet.rss.mask: 15

net.inet.rss.bits: 4

net.inet.rss.hashalgo: 2

hw.ixl.flow_control: 0

hw.ixl.tx_itr: 122

hw.ixl.rx_itr: 62

hw.ixl.shared_debug_mask: 0

hw.ixl.core_debug_mask: 0

hw.ixl.enable_head_writeback: 1

hw.ixl.enable_vf_loopback: 1

hw.ixl.i2c_access_method: 0

dev.ixl.0.wake: 0

dev.ixl.0.mac.xoff_recvd: 0

dev.ixl.0.mac.xoff_txd: 0

dev.ixl.0.mac.xon_recvd: 0

dev.ixl.0.mac.xon_txd: 0

dev.ixl.0.mac.tx_frames_big: 0

dev.ixl.0.mac.tx_frames_1024_1522: 21680695

dev.ixl.0.mac.tx_frames_512_1023: 14381471

dev.ixl.0.mac.tx_frames_256_511: 420044

dev.ixl.0.mac.tx_frames_128_255: 1330792

dev.ixl.0.mac.tx_frames_65_127: 2233359

dev.ixl.0.mac.tx_frames_64: 94083

dev.ixl.0.mac.checksum_errors: 0

dev.ixl.0.mac.rx_jabber: 0

dev.ixl.0.mac.rx_oversized: 0

dev.ixl.0.mac.rx_fragmented: 0

dev.ixl.0.mac.rx_undersize: 0

dev.ixl.0.mac.rx_frames_big: 0

dev.ixl.0.mac.rx_frames_1024_1522: 837784

dev.ixl.0.mac.rx_frames_512_1023: 105087

dev.ixl.0.mac.rx_frames_256_511: 526010

dev.ixl.0.mac.rx_frames_128_255: 801107

dev.ixl.0.mac.rx_frames_65_127: 17592164

dev.ixl.0.mac.rx_frames_64: 96374

dev.ixl.0.mac.rx_length_errors: 0

dev.ixl.0.mac.remote_faults: 0

dev.ixl.0.mac.local_faults: 1

dev.ixl.0.mac.illegal_bytes: 0

dev.ixl.0.mac.crc_errors: 0

dev.ixl.0.mac.bcast_pkts_txd: 25

dev.ixl.0.mac.mcast_pkts_txd: 1622

dev.ixl.0.mac.ucast_pkts_txd: 40138797

dev.ixl.0.mac.good_octets_txd: 39093159271

dev.ixl.0.mac.rx_discards: 0

dev.ixl.0.mac.bcast_pkts_rcvd: 2970

dev.ixl.0.mac.mcast_pkts_rcvd: 13177

dev.ixl.0.mac.ucast_pkts_rcvd: 19942379

dev.ixl.0.mac.good_octets_rcvd: 2847966747

dev.ixl.0.pf.txq03.itr: 122

dev.ixl.0.pf.txq03.bytes: 4629080532

dev.ixl.0.pf.txq03.packets: 5725464

dev.ixl.0.pf.txq03.mss_too_small: 0

dev.ixl.0.pf.txq03.tso: 0

dev.ixl.0.pf.txq02.itr: 122

dev.ixl.0.pf.txq02.bytes: 11637468273

dev.ixl.0.pf.txq02.packets: 10638943

dev.ixl.0.pf.txq02.mss_too_small: 0

dev.ixl.0.pf.txq02.tso: 0

dev.ixl.0.pf.txq01.itr: 122

dev.ixl.0.pf.txq01.bytes: 18307072164

dev.ixl.0.pf.txq01.packets: 18105770

dev.ixl.0.pf.txq01.mss_too_small: 0

dev.ixl.0.pf.txq01.tso: 0

dev.ixl.0.pf.txq00.itr: 122

dev.ixl.0.pf.txq00.bytes: 4364881017

dev.ixl.0.pf.txq00.packets: 5673826

dev.ixl.0.pf.txq00.mss_too_small: 0

dev.ixl.0.pf.txq00.tso: 0

dev.ixl.0.pf.rxq03.itr: 62

dev.ixl.0.pf.rxq03.desc_err: 0

dev.ixl.0.pf.rxq03.bytes: 0

dev.ixl.0.pf.rxq03.packets: 0

dev.ixl.0.pf.rxq03.irqs: 2153626

dev.ixl.0.pf.rxq02.itr: 62

dev.ixl.0.pf.rxq02.desc_err: 0

dev.ixl.0.pf.rxq02.bytes: 0

dev.ixl.0.pf.rxq02.packets: 0

dev.ixl.0.pf.rxq02.irqs: 2243131

dev.ixl.0.pf.rxq01.itr: 62

dev.ixl.0.pf.rxq01.desc_err: 0

dev.ixl.0.pf.rxq01.bytes: 0

dev.ixl.0.pf.rxq01.packets: 0

dev.ixl.0.pf.rxq01.irqs: 5679657

dev.ixl.0.pf.rxq00.itr: 62

dev.ixl.0.pf.rxq00.desc_err: 0

dev.ixl.0.pf.rxq00.bytes: 2766924779

dev.ixl.0.pf.rxq00.packets: 19948205

dev.ixl.0.pf.rxq00.irqs: 13195725

dev.ixl.0.pf.rx_errors: 0

dev.ixl.0.pf.bcast_pkts_txd: 25

dev.ixl.0.pf.mcast_pkts_txd: 281474976710649

dev.ixl.0.pf.ucast_pkts_txd: 40138797

dev.ixl.0.pf.good_octets_txd: 38931601458

dev.ixl.0.pf.rx_discards: 4294966174

dev.ixl.0.pf.bcast_pkts_rcvd: 2970

dev.ixl.0.pf.mcast_pkts_rcvd: 791

dev.ixl.0.pf.ucast_pkts_rcvd: 19941980

dev.ixl.0.pf.good_octets_rcvd: 2846521088

dev.ixl.0.admin_irq: 3

dev.ixl.0.link_active_on_if_down: 1

dev.ixl.0.eee.rx_lpi_count: 0

dev.ixl.0.eee.tx_lpi_count: 0

dev.ixl.0.eee.rx_lpi_status: 0

dev.ixl.0.eee.tx_lpi_status: 0

dev.ixl.0.eee.enable: 1

dev.ixl.0.fw_lldp: 1

dev.ixl.0.dynamic_tx_itr: 0

dev.ixl.0.dynamic_rx_itr: 0

dev.ixl.0.rx_itr: 62

dev.ixl.0.tx_itr: 122

dev.ixl.0.unallocated_queues: 380

dev.ixl.0.fw_version: fw 9.820.73026 api 1.15 nvm 9.20 etid 8000d966 oem 22.5632.9

dev.ixl.0.current_speed: 10 Gbps

dev.ixl.0.supported_speeds: 7

dev.ixl.0.advertise_speed: 7

dev.ixl.0.fc: 0

dev.ixl.0.iflib.rxq3.rxq_fl0.buf_size: 2048

dev.ixl.0.iflib.rxq3.rxq_fl0.credits: 128

dev.ixl.0.iflib.rxq3.rxq_fl0.cidx: 0

dev.ixl.0.iflib.rxq3.rxq_fl0.pidx: 128

dev.ixl.0.iflib.rxq3.cpu: 6

dev.ixl.0.iflib.rxq2.rxq_fl0.buf_size: 2048

dev.ixl.0.iflib.rxq2.rxq_fl0.credits: 128

dev.ixl.0.iflib.rxq2.rxq_fl0.cidx: 0

dev.ixl.0.iflib.rxq2.rxq_fl0.pidx: 128

dev.ixl.0.iflib.rxq2.cpu: 4

dev.ixl.0.iflib.rxq1.rxq_fl0.buf_size: 2048

dev.ixl.0.iflib.rxq1.rxq_fl0.credits: 128

dev.ixl.0.iflib.rxq1.rxq_fl0.cidx: 0

dev.ixl.0.iflib.rxq1.rxq_fl0.pidx: 128

dev.ixl.0.iflib.rxq1.cpu: 2

dev.ixl.0.iflib.rxq0.rxq_fl0.buf_size: 2048

dev.ixl.0.iflib.rxq0.rxq_fl0.credits: 1023

dev.ixl.0.iflib.rxq0.rxq_fl0.cidx: 685

dev.ixl.0.iflib.rxq0.rxq_fl0.pidx: 684

dev.ixl.0.iflib.rxq0.cpu: 0

dev.ixl.0.iflib.txq3.r_abdications: 0

dev.ixl.0.iflib.txq3.r_restarts: 0

dev.ixl.0.iflib.txq3.r_stalls: 0

dev.ixl.0.iflib.txq3.r_starts: 5719770

dev.ixl.0.iflib.txq3.r_drops: 0

dev.ixl.0.iflib.txq3.r_enqueues: 5733475

dev.ixl.0.iflib.txq3.ring_state: pidx_head: 1123 pidx_tail: 1123 cidx: 1123 state: IDLE

dev.ixl.0.iflib.txq3.txq_cleaned: 10975024

dev.ixl.0.iflib.txq3.txq_processed: 10975032

dev.ixl.0.iflib.txq3.txq_in_use: 9

dev.ixl.0.iflib.txq3.txq_cidx_processed: 824

dev.ixl.0.iflib.txq3.txq_cidx: 816

dev.ixl.0.iflib.txq3.txq_pidx: 825

dev.ixl.0.iflib.txq3.no_tx_dma_setup: 0

dev.ixl.0.iflib.txq3.txd_encap_efbig: 0

dev.ixl.0.iflib.txq3.tx_map_failed: 0

dev.ixl.0.iflib.txq3.no_desc_avail: 0

dev.ixl.0.iflib.txq3.mbuf_defrag_failed: 0

dev.ixl.0.iflib.txq3.m_pullups: 4578827

dev.ixl.0.iflib.txq3.mbuf_defrag: 0

dev.ixl.0.iflib.txq3.cpu: 6

dev.ixl.0.iflib.txq2.r_abdications: 0

dev.ixl.0.iflib.txq2.r_restarts: 0

dev.ixl.0.iflib.txq2.r_stalls: 0

dev.ixl.0.iflib.txq2.r_starts: 10633370

dev.ixl.0.iflib.txq2.r_drops: 0

dev.ixl.0.iflib.txq2.r_enqueues: 10653511

dev.ixl.0.iflib.txq2.ring_state: pidx_head: 1863 pidx_tail: 1863 cidx: 1863 state: IDLE

dev.ixl.0.iflib.txq2.txq_cleaned: 20820188

dev.ixl.0.iflib.txq2.txq_processed: 20820196

dev.ixl.0.iflib.txq2.txq_in_use: 8

dev.ixl.0.iflib.txq2.txq_cidx_processed: 228

dev.ixl.0.iflib.txq2.txq_cidx: 220

dev.ixl.0.iflib.txq2.txq_pidx: 228

dev.ixl.0.iflib.txq2.no_tx_dma_setup: 0

dev.ixl.0.iflib.txq2.txd_encap_efbig: 0

dev.ixl.0.iflib.txq2.tx_map_failed: 0

dev.ixl.0.iflib.txq2.no_desc_avail: 0

dev.ixl.0.iflib.txq2.mbuf_defrag_failed: 0

dev.ixl.0.iflib.txq2.m_pullups: 10415382

dev.ixl.0.iflib.txq2.mbuf_defrag: 0

dev.ixl.0.iflib.txq2.cpu: 4

dev.ixl.0.iflib.txq1.r_abdications: 0

dev.ixl.0.iflib.txq1.r_restarts: 0

dev.ixl.0.iflib.txq1.r_stalls: 0

dev.ixl.0.iflib.txq1.r_starts: 18108957

dev.ixl.0.iflib.txq1.r_drops: 0

dev.ixl.0.iflib.txq1.r_enqueues: 18135709

dev.ixl.0.iflib.txq1.ring_state: pidx_head: 0669 pidx_tail: 0669 cidx: 0669 state: IDLE

dev.ixl.0.iflib.txq1.txq_cleaned: 35744277

dev.ixl.0.iflib.txq1.txq_processed: 35744285

dev.ixl.0.iflib.txq1.txq_in_use: 9

dev.ixl.0.iflib.txq1.txq_cidx_processed: 541

dev.ixl.0.iflib.txq1.txq_cidx: 533

dev.ixl.0.iflib.txq1.txq_pidx: 542

dev.ixl.0.iflib.txq1.no_tx_dma_setup: 0

dev.ixl.0.iflib.txq1.txd_encap_efbig: 0

dev.ixl.0.iflib.txq1.tx_map_failed: 0

dev.ixl.0.iflib.txq1.no_desc_avail: 0

dev.ixl.0.iflib.txq1.mbuf_defrag_failed: 0

dev.ixl.0.iflib.txq1.m_pullups: 17863485

dev.ixl.0.iflib.txq1.mbuf_defrag: 0

dev.ixl.0.iflib.txq1.cpu: 2

dev.ixl.0.iflib.txq0.r_abdications: 0

dev.ixl.0.iflib.txq0.r_restarts: 0

dev.ixl.0.iflib.txq0.r_stalls: 0

dev.ixl.0.iflib.txq0.r_starts: 5665998

dev.ixl.0.iflib.txq0.r_drops: 0

dev.ixl.0.iflib.txq0.r_enqueues: 5676493

dev.ixl.0.iflib.txq0.ring_state: pidx_head: 1485 pidx_tail: 1485 cidx: 1485 state: IDLE

dev.ixl.0.iflib.txq0.txq_cleaned: 10681309

dev.ixl.0.iflib.txq0.txq_processed: 10681317

dev.ixl.0.iflib.txq0.txq_in_use: 8

dev.ixl.0.iflib.txq0.txq_cidx_processed: 997

dev.ixl.0.iflib.txq0.txq_cidx: 989

dev.ixl.0.iflib.txq0.txq_pidx: 997

dev.ixl.0.iflib.txq0.no_tx_dma_setup: 0

dev.ixl.0.iflib.txq0.txd_encap_efbig: 0

dev.ixl.0.iflib.txq0.tx_map_failed: 0

dev.ixl.0.iflib.txq0.no_desc_avail: 0

dev.ixl.0.iflib.txq0.mbuf_defrag_failed: 0

dev.ixl.0.iflib.txq0.m_pullups: 5253756

dev.ixl.0.iflib.txq0.mbuf_defrag: 0

dev.ixl.0.iflib.txq0.cpu: 0

dev.ixl.0.iflib.override_nrxds: 0

dev.ixl.0.iflib.override_ntxds: 0

dev.ixl.0.iflib.allocated_msix_vectors: 5

dev.ixl.0.iflib.use_extra_msix_vectors: 0

dev.ixl.0.iflib.use_logical_cores: 0

dev.ixl.0.iflib.separate_txrx: 0

dev.ixl.0.iflib.core_offset: 0

dev.ixl.0.iflib.tx_abdicate: 0

dev.ixl.0.iflib.rx_budget: 0

dev.ixl.0.iflib.disable_msix: 0

dev.ixl.0.iflib.override_qs_enable: 0

dev.ixl.0.iflib.override_nrxqs: 0

dev.ixl.0.iflib.override_ntxqs: 0

dev.ixl.0.iflib.driver_version: 2.3.3-k

dev.ixl.0.%iommu: rid=0x100

dev.ixl.0.%parent: pci1

dev.ixl.0.%pnpinfo: vendor=0x8086 device=0x15ff subvendor=0x8086 subdevice=0x0006 class=0x020000

dev.ixl.0.%location: slot=0 function=0 dbsf=pci0:1:0:0 handle=\_SB_.PCI0.PEG0.PEGP

dev.ixl.0.%driver: ixl

dev.ixl.0.%desc: Intel(R) Ethernet Controller X710 for 10GBASE-T - 2.3.3-k

dev.ixl.%parent:

ixl0@pci0:1:0:0: class=0x020000 rev=0x02 hdr=0x00 vendor=0x8086 device=0x15ff subvendor=0x8086 subdevice=0x0006

vendor = 'Intel Corporation'

device = 'Ethernet Controller X710 for 10GBASE-T'

class = network

subclass = ethernet

bar [10] = type Prefetchable Memory, range 64, base 0xfbe000000, size 16777216, enabled

bar [1c] = type Prefetchable Memory, range 64, base 0xfbf018000, size 32768, enabled

cap 01[40] = powerspec 3 supports D0 D3 current D0

cap 05[50] = MSI supports 1 message, 64 bit, vector masks

cap 11[70] = MSI-X supports 129 messages, enabled Table in map 0x1c[0x0], PBA in map 0x1c[0x1000]

cap 10[a0] = PCI-Express 2 endpoint max data 128(2048) FLR max read 512 link x8(x8) speed 8.0(8.0) ASPM L1(L1)

cap 03[e0] = VPD

ecap 0001[100] = AER 2 0 fatal 0 non-fatal 1 corrected

ecap 0003[140] = Serial 1 8477c9ffff9196b4

ecap 000e[150] = ARI 1

ecap 0010[160] = SR-IOV 1 IOV disabled, Memory Space disabled, ARI disabled 0 VFs configured out of 32 supported First VF RID Offset 0x0110, VF RID Stride 0x0001 VF Device ID 0x154c Page Sizes: 4096 (enabled), 8192, 65536, 262144, 1048576, 4194304

ecap 0017[1a0] = TPH Requester 1

ecap 000d[1b0] = ACS 1 Source Validation unavailable, Translation Blocking unavailable P2P Req Redirect unavailable, P2P Cmpl Redirect unavailable P2P Upstream Forwarding unavailable, P2P Egress Control unavailable P2P Direct Translated unavailable, Enhanced Capability unavailable

ecap 0019[1d0] = PCIe Sec 1 lane errors 0

@dsl-ottawa

Is there a way to test pfSense WAN to LAN throughput without using PPPoE?

I don't remember exactly what I set up back then, but it was something like an iPerf server on the WAN side (hooked to a 10 Gbit switch) with a 10 Gbit interface, and of course the same on the LAN side, but as the client...

-

@stephenw10 Yup yup

dev.ixl.0.pf.txq07.packets: 8401098

dev.ixl.0.pf.txq06.packets: 7316577

dev.ixl.0.pf.txq05.packets: 3704542

dev.ixl.0.pf.txq04.packets: 4271522

dev.ixl.0.pf.txq03.packets: 3784653

dev.ixl.0.pf.txq02.packets: 7219027

dev.ixl.0.pf.txq01.packets: 4471821

dev.ixl.0.pf.txq00.packets: 2694355

dev.ixl.0.pf.rxq07.packets: 0

dev.ixl.0.pf.rxq06.packets: 0

dev.ixl.0.pf.rxq05.packets: 0

dev.ixl.0.pf.rxq04.packets: 0

dev.ixl.0.pf.rxq03.packets: 0

dev.ixl.0.pf.rxq02.packets: 0

dev.ixl.0.pf.rxq01.packets: 0

dev.ixl.0.pf.rxq00.packets: 47313613