25.07 - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down

-

But if dpinger binds to the WAN address then that traffic should always go via the WAN gateway because the rule that passes it is:

    pass out route-to ( ix2 192.168.191.1 ) from 192.168.191.2 to !192.168.191.0/24 ridentifier 1000010012 keep state allow-opts label "let out anything from firewall host itself"

That could be broken by a user outbound rule, since it's not quick, but I don't see one here.
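
A minimal Python sketch of which packets that rule catches. It assumes only the source/destination match matters: interfaces, protocols, "quick" handling and state tracking are left out. The name forced_route is illustrative and not anything in pfSense:

```python
import ipaddress
from typing import Optional, Tuple

# Address match of the rule quoted above (simplified model, not pf itself):
#   pass out route-to ( ix2 192.168.191.1 ) from 192.168.191.2 to !192.168.191.0/24
RULE_SOURCE = ipaddress.ip_address("192.168.191.2")
RULE_EXCLUDED_DST = ipaddress.ip_network("192.168.191.0/24")
RULE_ROUTE_TO = ("ix2", "192.168.191.1")


def forced_route(src: str, dst: str) -> Optional[Tuple[str, str]]:
    """Return the (interface, next hop) the rule would force, or None if it does not match."""
    if ipaddress.ip_address(src) != RULE_SOURCE:
        return None  # only traffic sourced from the WAN2 address matches
    if ipaddress.ip_address(dst) in RULE_EXCLUDED_DST:
        return None  # "to !192.168.191.0/24" leaves on-link traffic alone
    return RULE_ROUTE_TO


print(forced_route("192.168.191.2", "8.8.8.8"))        # ('ix2', '192.168.191.1')
print(forced_route("192.168.191.2", "192.168.191.1"))  # None
print(forced_route("74.101.221.156", "8.8.8.8"))       # None
```

So in this model dpinger's probes from 192.168.191.2 to 8.8.8.8 are matched and forced to ix2 via 192.168.191.1.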

I also don't see a state for 8.8.8.8 icmp from 192.168.191.2 in either status output?

-

@dennypage I see what you're saying, but has something changed in FreeBSD 15 that could be affecting this? I am fairly sure this was all working as expected before.

I just ran another test. I pulled my WAN2 cable out and ran this command:

    # dpinger -r 1000 -f -i WAN2_test -B 192.168.191.2 -s 1000 -d 1 -D 500 -L 75 1.0.0.1
    send_interval 1000ms loss_interval 4000ms time_period 60000ms report_interval 1000ms data_len 1 alert_interval 1000ms latency_alarm 500ms loss_alarm 75% alarm_hold 10000ms dest_addr 1.0.0.1 bind_addr 192.168.191.2 identifier "WAN2_test "
    WAN2_test 0 0 0
    WAN2_test 0 0 0
    WAN2_test 0 0 0
    WAN2_test 0 0 100
    WAN2_test 1.0.0.1: Alarm latency 0us stddev 0us loss 100%
    WAN2_test 0 0 100
    WAN2_test 0 0 100
    WAN2_test 0 0 100
    ...

So, 100% packet loss: gateway down. Then, without the -B switch:

    # dpinger -r 1000 -f -i WAN2_test -s 1000 -d 1 -D 500 -L 75 1.0.0.1
    send_interval 1000ms loss_interval 4000ms time_period 60000ms report_interval 1000ms data_len 1 alert_interval 1000ms latency_alarm 500ms loss_alarm 75% alarm_hold 10000ms dest_addr 1.0.0.1 bind_addr (none) identifier "WAN2_test "
    WAN2_test 3309 0 0
    WAN2_test 3517 208 0
    WAN2_test 3421 222 0
    WAN2_test 3464 210 0
    WAN2_test 3524 230 0
    WAN2_test 3565 231 0
    WAN2_test 3636 269 0
    WAN2_test 3707 314 0
    WAN2_test 3709 303 0
    WAN2_test 3706 280 0
    WAN2_test 3760 327 0
    ...

(pings succeed)
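
dpinger's periodic report lines are the identifier given with -i, followed by average latency and latency standard deviation (both in microseconds) and loss percentage. A small Python sketch for pulling those numbers out of captured output like the two runs above, assuming that four-field layout and treating anything else (alarm lines, sendto errors) as a non-report line:

```python
from typing import NamedTuple, Optional


class Report(NamedTuple):
    identifier: str
    latency_us: int  # average latency, microseconds
    stddev_us: int   # latency standard deviation, microseconds
    loss_pct: int    # packet loss, percent


def parse_report(line: str) -> Optional[Report]:
    """Parse one periodic dpinger report line; return None for alarm/error lines."""
    parts = line.split()
    if len(parts) != 4 or not all(p.isdigit() for p in parts[1:]):
        return None
    ident, latency, stddev, loss = parts
    return Report(ident, int(latency), int(stddev), int(loss))


bound = parse_report("WAN2_test 0 0 100")        # with -B 192.168.191.2, WAN2 unplugged
unbound = parse_report("WAN2_test 3517 208 0")   # without -B
print(bound.loss_pct, unbound.latency_us / 1000)  # 100 3.517
```

Read this way, the bound probe reports 100% loss while the unbound one sees roughly 3.5 ms, in line with the 1.0.0.1 pings via ix0 in the route/ping output that follows.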

    # route -n get 1.0.0.1
       route to: 1.0.0.1
    destination: 0.0.0.0
           mask: 0.0.0.0
        gateway: 74.101.221.1
            fib: 0
      interface: ix0
          flags: <UP,GATEWAY,DONE,STATIC>
     recvpipe sendpipe ssthresh rtt,msec mtu weight expire
     0 0 0 0 1500 1 0
    # netstat -rn -f inet | grep -E '1\.0\.0\.|0\.0\.0\.0'
    0.0.0.0 74.101.221.1 UGS ix0
    # ping 1.0.0.1
    PING 1.0.0.1 (1.0.0.1): 56 data bytes
    64 bytes from 1.0.0.1: icmp_seq=0 ttl=60 time=3.363 ms
    64 bytes from 1.0.0.1: icmp_seq=1 ttl=60 time=4.120 ms
    64 bytes from 1.0.0.1: icmp_seq=2 ttl=60 time=2.914 ms
    ^C
    --- 1.0.0.1 ping statistics ---
    3 packets transmitted, 3 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 2.914/3.466/4.120/0.498 ms

-

With WAN2 up, check the state opened by dpinger and the rule that opened it.

It should show a state on ix2 from 192.168.191.2, opened by the default allow-out rule above.

But I suspect it won't, because that traffic should not go down when WAN1 does.

-

@stephenw10 said:

I also don't see a state for 8.8.8.8 icmp from 192.168.191.2 in either status output?

State for 8.8.8.8:

    [25.07-RC][root@r1.lan]/root: pfctl -vvss | grep -A3 'ix2.*8.8.8.8'
    ix2 icmp 192.168.191.2:18153 -> 8.8.8.8:8 0:0
       age 00:48:33, expires in 00:00:10, 581:581 pkts, 16849:16849 bytes, rule 107, allow-opts
       id: 52c2976800000000 creatorid: 7d506d72 route-to: 192.168.191.1@ix2 origif: ix0

Rules (anything look wrong here?):

    [25.07-RC][root@r1.lan]/root: pfctl -vvsr | grep -A3 '@107'
    @107 pass out route-to (ix2 192.168.191.1) inet from 192.168.191.2 to ! 192.168.191.0/24 flags S/SA keep state (if-bound) allow-opts label "let out anything from firewall host itself" ridentifier 1000010023
      [ Evaluations: 423034 Packets: 688 Bytes: 40320 States: 0 ]
      [ Inserted: uid 0 pid 0 State Creations: 19 ]
      [ Last Active Time: Thu Jul 31 20:45:00 2025 ]
    [25.07-RC][root@r1.lan]/root: pfctl -sr | grep 'pass.*192.168.191'
    pass out route-to (ix2 192.168.191.1) inet from 192.168.191.2 to ! 192.168.191.0/24 flags S/SA keep state (if-bound) allow-opts label "let out anything from firewall host itself" ridentifier 1000010023
    pass in quick on ix2 reply-to (ix2 192.168.191.1) inet from <OPT3__NETWORK> to any flags S/SA keep state (if-bound) label "USER_RULE: allow inet" label "id:1561436253" ridentifier 1561436253
    [25.07-RC][root@r1.lan]/root: cat /tmp/rules.debug | grep 'pass.*192.168.191'
    pass out route-to ( ix2 192.168.191.1 ) from 192.168.191.2 to !192.168.191.0/24 ridentifier 1000010023 keep state allow-opts label "let out anything from firewall host itself"
    pass in quick on $WAN2_RUT reply-to ( ix2 192.168.191.1 ) inet from $OPT3__NETWORK to any ridentifier 1561436253 keep state label "USER_RULE: allow inet" label "id:1561436253"

-

No, that looks exactly like what I would expect. It's being passed by the route-to rule and forced via the WAN2 gateway. As such, I would not expect it to fail if WAN1 is disconnected.

However I assume it does fail?

-

@stephenw10 Yes, pings out of WAN2 start to fail as soon as I pull the WAN1 cable...

    [25.07-RC][root@r1.lan]/root: dpinger -r 1000 -f -i WAN2_test -B 192.168.191.2 -s 1000 -d 1 -D 500 -L 75 1.0.0.1
    send_interval 1000ms loss_interval 4000ms time_period 60000ms report_interval 1000ms data_len 1 alert_interval 1000ms latency_alarm 500ms loss_alarm 75% alarm_hold 10000ms dest_addr 1.0.0.1 bind_addr 192.168.191.2 identifier "WAN2_test "
    WAN2_test 69570 0 0
    WAN2_test 70163 649 0
    WAN2_test 68235 2777 0
    WAN2_test 65246 5713 0
    WAN2_test 60112 11467 0
    WAN2_test 63049 12360 0
    WAN2_test 62877 11451 0
    WAN2_test 60334 14108 0
    WAN2_test 60794 13456 0
    WAN2_test 59918 13124 0
    WAN2_test 60180 12596 0
    WAN2_test 59935 12134 0
    WAN2_test 59935 12134 0
    WAN2_test 59567 11766 0
    WAN2_test 59506 11371 0
    WAN2_test 58916 11244 0
    WAN2_test 58469 11053 0
    WAN2_test 57181 11984 0
    WAN2_test 57413 11703 0
    WAN2_test 57553 11424 0
    WAN2_test 58340 11692 0
    WAN2_test 58237 11431 0
    WAN2_test 58841 11538 0
    ( here is where I yank the WAN1 cable... )
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 0
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 0
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 0
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 0
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 4
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 11
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 14
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 17
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 20
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 23
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 25
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 28
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 30
    WAN2_test 1.0.0.1: sendto error: 65
    WAN2_test 58841 11538 32

-

Hmm, and presumably it still logs the WAN2 gateway going down?

Does the state still exist after pulling WAN1? Still on ix2?

To be clear, does it work as expected if you allow it to create the static route?

-

@luckman212 said in 25.07 RC - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down:

I see what you're saying, but has something changed in FreeBSD 15 that could be affecting this?

Not that I am aware of. The general behavior of routing in Unix systems goes back to the days of System III.
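
A simplified Python model of that classic behaviour, consistent with the "origif: ix0" in the pf state and the "sendto error: 65" (EHOSTUNREACH on FreeBSD) that dpinger printed once WAN1 was pulled: the kernel chooses a route by destination first, and a pf route-to rule can only redirect a packet that already got a route. The tables and the wan2_rule stand-in are built from addresses in this thread; this is an illustration under those assumptions, not FreeBSD or pfSense code:

```python
import ipaddress
from typing import Callable, List, Optional, Tuple

FREEBSD_EHOSTUNREACH = 65  # errno 65 on FreeBSD; dpinger shows it as "sendto error: 65"

Route = Tuple[str, Optional[str], str]  # (prefix, gateway or None if on-link, interface)
RouteTo = Callable[[str, str], Optional[Tuple[str, str]]]


def lookup(table: List[Route], dst: str) -> Optional[Route]:
    """Longest-prefix match on the destination only; the bound source is not consulted."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in table if addr in ipaddress.ip_network(r[0])]
    return max(matches, key=lambda r: ipaddress.ip_network(r[0]).prefixlen, default=None)


def send(table: List[Route], src: str, dst: str,
         route_to: Optional[RouteTo] = None) -> Tuple[str, str]:
    """Simplified output path for a locally generated packet.

    1. The kernel picks a route by destination; with no match the send fails
       with EHOSTUNREACH before any pf rule sees the packet.
    2. Only then may a pf route-to rule replace the chosen interface/next hop
       (the kernel's original choice is what pf reports as "origif").
    """
    route = lookup(table, dst)
    if route is None:
        raise OSError(FREEBSD_EHOSTUNREACH, "No route to host")
    _prefix, gateway, ifname = route
    if route_to is not None:
        forced = route_to(src, dst)
        if forced is not None:
            return forced
    return (ifname, gateway if gateway is not None else dst)


def wan2_rule(src: str, dst: str) -> Optional[Tuple[str, str]]:
    """Stand-in for the 'let out anything from firewall host itself' rule on WAN2."""
    transit = ipaddress.ip_network("192.168.191.0/24")
    if src == "192.168.191.2" and ipaddress.ip_address(dst) not in transit:
        return ("ix2", "192.168.191.1")
    return None


WAN1_UP = [("0.0.0.0/0", "74.101.221.1", "ix0"), ("192.168.191.0/24", None, "ix2")]
WAN1_PULLED = [("192.168.191.0/24", None, "ix2")]  # default route gone, no host route

print(send(WAN1_UP, "192.168.191.2", "8.8.8.8", wan2_rule))  # ('ix2', '192.168.191.1')
try:
    send(WAN1_PULLED, "192.168.191.2", "8.8.8.8", wan2_rule)
except OSError as exc:
    print(exc.errno)  # 65
```

In this model, a host route for the monitor address (which pfSense adds unless dpinger_dont_add_static_route is set) would let the lookup succeed even with the default route gone.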

-

@luckman212 said in 25.07 RC - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down:

    /usr/local/bin/dpinger -S -r 0 -i WAN2_RUT -B 192.168.191.2 -p /var/run/dpinger_WAN2_RUT~192.168.191.2~8.8.8.8.pid -u /var/run/dpinger_WAN2_RUT~192.168.191.2~8.8.8.8.sock -C /etc/rc.gateway_alarm -d 1 -s 5000 -l 2000 -t 120000 -A 10000 -D 500 -L 75 8.8.8.8

So, this attracts my attention... Why is the bind address in private address space? Is this an ISP private shared space?
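
For comparing this pfSense-launched instance with the manual test runs earlier in the thread, a small Python sketch that turns a dpinger command line into named settings. The setting names follow the ones dpinger echoes at startup where it prints them (send_interval, bind_addr, ...); pidfile, usocket, alert_cmd, foreground and syslog are descriptive labels chosen here, and only the flags that appear in this thread are handled:

```python
import shlex
from typing import Dict, Union

# Flags that take a value, mapped to setting names.
VALUE_FLAGS = {
    "-B": "bind_addr",
    "-s": "send_interval",    # ms
    "-l": "loss_interval",    # ms
    "-t": "time_period",      # ms
    "-r": "report_interval",  # ms
    "-d": "data_len",         # bytes of ICMP payload
    "-A": "alert_interval",   # ms
    "-D": "latency_alarm",    # ms
    "-L": "loss_alarm",       # percent
    "-i": "identifier",
    "-p": "pidfile",
    "-u": "usocket",
    "-C": "alert_cmd",
}
# Flags that take no value.
BOOL_FLAGS = {"-f": "foreground", "-S": "syslog"}


def parse_dpinger_args(cmdline: str) -> Dict[str, Union[str, bool]]:
    """Map a dpinger command line onto named settings plus dest_addr."""
    args = shlex.split(cmdline)
    if args and args[0].endswith("dpinger"):
        args = args[1:]  # drop the program name
    settings: Dict[str, Union[str, bool]] = {}
    i = 0
    while i < len(args):
        arg = args[i]
        if arg in BOOL_FLAGS:
            settings[BOOL_FLAGS[arg]] = True
            i += 1
        elif arg in VALUE_FLAGS:
            if i + 1 >= len(args):
                raise ValueError(f"{arg} needs a value")
            settings[VALUE_FLAGS[arg]] = args[i + 1]
            i += 2
        else:
            settings["dest_addr"] = arg  # the single positional argument
            i += 1
    return settings


cmd = ("/usr/local/bin/dpinger -S -r 0 -i WAN2_RUT -B 192.168.191.2 "
       "-p /var/run/dpinger_WAN2_RUT~192.168.191.2~8.8.8.8.pid "
       "-u /var/run/dpinger_WAN2_RUT~192.168.191.2~8.8.8.8.sock "
       "-C /etc/rc.gateway_alarm -d 1 -s 5000 -l 2000 -t 120000 -A 10000 -D 500 -L 75 8.8.8.8")
s = parse_dpinger_args(cmd)
print(s["send_interval"], s["loss_interval"], s["time_period"], s["dest_addr"])
# 5000 2000 120000 8.8.8.8
```

For this instance, pfSense probes WAN2's monitor every 5 s and averages over a 2-minute window, so it reacts more slowly than the 1 s manual tests.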

-

@luckman212 said in 25.07 RC - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down:

    dpinger -r 1000 -f -i WAN2_test -B 192.168.191.2 -s 1000 -d 1 -D 500 -L 75 1.0.0.1

I don't understand why you would expect this to work. The source address for the ICMP packets will be 192.168.191.2. This is private address space, so Cloudflare, who are very security conscious, should simply drop the inbound packet if they received it from an exterior network. No possibility of a response.

When WAN2 is up, the only way I can think of ICMP to Cloudflare working is if the 192.168.191.0/24 (or some other masking) is a shared private space with the ISP or modem, and some entity (presumably the ISP) is performing NAT on the outbound packets.

FWIW, my WAN2 cellular connection operates in exactly this way, except it is in the 100.64.0.0/10 space. The carrier does the NATing in my case. And coincidentally, I also use 1.0.0.1 as my monitor address for that connection...
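
The distinction in code form: a Python sketch, using the standard ipaddress module, that sorts source addresses into RFC 1918 private space, RFC 6598 shared (CGNAT) space, and globally routable space. Only the last kind can be answered directly from the internet; the first two depend on some downstream device NATing them. The 100.64.12.34 sample is a hypothetical address:

```python
import ipaddress

RFC1918 = [ipaddress.ip_network(n) for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]
RFC6598_SHARED = ipaddress.ip_network("100.64.0.0/10")  # carrier-grade NAT shared space


def classify(addr: str) -> str:
    ip = ipaddress.ip_address(addr)
    if any(ip in net for net in RFC1918):
        return "rfc1918"   # needs NAT before it can be used on the internet
    if ip in RFC6598_SHARED:
        return "cgnat"     # the carrier is expected to NAT it
    if ip.is_global:
        return "global"    # directly routable on the internet
    return "special"       # loopback, link-local, documentation ranges, ...


for a in ("192.168.191.2", "100.64.12.34", "74.101.221.156", "1.0.0.1"):
    print(a, classify(a))
```

192.168.191.2 lands in the first bucket, so probes sourced from it only work if something between pfSense and the internet translates them.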

-

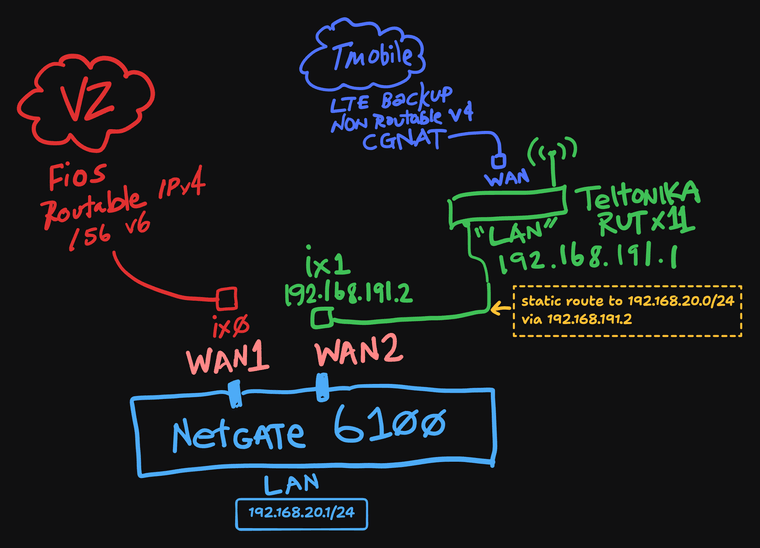

@dennypage I should have provided a diagram. This is not related to Cloudflare. I am using 192.168.191.0/24 as a transit network to connect to an LTE router that receives a CGNAT IPv4 address from T-Mobile.

-

@luckman212 said in 25.07 RC - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down:

I should have provided a diagram. This is not related to Cloudflare. I am using 192.168.191.0/24 as a transit network to connect to an LTE router that receives a CGNAT v4 from T-mobile.

Yes, it's related to Cloudflare. That's who 1.0.0.1 is.

So yes, you are dependent upon NAT from T-Mobile to make 192.168.191.0/24 work. There is no way to use the 192.168.191.2 source address on the Verizon network, and vice versa, unless of course you have BGP (which would not work with CGNAT anyway).

With multiple WAN connections, you need the static routes assigned to ensure everything goes out the correct interface.
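
A minimal Python sketch of why the static route matters, using plain longest-prefix matching (pf route-to aside) with routes taken from this thread: a /32 host route for the monitor address beats the default route for that one destination, and still matches when the default route is gone. It also shows the side effect raised later in the thread: every packet to 8.8.8.8, DNS included, would follow that host route. The MONITOR_HOST_ROUTE entry is an assumption about roughly what pfSense installs for a monitor IP:

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    prefix: str
    gateway: Optional[str]  # None means on-link
    iface: str


DEFAULT_VIA_WAN1 = Route("0.0.0.0/0", "74.101.221.1", "ix0")
WAN2_SUBNET = Route("192.168.191.0/24", None, "ix2")
MONITOR_HOST_ROUTE = Route("8.8.8.8/32", "192.168.191.1", "ix2")  # assumed static route


def best_route(table: List[Route], dst: str) -> Optional[Route]:
    """Pick the matching route with the longest prefix, or None if nothing matches."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in table if addr in ipaddress.ip_network(r.prefix)]
    return max(matches, key=lambda r: ipaddress.ip_network(r.prefix).prefixlen, default=None)


def egress(table: List[Route], dst: str) -> Optional[str]:
    route = best_route(table, dst)
    return route.iface if route else None


print(egress([DEFAULT_VIA_WAN1, WAN2_SUBNET], "8.8.8.8"))                      # ix0
print(egress([DEFAULT_VIA_WAN1, WAN2_SUBNET, MONITOR_HOST_ROUTE], "8.8.8.8"))  # ix2
print(egress([DEFAULT_VIA_WAN1, WAN2_SUBNET, MONITOR_HOST_ROUTE], "8.8.4.4"))  # ix0
print(egress([WAN2_SUBNET], "8.8.8.8"))                                        # None
print(egress([WAN2_SUBNET, MONITOR_HOST_ROUTE], "8.8.8.8"))                    # ix2
```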

-

Denny, thank you for your help. I think we are somehow now talking about several different things.

I have "normal" outbound NAT rules on both WAN1 and WAN2. So by the time a packet arrives on Verizon's or T-Mobile's network, its source address has already been rewritten to the public WAN side of either router in the diagram, right? So Verizon, T-Mobile, Cloudflare, etc. don't know or care about 192.168.191.0/24. It's up to the routers (my 6100 as well as the Teltonika RUTX11, which of course also does its own NAT) to keep track of the states (I'm sure I don't need to tell you any of this).
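
A toy Python model of the chain described here: each hop rewrites the source address and remembers the translation so replies can be mapped back. Only 192.168.191.2 comes from the thread; the LAN client (192.168.20.50), the RUTX11's cellular address (100.64.0.10) and the T-Mobile public address (203.0.113.7) are hypothetical placeholders, and real NAT also rewrites ports and ICMP IDs, which is left out:

```python
from dataclasses import dataclass, replace
from typing import List, Tuple


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str


# (hop, address that hop rewrites the source to); all but 192.168.191.2 are hypothetical
HOPS = [
    ("pfSense outbound NAT on WAN2", "192.168.191.2"),
    ("RUTX11 NAT", "100.64.0.10"),
    ("T-Mobile CGNAT", "203.0.113.7"),
]

State = Tuple[str, str, str]  # (hop, translated source, original source)


def outbound(pkt: Packet, hops: List[Tuple[str, str]]) -> Tuple[Packet, List[State]]:
    """Apply each source NAT in order; return the final packet and the NAT states."""
    states: List[State] = []
    for name, new_src in hops:
        states.append((name, new_src, pkt.src))
        pkt = replace(pkt, src=new_src)
    return pkt, states


def inbound(reply_dst: str, states: List[State]) -> str:
    """Undo the translations in reverse order, as each hop's state table would."""
    for name, translated, original in reversed(states):
        if reply_dst != translated:
            raise ValueError(f"{name}: no state for {reply_dst}")
        reply_dst = original
    return reply_dst


final, states = outbound(Packet("192.168.20.50", "8.8.4.4"), HOPS)
print(final.src)                   # 203.0.113.7
print(inbound(final.src, states))  # 192.168.20.50
```

The outside world only ever sees the last hop's address; 192.168.191.0/24 stays between the 6100 and the RUTX11.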

Yes, I am aware that in my diagram I am "double-NATing" on the WAN2 side. I know the limitations of that, but I prefer it to IPPT (IP passthrough) mode, which has not been stable in my testing: T-Mobile rotates IPs very frequently on their LTE network, and when pfSense runs its various rc.newwanip* scripts it can be mildly disruptive.

All that being said, I again want to point out that all of this routing/NATing was and is working fine, as long as I don't unplug my WAN1 cable. That's the strange part, and it is new behavior that wasn't happening before I installed 25.07.

Side note: I find Cloudflare's anycast DNS IPs (1.1.1.1, 1.0.0.1) highly unreliable for ICMP; they frequently drop packets and show wide latency fluctuations. I don't recommend them as dpinger monitor IPs. YMMV.

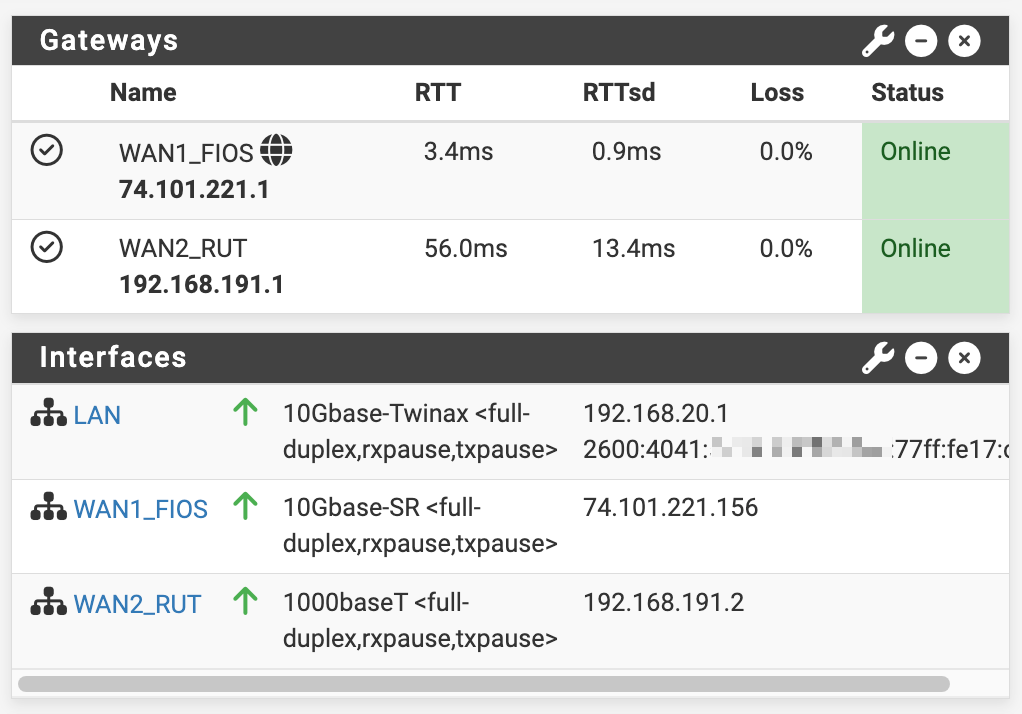

Here are a few more screenshots:

Some pings (with source address binding) and routes

Note the hugely different latencies: the packets are clearly traversing the LTE network in the first ping and FiOS in the later ones.

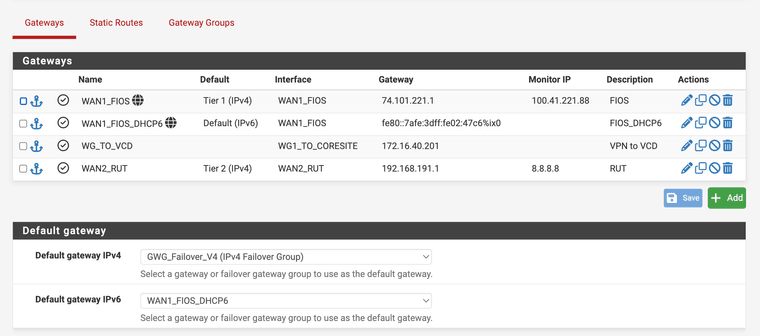

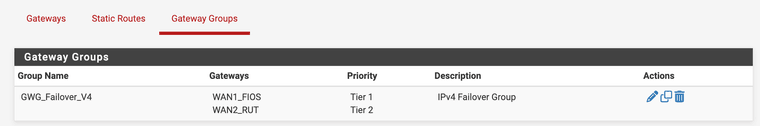
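
The latency gap is easy to quantify from the round-trip min/avg/max/stddev summary lines of the pings that follow. A short Python sketch, assuming FreeBSD ping's summary format; the three summary strings are copied from that output:

```python
import re
from typing import Dict, Optional

SUMMARY = re.compile(
    r"round-trip min/avg/max/stddev = "
    r"([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms"
)


def rtt_summary(ping_output: str) -> Optional[Dict[str, float]]:
    """Return min/avg/max/stddev (ms) from a ping summary line, or None if absent."""
    m = SUMMARY.search(ping_output)
    if m is None:
        return None
    return dict(zip(("min", "avg", "max", "stddev"), map(float, m.groups())))


# Keyed by the -S source address used for each run.
runs = {
    "192.168.191.2": "round-trip min/avg/max/stddev = 57.283/58.895/61.948/1.825 ms",
    "192.168.20.1": "round-trip min/avg/max/stddev = 3.185/3.538/3.940/0.324 ms",
    "74.101.221.156": "round-trip min/avg/max/stddev = 2.823/2.976/3.074/0.094 ms",
}
for source, line in runs.items():
    print(source, rtt_summary(line)["avg"])
```

Roughly 59 ms from the WAN2 address versus about 3 ms from the other two is the difference being pointed at.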

    [25.07-RC][root@r1.lan]/root: ping -S 192.168.191.2 8.8.4.4
    PING 8.8.4.4 (8.8.4.4) from 192.168.191.2: 56 data bytes
    64 bytes from 8.8.4.4: icmp_seq=0 ttl=112 time=58.607 ms
    64 bytes from 8.8.4.4: icmp_seq=1 ttl=112 time=57.743 ms
    64 bytes from 8.8.4.4: icmp_seq=2 ttl=112 time=61.948 ms
    64 bytes from 8.8.4.4: icmp_seq=3 ttl=112 time=57.283 ms
    ^C
    --- 8.8.4.4 ping statistics ---
    4 packets transmitted, 4 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 57.283/58.895/61.948/1.825 ms
    [25.07-RC][root@r1.lan]/root: ping -S 192.168.20.1 8.8.4.4
    PING 8.8.4.4 (8.8.4.4) from 192.168.20.1: 56 data bytes
    64 bytes from 8.8.4.4: icmp_seq=0 ttl=120 time=3.940 ms
    64 bytes from 8.8.4.4: icmp_seq=1 ttl=120 time=3.257 ms
    64 bytes from 8.8.4.4: icmp_seq=2 ttl=120 time=3.770 ms
    64 bytes from 8.8.4.4: icmp_seq=3 ttl=120 time=3.185 ms
    ^C
    --- 8.8.4.4 ping statistics ---
    4 packets transmitted, 4 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 3.185/3.538/3.940/0.324 ms
    [25.07-RC][root@r1.lan]/root: ping -S 74.101.221.156 8.8.4.4
    PING 8.8.4.4 (8.8.4.4) from 74.101.221.156: 56 data bytes
    64 bytes from 8.8.4.4: icmp_seq=0 ttl=120 time=3.074 ms
    64 bytes from 8.8.4.4: icmp_seq=1 ttl=120 time=2.985 ms
    64 bytes from 8.8.4.4: icmp_seq=2 ttl=120 time=2.823 ms
    64 bytes from 8.8.4.4: icmp_seq=3 ttl=120 time=3.022 ms
    ^C
    --- 8.8.4.4 ping statistics ---
    4 packets transmitted, 4 packets received, 0.0% packet loss
    round-trip min/avg/max/stddev = 2.823/2.976/3.074/0.094 ms
    [25.07-RC][root@r1.lan]/root: route -n get 192.168.191.1
       route to: 192.168.191.1
    destination: 192.168.191.0
           mask: 255.255.255.0
            fib: 0
      interface: ix2
          flags: <UP,DONE,PINNED>
     recvpipe sendpipe ssthresh rtt,msec mtu weight expire
     0 0 0 0 1500 1 0
    [25.07-RC][root@r1.lan]/root: route -n get 8.8.4.4
       route to: 8.8.4.4
    destination: 0.0.0.0
           mask: 0.0.0.0
        gateway: 74.101.221.1
            fib: 0
      interface: ix0
          flags: <UP,GATEWAY,DONE,STATIC>
     recvpipe sendpipe ssthresh rtt,msec mtu weight expire
     0 0 0 0 1500 1 0

Gateways + Interfaces

System > Routing

Routing > Gateway Groups

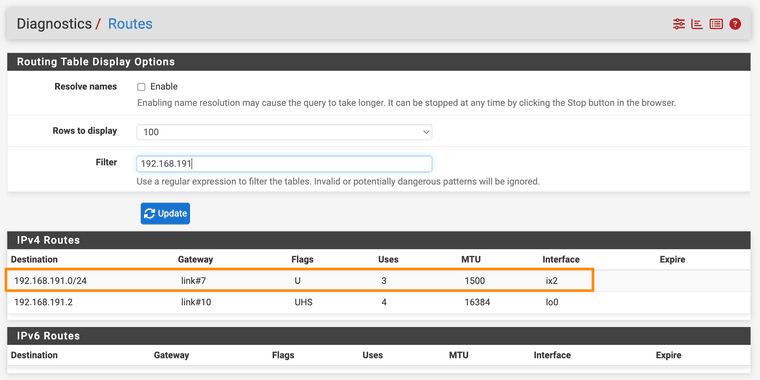

Diags > Routes showing route to 192.168.191.0/24 via ix2

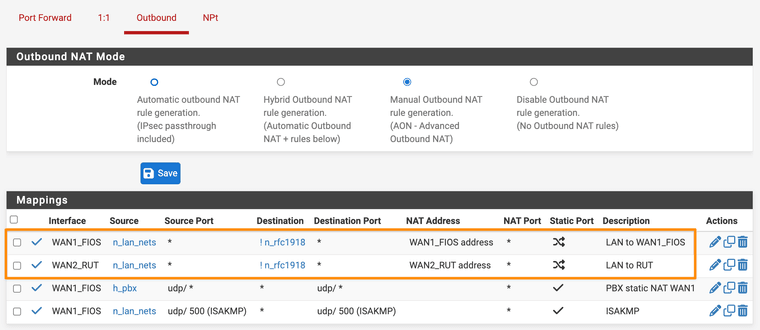

Outbound NAT rules, showing one per WAN interface for destination ! rfc1918

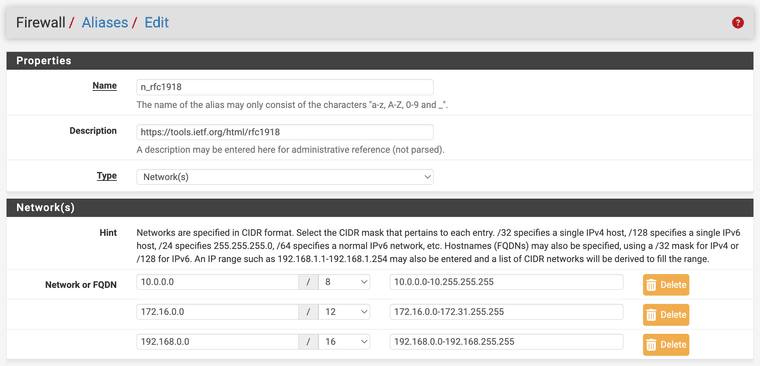

My "rfc1918" alias definition

(yes, I know 25.07 now has its own native _private4_ for the same...)

-

To be clear, does it work as expected if you allow it to create the static route?

Sorry @stephenw10, I missed this question before. Yes, I just tested it: I removed the dpinger_dont_add_static_route option from WAN2, and failover works normally again.

There should not need to be a static route to 8.8.8.8 bound to WAN2; in fact, requiring such a thing would be very problematic (all DNS queries would be routed over my slow LTE connection...). Also, to say it again, this used to work, so it feels like a regression. As I wrote above, ending up with a system that has literally no default route makes no sense.

-

@luckman212 said in 25.07 RC - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down:

Sorry @stephenw10 I missed this question before, yes I just tested it—removed the dpinger_dont_add_static_route option from WAN2, and failover works normally again.

This is as expected. I don't see Multi-WAN monitoring working correctly without having static routes for the monitor addresses. Btw, make sure you enable static routes for both gateways.

I cannot explain why it appeared to work previously. Perhaps some interaction with Floating States? @stephenw10 might have thoughts on this.

The only other possibility that occurs to me is that there might have been a configuration interaction if you were using the same destination as a DNS server with a gateway set in DNS Server Settings; see the doc on DNS Resolver and Multi-WAN. I can't speak directly to this because I've never used that style of configuration for DNS. Probably just a red herring, but worth a check.

The issue with routing all DNS queries via the wrong WAN interface can easily be addressed by not using the same address for DNS that you use for monitoring. Instead of Google or Cloudflare for DNS, I recommend using DNS over TLS with Quad9. Better security, and you don't expose your queries to your ISPs.
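
A quick Python sanity check along those lines: flag any gateway whose monitor IP is also configured as a DNS server. The WAN2_RUT monitor (8.8.8.8) is from the thread; the WAN1 gateway name, its 192.0.2.1 monitor and the first DNS list are hypothetical placeholders, and 9.9.9.9 / 149.112.112.112 are Quad9's resolvers:

```python
import ipaddress
from typing import Dict, Iterable, List


def monitor_dns_overlap(monitors: Dict[str, str], dns_servers: Iterable[str]) -> List[str]:
    """Return the gateways whose monitor IP is also used as a DNS server."""
    dns = {ipaddress.ip_address(d) for d in dns_servers}  # normalise textual forms
    return sorted(gw for gw, ip in monitors.items() if ipaddress.ip_address(ip) in dns)


monitors = {"WAN1": "192.0.2.1", "WAN2_RUT": "8.8.8.8"}  # WAN1 entry hypothetical
print(monitor_dns_overlap(monitors, ["8.8.8.8", "8.8.4.4"]))          # ['WAN2_RUT']
print(monitor_dns_overlap(monitors, ["9.9.9.9", "149.112.112.112"]))  # []
```

Any gateway it returns would have its DNS traffic pinned to that gateway's static route.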

Regardless, I'm glad you have it working.

-

I wouldn't consider this current state "working". I really want to know why things break so badly when I don't have a static route to both monitor IPs. As I showed in my screenshots and command output above, the routing works as expected without the routes.

The bug is that, when WAN1 loses its gateway, the gateway code for some reason ends up removing BOTH gateways, leaving the firewall without any default route AND apparently without a route to the next hop on WAN2 either. To me that seems like a regression, and not something I would consider ready for wide release.

I'd like to help debug this by whatever means necessary. As I mentioned in the top post, I'm happy to share my configs or invite Netgate to take a direct look via remote access, etc.

-

@luckman212 I consider the static routes to be required for correct Multi-WAN monitoring. Unless there is something that doesn't work correctly with the static routes in place, I don't see an issue worth pursuing.

However, I don't speak for Netgate -- perhaps they have a different opinion and will be willing to explore it further.

-

@luckman212 said in 25.07 RC - no default gateway being set if default route is set to a gateway group and the Tier 1 member interface is down:

the gateway code for some reason ends up removing BOTH gateways

Maybe it thinks it has no working gateways anymore, because the pings for all of them failed at the same time, since everything got routed through the now-down gateway. It looks like it is working as expected at this point. Maybe that checkbox should be removed.
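
A toy Python model of tiered failover, to show how that scenario would end with no default gateway: if WAN2's probes only get out while some kernel route to the monitor IP exists, pulling WAN1 (and losing its default route) marks both members down at once. The tiers follow the thread (WAN1 as Tier 1); the gateway name "WAN1" and the first-up-member selection are assumptions, and this is not pfSense's gateway-group code:

```python
from typing import Dict, Optional

# Gateway group: member -> tier (lower tier is preferred). "WAN1" is a hypothetical name.
GROUP: Dict[str, int] = {"WAN1": 1, "WAN2_RUT": 2}


def pick_default(group: Dict[str, int], up: Dict[str, bool]) -> Optional[str]:
    """Return an up member from the lowest tier that has one, or None if all are down.

    Ties inside a tier are broken by name here; a real group would load-balance them.
    """
    for name, _tier in sorted(group.items(), key=lambda kv: (kv[1], kv[0])):
        if up.get(name, False):
            return name
    return None


def wan2_monitor_up(wan2_link: bool, default_route_present: bool, static_route: bool) -> bool:
    """Assumed model: WAN2's probe succeeds only if its link is up and a route to the monitor exists."""
    return wan2_link and (static_route or default_route_present)


# WAN1 cable pulled: WAN1 is down and its default route is withdrawn.
for static in (False, True):
    up = {"WAN1": False,
          "WAN2_RUT": wan2_monitor_up(wan2_link=True, default_route_present=False,
                                      static_route=static)}
    print(f"static route={static}: default gateway -> {pick_default(GROUP, up)}")
# static route=False: default gateway -> None
# static route=True: default gateway -> WAN2_RUT
```

Under these assumptions the model reproduces both observations in the thread: no default gateway with dpinger_dont_add_static_route set, and normal failover once the static route is allowed.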

-

@Bob.Dig I don't think that's what's happening. If you scroll up a few posts to where I have a section called "Some pings (with source address binding) and routes" you can see that the pings are traversing each separate gateway (you can tell from the vastly different latencies).

I just ran a few tcpdumps to confirm as well: the packets are definitely egressing via the separate, correct gateways without the static routes:

    [25.07-RC][root@r1.lan]/root: tcpdump -ni ix0 dst host 8.8.8.8
    tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
    listening on ix0, link-type EN10MB (Ethernet), snapshot length 262144 bytes
    ^C
    0 packets captured   <<–– ✅ no packets to the monitor IP seen on the WAN1 interface
    857 packets received by filter
    0 packets dropped by kernel
    [25.07-RC][root@r1.lan]/root: tcpdump -ni ix2 dst host 8.8.8.8
    tcpdump: verbose output suppressed, use -v[v]... for full protocol decode
    listening on ix2, link-type EN10MB (Ethernet), snapshot length 262144 bytes
    06:22:32.463054 IP 192.168.191.2 > 8.8.8.8: ICMP echo request, id 22849, seq 36, length 9
    06:22:37.497085 IP 192.168.191.2 > 8.8.8.8: ICMP echo request, id 22849, seq 37, length 9
    06:22:42.500047 IP 192.168.191.2 > 8.8.8.8: ICMP echo request, id 22849, seq 38, length 9
    ^C
    3 packets captured   <<–– ✅ packets are being sent via WAN2
    166 packets received by filter
    0 packets dropped by kernel

-

@stephenw10 @marcosm Since you guys seem to be unable to replicate this (?), would you be able to send me the 25.07-RELEASE image to test with? I see on Redmine (e.g. here, here, and here) that there's a build you are testing tagged -RELEASE (built on 2025-07-22). Maybe there are some small differences in that build that are affecting my results? I've lost a good portion of my weekend on this and am growing more desperate.