Playing with fq_codel in 2.4

-

https://github.com/pfsense/pfsense/pull/3941

PR was accepted and needs to be tested now.

Sorry, I am not on development releases, but has the Limiter Info page also been updated to show scheduler information?

As suggested earlier in this thread:

@w0w:

Patch for Limiter Info page with schedulers information and refresh interval of 500ms

    --- diag_limiter_info.php Wed Sep 07 00:26:47 2016
    +++ diag_limiter_info.php Sun Oct 01 08:20:33 2017
    @@ -40,5 +40,5 @@
     echo $text;
    - $text = `/sbin/ipfw queue show`;
    + $text = `/sbin/ipfw sched show`;
     if ($text != "") {
    - echo "\n\n" . gettext("Queues") . ":\n";
    + echo "\n\n" . gettext("Schedulers") . ":\n";
     echo $text;
    @@ -72,3 +76,3 @@
     events.push(function() {
    - setInterval('getlimiteractivity()', 2500);
    + setInterval('getlimiteractivity()', 500);
     getlimiteractivity();

-

Not yet, that's gonna need to be a separate PR. I'm thinking of maybe making a Queue-like page for that instead of text-based readouts.

-

any idea when this will show up in 2.4 development snapshots?

-

Can someone please guide me on how to set up the shellcmd if I'm using more than one queue per pipe, as in:

(I have set different weights to identify where the queues are placed)

    pipe 1 config bw 5000Kb
    queue 1 config pipe 1 weight 10 mask src-ip6 /128 src-ip 0xffffffff
    queue 2 config pipe 1 weight 20 mask src-ip6 /128 src-ip 0xffffffff
    pipe 2 config bw 1024Kb
    queue 3 config pipe 2 weight 30 mask dst-ip6 /128 dst-ip 0xffffffff
    queue 4 config pipe 2 weight 40 mask dst-ip6 /128 dst-ip 0xffffffff

Using the setup above, I get this when running ipfw sched show:

    [2.4.3-RELEASE][root@pfTest.localdomain]/root: ipfw sched show
    00001: 5.000 Mbit/s 0 ms burst 0
    q00001 50 sl. 0 flows (256 buckets) sched 1 weight 10 lmax 0 pri 0 droptail
        mask: 0x00 0xffffffff/0x0000 -> 0x00000000/0x0000
     sched 1 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
       Children flowsets: 2 1
    00002: 1.024 Mbit/s 0 ms burst 0
    q00002 50 sl. 0 flows (256 buckets) sched 1 weight 20 lmax 0 pri 0 droptail
        mask: 0x00 0xffffffff/0x0000 -> 0x00000000/0x0000
     sched 2 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 ECN
       Children flowsets: 4 3

If you look closely, you can see that queues 1 and 2 from pipe 1 are assigned to sched 1 and sched 2; the weight values make this easier to spot.

I'm using this command:

    ipfw sched 1 config pipe 1 type fq_codel && ipfw sched 2 config pipe 2 type fq_codel

Hope you guys can help me!

-

@matt_ said in Playing with fq_codel in 2.4:

https://github.com/pfsense/pfsense/pull/3941

PR was accepted and needs to be tested now.

Could I download this to my box for testing, or to a test VM? I am super excited to have a less hacky way to implement fq_codel.

-

@cplmayo

Yes, you can. What is your pfSense version?

-

@w0w I am on the latest release

-

@cplmayo

Install the System_Patches package, go to System->Patches, press the Add New Patch button, and paste the URL https://github.com/pfsense/pfsense/compare/RELENG_2_4_3...mattund:RELENG_2_4_3.diff into the URL/Commit ID field.

Also enter something in the Description field and press the Save button. You will then see your newly created patch in the list, with a Fetch button on the right. Press it, then press the Test button that appears. You should see:

    Patch can be applied cleanly (detail)
    Patch can NOT be reverted cleanly (detail)

If you see that, press the Apply button and enjoy your new Limiters.

-

So after applying the patch do you just fill in the settings under limiters?

New here to traffic shaping so just looking for an easy way to decrease latency. Thanks.

-

@slowgrind

Generally yes, but I prefer to share bandwidth between all users evenly.

Unfortunately, it looks like foxale08's guide is broken. It was here, but now it's completely gone :(

I'll provide a guide later, if you want it.

-

Thank you and I just have a simple network setup. 1 wan and 1 lan with 1000 Mbps download and 50 Mbps upload.

-

I'm testing my patch, and I am seeing some unexpected behavior (maybe intentional).

    pipe 2 config bw 122800Kb queue 100 buckets 256 droptail
    sched 2 config pipe 2 type fq_codel target 35ms interval 35ms quantum 1514 limit 10240 flows 1024 noecn
    queue 1 config pipe 2 queue 100 droptail
    pipe 1 config bw 12000Kb queue 100 droptail
    sched 1 config pipe 1 type fq_codel target 5ms interval 10ms quantum 1514 limit 10240 flows 1024 noecn
    queue 2 config pipe 1 queue 100 droptail

Using the above generated ruleset, if I start a speedtest and have an ICMP ping running at the same time, pings drop completely upon reaching the ceiling of ~120Mbps. Not bufferbloat, but full drops. Now, of course that would happen, the limiter is supposed to limit the bandwidth. However, is there a way around this? I figured burst might help, but it doesn't appear to -- FQ_CoDel assumes the new burst value as part of the total bandwidth share and doesn't kick in.

It's almost as though FQ_CoDel isn't active, and the limiter is putting itself into the drop state before CoDel can say, "hey, this teeny tiny little ICMP packet isn't part of a busy flow, so let's let that through". Unless I am mistaken on something.

What I want:

- Small flow traffic to not get dropped (think my little ping)

- Busy flow traffic to be shaped (dropped) appropriately

- The limiter pipe not to drop packets before FQ_CoDel has a chance to process them (?)

I was under the impression FQ_CoDel was designed around those objectives. Am I doing this right?
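One way to double-check which scheduler type each limiter actually ended up with is to filter the `ipfw sched show` output. This is only a minimal sketch: `sched_types` is a made-up helper name, and it assumes the scheduler lines look like the `sched 1 type FQ_CODEL flags ...` lines posted earlier in this thread.

```shell
#!/bin/sh
# Hypothetical helper (not part of pfSense or ipfw).
# Reads `ipfw sched show` output on stdin and prints "<sched number> <type>"
# for every line whose first field is "sched" and third field is "type".
# Lines such as "q00001 ... sched 1 weight 10 ..." are skipped because
# their first field is the queue name, not "sched".
sched_types() {
    awk '$1 == "sched" && $3 == "type" { print $2, $4 }'
}

# Intended use on the firewall (needs root, not run here):
#   ipfw sched show | sched_types
```

If a limiter you expected to be FQ_CoDel shows up with a different type here, the scheduler setting probably never made it into the ruleset.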

-

@mattund said in Playing with fq_codel in 2.4:

I'm testing my patch, and I am seeing some unexpected behavior (maybe intentional).

Am I doing this right?

I applied your patch to my install and so far it is working as intended. I haven't noticed any huge difference between it and the manual approach I have been using for months.

I did notice that when I tried setting weights for different queues, and when I set the scheduler in the queues, no traffic would pass. I went back and reread your instructions on GitHub, and once I removed the weights and scheduler options from the queues it worked.

I am still doing some testing to see results in dslreports but so far your interface is simple and just seems to work.

Great job!

-

@slowgrind said in Playing with fq_codel in 2.4:

So after applying the patch do you just fill in the settings under limiters?

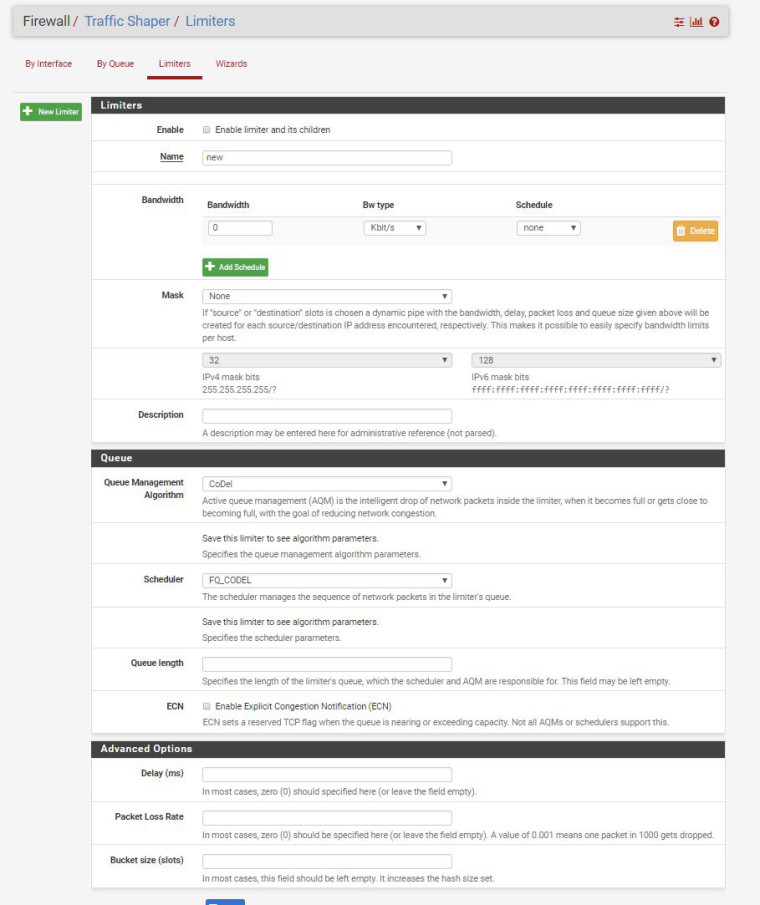

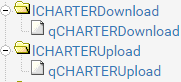

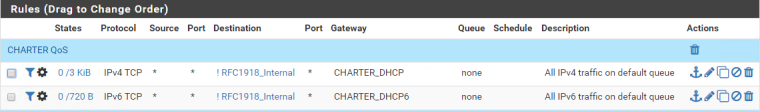

Here's what I'm doing. This might be a little more than what you need, but I figure I would share my configuration in case others have a crazy Multi-WAN multi-LAN setup like I do. I've constructed a series of limiters, one for download and one for upload, each with its own associated queue (you can make the queue with the "+ Add new Queue" button at the bottom of a Limiter's settings page).

(I have more for my second ISP following the same naming scheme: lINTERFACEDownload/lINTERFACEUpload limiters with qINTERFACEDownload/qINTERFACEUpload children)

I'm assigning FQ_CoDel to the scheduler on the parent limiter and leaving everything else alone. You can either edit the parameters, or leave them at default if you have a typical connection (FQ_CoDel is supposed to be "knobless" after all).

According to the following diagram, this is how the traffic will flow inside dummynet:

                  (flow_mask|sched_mask)  sched_mask
          +---------+   weight Wx  +-------------+
          |         |->-[flow]-->--|             |-+
     -->--| QUEUE x |   ...        |             | |
          |         |->-[flow]-->--| SCHEDuler N | |
          +---------+              |             | |
              ...                  |             +--[LINK N]-->--
          +---------+   weight Wy  |             | +--[LINK N]-->--
          |         |->-[flow]-->--|             | |
     -->--| QUEUE y |   ...        |             | |
          |         |->-[flow]-->--|             | |
          +---------+              +-------------+ |
                                     +-------------+

via: https://www.freebsd.org/cgi/man.cgi?query=ipfw&manpath=FreeBSD+9-current&format=html

Dissection: firewall traffic is assigned to a queue, which then generates flows defined by the mask, which pipe into the scheduler (set to FQ_CoDel), which then outputs to the pipe/link at the specified max bitrate.
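To make that chain concrete, here is a rough sketch that prints the three ruleset lines for one limiter: the pipe (the link), an FQ_CoDel scheduler on that pipe, and a queue feeding it. `emit_limiter` is a made-up helper, and the numbers and bandwidth are only illustrative; the line format mirrors the generated ruleset posted earlier in this thread, without the optional tuning parameters.

```shell
#!/bin/sh
# Hypothetical helper (not part of pfSense): prints, but does not apply,
# a dummynet ruleset fragment for one limiter.
# Arguments: PIPE_NR BANDWIDTH QUEUE_NR
emit_limiter() {
    pipe_nr=$1
    bandwidth=$2
    queue_nr=$3
    # The pipe is the link with the maximum bitrate.
    printf 'pipe %s config bw %s\n' "$pipe_nr" "$bandwidth"
    # The scheduler sits on that pipe and is set to FQ_CoDel.
    printf 'sched %s config pipe %s type fq_codel\n' "$pipe_nr" "$pipe_nr"
    # The queue is what firewall rules point traffic at.
    printf 'queue %s config pipe %s\n' "$queue_nr" "$pipe_nr"
}

# Example: pipe 1 at 12000Kb with queue 2 attached, as in the ruleset above.
emit_limiter 1 12000Kb 2
```

Prefixing each printed line with `ipfw` gives the equivalent manual commands, like the `ipfw sched 1 config pipe 1 type fq_codel` command used earlier in the thread.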

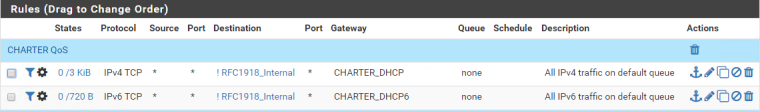

To assign your traffic to queues, you could do something like I did, which is to use floating rules. I have two WANs, and I need independent shaping and all that, so if you're on a single WAN it may be different for you/you may have better options.

How I set the rules up:

- Interface: WAN A or B interface

- Direction: out

- Address Family: IPv4 or IPv6; I had to do two rules, one for each IP version

- Gateway: Select the applicable IPv4 or IPv6 gateway consistent with how traffic should be routed on that IP stack

- In / Out pipe: qCHARTERUpload / qCHARTERDownload

I have some filtering rules in play here as you can see in my screenshot, but that's only since I'm testing some issues I mentioned previously. It's up to you if you want to match certain protocols/ports, etc.

-

@mattund said in Playing with fq_codel in 2.4:

I'm testing my patch, and I am seeing some unexpected behavior (maybe intentional).

This stopped happening as soon as I enabled masks on the offending download queue correlating to the one ICMP traffic was being dropped on. Not sure why.
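For reference, a queue line with masks enabled would look roughly like the sketch below. `emit_masked_queue` is a made-up helper, the mask syntax is copied from the per-host masks posted earlier in this thread, and using the destination address for the download queue is my assumption.

```shell
#!/bin/sh
# Hypothetical helper (not part of pfSense): prints, but does not apply,
# a queue line with a per-host mask.
# Arguments: QUEUE_NR PIPE_NR DIRECTION, where DIRECTION is "src" or "dst".
emit_masked_queue() {
    queue_nr=$1
    pipe_nr=$2
    direction=$3
    # /128 and 0xffffffff match on the full IPv6 and IPv4 address.
    printf 'queue %s config pipe %s mask %s-ip6 /128 %s-ip 0xffffffff\n' \
        "$queue_nr" "$pipe_nr" "$direction" "$direction"
}

# Example: download queue 1 on pipe 2, masked on the destination address.
emit_masked_queue 1 2 dst
```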

-

@mattund said in Playing with fq_codel in 2.4:

@slowgrind said in Playing with fq_codel in 2.4:

So after applying the patch do you just fill in the settings under limiters?

To assign your traffic to queues, you could do something like I did, which is to use floating rules. I have two WANs, and I need independent shaping and all that, so if you're on a single WAN it may be different for you/you may have better options.

How I set the rules up:

- Interface: WAN A or B interface

- Direction: out

- Address Family: IPv4 or IPv6; I had to do two rules, one for each IP version

- Gateway: Select the applicable IPv4 or IPv6 gateway consistent with how traffic should be routed on that IP stack

- In / Out pipe: qCHARTERUpload / qCHARTERDownload

I have some filtering rules in play here as you can see in my screenshot, but that's only since I'm testing some issues I mentioned previously. It's up to you if you want to match certain protocols/ports, etc.

When I set up my floating rules, I tried using the out direction a few times but ran into problems every time.

For instance, if I set the rule direction to "out" and then set the gateway, I get what appears to be a routing loop. Traffic forwards fine and I can reach external hosts, but when I do a traceroute from an internal host I don't see the intermediate routers; I just see the destination IP over and over. Are you seeing this, or have you changed your setup?

I have also been considering using the dynamic pipes. Anyone tried this with the fq_codel scheduler?

-

@cplmayo I also get a routing loop in traceroutes. Are you using the in direction?

-

@mattund I have been, I would prefer to use out but it makes any troubleshooting I may need to do difficult. Not that I do traceroutes a lot but it annoyed me.

I have wondered if this was due to how the rule is creating states. Like it is rerouting the packet once the rule is applied; very weird.

There are two floating rules, both with direction in: one on WAN, the other on all of my VLANs.

I wish I could just apply them to the WAN with in & out as the directions.

-

Hello, I am new here. I have been following this thread since last week. I tried the new patch and it's working as intended, just like the manual approach. I left everything at the defaults and only set the upload/download bandwidth, CoDel as the Queue Management Algorithm, and FQ_CoDel as the Scheduler.

I tested it by hogging my connection with 20 torrents and 1 download in IDM. I played an online game to watch the ping and ran ping www.google.com -t in CMD. I saw 1 to 2 lost packets and the ping varying by 3 to 5, and I could still play as if there were no downloads running in the background.

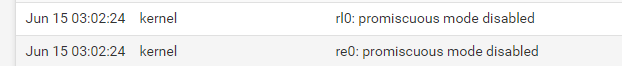

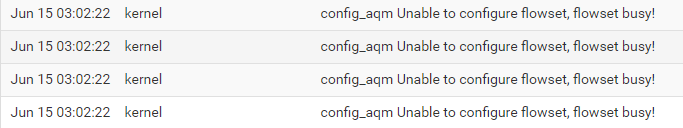

There were some messages in the syslog, but I fixed that with this command: ifconfig <nics> -promisc

Just a heads up! This one, though, still shows up in the syslog every time.

By the way, I have Floating rules active and limiters(for LAN scheds, no queues).

Overall, thank you. This helps me a lot.

-

@xraisen Your NICs shouldn't need to be in promiscuous mode. That is a message that I would ignore.

Promiscuous mode just makes your NIC accept all packets.

Non-promiscuous mode makes your NIC drop packets not destined for it.

Unless you are doing packet captures I can't think of a reason you would want to run in promiscuous mode.
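If you want to see whether an interface is currently in promiscuous mode, you can look for PROMISC in the flags line that ifconfig prints. A small sketch, where `has_promisc_flag` is a made-up helper and `em0` is just a placeholder interface name:

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit status 0) if the ifconfig output on
# stdin lists PROMISC among the interface flags, for example
#   em0: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> ...
has_promisc_flag() {
    grep -q 'flags=.*[<,]PROMISC[,>]'
}

# Intended use (not run here; replace em0 with your interface):
#   ifconfig em0 | has_promisc_flag && echo "em0 is in promiscuous mode"
```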