Playing with fq_codel in 2.4

-

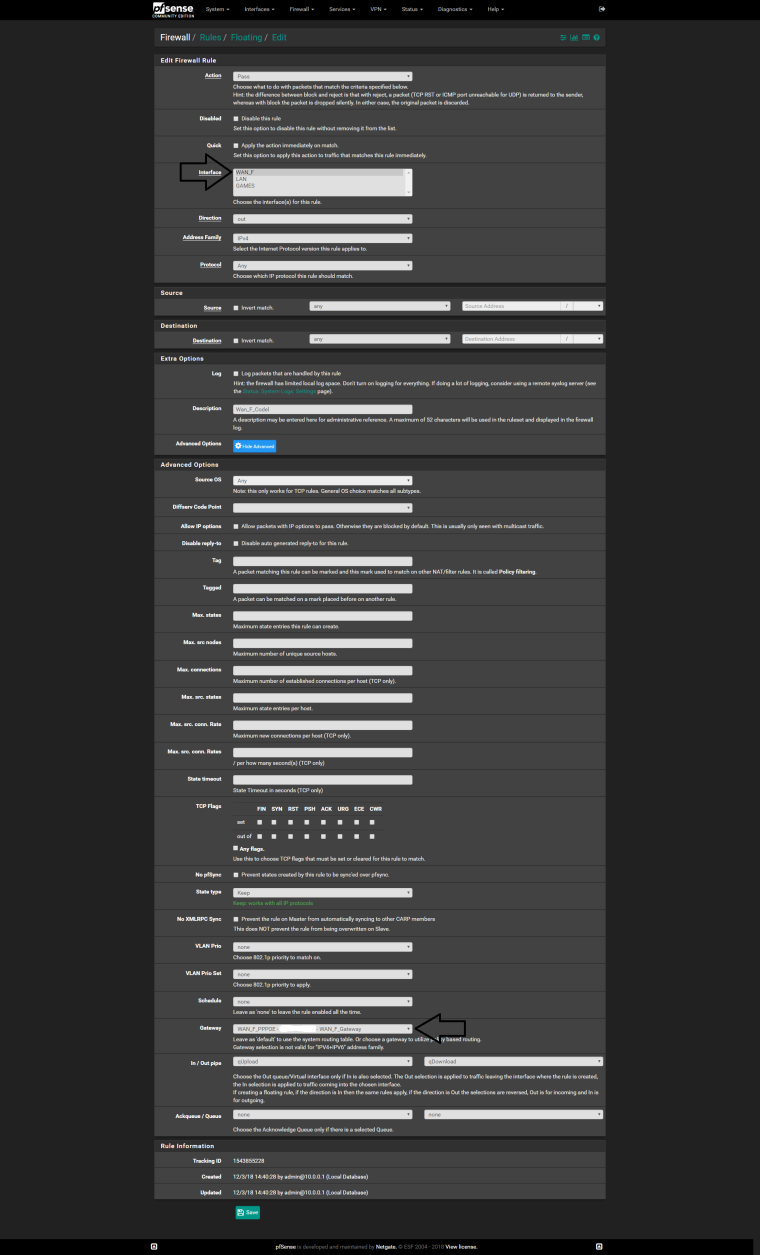

@wgstarks I've updated the post to better explain IN and OUT. From the perspective of WAN, IN is traffic coming into the interface from the Internet, aka download, and OUT is traffic leaving the interface and bound for the Internet, aka upload. Hope that clears things up.

-

@uptownvagrant

Thanks for clarifying. Also (minor issue), in rules creation step 3 I couldn’t select “Default” for gateway. I had to actually pick a gateway as you did in step 4.

-

@wgstarks Make sure you are choosing "Direction: In" on that rule. IN rules do not require a gateway be selected.

-

@uptownvagrant

Worked. Thanks again.

-

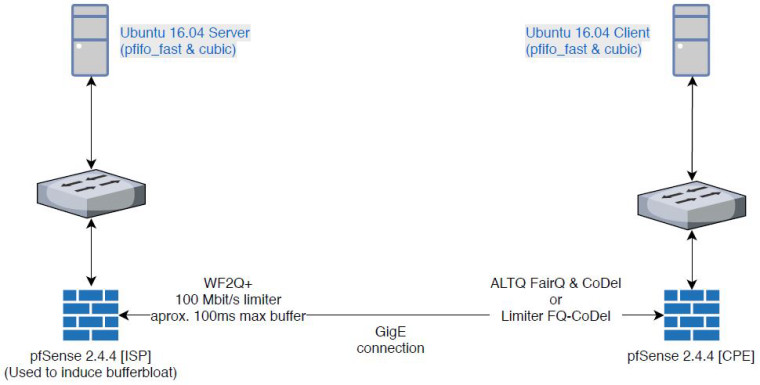

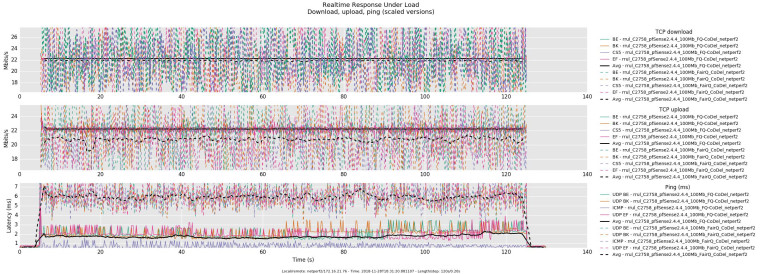

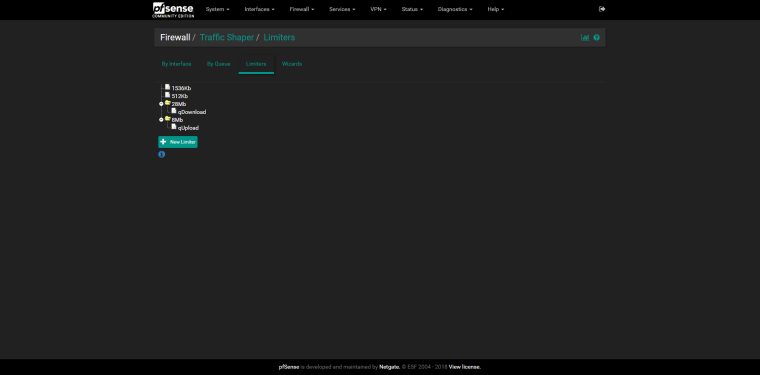

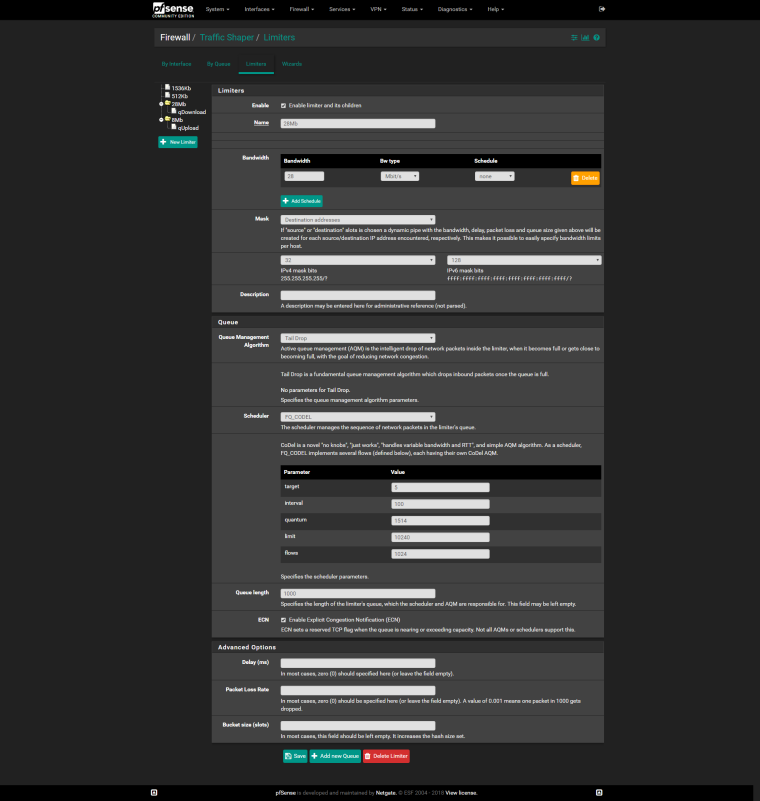

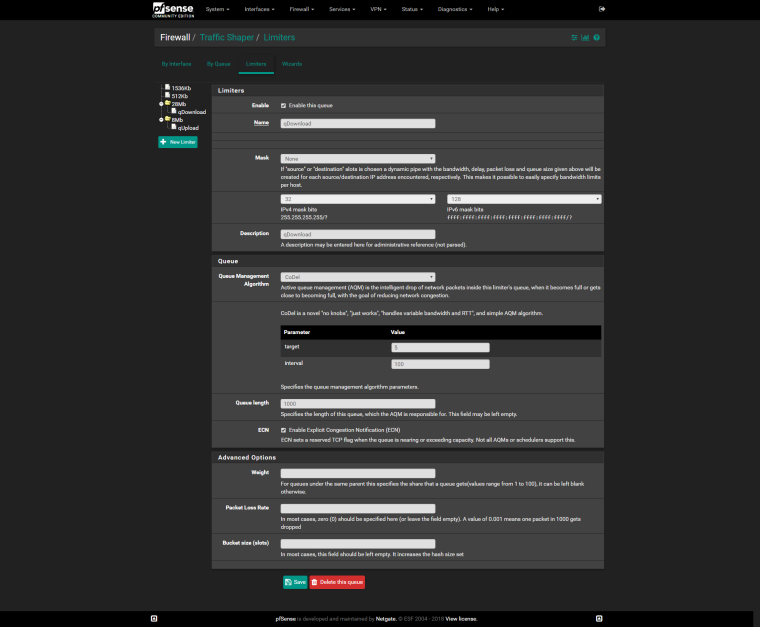

For those interested, here is a quick comparison I ran today of ALTQ shapers using FAIRQ + CoDel and DummyNet limiters using FQ-CoDel. I set both ALTQ shapers and the limiters to 94 Mbit/s.

- The ALTQ shapers used a FAIRQ parent discipline and one CoDel child queue for WAN and a FAIRQ parent discipline and one CoDel child queue for LAN.

- The DummyNet limiters used a FQ-CoDel scheduler and one Tail Drop queue for WAN-OUT and a FQ-CoDel scheduler and one Tail Drop queue for WAN-IN. ICMP was passed before entering the limiter.

0_1543552183964_rrul-2018-11-29T175857.808604.rrul_C2758_pfSense2_4_4_100Mb_FairQ_CoDel_netperf2.flent.gz

0_1543552199424_rrul-2018-11-28T183120.881107.rrul_C2758_pfSense2_4_4_100Mb_FQ-CoDel_netperf2.flent.gz

-

@uptownvagrant Thanks!

fq_codel is definitely better, especially for upload bandwidth, but fairq+codel is not that bad considering how easy it is to set up.

-

Replace CoDel with Tail Drop. Don't use the CoDel AQM with FQ-CoDel.

-

@harvy66 Okay, thank you so much, it worked.

-

This config works perfectly for one WAN.

I have two ADSL lines in a load-balancing gateway group (both WANs at Tier 1).

But I don't know how to configure the floating rules to use this gateway group.

The WANs have different speeds. Thanks!

-

I do not speak English, but Google speaks for me, haha. Create two floating rules, one for each gateway, this way:

-

@ricardox Thanks, I'm going to try it.

This is what I have: WAN1 and WAN2.

I created 8 floating rules (following the example of @uptownVagrant), but I think that is not the correct way to achieve the desired goal.

1.)

Action: Pass

Quick: Tick Apply the action immediately on match.

Interface: WAN1

Direction: out

Address Family: IPv4

Protocol: ICMP

ICMP subtypes: Traceroute

Source: any

Destination: any

2.)

Action: Pass

Quick: Tick Apply the action immediately on match.

Interface: WAN2

Direction: out

Address Family: IPv4

Protocol: ICMP

ICMP subtypes: Traceroute

Source: any

Destination: any

3.)

Action: Pass

Quick: Tick Apply the action immediately on match.

Interface: WAN1

Direction: any

Address Family: IPv4

Protocol: ICMP

ICMP subtypes: Echo reply, Echo Request

Source: any

Destination: any

Description: limiter drop echo-reply under load workaround

4.)

Action: Pass

Quick: Tick Apply the action immediately on match.

Interface: WAN2

Direction: any

Address Family: IPv4

Protocol: ICMP

ICMP subtypes: Echo reply, Echo Request

Source: any

Destination: any

Description: limiter drop echo-reply under load workaround

5.)

Action: Match

Interface: WAN1

Direction: in

Address Family: IPv4

Protocol: Any

Source: any

Destination: any

Gateway: WAN1

In / Out pipe: fq_codel_WAN1_in_q / fq_codel_WAN1_out_q

6.)

Action: Match

Interface: WAN2

Direction: in

Address Family: IPv4

Protocol: Any

Source: any

Destination: any

Gateway: WAN2

In / Out pipe: fq_codel_WAN2_in_q / fq_codel_WAN2_out_q

7.)

Action: Match

Interface: WAN1

Direction: out

Address Family: IPv4

Protocol: Any

Source: any

Destination: any

Gateway: WAN1

In / Out pipe: fq_codel_WAN1_out_q / fq_codel_WAN1_in_q

8.)

Action: Match

Interface: WAN2

Direction: out

Address Family: IPv4

Protocol: Any

Source: any

Destination: any

Gateway: WAN2

In / Out pipe: fq_codel_WAN2_out_q / fq_codel_WAN2_in_q

-

@mr-cairo

Do the same for Upload

-

So an update from myself.

Still trying to perfect the issue of interactive packets being dropped, I noticed PIE is now implemented as an AQM.

So what I have done for now, to test on my own network, is change droptail to PIE for the pipe and queue; the scheduler is still using fq_codel.

Also, in regards to the dynamic flow sets, it seems something isn't quite right. As the OP pointed out, there is just one single flow created and it always shows src and target IP as 0.0.0.0, so regardless of what mask is configured there will always be only one flow, and I suspect results will be better if multiple flows can be made to work. The reason being, if you have, say, two flows, one a full-speed saturated download over fast FTP and the other an interactive SSH session, the latter will never fill its flow queue and won't drop packets, while the first would fill and drop packets. As it is now, both TCP streams share the same flow, so how does the shaper know which packets to drop? The only help on that seems to come from the quantum value, which prevents smaller packets from being put at the end of the queue.

--update--

OK, I have managed to get the multiple flows working. It took a lot of experimentation, but I got there after finally understanding what the man page for ipfw/dummynet is explaining. If you get it wrong, dummynet stops passing traffic ;) but I am only testing on my home network, so it is fine.

--update2--

I chose to have a flow per internet IP rather than per LAN device. Also, this is only for IPv4 right now; I think if you configure it so that it overloads the flow limit then it breaks, and obviously the IPv6 address space is way bigger.

    root@PFSENSE home # ipfw sched show
    00001: 69.246 Mbit/s 0 ms burst 0
    q00001 500 sl. 0 flows (256 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
        mask: 0x00 0x000000ff/0x0000 -> 0x00000000/0x0000
     sched 1 type FQ_CODEL flags 0x1 256 buckets 4 active
     FQ_CODEL target 5ms interval 30ms quantum 300 limit 1000 flows 1024 ECN
        mask: 0x00 0xffffff00/0x0000 -> 0x00000000/0x0000
       Children flowsets: 1
    BKT ___Prot___ _flow-id_ ______________Source IPv6/port_______________ _______________Dest. IPv6/port_______________ Tot_pkt/bytes Pkt/Byte Drp
      0 ip 0 ::/0 ::/0 6 288 0 0 0
     17 ip 46.17.x.x/0 0.0.0.0/0 4 176 0 0 0
    123 ip 208.123.x.x/0 0.0.0.0/0 5 698 0 0 0
    249 ip 80.249.x.x/0 0.0.0.0/0 6 168 0 0 0
    00002: 18.779 Mbit/s 0 ms burst 0
    q00002 500 sl. 0 flows (256 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
        mask: 0x00 0xffffffff/0x0000 -> 0x00000000/0x0000
     sched 2 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 300ms quantum 300 limit 800 flows 1024 NoECN
       Children flowsets: 2

--update 3--

In initial tests this is working sweetly. In the paste below you can clearly see the bulk download flow, and notice that packets are only dropped from that flow. I also tested with Steam and there are now zero dropped packets on flows outside of Steam, wow.

    BKT ___Prot___ _flow-id_ ______________Source IPv6/port_______________ _______________Dest. IPv6/port_______________ Tot_pkt/bytes Pkt/Byte Drp
      0 ip 0 ::/0 ::/0 7 348 0 0 0
     17 ip 46.17.x.x/0 0.0.0.0/0 3 132 0 0 0
     58 ip 216.58.x.x/0 0.0.0.0/0 1 52 0 0 0
    123 ip 208.123.x.x/0 0.0.0.0/0 5 698 0 0 0
    135 ip 5.135.x.x/0 0.0.0.0/0 11919 17878500 3 4500 2
    249 ip 80.249.x.x/0 0.0.0.0/0 6 168 0 0 0
    254 ip 162.254.x.x/0 0.0.0.0/0 1 40 0 0 0

-

@chrcoluk said in Playing with fq_codel in 2.4:

> So an update from myself.
>
> Still trying to perfect the issue of interactive packets being dropped, I noticed PIE is now implemented as an AQM.

What interactive packets being dropped issue? Can you be specific? How are you measuring drops?

> So what I have done for now, to test on my own network, is change droptail to PIE for the pipe and queue; the scheduler is still using fq_codel.

When FQ-CoDel is used as the scheduler, the AQM you choose is not utilized. (fq_codel has separate enqueue/dequeue functions - AQM is ignored) https://forum.netgate.com/post/804118

> Also, in regards to the dynamic flow sets, it seems something isn't quite right. As the OP pointed out, there is just one single flow created and it always shows src and target IP as 0.0.0.0, so regardless of what mask is configured there will always be only one flow, and I suspect results will be better if multiple flows can be made to work. The reason being, if you have, say, two flows, one a full-speed saturated download over fast FTP and the other an interactive SSH session, the latter will never fill its flow queue and won't drop packets, while the first would fill and drop packets. As it is now, both TCP streams share the same flow, so how does the shaper know which packets to drop? The only help on that seems to come from the quantum value, which prevents smaller packets from being put at the end of the queue.

What you are seeing using 'ipfw sched show' is not exposing the dynamic internal flows and sub-queues that FQ-CoDel is managing. It seems you are trying to create a visual representation, via 'ipfw sched show', of the mechanism that is already in place and operating within FQ-CoDel. See this post https://forum.netgate.com/post/803139

-

I am not convinced there is anything more than 1 flow on the existing configuration, especially after reading this and taking it all in. Be warned, it's a big lot of text.

https://www.freebsd.org/cgi/man.cgi?query=ipfw&sektion=8&apropos=0&manpath=FreeBSD+11.2-RELEASE+and+Ports

But you can skip to the dummynet section.

I am measuring drops in two ways.

By looking at the output from the command I pasted on here (I assumed that was obvious), and also by holding a key down in an SSH terminal session whilst downloading bulk data to see if there is visible packet loss. Packet loss is very visible in an SSH terminal session, as the cursor will stick and jump.

With a single flow, fq_codel is fine at reducing jitter and latency, and that is all 95% of people on here seem to care about, just to get their dslreports grade scores up. However, I did observe that it was not able to differentiate between different TCP streams and so could not drop packets intelligently; the droptail AQM, for example, just blocks new packets from entering the queue when it's full, regardless of what those packets are.
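The difference between one shared tail-drop queue and per-flow queues, which is what the paragraph above is arguing about, can be illustrated with a toy model. This is only a sketch under loud assumptions: the limit, drain rate, and arrival pattern are made-up numbers, the function names are hypothetical, and the per-flow variant models only round-robin service plus "drop from the longest queue on overflow". It is not dummynet's code and it does not implement CoDel's delay-based dropping.

```python
from collections import deque

LIMIT = 10   # toy value: total packets that may be queued
DRAIN = 3    # toy value: packets transmitted per tick
ARRIVALS = [("bulk", 5), ("ssh", 1)]  # packets offered per tick, in arrival order


def shared_tail_drop(ticks):
    """One FIFO for all traffic; a packet arriving at a full queue is dropped."""
    fifo = deque()
    drops = {"bulk": 0, "ssh": 0}
    for _ in range(ticks):
        for flow, count in ARRIVALS:
            for _ in range(count):
                if len(fifo) < LIMIT:
                    fifo.append(flow)
                else:
                    drops[flow] += 1
        for _ in range(min(DRAIN, len(fifo))):
            fifo.popleft()
    return drops


def per_flow_queues(ticks):
    """One queue per flow, served round-robin; on overflow, drop from the longest queue."""
    queues = {"bulk": deque(), "ssh": deque()}
    drops = {"bulk": 0, "ssh": 0}
    for _ in range(ticks):
        for flow, count in ARRIVALS:
            for _ in range(count):
                queues[flow].append(flow)
                if sum(len(q) for q in queues.values()) > LIMIT:
                    fattest = max(queues, key=lambda name: len(queues[name]))
                    queues[fattest].popleft()
                    drops[fattest] += 1
        sent = 0
        while sent < DRAIN and any(queues.values()):
            for q in queues.values():
                if q and sent < DRAIN:
                    q.popleft()
                    sent += 1
    return drops


if __name__ == "__main__":
    print("shared tail drop:", shared_tail_drop(20))
    print("per-flow queues: ", per_flow_queues(20))
```

In this toy model the sparse "ssh" flow loses packets once the shared queue fills, while with per-flow queues only the bulk flow is penalised. Whether the running limiter behaves like the first or the second case is exactly the point under discussion in this thread, and the sketch does not settle it.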

Note that for flow separation, the dummynet documentation states a mask needs to be set in the queue as well as the scheduler.

When running ipfw sched show, there are two indications of flows.

One is the number of buckets, which stays at 1 on the older configuration; the other is the number of flows in ipfw queue show, which stays at 0.

I managed to figure out the syntax for IPv6 flow separation as well, based on how it's configured for IPv4.

Now, I am not saying you are wrong that there are invisible flows not presented by the status commands; I am just saying I am seeing no evidence of that, based on performance metrics and the tools provided that show the active status of dummynet.

If you want to see the changes I made, they are as follows; these commands will generate a flow for each remote IPv4 address on downloads.

    ipfw sched 1 config pipe 1 queue 500 mask src-ip 0xffffff00 type fq_codel ecn target 5 interval 30 quantum 300 limit 1000
    ipfw queue 1 config sched 1 queue 500 mask src-ip 0x000000ff

You don't have to do this, and it is more complex; if all you care about is bufferbloat, then the existing single-flow configuration is fine, as reducing bufferbloat is what it does. But if you want flow separation, then the dummynet man page seems to clearly state that masks have to be set in both the scheduler and the queue.

> It is important to understand the role of the SCHED_MASK and FLOW_MASK, which are configured through the commands ipfw sched N config mask SCHED_MASK ... and ipfw queue X config mask FLOW_MASK .... The SCHED_MASK is used to assign flows to one or more scheduler instances, one for each value of the packet's 5-tuple after applying SCHED_MASK. As an example, using ``src-ip 0xffffff00'' creates one instance for each /24 destination subnet. The FLOW_MASK, together with the SCHED_MASK, is used to split packets into flows. As an example, using ``src-ip 0x000000ff'' together with the previous SCHED_MASK makes a flow for each individual source address. In turn, flows for each /24 subnet will be sent to the same scheduler instance.

Sorry about the colour changes; the source text isn't like that. There is mention of scheduler instances, which may be what you are referring to, but these don't seem to provide intelligent dropping of packets, whilst flow separation does.
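The bit-masking described in the man page excerpt can be sketched as follows. This is a minimal illustration, assuming the two `src-ip` masks from the commands posted above; the `classify` helper and the example addresses (taken from documentation ranges) are hypothetical, and the sketch does not reproduce how dummynet actually hashes packets into buckets.

```python
import ipaddress
from typing import Tuple

SCHED_MASK = 0xFFFFFF00  # "mask src-ip 0xffffff00" on the scheduler
FLOW_MASK = 0x000000FF   # "mask src-ip 0x000000ff" on the queue


def classify(src_ip: str) -> Tuple[str, int]:
    """Return (scheduler-instance key, flow key) for a source IPv4 address.

    The scheduler key is the address with SCHED_MASK applied (its /24),
    the flow key is the address with FLOW_MASK applied (its last octet).
    """
    addr = int(ipaddress.IPv4Address(src_ip))
    instance = str(ipaddress.IPv4Address(addr & SCHED_MASK))
    flow = addr & FLOW_MASK
    return instance, flow


if __name__ == "__main__":
    for ip in ("203.0.113.7", "203.0.113.200", "198.51.100.7"):
        print(ip, "->", classify(ip))
```

Under these assumptions, two hosts in the same /24 share a scheduler key but get different flow keys, while hosts in different /24s get different scheduler keys.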

-

Thanks for the info regarding AQM.

I did more testing, and I also get good results by setting a mask on the queue only, leaving the sched as default (so just the single flow) but reversing the mask.

So basically, the original config had the mask set to dst-ip on ingress and src-ip on egress, meaning the flows are per device on the LAN. I never wanted this, but I suppose it is good if you run an ISP.

I then created this new config, which creates separate flows on the scheduler that are visible in the diagnostics, but I forgot I had also reversed the masking; this fixed my packet loss.

I then went back to the queue-only mask, but also with the reversed mask, so src-ip instead of dst-ip for ingress, and this was good as well.

I won't bother testing alternative AQMs; that was only briefly tried, and all the testing with separate flows was done back on droptail. I will just accept what both of you say on that. So for me it simply seems all I had to do was reverse the masking so I have dynamic queues per remote host instead of per local host.

-

@chrcoluk Thank you for posting all of the details of your test method and config - much appreciated.

I'm on the US west coast, and it is just after 0100 currently, so I'll dig into this later today to see if I can recreate your findings. That being said, I'm pinging @Rasool on this, as my understanding is that your configuration should not be needed for flow separation: RFC 8290 states that FQ-CoDel does its own 5-tuple hashing to identify flows.
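As a rough illustration of the 5-tuple classification mentioned above, here is a minimal sketch that maps a packet's 5-tuple to one of 1024 queues (the "flows 1024" value shown in the scheduler output in this thread). The hash function (CRC32), the salt parameter, and the `flow_queue` name are assumptions chosen for the example; real implementations use their own hash and this is not dummynet's code.

```python
import zlib

NUM_QUEUES = 1024  # assumed to correspond to "flows 1024" in the scheduler output


def flow_queue(src_ip, dst_ip, proto, src_port, dst_port, salt=0):
    """Map a 5-tuple to a queue index in [0, NUM_QUEUES).

    CRC32 is used here only because it is deterministic and in the
    standard library; it stands in for whatever hash an implementation uses.
    """
    key = f"{salt}|{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    return zlib.crc32(key) % NUM_QUEUES


if __name__ == "__main__":
    ssh = ("192.0.2.10", "198.51.100.5", "tcp", 50123, 22)
    ftp = ("192.0.2.10", "203.0.113.9", "tcp", 50124, 21)
    print("ssh flow ->", flow_queue(*ssh))
    print("ftp flow ->", flow_queue(*ftp))
```

Every packet of one connection lands in the same queue because its 5-tuple does not change, and different connections usually land in different queues. None of this depends on an ipfw mask, which is the point being made about flow separation happening inside the scheduler.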

-

@chrcoluk I am not able to recreate your findings based on the information you gave.

I tested using two Flent clients running overlapping RRUL tests while I used a separate client to initiate an SSH session to a separate destination and held down a key and watched for any sticking or jumping of the cursor. I did not experience any sticking or jumping. The RRUL tests ran for 130 seconds each, overlapping, and easily saturated the limiters during the test. I set the limiters to more closely match what you had set for bandwidth.

A couple of things I noticed about your config.

- You are using intervals, quantums, and limits that are not the default. If you set these to the defaults for each pipe, does that change the behavior of the SSH session while the limiters are under load?

- If you halve your IN and OUT limiter bandwidth values, while using the default FQ-CoDel scheduler values, does this change the behavior of the SSH session while the limiters are under load?

Here is what my pipes, schedulers, and queues look like. I'm using the configuration I posted here https://forum.netgate.com/post/807490:

    Limiters:
    00001: 18.000 Mbit/s 0 ms burst 0
    q131073 50 sl. 0 flows (1 buckets) sched 65537 weight 0 lmax 0 pri 0 droptail
     sched 65537 type FIFO flags 0x0 0 buckets 0 active
    00002: 69.000 Mbit/s 0 ms burst 0
    q131074 50 sl. 0 flows (1 buckets) sched 65538 weight 0 lmax 0 pri 0 droptail
     sched 65538 type FIFO flags 0x0 0 buckets 0 active

    Schedulers:
    00001: 18.000 Mbit/s 0 ms burst 0
    q65537 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
     sched 1 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 NoECN
       Children flowsets: 1
    00002: 69.000 Mbit/s 0 ms burst 0
    q65538 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
     sched 2 type FQ_CODEL flags 0x0 0 buckets 0 active
     FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 NoECN
       Children flowsets: 2

    Queues:
    q00001 50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
    q00002 50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
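To compare scheduler settings between two pastes like the ones in this thread, a small parser can pull the FQ_CODEL parameters out of the text. This is a hedged sketch: the regular expression is written against the output lines shown in this thread only, the helper names are hypothetical, and `ASSUMED_DEFAULTS` is simply copied from the scheduler output directly above on the assumption that it reflects the defaults being referred to.

```python
import re

# Matches lines such as:
# "FQ_CODEL target 5ms interval 100ms quantum 1514 limit 10240 flows 1024 NoECN"
FQ_LINE = re.compile(
    r"FQ_CODEL\s+target\s+(?P<target>\d+)ms\s+interval\s+(?P<interval>\d+)ms\s+"
    r"quantum\s+(?P<quantum>\d+)\s+limit\s+(?P<limit>\d+)\s+"
    r"flows\s+(?P<flows>\d+)\s+(?P<ecn>ECN|NoECN)"
)

# Assumption: values copied from the scheduler output in the post above.
ASSUMED_DEFAULTS = {
    "target": 5, "interval": 100, "quantum": 1514,
    "limit": 10240, "flows": 1024, "ecn": False,
}


def fq_codel_params(text):
    """Return one dict of parameters per FQ_CODEL line found in the text."""
    found = []
    for match in FQ_LINE.finditer(text):
        params = {k: int(v) for k, v in match.groupdict().items() if k != "ecn"}
        params["ecn"] = match.group("ecn") == "ECN"
        found.append(params)
    return found


def non_default(params):
    """Return only the parameters that differ from ASSUMED_DEFAULTS."""
    return {k: v for k, v in params.items() if ASSUMED_DEFAULTS.get(k) != v}


if __name__ == "__main__":
    sample = "FQ_CODEL target 5ms interval 30ms quantum 300 limit 1000 flows 1024 ECN"
    for params in fq_codel_params(sample):
        print(non_default(params))
```

Run against the earlier paste with interval 30ms, quantum 300, and limit 1000, this highlights exactly the values that the first question in the list above asks about.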