IPsec VTI tunnels losing packets

-

@pete35 Manual ping inside the VTI tunnel (from the console):

PING 10.0.0.6 (10.0.0.6) from 10.0.0.5: 56 data bytes

64 bytes from 10.0.0.6: icmp_seq=0 ttl=64 time=8.693 ms

64 bytes from 10.0.0.6: icmp_seq=1 ttl=64 time=9.009 ms

64 bytes from 10.0.0.6: icmp_seq=2 ttl=64 time=8.533 ms

64 bytes from 10.0.0.6: icmp_seq=3 ttl=64 time=8.127 ms

64 bytes from 10.0.0.6: icmp_seq=4 ttl=64 time=8.372 ms

64 bytes from 10.0.0.6: icmp_seq=5 ttl=64 time=7.789 ms

64 bytes from 10.0.0.6: icmp_seq=6 ttl=64 time=9.185 ms

64 bytes from 10.0.0.6: icmp_seq=7 ttl=64 time=9.128 ms

64 bytes from 10.0.0.6: icmp_seq=8 ttl=64 time=8.564 ms

64 bytes from 10.0.0.6: icmp_seq=9 ttl=64 time=8.986 ms

64 bytes from 10.0.0.6: icmp_seq=10 ttl=64 time=8.397 ms

64 bytes from 10.0.0.6: icmp_seq=11 ttl=64 time=8.365 ms

64 bytes from 10.0.0.6: icmp_seq=12 ttl=64 time=8.565 ms

64 bytes from 10.0.0.6: icmp_seq=13 ttl=64 time=8.018 ms

64 bytes from 10.0.0.6: icmp_seq=14 ttl=64 time=8.314 ms

64 bytes from 10.0.0.6: icmp_seq=15 ttl=64 time=8.468 ms

64 bytes from 10.0.0.6: icmp_seq=16 ttl=64 time=7.918 ms

64 bytes from 10.0.0.6: icmp_seq=40 ttl=64 time=8.476 ms

64 bytes from 10.0.0.6: icmp_seq=42 ttl=64 time=8.745 ms

64 bytes from 10.0.0.6: icmp_seq=44 ttl=64 time=9.085 ms

64 bytes from 10.0.0.6: icmp_seq=45 ttl=64 time=8.446 ms

64 bytes from 10.0.0.6: icmp_seq=46 ttl=64 time=8.421 ms

64 bytes from 10.0.0.6: icmp_seq=47 ttl=64 time=7.987 ms

64 bytes from 10.0.0.6: icmp_seq=48 ttl=64 time=8.730 ms

64 bytes from 10.0.0.6: icmp_seq=49 ttl=64 time=8.659 ms

64 bytes from 10.0.0.6: icmp_seq=50 ttl=64 time=7.910 ms

64 bytes from 10.0.0.6: icmp_seq=51 ttl=64 time=8.086 ms

64 bytes from 10.0.0.6: icmp_seq=52 ttl=64 time=8.591 ms

64 bytes from 10.0.0.6: icmp_seq=53 ttl=64 time=8.262 ms

64 bytes from 10.0.0.6: icmp_seq=54 ttl=64 time=8.945 ms

64 bytes from 10.0.0.6: icmp_seq=55 ttl=64 time=9.079 ms

64 bytes from 10.0.0.6: icmp_seq=56 ttl=64 time=9.513 ms

64 bytes from 10.0.0.6: icmp_seq=57 ttl=64 time=9.241 ms

64 bytes from 10.0.0.6: icmp_seq=58 ttl=64 time=8.343 ms

64 bytes from 10.0.0.6: icmp_seq=65 ttl=64 time=8.575 ms

64 bytes from 10.0.0.6: icmp_seq=66 ttl=64 time=7.544 ms

64 bytes from 10.0.0.6: icmp_seq=67 ttl=64 time=7.647 ms

64 bytes from 10.0.0.6: icmp_seq=69 ttl=64 time=8.109 ms

64 bytes from 10.0.0.6: icmp_seq=70 ttl=64 time=8.974 ms

64 bytes from 10.0.0.6: icmp_seq=71 ttl=64 time=8.377 ms

64 bytes from 10.0.0.6: icmp_seq=72 ttl=64 time=8.489 ms

64 bytes from 10.0.0.6: icmp_seq=73 ttl=64 time=8.321 ms

64 bytes from 10.0.0.6: icmp_seq=74 ttl=64 time=8.157 ms

64 bytes from 10.0.0.6: icmp_seq=75 ttl=64 time=8.351 ms

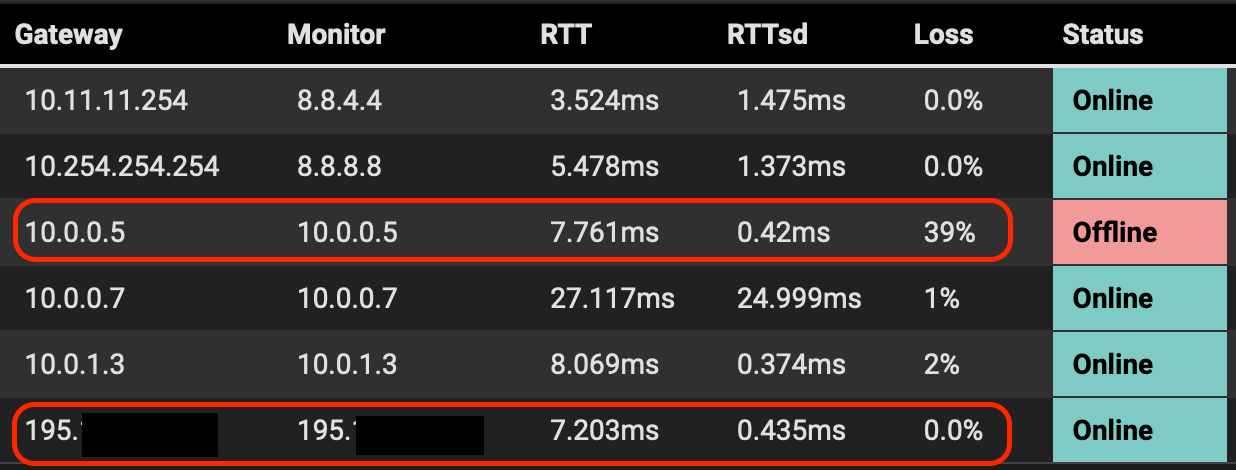

64 bytes from 10.0.0.6: icmp_seq=76 ttl=64 time=7.531 ms

As you can see from the sequence numbers, packets are being lost.

However, the latency of the packet just before each gap and the packet just after it is very similar, so I suspect that rather than ordinary packet loss, packets are being discarded...
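To check the "similar latency around the gaps" observation on a longer capture, a small script can list every gap in the `icmp_seq` numbers together with the round-trip times on either side. This is a minimal sketch that assumes the BSD-style reply lines shown above; `find_gaps` is a hypothetical helper, not a pfSense tool.

```python
import re

# Matches one reply line of BSD/pfSense-style ping output, e.g.
# "64 bytes from 10.0.0.6: icmp_seq=16 ttl=64 time=7.918 ms"
REPLY_RE = re.compile(r"icmp_seq=(\d+)\s+ttl=\d+\s+time=([\d.]+) ms")


def find_gaps(ping_output: str) -> list[tuple[int, int, float, float]]:
    """Return (first_missing, last_missing, rtt_before, rtt_after) for each gap."""
    replies = [(int(m.group(1)), float(m.group(2)))
               for m in REPLY_RE.finditer(ping_output)]
    gaps = []
    # Compare each reply with the next one; a jump of more than 1 is a gap.
    for (seq_a, rtt_a), (seq_b, rtt_b) in zip(replies, replies[1:]):
        if seq_b > seq_a + 1:
            gaps.append((seq_a + 1, seq_b - 1, rtt_a, rtt_b))
    return gaps


if __name__ == "__main__":
    sample = """
64 bytes from 10.0.0.6: icmp_seq=16 ttl=64 time=7.918 ms
64 bytes from 10.0.0.6: icmp_seq=40 ttl=64 time=8.476 ms
64 bytes from 10.0.0.6: icmp_seq=42 ttl=64 time=8.745 ms
"""
    for first, last, before, after in find_gaps(sample):
        count = last - first + 1
        print(f"seq {first}-{last}: {count} lost, RTT {before} ms -> {after} ms")
```

With ping's default one-second interval, the number of missing sequence numbers is roughly the outage length in seconds, which makes it easy to line gaps up against IPsec log timestamps.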

-

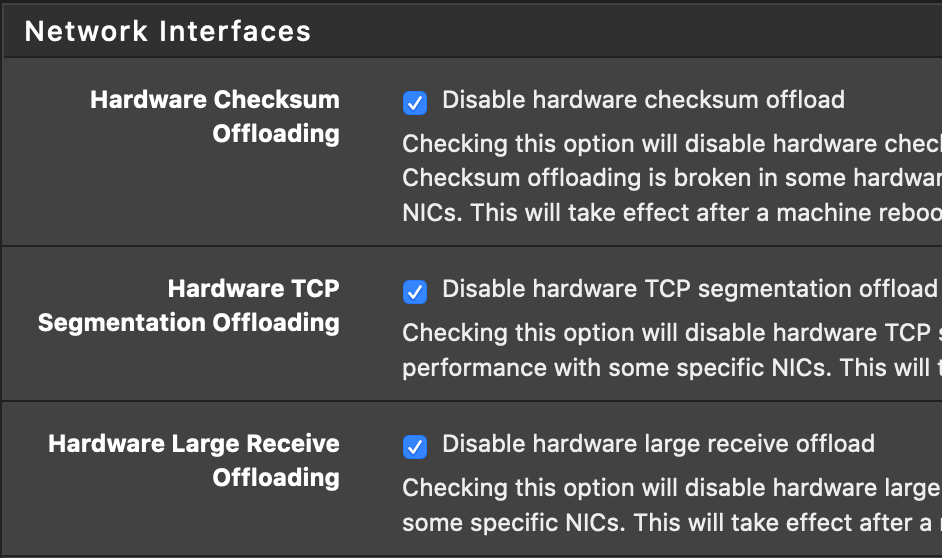

Have you checked these settings:

'Disable hardware checksum offload'

'Disable hardware TCP segmentation offload'

'Disable hardware large receive offload'

All of them should be checked.
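One way to confirm the checkboxes actually took effect is to look at the `options=<...>` line that FreeBSD's `ifconfig` prints for each NIC, which lists the currently enabled capabilities. The sketch below assumes that the three checkboxes map to the RXCSUM/TXCSUM, TSO4/TSO6 and LRO flags; that mapping and the sample interface text are assumptions for illustration.

```python
import re

# Capability names FreeBSD's ifconfig prints inside "options=<...>" that we
# ASSUME correspond to the three pfSense offload checkboxes.
OFFLOAD_FLAGS = {
    "RXCSUM", "TXCSUM", "RXCSUM_IPV6", "TXCSUM_IPV6",  # checksum offload
    "TSO4", "TSO6",                                    # TCP segmentation offload
    "LRO",                                             # large receive offload
}

# "options=" lists the capabilities that are currently enabled.
OPTIONS_RE = re.compile(r"options=[0-9a-fA-F]+<([^>]*)>")


def enabled_offloads(ifconfig_output: str) -> set[str]:
    """Return the offload capabilities still listed as enabled."""
    match = OPTIONS_RE.search(ifconfig_output)
    if match is None:
        return set()
    flags = set(match.group(1).split(","))
    return flags & OFFLOAD_FLAGS


if __name__ == "__main__":
    # Hypothetical output for one interface, not taken from the thread.
    sample = ("igb0: flags=8843<UP,BROADCAST,RUNNING> metric 0 mtu 1500\n"
              "\toptions=81249b<RXCSUM,TXCSUM,VLAN_MTU,LRO>\n")
    print(sorted(enabled_offloads(sample)))
```

An empty result means none of the listed offloads is active on that interface. On plain FreeBSD the same capabilities can be toggled with `ifconfig <if> -rxcsum -txcsum -tso -lro`; on pfSense the GUI checkboxes are the way to make the setting persistent.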

-

@pete35 Yes, everywhere

-

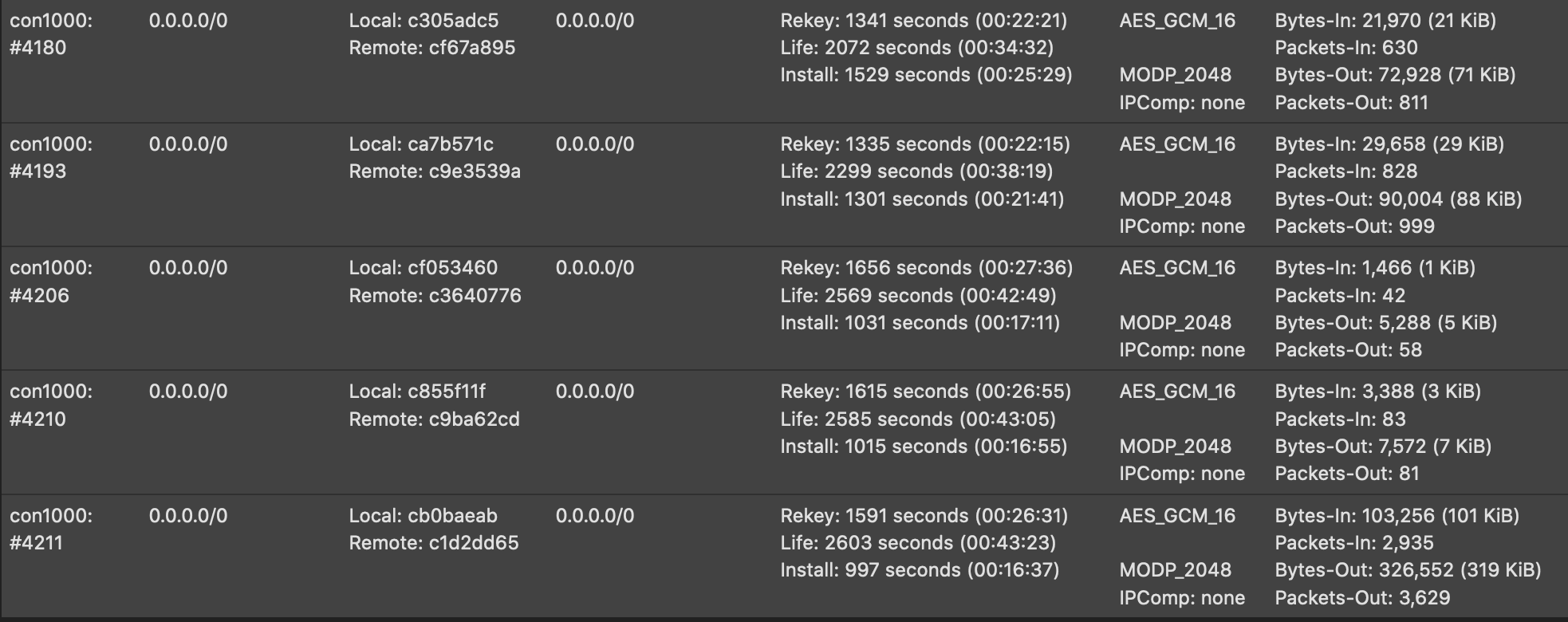

The packet loss in the ping log lasts about 14 seconds. You have disabled DPD (try configuring it). Is the tunnel renegotiated during that time? Do you see multiple child SA entries for the tunnel at that moment?

-

@pete35 Hi, we have also enabled DPD and still have the same problem.

As you know, multiple child SAs are "fairly normal"; even with P2 in tunnel mode we usually see multiple child SAs.

But, how can we fix this?

-

@pete35 Timeouts:

P1 28800

P2 3600

-

It may be that frequent rekeying (every few seconds) is causing the packet loss.

You need to reconfigure the rekey/reauth parameters to overcome this:

Side 1: IKEv2, rekey configured, reauth disabled, child SA close action set to restart/reconnect

Side 2: IKEv2, rekey configured, reauth disabled, responder only set, child SA close action left at default (clear)

Look at this: https://redmine.pfsense.org/issues/10176
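For a sense of when a correctly configured child SA should be rekeyed, the sketch below models strongSwan-style timers: rekeying is scheduled at `rekey_time` minus a random value of up to `rand_time`. The 90% / 10% defaults used in `default_timers` are an assumption about pfSense 2.5+ behaviour, and the helper names are hypothetical; check the values shown in your own P1/P2 advanced settings.

```python
from dataclasses import dataclass


@dataclass
class ChildSaTimers:
    life_time: int   # hard lifetime in seconds (P2 "Life Time")
    rekey_time: int  # when rekeying is scheduled (strongSwan rekey_time)
    rand_time: int   # upper bound of random jitter subtracted from rekey_time


def default_timers(life_time: int) -> ChildSaTimers:
    # ASSUMPTION: rekey at 90% of the lifetime with up to 10% jitter.
    return ChildSaTimers(life_time, life_time * 9 // 10, life_time // 10)


def rekey_window(t: ChildSaTimers) -> tuple[int, int]:
    """Earliest and latest second after install at which a rekey is started."""
    return t.rekey_time - t.rand_time, t.rekey_time


if __name__ == "__main__":
    for lifetime in (3600, 28800):  # the P2 and P1 values posted above
        print(lifetime, rekey_window(default_timers(lifetime)))
```

Under these assumptions a 3600 s P2 lifetime should produce one rekey roughly every 48 to 54 minutes, so a child SA replaced every few seconds points to something other than the lifetime settings.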

-

You wrote that you want to run OSPF over these VTI tunnels. Be aware that there are several issues with the OSPF integration in pfSense. If you have fewer than 50 routes, the effort to implement it isn't worth the outcome. On top of that, it isn't smooth sailing afterwards: data traffic is frequently interrupted by unstable tunnels, even on redundant routes.

-

@pete35 Hi Pete, I have applied the parameters you suggested and, so far, it is still losing packets.

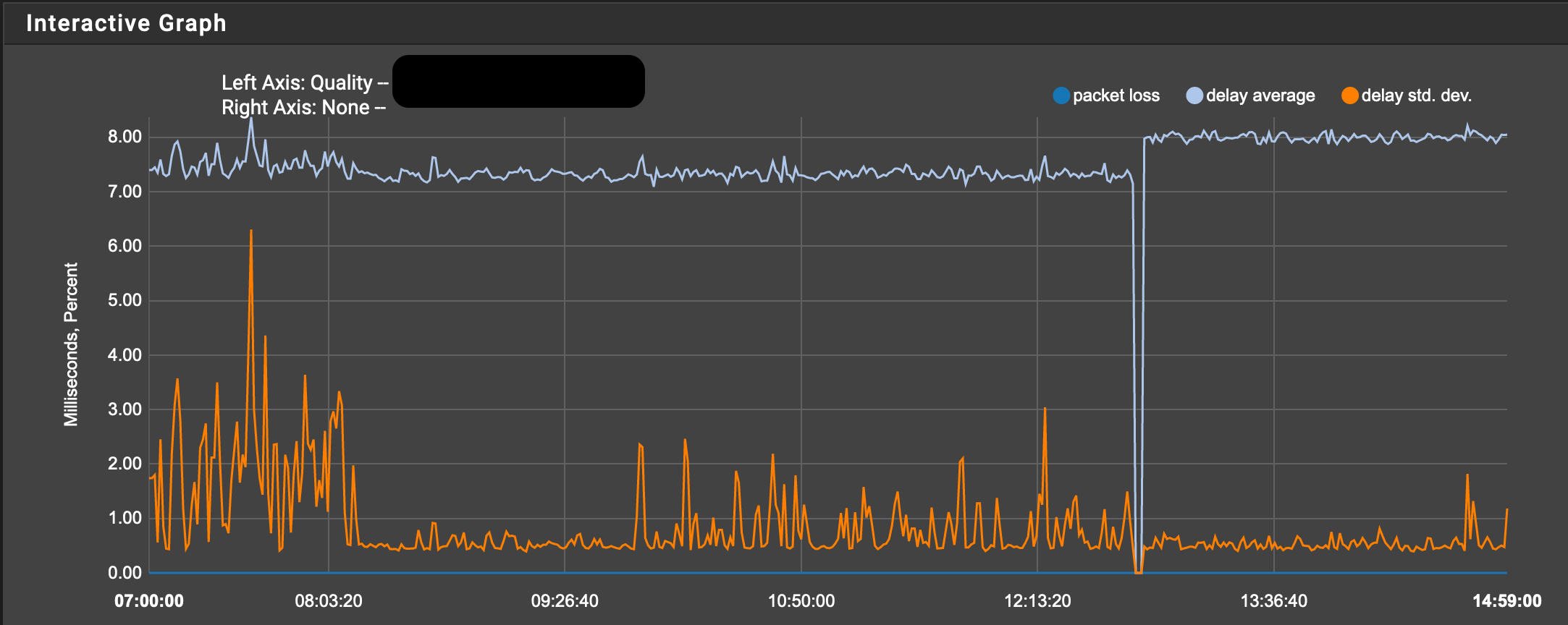

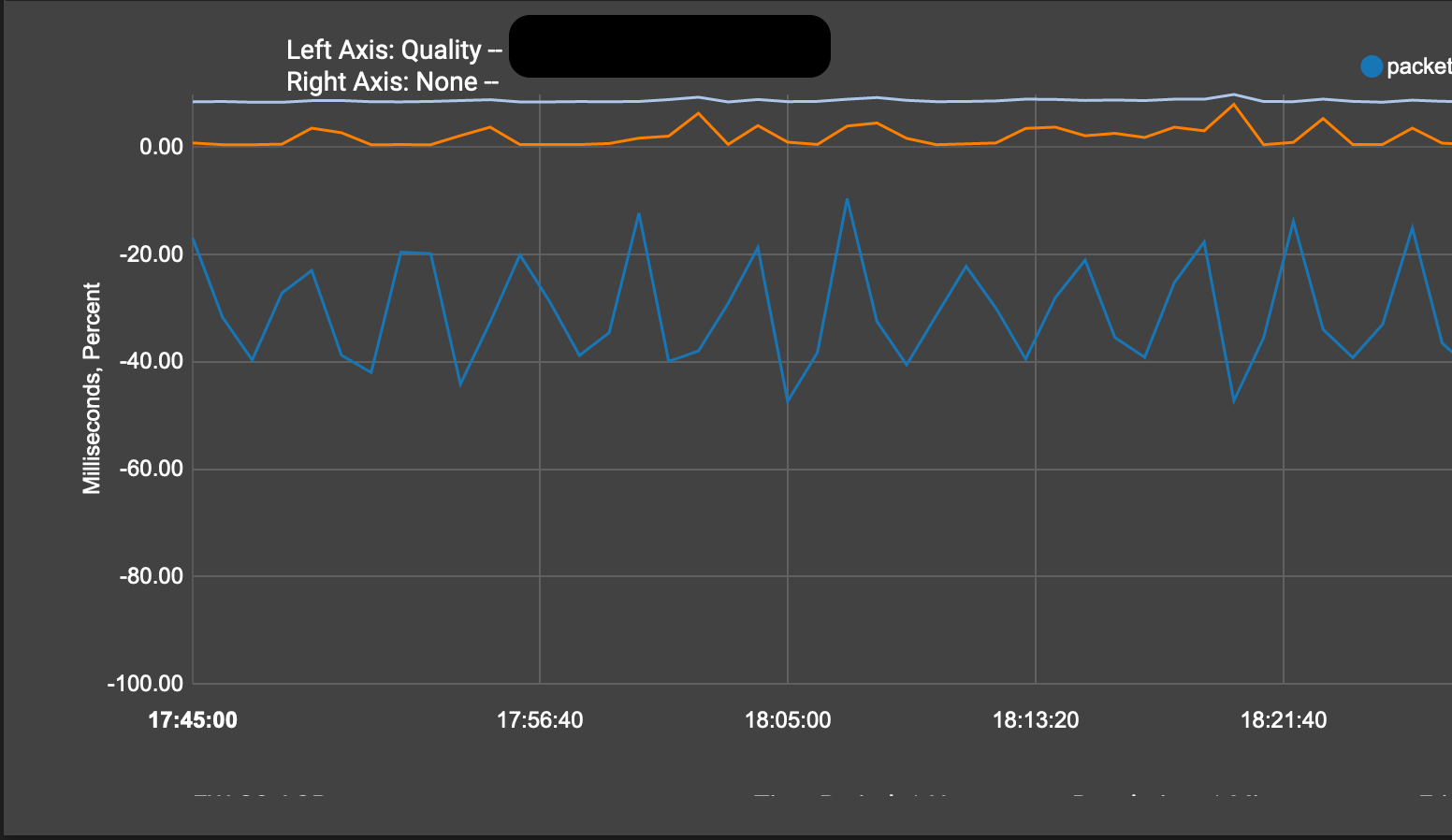

Anyway, if it were a renegotiation problem, it would happen every 3600 seconds, right? The loss graph we have is "practically" constant, don't you think?

Look at the timeline.

-

@pete35 I agree; that is why I am looking for link stability first, because implementing OSPF over unstable links might be the closest thing to hell...

-

The timestamps on your multiple SAs show a reinstall/rekey every 20 seconds. Every 3600 seconds would be fine, but that is not what is happening. Please check your SA situation again.
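Reading the install times off the SA list and computing the spacing makes the "every 20 seconds" pattern easy to verify. A minimal sketch, assuming the timestamps are copied by hand in HH:MM:SS form, all fall on the same day, and are in order; the 50%-of-lifetime cut-off is an arbitrary choice and the timestamps in the example are hypothetical.

```python
from datetime import datetime


def install_intervals(timestamps: list[str], fmt: str = "%H:%M:%S") -> list[float]:
    """Seconds between consecutive child SA install/rekey timestamps."""
    times = [datetime.strptime(ts, fmt) for ts in timestamps]
    return [(later - earlier).total_seconds()
            for earlier, later in zip(times, times[1:])]


def too_frequent(intervals: list[float], lifetime: int,
                 fraction: float = 0.5) -> list[float]:
    """Intervals shorter than `fraction` of the configured lifetime."""
    return [i for i in intervals if i < lifetime * fraction]


if __name__ == "__main__":
    # Hypothetical install times, not real data from this thread.
    seen = ["10:00:00", "10:00:20", "10:00:41", "10:01:01"]
    gaps = install_intervals(seen)
    print(gaps, too_frequent(gaps, lifetime=3600))
```

Any interval flagged here is far shorter than a 3600 s lifetime can explain and is worth correlating with the ping gaps.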

-

About OSPF: not only unstable links cause interruptions. Even simply adding or removing a route interrupts the whole OSPF system, because all pfSense routing devices rebuild their routing tables by restarting. Not only do the OSPF routes disappear, but also every other route in the routing table. The interruption time depends on the settings, but around 30 seconds is quite usual. If your applications can survive that, you are lucky.

-

@pete35 Perfect

-

@pete35 Yes, this is an issue. To minimize these situations, our intention is to increase the number of areas and tune the dead intervals and execution timeouts.

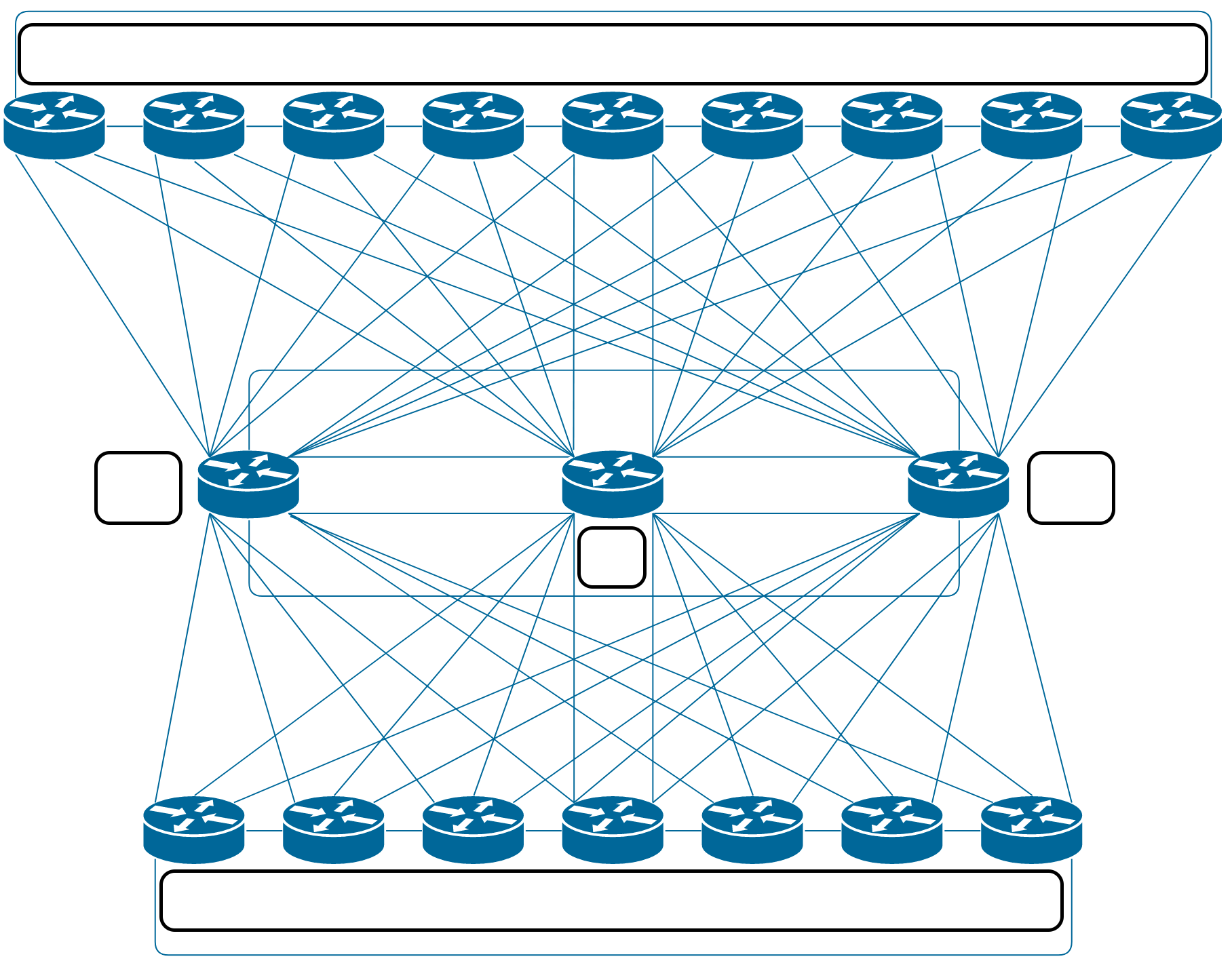

The main objective of the project is to provide high availability across two IPsec tunnels: we would like to have one IPsec tunnel on WAN1 and another IPsec tunnel (with the same destination) on WAN2. There are more reasons why we would like to implement dynamic routing, but that could be a separate topic.

Do you recommend another solution?

-

If you want HA with multi-WAN, look at gateway groups; the implementation is usually straightforward and painless. However, this only works with a small number of routes. How many sites do you have?
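Gateway groups choose among members by tier: lower tiers are preferred, and members sharing the same tier are load balanced. The sketch below is a conceptual model of that selection rule only, not pfSense code; the gateway names and the simple up/down flag are hypothetical (pfSense can also mark a member down on packet loss or high latency, depending on the trigger level).

```python
from dataclasses import dataclass


@dataclass
class Gateway:
    name: str
    tier: int   # 1 = most preferred
    up: bool    # result of gateway monitoring


def active_gateways(group: list[Gateway]) -> list[str]:
    """Members that would carry traffic: every 'up' member of the lowest
    tier that still has an 'up' member (several means load balancing)."""
    up = [g for g in group if g.up]
    if not up:
        return []
    best = min(g.tier for g in up)
    return [g.name for g in up if g.tier == best]


if __name__ == "__main__":
    # Hypothetical VTI gateways, one tunnel per WAN.
    group = [Gateway("VTI_WAN1", 1, True), Gateway("VTI_WAN2", 2, True)]
    print(active_gateways(group))
    group[0].up = False  # tunnel over WAN1 fails
    print(active_gateways(group))
```

This is why the approach scales poorly: each route that should fail over needs to point at such a group, so the configuration grows with the number of remote networks.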

Is the packet loss gone now? If not, post the detailed configurations for both sites so we can check all the parameters again.

-

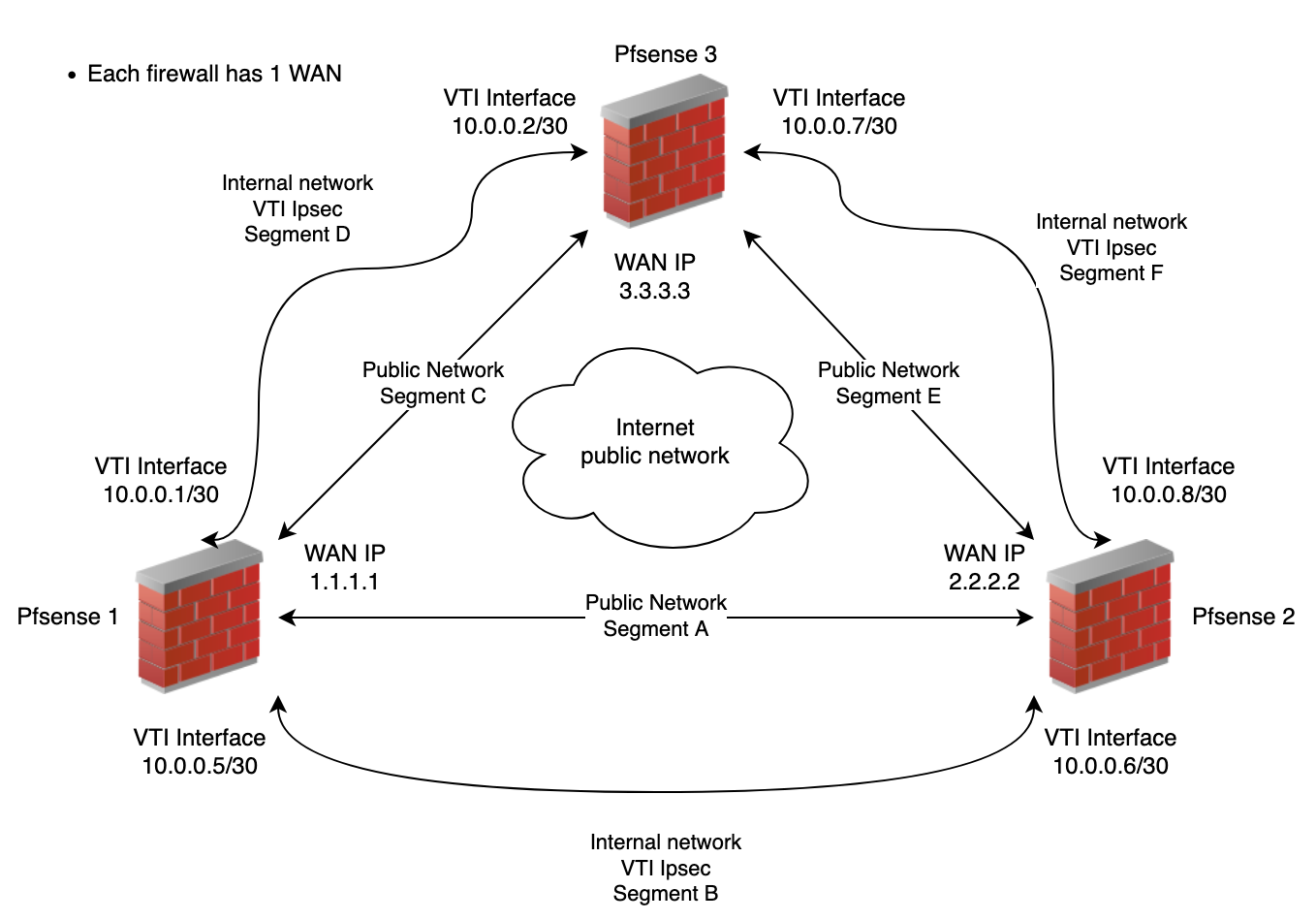

@pete35 Hi Pete, we are looking for a highly available IPsec solution rather than a multi-WAN solution. Another option we are evaluating is to use VTI P2s and configure gateway groups for the routes that go through the VTIs.

Schema:

To the previous schema, add 2 WANs per site; each site also has 2 pfSense firewalls in HA. The main problem is that they want point-to-point connections between all sites, and we want to avoid that with an intermediate hop.
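The arithmetic behind avoiding a full mesh is quick to sketch. Assuming one tunnel per WAN between each connected pair of sites (as described above) and a hub-and-spoke design where one site acts as the intermediate hop, the tunnel counts compare as follows. The site count in the example is hypothetical, since the thread does not give one, and the HA pairs are not modelled.

```python
def tunnel_count(sites: int, tunnels_per_pair: int, full_mesh: bool) -> int:
    """Number of IPsec tunnels to configure.

    Full mesh connects every pair of sites: n * (n - 1) / 2 pairs.
    Hub-and-spoke connects each other site to one hub: n - 1 pairs.
    """
    pairs = sites * (sites - 1) // 2 if full_mesh else sites - 1
    return pairs * tunnels_per_pair


if __name__ == "__main__":
    n = 6  # hypothetical number of sites
    print("full mesh:", tunnel_count(n, 2, full_mesh=True))
    print("hub and spoke:", tunnel_count(n, 2, full_mesh=False))
```

The full mesh grows quadratically with the number of sites while hub-and-spoke grows linearly, at the cost of an extra hop and a dependency on the hub.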

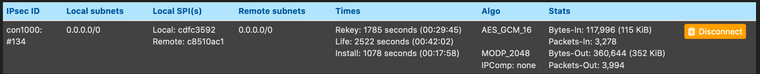

On the other hand, the tunnel still shows the same level of packet loss, although SA stability has improved.

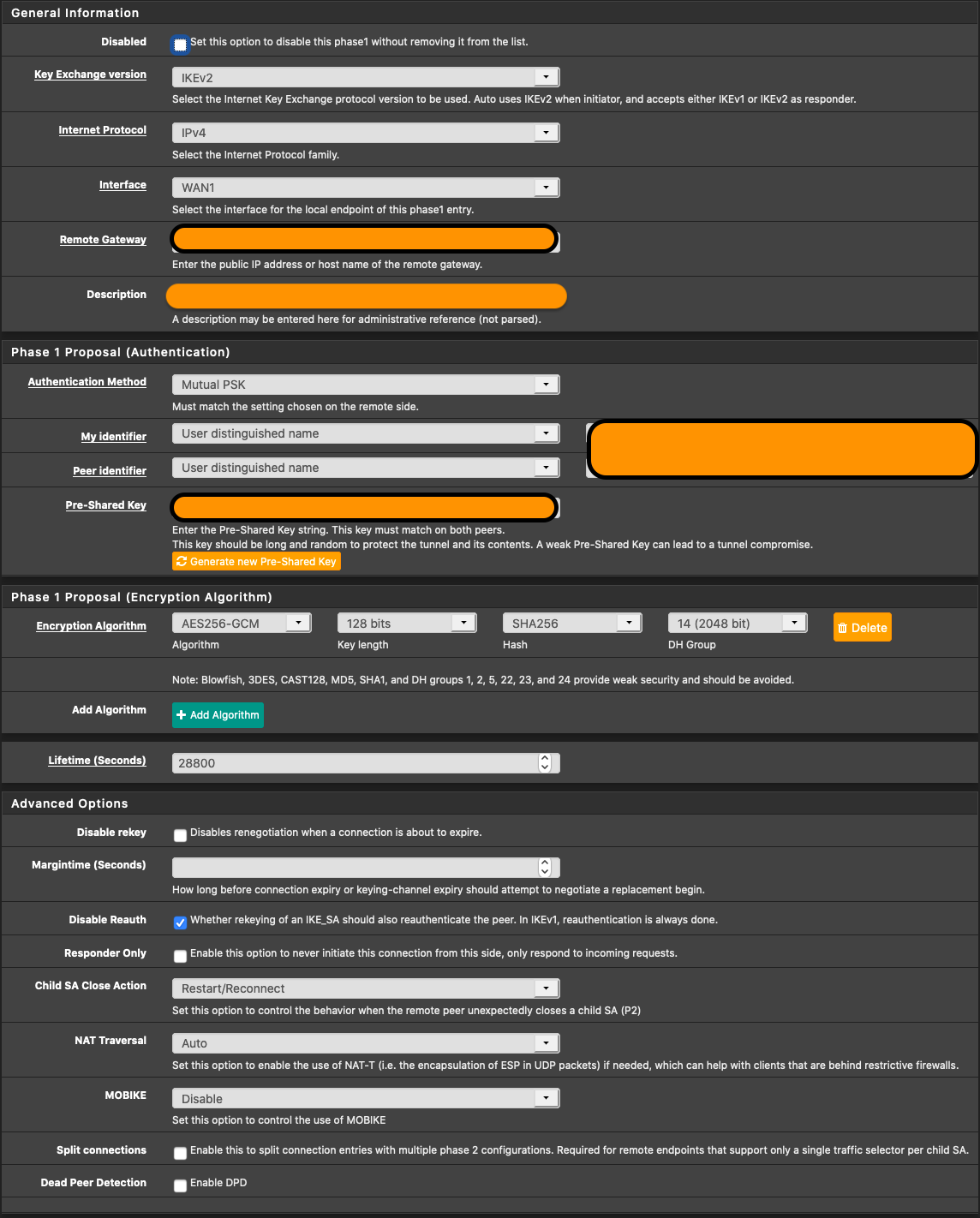

P1 Configuration

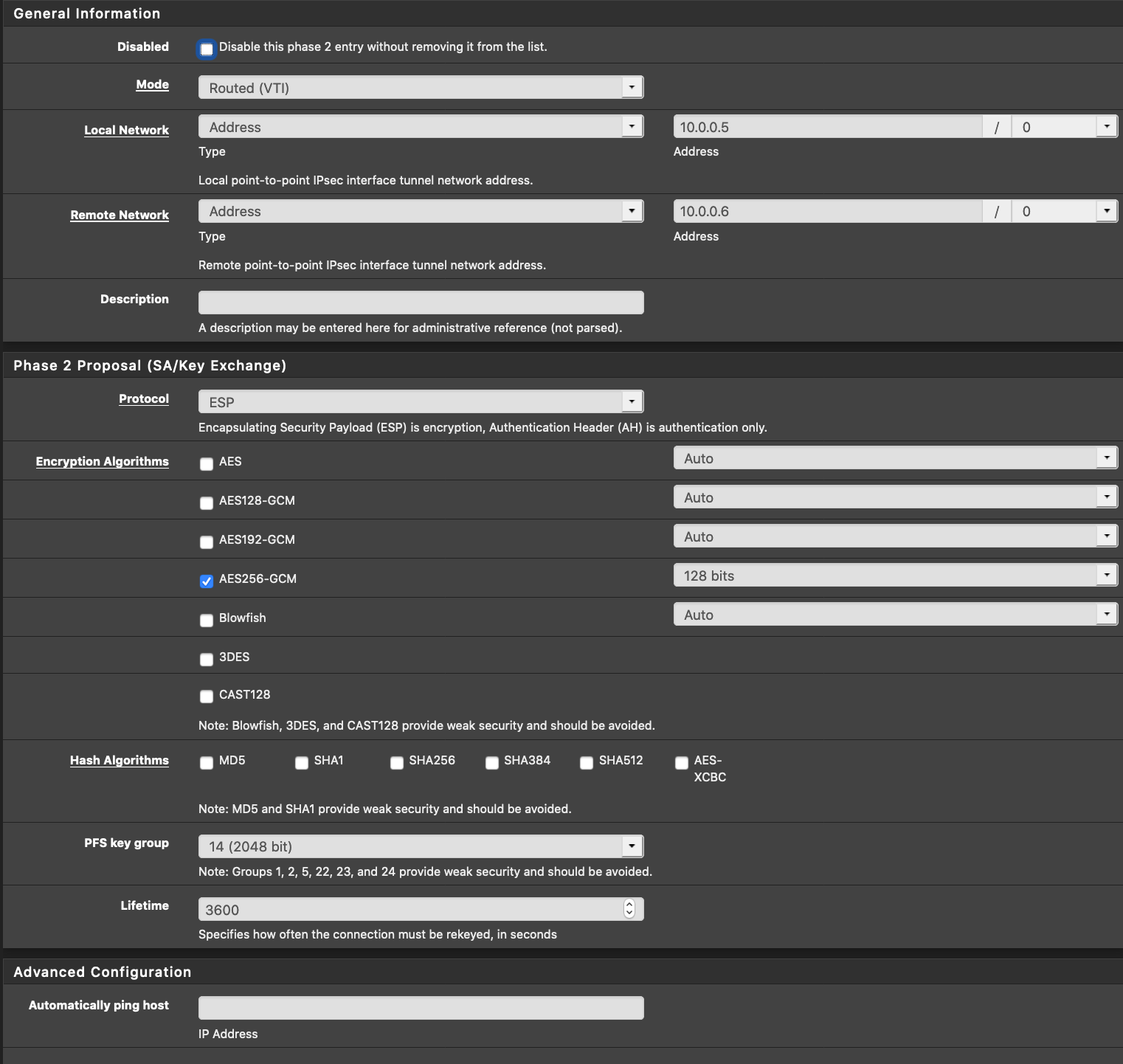

P2 Configuration

-

In my experience, IPsec/VTI/OSPF together with pfSense HA isn't a reliable solution at all. Each of these features has its own problems in pfSense, and together they will be a mess. Maybe settle for less functionality or choose another router.

As for the packet loss: what kind of hardware do you use? APUs are fine, but they need the right BIOS. Please configure everything exactly according to the pfSense documentation: DPD is missing, you don't choose a hash in P2 (which shouldn't be a problem, but who knows), configure MTU clamping to 1400, and set up the rekey/reauth functionality as shown above. Do the same on the other side, and rule out any double NAT on the internet connection. Show your configuration for both sides in detail; it must match. Next, show the routing tables and the IPsec syslog. Don't use VMs for pfSense; Netgate devices are preferred.
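The reason clamping matters is ESP overhead: each inner packet gains an outer IP header, the ESP header, an IV, padding and an ICV. The sketch below computes the largest inner packet that still fits in one outer packet, assuming tunnel-mode ESP over IPv4 with no IP options; the cipher parameters in the example (AES-CBC with a 16-byte HMAC-SHA256 ICV, AES-GCM with an 8-byte IV and 16-byte ICV) are assumptions, so plug in whatever your P2 actually negotiates. The thread does not say exactly how pfSense applies the 1400 value, so the result is only a ceiling to compare against.

```python
def max_inner_packet(outer_mtu: int, iv: int, icv: int, block: int,
                     nat_t: bool = False) -> int:
    """Largest inner IP packet that fits in one tunnel-mode ESP packet
    (IPv4 outer header, no options)."""
    overhead = 20 + 8 + iv + icv   # outer IPv4 + ESP SPI/sequence + IV + ICV
    if nat_t:
        overhead += 8              # UDP encapsulation header (NAT traversal)
    # The encrypted part (payload + padding + 2 trailer bytes) must be a
    # multiple of the cipher block size (4 bytes minimum for ESP).
    room = (outer_mtu - overhead) // block * block
    return room - 2                # minus pad-length and next-header bytes


if __name__ == "__main__":
    cbc = max_inner_packet(1500, iv=16, icv=16, block=16)
    gcm = max_inner_packet(1500, iv=8, icv=16, block=4)
    cbc_nat = max_inner_packet(1500, iv=16, icv=16, block=16, nat_t=True)
    # TCP MSS = inner packet minus 20 (IPv4) and 20 (TCP) header bytes.
    for label, size in (("AES-CBC", cbc), ("AES-GCM", gcm), ("AES-CBC+NAT-T", cbc_nat)):
        print(label, size, "-> MSS", size - 40)
```

Anything above the computed size has to be fragmented or dropped, which is exactly the kind of silent, size-dependent loss that clamping is meant to prevent.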

If everything above is configured correctly and there is still packet loss, post the ping logs and some Wireshark packet captures from the WAN side, since we can rule out pfSense at that point.
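Captures from both WANs become much more useful when compared by ESP sequence number: every ESP packet carries an SPI and a sequence number that increments per SA. If a number appears on the sending WAN but not on the receiving WAN, the loss is on the path between them; if it never leaves the sender, the loss is inside the sending firewall. A minimal sketch, assuming each capture has been exported as two whitespace-separated columns (for example with `tshark -r capture.pcap -Y esp -T fields -e esp.spi -e esp.sequence`); the helper names are hypothetical and the parsing may need adjusting to your export format.

```python
def parse_fields(text: str) -> list[tuple[str, int]]:
    """Parse 'SPI SEQUENCE' lines into (spi, seq) tuples."""
    rows = []
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            # Normalise SPI case so both captures compare equal.
            rows.append((parts[0].lower(), int(parts[1])))
    return rows


def missing_esp(sent: list[tuple[str, int]],
                received: list[tuple[str, int]]) -> dict[str, list[int]]:
    """ESP sequence numbers seen at the sender but not at the receiver, per SPI."""
    got = set(received)
    lost: dict[str, list[int]] = {}
    for spi, seq in sent:
        if (spi, seq) not in got:
            lost.setdefault(spi, []).append(seq)
    return lost


if __name__ == "__main__":
    # Hypothetical exports from the two WAN captures.
    site_a_wan = "0xc1a2b3d4\t101\n0xc1a2b3d4\t102\n0xc1a2b3d4\t103\n"
    site_b_wan = "0xc1a2b3d4\t101\n0xc1a2b3d4\t103\n"
    print(missing_esp(parse_fields(site_a_wan), parse_fields(site_b_wan)))
```

Run it once per direction, since each direction uses its own SPI.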

I have several VTI tunnels with OSPF up and running, and when configured correctly there is no packet loss.

-

@pete35 Hi Pete, answers:

-We work with several APU versions (2, 3 and 4), all with 4 GB of RAM.

-We only have one pfSense VM in the architecture, and coincidentally the VTIs that terminate on that firewall are the ones that lose the fewest packets.

-DPD: we have tested with it both enabled and disabled. No change.

-Hash in P2: remember that we are moving from tunnel mode to VTI mode with the same configuration. In tunnel mode it works perfectly (same hardware included), while in VTI mode there is packet loss.

-MTU clamping set to 1400. Let's see if the behavior changes.

-pfSense has only one NAT toward the internet.

-We are going to capture traffic to find out where the ICMP packets are lost.

-Remember that we do not have OSPF set up (yet); the packet loss always occurs, even on freshly reinstalled systems on dedicated hardware. In fact, we have now bought 6 new computers to run tests in the lab.

Thanks for your tips.

-

Did you resolve this issue?

We set up some IPsec VTIs and were seeing random SMB connection issues over the VPN. It seems this was/is the root cause: "IPSec in transport mode between FreeBSD hosts blackholes TCP traffic"

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=242744

-

@metisit

A little late with this reply, but for anyone coming across this: that bug report concerns racoon, not strongSwan.