Using 2 public addresses to hide a single internal IP and get replied from the correct one

-

@johnpoz Huh I just checked and you are right, only the first packet goes to the load balancer, and the following ones go to the backend directly..... that's not what I wanted.

And yes it's UDP traffic.

Do you know how I could achieve this?

-

If I remove the 1:1 on the backend, everything goes into the load balancer (correct), but the backend's reply never reaches me (the client).

-

So your goal is to send all traffic hitting your wan IP on port XYZ to nginx load balancer at .211.. which then sends this traffic to .213..

And you want 213 to return traffic direct back to pfsense. But pfsense to continue to send all traffic that hits its wan on to .211?

So asymmetrical traffic flow..

hmmmm - yeah going to need more coffee, if not beers... Off the top of my head, I don't really think such a setup is possible??

Once your return traffic is allowed from .213, not sure new traffic would even go to 211, because pfsense would keep track of the conversation.. Hmmmmm

-

@johnpoz I see, so the reason I only receive the first packet on the load balancer and the following ones directly on the backends is that the state is already there and the 1:1 NAT is then applied for my source IP? But new IPs will also have to send their first packet to the LB, right?

Could I then remove the option to keep the state and keep the 1:1 on the backend, and that should deliver everything to the load balancer even if I already queried it?

-

@adrianx said in Using 2 public addresses to hide a single internal IP and get replied from the correct one:

Could I then remove the option to keep the state and keep the 1:1 on the backend

Not sure such a thing is possible??

Why can you not just return traffic back to nginx? And let it send traffic back to source IP 1.2.3.4? That is normally how it would be set up.. And that would be just simple port forwards on pfsense.

-

@adrianx

So how does the server respond? Check with a packet capture.

It should use the VIP as the source address in its reply packets. I suspect that is not the case.

-

@johnpoz The reason is because I'm doing this to distribute the load of incoming UDP requests for a UDP flood attack with spoofed IPs, so I will get around 50000 requests from different IPs per second. This saturates the NGINX leaving it without ports to bind when communicating with the backends. That's why I want to delegate the reply to the backend. Do you see my point?

-

wouldn't you have the same problem with pfsense..

Confused how that would solve the problem?

-

@viragomann So with packet capture on the LAN, I see that the backend replies this:

15:32:57.557414 IP 192.168.1.213.7777 > Client.Public.IP.60428: UDP, length 15

So not using the Virtual IP. Is there a way to make it use the public IP?
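To check this across a whole capture rather than one line, the source address can be pulled out of each tcpdump line and compared with the VIP. This is a minimal sketch, assuming the one-line `IP src.port > dst.port:` format shown above. The function names are made up for illustration, and `192.168.1.211` as the VIP is an assumption based on the load balancer sitting at .211.

```python
import re

# Matches the address part of a tcpdump line such as:
# "15:32:57.557414 IP 192.168.1.213.7777 > Client.Public.IP.60428: UDP, length 15"
LINE_RE = re.compile(
    r"IP (?P<src>\S+)\.(?P<sport>\d+) > (?P<dst>\S+)\.(?P<dport>\d+):"
)


def reply_source(line):
    """Return (source address, source port) from one tcpdump line."""
    match = LINE_RE.search(line)
    if match is None:
        raise ValueError("not a recognised tcpdump IP line")
    return match.group("src"), int(match.group("sport"))


def uses_vip(line, vip):
    """True if the packet on this line was sent from the VIP."""
    src, _ = reply_source(line)
    return src == vip


# The line from the capture above; the VIP value is an assumption.
SAMPLE = "15:32:57.557414 IP 192.168.1.213.7777 > Client.Public.IP.60428: UDP, length 15"
ASSUMED_VIP = "192.168.1.211"
```

With the captured line, `uses_vip(SAMPLE, ASSUMED_VIP)` is false, which matches the observation that the backend answers from its own address.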

-

@adrianx

So DSR is not configured correctly on the servers. From the linked site above:

the service VIP must be configured on a loopback interface on each backend and must not answer to ARP requests
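On the application side, the same idea can be illustrated in Python: a UDP socket bound to one specific address sends its replies with that address as the source. This is only a sketch, not the configuration from the linked site. The VIP `192.168.1.211` is an assumption (port 7777 is taken from the capture above), and the VIP still has to exist on the backend's loopback, otherwise `bind()` fails with an `OSError`.

```python
import socket


def make_service_socket(vip, port):
    """Create a UDP socket bound to one specific local address.

    Replies sent through this socket carry `vip` as their source address,
    instead of whatever address the routing table would otherwise pick.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((vip, port))
    return sock


def handle_one(sock):
    """Receive one datagram and echo it back from the bound address."""
    data, client = sock.recvfrom(2048)
    sock.sendto(data, client)
    return client


# Hypothetical usage on a backend (addresses are assumptions):
#   sock = make_service_socket("192.168.1.211", 7777)
#   while True:
#       handle_one(sock)
```

A service that binds to the wildcard address instead may answer from its primary interface address, depending on how the application and the routing table choose the source.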

-

^ exactly.

But I still don't see how that really solves a state exhaustion issue.. No matter how many IPs you send the traffic to behind pfsense.. Pfsense is natting to its public IP, which has a limit on how many states it can have.

The way to solve state exhaustion issue would be to filter the traffic that is "bad" before a state is created..

-

I'd suppose, if the backend servers are configured correctly for DSR (responding using the VIP and not responding to ARP requests) the states will be fine.

However, I've never set up something like that.

-

so I will get around 50000 requests from different IPs per second

He still has his public IP with states.. It doesn't matter how many IPs he sends the traffic to behind it.. While sure, the local boxes would have fewer states.. His public IP would still have the states.. At 50k a second that is going to burn through states like crazy..
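To put rough numbers on that: if every spoofed source creates one tracked entry that lives until it times out, the table settles at about rate × timeout entries. A back-of-the-envelope sketch follows; the 50,000 per second figure is from this thread, while the 60 second timeout and the 1,000,000 entry table are hypothetical values picked only for the arithmetic.

```python
def steady_state_entries(new_flows_per_sec, timeout_secs):
    """Entries alive at once if each flow is tracked for timeout_secs."""
    return new_flows_per_sec * timeout_secs


def max_timeout_for_table(table_size, new_flows_per_sec):
    """Longest timeout (seconds) that keeps the table from filling up."""
    return table_size / new_flows_per_sec


RATE = 50_000  # new spoofed sources per second, from the thread

# Hypothetical: each entry is tracked for 60 seconds.
entries = steady_state_entries(RATE, 60)  # 3,000,000 entries

# Hypothetical: the state table holds 1,000,000 entries.
timeout = max_timeout_for_table(1_000_000, RATE)  # 20.0 seconds
```

Under these assumptions, a table of one million entries only keeps up if entries expire within about 20 seconds.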

I don't really see how doing something like this could solve a state exhaustion issue to be honest..

Well lets not really call them states if they are UDP... But pf tracks them like they were.. You can set an option in pf for how long these are tracked..

But yeah I believe using the VIP on these end boxes for the IP of the load balancer is how such a setup is to be done. To solve the asymmetrical flow problem.. Since pfsense will only send traffic to what it thinks is 1 IP.. And the return traffic to pfsense will be coming from that same IP. As far as pfsense knows, since its source would be the vip address.
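The asymmetry can be pictured with a toy model of stateful tracking. This is not how pf is actually implemented, just a sketch of the idea that a reply has to be the exact reverse of a tracked flow. The client address `198.51.100.7` is a made-up documentation address, and the .211 and .213 addresses assume the 192.168.1.0/24 LAN seen in the capture.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str
    sport: int
    dst: str
    dport: int


class ToyStateTable:
    """Toy stand-in for a firewall state table (not pf's real logic)."""

    def __init__(self):
        self._states = set()

    def allow_inbound(self, client, cport, server, sport):
        """Record a forwarded flow from the client to an internal server."""
        self._states.add(Flow(client, cport, server, sport))

    def reply_matches(self, src, sport, dst, dport):
        """A reply matches only if it is the reverse of a tracked flow."""
        return Flow(dst, dport, src, sport) in self._states


table = ToyStateTable()
# The firewall forwards the client's packet to the load balancer at .211.
table.allow_inbound("198.51.100.7", 60428, "192.168.1.211", 7777)

# Reply sourced from the backend's own address: no matching flow.
from_backend = table.reply_matches("192.168.1.213", 7777, "198.51.100.7", 60428)

# Reply sourced from the VIP (the load balancer's address): matches.
from_vip = table.reply_matches("192.168.1.211", 7777, "198.51.100.7", 60428)
```

In this model `from_backend` is false and `from_vip` is true, which is the reason the backends need to answer with the VIP as their source.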

-

@johnpoz said in Using 2 public addresses to hide a single internal IP and get replied from the correct one:

so I will get around 50000 requests from different IPs per second

Yes, you're absolutely right. I didn't realize that he really has such a high load and may be exhausting the state table.

However, with enough memory and CPU power, increasing the state table size and shortening the state timeouts, it may be doable.

-

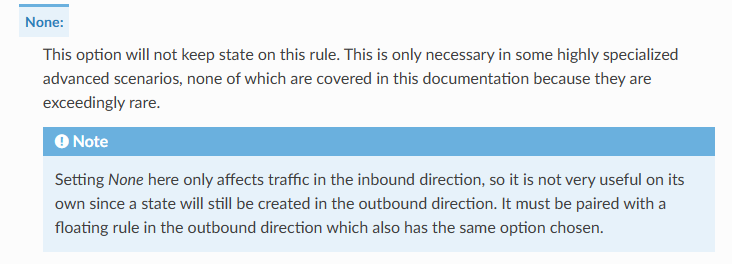

Regarding the "states" for the public IP, I can modify the associated rule with the port forwarding and choose "State type" as "none", and that would solve it, no?

-

Hmmm?

https://docs.netgate.com/pfsense/en/latest/firewall/configure.html

-

@johnpoz Yes, that, plus also not keeping it in the outbound, no? And how do I configure UDP state expiration?

-

Well if you don't keep any states for the rule - it shouldn't matter.. But in the advanced section of the rule you can set the timeout option for states.. That would also need to be done on an outbound rule that matches.

I am not 100% sure if that would also pertain to what pf does for udp tracking - I would assume so..

-

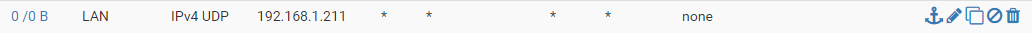

@johnpoz So the Floating rule for the Outbound, should be something like:

Direction: In. State type: none

So traffic going into the LAN from the internal load balancer, intended to get towards the wan to the outside world... no? Or I'm getting it wrong?

-

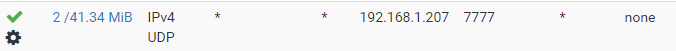

@johnpoz Also, any idea why it still creates states even if I have the port forward rule set to "state type: none"? See:

(DELETED TO AVOID SHOWING PUBLIC IP)