pfTop hangs my GUI in 2.5.2 RC

-

All the same, see:

pkg info -x pfSense | grep 2.5.2

pfSense-2.5.2.r.20210613.1712

pfSense-base-2.5.2.r.20210613.1712

pfSense-default-config-2.5.2.r.20210613.1712

pfSense-kernel-pfSense-2.5.2.r.20210613.1712

pfSense-rc-2.5.2.r.20210613.1712

pfSense-repo-2.5.2.r.20210613.1712

uname -a

FreeBSD pfsense2.... 12.2-STABLE FreeBSD 12.2-STABLE RELENG_2_5_2-n226655-2ce2a4a2f71 pfSense amd64

ls -l `which pftop`

-r-xr-xr-x 1 root wheel 192352 Jun 10 23:54 /usr/local/sbin/pftop

sha256 `which pftop`

SHA256 (/usr/local/sbin/pftop) = 0c525409788804d8d578068cb79408cd4f209dc384ddf65a8865eefe481e2a52

ldd `which pftop`

/usr/local/sbin/pftop:

libncurses.so.8 => /lib/libncurses.so.8 (0x800279000)

libc.so.7 => /lib/libc.so.7 (0x8002d2000)

-

Maybe connected:

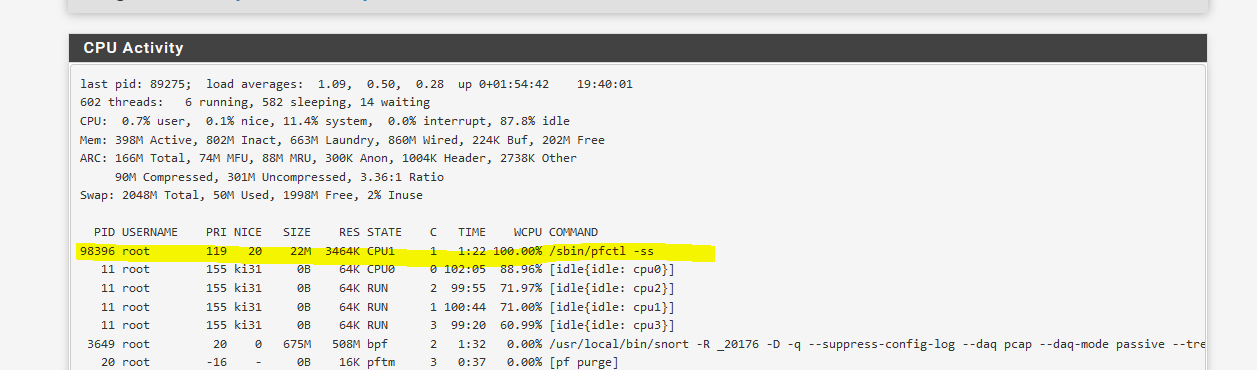

It hangs my FW when there are 4 processes like this...

-

Maybe:

ps -ax | grep pfctl

8530 - L 42:21.31 /sbin/pfctl -i ovpnc3 -Fs

17907 - I 0:00.00 sh -c /sbin/pfctl -vvss | /usr/bin/grep creator | /usr/bin/cut -d" " -f7 | /usr/bin/sort -u

18015 - R 337:49.40 /sbin/pfctl -vvss

38322 - RN 365:07.87 /sbin/pfctl -ss

61701 - I 0:00.00 sh -c /sbin/pfctl -vvss | /usr/bin/grep creator | /usr/bin/cut -d" " -f7 | /usr/bin/sort -u

61787 - L 17:56.93 /sbin/pfctl -vvss

82409 - I 0:00.00 sh -c /sbin/pfctl -vvss | /usr/bin/grep creator | /usr/bin/cut -d" " -f7 | /usr/bin/sort -u

82711 - L 198:37.49 /sbin/pfctl -vvss

84187 - RN 385:23.46 /sbin/pfctl -ss

95032 - I 0:00.00 sh -c /sbin/pfctl -vvss | /usr/bin/grep creator | /usr/bin/cut -d" " -f7 | /usr/bin/sort -u

95080 - R 281:20.27 /sbin/pfctl -vvss

43823 0 S+ 0:00.00 grep pfctl

-

Indeed pfctl and pftop are not very happy, when looking at cpu usage...

last pid: 46705; load averages: 4.63, 5.26, 5.75 up 0+07:02:25 19:51:31

119 processes: 9 running, 106 sleeping, 1 zombie, 3 lock

CPU 0: 0.7% user, 0.0% nice, 63.3% system, 0.0% interrupt, 36.0% idle

CPU 1: 0.0% user, 0.0% nice, 87.4% system, 0.0% interrupt, 12.6% idle

CPU 2: 0.0% user, 0.0% nice, 51.1% system, 0.0% interrupt, 48.9% idle

CPU 3: 0.0% user, 0.0% nice, 74.8% system, 0.0% interrupt, 25.2% idle

CPU 4: 0.0% user, 0.0% nice, 79.7% system, 0.0% interrupt, 20.3% idle

CPU 5: 0.0% user, 0.0% nice, 63.9% system, 0.0% interrupt, 36.1% idle

CPU 6: 0.0% user, 0.0% nice, 39.5% system, 0.0% interrupt, 60.5% idle

CPU 7: 0.0% user, 0.0% nice, 51.9% system, 0.0% interrupt, 48.1% idle

Mem: 189M Active, 650M Inact, 4241M Wired, 165M Buf, 10G Free

Swap: 3656M Total, 3656M Free

PID USERNAME THR PRI NICE SIZE RES STATE C TIME WCPU COMMAND

18015 root 1 20 0 503M 61M CPU7 7 345:55 99.96% pfctl

38322 root 1 20 20 134M 18M CPU4 4 373:08 99.95% pfctl

84187 root 1 20 20 134M 18M CPU3 3 393:25 99.91% pfctl

95080 root 1 80 0 503M 61M RUN 5 289:22 99.90% pfctl

39212 root 1 20 0 112M 91M CPU6 6 18:23 62.80% pftop

39821 root 1 80 0 112M 92M *pf_id 4 15:57 38.04% pftop

30353 root 1 72 0 146M 3636K RUN 7 2:32 2.41% pftop

11621 root 1 20 0 113M 98M *pf_id 5 15:43 2.41% pftop

40726 root 1 75 0 112M 3264K CPU1 1 18:52 1.00% pftop

82711 root 1 81 0 503M 61M CPU0 0 199:33 0.95% pfctl

18221 root 1 20 0 17M 7284K select 4 1:21 0.37% openvpn

45830 root 1 20 0 13M 3740K CPU5 5 0:00 0.07% top

93616 unbound 8 20 0 232M 50M kqread 7 0:06 0.06% unbound

-

Can you share your /tmp/rules.debug?

Do you have any packages installed?

Any large pf tables/aliases? If so, how large?

-

@jimp

Packages

acme security 0.6.9_3 Automated Certificate Management Environment, for automated use of LetsEncrypt certificates.

Package Dependencies:

pecl-ssh2-1.3.1 socat-1.7.4.1_1 php74-7.4.20 php74-ftp-7.4.20

Cron sysutils 0.3.7_5 The cron utility is used to manage commands on a schedule.

haproxy-devel net 0.62_3 The Reliable, High Performance TCP/HTTP(S) Load Balancer.

This package implements the TCP, HTTP and HTTPS balancing features from haproxy.

Supports ACLs for smart backend switching.

Package Dependencies:

haproxy-2.2.14

iperf benchmarks 3.0.2_5 Iperf is a tool for testing network throughput, loss, and jitter.

Package Dependencies:

iperf3-3.10.1

openvpn-client-export security 1.6_1 Allows a pre-configured OpenVPN Windows Client or Mac OS X's Viscosity configuration bundle to be exported directly from pfSense.

Package Dependencies:

openvpn-client-export-2.5.2 openvpn-2.5.2_2 zip-3.0_1 p7zip-16.02_3

snort

No large tables at all. The largest has about 5 entries.

-

I can share files and my screen, but not in this forum. Too many IPs in there.

Packages:

FRR, FreeRadius, OpenVPN Client Export

Aliases:

A couple of our networks (like 12x 192.168.0.0/24 networks) and a couple of DNS aliases.

I did have "Block bogon networks" on in the interface config and have disabled it now, but I think you have to reboot...

-

Is this bare metal hardware or a VM?

How many CPUs/cores?

-

Bare metal SuperMicro:

Intel(R) Atom(TM) CPU C3758 @ 2.20GHz

8 CPUs: 1 package(s) x 8 core(s)

AES-NI CPU Crypto: Yes (active)

QAT Crypto: Yes (inactive)

-

I thought maybe https://redmine.pfsense.org/issues/10414 had popped back up, but I can't replicate it using the commands that used to trigger it before. It's worth trying on your hardware though to see what happens.

Do you have bogons enabled?

If so, try the following commands:

$ /etc/rc.update_bogons.sh 0

$ time pfctl -t bogonsv6 -T flush

$ time pfctl -t bogonsv6 -T add -f /etc/bogonsv6

$ time pfctl -t bogonsv6 -T add -f /etc/bogonsv6

(yes, that last one is done twice)

You may have to reboot before doing that if it's already in the bad state it was in before.

-

time pfctl -t bogonsv6 -T flush

122609 addresses deleted.

0.000u 0.048s 0:00.04 100.0% 240+210k 0+0io 0pf+0w

time pfctl -t bogonsv6 -T add -f /etc/bogonsv6

122609/122609 addresses added.

0.226u 0.218s 0:00.44 97.7% 212+185k 0+0io 0pf+0w

time pfctl -t bogonsv6 -T add -f /etc/bogonsv6

0/122609 addresses added.

0.231u 0.079s 0:00.31 96.7% 208+182k 0+0io 0pf+0w

PS: It was still in this bad state, but the commands still worked...

-

I had a similar result.

Hyper-V, 4 cores.

Before the upgrade, not a single issue with the beta...

-

OK, so at least we know it isn't the same bug coming back to haunt us, though that would have likely made tracking down the cause and resolution much easier.

From your earlier output, the pfctl commands getting hung up are dumping the state table contents.

Try each of the following, but no need to post the individual state output, just the timing:

$ pfctl -si | grep -A4 State

$ time pfctl -ss

$ time pfctl -vvss

-

State Table Total Rate

current entries 1434

searches 3714325 359.4/s

inserts 138111 13.4/s

removals 136676 13.2/s

4.50 real 0.01 user 4.48 sys

1.32 real 0.01 user 1.30 sys
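To line up the state count with the dump time in one go, a small helper along these lines may be handy. It is only a sketch: `state_count` and `time_dump` are made-up names, the timing uses whole seconds from `date +%s`, and it assumes the `pfctl -si` layout shown above, where the count is the third field of the "current entries" line.

```sh
#!/bin/sh
# Hypothetical helpers, not part of pfSense or pfctl.

# Read `pfctl -si` output on stdin and print the current number of states.
# Assumes the count is the third whitespace-separated field on the
# "current entries" line, as in the output posted in this thread.
state_count() {
    awk '/current entries/ { print $3; exit }'
}

# Run the given command, discard its output, and print the elapsed
# wall-clock time in whole seconds.
time_dump() {
    start=$(date +%s)
    "$@" > /dev/null 2>&1
    end=$(date +%s)
    echo $((end - start))
}
```

On the firewall this would be used as `pfctl -si | state_count`, followed by `time_dump pfctl -ss` and `time_dump pfctl -vvss`. Whole-second resolution is coarse, but it is enough to tell a sub-second dump from one that takes minutes.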

-

Okay, after a reboot the pftop page works again.

CPU usage was back to none. Then a pfctl -ss was running long....

After it ended I ran:

pfctl -si | grep -A4 State

State Table Total Rate

current entries 2407

searches 404293 546.3/s

inserts 20698 28.0/s

removals 18291 24.7/s

time pfctl -ss

0.141u 140.191s 2:20.52 99.8% 203+177k 0+0io 0pf+0w

time pfctl -vvss

0.157u 87.638s 1:28.08 99.6% 203+177k 0+0io 0pf+0w

And perhaps these aliases add a lot of IPv4 and IPv6 addresses:

Jun 15 20:21:31 filterdns 68338 Adding Action: pf table: Office365Server host: outlook.office365.com

Jun 15 20:21:31 filterdns 68338 Adding Action: pf table: Office365Server host: outlook.office.com

Jun 15 20:21:31 filterdns 68338 Adding Action: pf table: MailExternalServerIPs host: outlook.office365.com

Jun 15 20:21:31 filterdns 68338 Adding Action: pf table: IcingaExternClients host: outlook.office365.com

-

Hmm, it definitely shouldn't be taking that long to print out only 2400 states.

-

"Block bogon networks" was on before the reboot and still is...

pfctl shows up in top every now and then and uses a lot of cpu...

-

It's not likely related to bogons or aliases/tables at this point, but something in the state table.

I've started https://redmine.pfsense.org/issues/12045 for this, and one of the other devs has a lead on a possible solution.

We're still trying to find a way to replicate it locally, but no luck yet.

-

OK I can't quite get up to the number of states you had with a quick and dirty test but I was able to get up to about 900 states and I definitely saw a slowdown.

20-50 states: 0.01s

300-450 states: 3s

850 states: 20s

I could easily see it degrading fast. It needs more data points, but that certainly appears to be significant growth. The FreeBSD commit I linked in the Redmine above mentions factorial time (O(N!)), which would be quite bad in terms of efficiency.

We're working on getting a fix into a new build; it should be available soon.
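For a rough feel of how steep that growth is, the local exponent between two measurements can be estimated from the ratio of the logs. This is only a back-of-the-envelope sketch: `growth_exponent` is a made-up helper, and the midpoints 35 and 375 are assumed stand-ins for the "20-50" and "300-450" ranges above.

```sh
#!/bin/sh
# Hypothetical helper: growth_exponent N1 T1 N2 T2
# Prints k such that T2/T1 = (N2/N1)^k, i.e. the slope between the two
# points on a log-log plot, rounded to one decimal place.
growth_exponent() {
    awk -v n1="$1" -v t1="$2" -v n2="$3" -v t2="$4" \
        'BEGIN { printf "%.1f\n", log(t2 / t1) / log(n2 / n1) }'
}

# Assumed midpoints for the ranges quoted in the post:
# growth_exponent 35 0.01 375 3    # prints 2.4
# growth_exponent 375 3 850 20     # prints 2.3
```

An exponent a bit above 2 over this range says the time grows faster than the square of the state count, but three rough points cannot tell polynomial growth apart from something worse, so more measurements at higher state counts would still be needed.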

-

Sounds great. I did a ktrace and kdump on pfctl -ss:

62033 pfctl 0.126404 CALL mmap(0,0xa01000,0x3<PROT_READ|PROT_WRITE>,0x1002<MAP_PRIVATE|MAP_ANON>,0xffffffff,0)

62033 pfctl 0.126446 RET mmap 34374418432/0x800e00000

62033 pfctl 0.126700 CALL ioctl(0x3,DIOCGETSTATESNV,0x7fffffffe410)

62033 pfctl 71.020411 RET ioctl 0

62033 pfctl 71.020973 CALL mmap(0,0x5000,0x3<PROT_READ|PROT_WRITE>,0x1002<MAP_PRIVATE|MAP_ANON>,0xffffffff,0)

62033 pfctl 71.020984 RET mmap 34368081920/0x8007f5000

It stays about 71 seconds (I'm not sure how to read the kdump time output) in ioctl(0x3,DIOCGETSTATESNV,0x7fffffffe410)... Does this confirm the nvlist bottleneck, or is the ioctl something else?
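The time spent inside that call can be read straight off the trace by pairing the CALL and RET lines. A minimal sketch, assuming the third column is elapsed seconds since the start of the trace (the values above only ever increase, which is what `kdump -E` style output would look like); `ioctl_seconds` is a made-up name.

```sh
#!/bin/sh
# Hypothetical helper: read kdump output on stdin and print, for each
# ioctl CALL/RET pair, the seconds between the two timestamps.
# Assumed columns: pid, command, timestamp, CALL|RET, syscall...
ioctl_seconds() {
    awk '
        # Remember when an ioctl call was entered.
        $4 == "CALL" && index($5, "ioctl(") == 1 { start = $3; pending = 1 }
        # On the matching return, print the difference.
        $4 == "RET" && $5 == "ioctl" && pending {
            printf "%.6f\n", $3 - start
            pending = 0
        }
    '
}
```

Fed the trace above (for example `kdump -E | ioctl_seconds`), this gives 70.893711, so nearly the whole run sits between the CALL and RET of the single DIOCGETSTATESNV ioctl, with almost no time in the surrounding mmap calls.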