-

@dennypage Do you have any ideas why this would happen in a Synology NAS when it is connected to the pfsense NUT server?

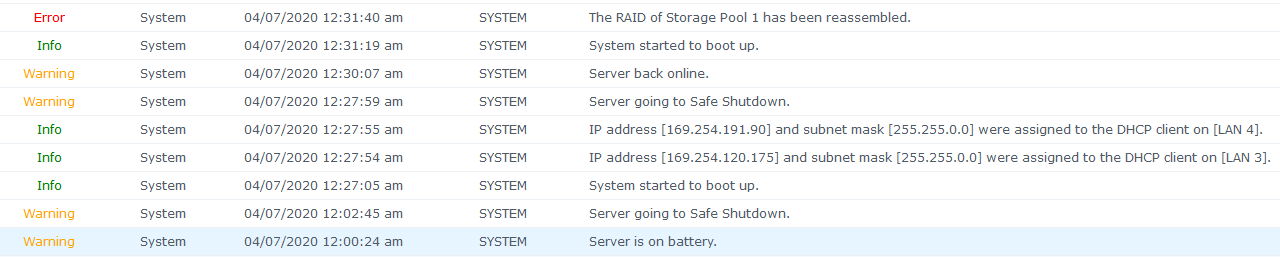

So what happened there was:

- 12:00:24 AM -> blackout

- 12:02:45 AM -> FSD issued by NUT server and Syno went to safe shutdown (expected)

- 12:27:05 AM -> power went back on and everything started to boot up (expected)

- 12:27:59 AM -> Syno went to safe shutdown again (unexpected, and I don't know why it did that). I know that the NUT server thinks the UPS is still in a Low Battery state, but I don't think it issued another FSD, although I can't say for sure.

- 12:30:07 AM -> server back online which means it detected that AC was restored to the UPS already (unexpected because I would've thought that this message should've logged around the same time as #3 above when power really went back on)

- 12:31:40 AM -> RAID got reassembled, which is the most disturbing part and what I'm trying to fix here.

My pfsense "ups*" logs around that time:

Apr 22 23:30:02 upsd 29008 User monuser@192.168.10.10 logged into UPS [ups]
Apr 22 23:25:38 upsd 29008 User monuser@192.168.10.10 logged into UPS [ups]
Apr 22 23:25:37 upsmon 21716 UPS ups battery is low
Apr 22 23:25:37 upsmon 21716 Communications with UPS ups established
Apr 22 23:25:34 upsd 29008 Connected to UPS [ups]: usbhid-ups-ups
Apr 22 23:25:32 upsmon 21716 Communications with UPS ups lost
Apr 22 23:25:32 upsmon 21716 Poll UPS [ups] failed - Driver not connected
Apr 22 23:25:32 upsd 29008 User local-monitor@::1 logged into UPS [ups]
Apr 22 23:25:30 upsd 28190 Can't connect to UPS [ups] (usbhid-ups-ups): No such file or directory
Apr 22 23:25:28 upsd 12714 User monuser@192.168.10.10 logged into UPS [ups]
Apr 22 23:25:27 upsd 12000 Can't connect to UPS [ups] (usbhid-ups-ups): No such file or directory
Apr 22 23:25:25 upsd 78256 User local-monitor@::1 logged out from UPS [ups]
Apr 22 23:25:24 upsmon 68274 Communications with UPS ups lost
Apr 22 23:25:24 upsmon 68274 Poll UPS [ups] failed - Driver not connected
Apr 22 23:25:24 upsd 78256 User local-monitor@::1 logged into UPS [ups]
Apr 22 23:25:21 upsd 78160 Can't connect to UPS [ups] (usbhid-ups-ups): No such file or directory
Apr 22 23:25:20 upsmon 22145 Communications with UPS ups lost
Apr 22 23:25:20 upsmon 22145 Poll UPS [ups] failed - Driver not connected
Apr 22 23:25:20 upsd 82186 User local-monitor@::1 logged into UPS [ups]
Apr 22 23:25:18 upsd 80149 Can't connect to UPS [ups] (usbhid-ups-ups): No such file or directory
Apr 22 23:25:17 upsd 60464 User local-monitor@::1 logged out from UPS [ups]
Apr 22 23:25:10 upsmon 1149 UPS ups battery is low
Apr 22 23:25:10 upsmon 1149 Communications with UPS ups established
Apr 22 23:25:07 upsd 60464 Connected to UPS [ups]: usbhid-ups-ups
Apr 22 23:25:05 upsd 60464 User monuser@192.168.10.10 logged into UPS [ups]
Apr 22 23:25:05 upsmon 1149 UPS ups is unavailable
Apr 22 23:25:05 upsmon 1149 Poll UPS [ups] failed - Driver not connected
Apr 22 23:25:00 upsmon 1149 Communications with UPS ups lost
Apr 22 23:25:00 upsmon 1149 Poll UPS [ups] failed - Driver not connected
Apr 22 23:25:00 upsd 60464 User local-monitor@::1 logged into UPS [ups]
Apr 22 23:24:58 upsd 59485 Can't connect to UPS [ups] (usbhid-ups-ups): No such file or directory
Apr 22 23:24:55 upsd 23672 Can't connect to UPS [ups] (usbhid-ups-ups): No such file or directory
Apr 22 23:18:33 upsd 31647 Client local-monitor@::1 set FSD on UPS [ups]
Apr 22 23:18:33 upsmon 31178 UPS ups battery is low
Apr 22 23:15:32 upsmon 31178 UPS ups on battery
Apr 22 23:14:52 upsmon 31178 Communications with UPS ups established
Apr 22 23:14:51 upsd 31647 UPS [ups] data is no longer stale
Apr 22 23:14:47 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:42 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:37 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:32 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:27 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:22 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:17 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:12 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:07 upsmon 31178 Poll UPS [ups] failed - Data stale
Apr 22 23:14:02 upsmon 31178 Poll UPS [ups] failed - Data stale

I've had the same occurrence for about 2 or 3 times now. Can you help? Thanks.

-

@kevindd992002 said in NUT package:

@dennypage Do you have any ideas why this would happen in a Synology NAS when it is connected to the pfsense NUT server?

It's not related to the pfSense NUT package itself. It could be a result of how Synology manages NUT, or how Synology manages boot up. Either way, the best place to seek help would be the Synology forums.

One question comes to mind to prime the pump... did the Synology actually complete its shutdown? Did it remove the POWERDOWNFLAG?

-

@dennypage said in NUT package:

@kevindd992002 said in NUT package:

@dennypage Do you have any ideas why this would happen in a Synology NAS when it is connected to the pfsense NUT server?

It's not related to the pfSense NUT package itself. It could be a result of how Synology manages NUT, or how Synology manages boot up. Either way, the best place to seek help would be the Synology forums.

One question comes to mind to prime the pump... did the Synology actually complete its shutdown? Did it remove the POWERDOWNFLAG?

It wasn't really acting up this way before, so I'm not sure what changed.

I wouldn't really know because Synology doesn't provide logs regarding the complete shutdown but what I do know is that it will only go to safe mode with all the lights in the NAS itself lit up but with no activity (unmounted volumes, stopped services, etc.) which it did reach. If it was an improper shutdown, it would tell in the logs too. How would I confirm if it did remove the POWERDOWNFLAG?

-

@kevindd992002 said in NUT package:

I wouldn't really know because Synology doesn't provide logs regarding the complete shutdown but what I do know is that it will only go to safe mode with all the lights in the NAS itself lit up but with no activity (unmounted volumes, stopped services, etc.) which it did reach. If it was an improper shutdown, it would tell in the logs too. How would I confirm if it did remove the POWERDOWNFLAG?

These questions are best explored in the Synology forums.

-

@dennypage said in NUT package:

@kevindd992002 said in NUT package:

I wouldn't really know because Synology doesn't provide logs regarding the complete shutdown but what I do know is that it will only go to safe mode with all the lights in the NAS itself lit up but with no activity (unmounted volumes, stopped services, etc.) which it did reach. If it was an improper shutdown, it would tell in the logs too. How would I confirm if it did remove the POWERDOWNFLAG?

These questions are best explored in the Synology forums.

Here's the response from Synology:

After discussing with our developer, we collect the time table as below:

- 12:00:24 AM Server is on battery. - AC power fail, UPS on battery

- 12:02:45 AM Server going to Safe Shutdown - enter safe mode (it will not shut down the NAS immediately but keep it on safe mode if you didn't enable the option "Shutdown UPS when the system enters Safe Mode")

- 12:?? AM - We suppose that UPS stops output the power to NAS so the NAS shutdown. Unfortunately, there doesn't have any log here cause by the current design.

- 12:27:05 AM System started to boot up - UPS power retrieved, reboot the NAS.

- 12:27:59 AM Server going to Safe Shutdown - When the NAS is rebooting, the UPS status changed to "on battery" and low battery, so the NAS will not show "server back online" log but enter in safe mode directly after rebooting completed.

- 12:28:06 AM - During the NAS enter the safe mode, the disk 4 and 5 in the expansion unit cannot be recognized in a short time then disabled by the system. Originally, the expansion unit will reset "all SATA port" then retry the link. However, it is during the safe shutdown so the expansion unit is also during the shutdown procedure, it would lead to disk 1 to disk 3 SATA link down directly as well. In conclusion, all expansion unit HDDs are disabled at the moment. [1]

- 12:30:07 AM Server back online - AC retrieved UPS power retrieved, NAS starts to leave safe mode.

- 12:31:19 AM System started to boot up - System force reboot after leave safe mode, it is the current policy.

- 12:31:40 AM The RAID of Storage Pool 1 has been reassembled. - When the system reboot, it tried to assemble the RAID. However, all expansion unit are disabled previously so the RAID cannot be assembled normally then system try to force assemble the RAID. [2]

It looks like the disk 4 and disk 5 model (WD100EMAZ-00WJTA0) are not stable with the NAS. After checking the HDD compatible list, it also doesn't list in it. Therefore, we cannot ensure the stability with the NAS or expansion unit.

To prevent this issue, please refer to our below suggestion:

1. Replace the two disks in the expansion unit to the compatible model.

2. The log shows the UPS has on battery/power symptoms frequently. Please double-check the UPS status and AC power stability.

[1]

2020-04-07T00:28:03+08:00 Synology kernel: [ 110.053013] nfsd: last server has exited, flushing export cache
2020-04-07T00:28:06+08:00 Synology kernel: [ 113.429756] ata3.03: exception Emask 0x10 SAct 0x0 SErr 0x10002 action 0xf
2020-04-07T00:28:06+08:00 Synology kernel: [ 113.437457] ata3.03: SError: { RecovComm PHYRdyChg }
2020-04-07T00:28:06+08:00 Synology kernel: [ 113.443018] ata3.04: exception Emask 0x10 SAct 0x0 SErr 0x10002 action 0xf
2020-04-07T00:28:06+08:00 Synology kernel: [ 113.450728] ata3.04: SError: { RecovComm PHYRdyChg }
2020-04-07T00:28:06+08:00 Synology kernel: [ 113.456355] ata3.03: hard resetting link
2020-04-07T00:28:07+08:00 Synology kernel: [ 114.183930] ata3.03: SATA link down (SStatus 0 SControl 330)
2020-04-07T00:28:07+08:00 Synology kernel: [ 114.190281] ata3: No present pin info for SATA link down event
2020-04-07T00:28:07+08:00 Synology kernel: [ 114.196906] ata3.04: hard resetting link
2020-04-07T00:28:08+08:00 Synology kernel: [ 114.924569] ata3.04: SATA link down (SStatus 0 SControl 330)
2020-04-07T00:28:08+08:00 Synology kernel: [ 114.930895] ata3: No present pin info for SATA link down event
2020-04-07T00:28:09+08:00 Synology kernel: [ 116.198231] ata3.03: hard resetting link
2020-04-07T00:28:09+08:00 Synology kernel: [ 116.507737] ata3.03: SATA link down (SStatus 0 SControl 330)
2020-04-07T00:28:09+08:00 Synology kernel: [ 116.514072] ata3: No present pin info for SATA link down event
2020-04-07T00:28:10+08:00 Synology kernel: [ 116.938827] ata3.04: hard resetting link
2020-04-07T00:28:10+08:00 Synology kernel: [ 117.248352] ata3.04: SATA link down (SStatus 0 SControl 330)
2020-04-07T00:28:10+08:00 Synology kernel: [ 117.254756] ata3: No present pin info for SATA link down event
2020-04-07T00:28:10+08:00 Synology kernel: [ 117.261447] ata3.03: limiting SATA link speed to 1.5 Gbps
2020-04-07T00:28:10+08:00 Synology kernel: [ 117.267527] ata3.04: limiting SATA link speed to 1.5 Gbps
2020-04-07T00:28:11+08:00 Synology kernel: [ 118.522052] ata3.03: hard resetting link
2020-04-07T00:28:12+08:00 Synology kernel: [ 118.831588] ata3.03: SATA link down (SStatus 0 SControl 310)
2020-04-07T00:28:12+08:00 Synology kernel: [ 118.837924] ata3: No present pin info for SATA link down event
2020-04-07T00:28:12+08:00 Synology kernel: [ 119.262669] ata3.04: hard resetting link
2020-04-07T00:28:12+08:00 Synology kernel: [ 119.572171] ata3.04: SATA link down (SStatus 0 SControl 310)
2020-04-07T00:28:12+08:00 Synology kernel: [ 119.578506] ata3: No present pin info for SATA link down event
2020-04-07T00:28:14+08:00 Synology kernel: [ 120.845854] ata3.03: hard resetting link
2020-04-07T00:28:14+08:00 Synology kernel: [ 121.155430] ata3.03: SATA link down (SStatus 0 SControl 300)
2020-04-07T00:28:14+08:00 Synology kernel: [ 121.161759] ata3: No present pin info for SATA link down event
2020-04-07T00:28:14+08:00 Synology kernel: [ 121.586423] ata3.04: hard resetting link
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.895970] ata3.04: SATA link down (SStatus 0 SControl 300)
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.902307] ata3: No present pin info for SATA link down event
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.908915] ata3.03: disabled
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.912251] ata3.03: already disabled (class=0x2)
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917520] ata3.03: already disabled (class=0x2)
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917691] sd 2:3:0:0: rejecting I/O to offline device
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917706] Result: hostbyte=0x01 driverbyte=0x00
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917713] cdb[0]=0x35: 35 00 00 00 00 00 00 00 00 00
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917720] end_request: I/O error, dev sdjd, sector in range 9437184 + 0-2(12)
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917722] md: super_written_retry for error=-5
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917751] sd 2:3:0:0: rejecting I/O to offline device
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917753] md: super_written gets error=-5, uptodate=0
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917755] syno_md_error: sdjd3 has been removed
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917759] md/raid:md2: Disk failure on sdjd3, disabling device.
2020-04-07T00:28:15+08:00 Synology kernel: [ 121.917759] md/raid:md2: Operation continuing on 12 devices.
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.001181] ata3.04: disabled
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.004540] ata3.04: already disabled (class=0x2)
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.009822] ata3.04: already disabled (class=0x2)
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.015122] sd 2:4:0:0: rejecting I/O to offline device
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.042203] Result: hostbyte=0x01 driverbyte=0x00
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.051467] cdb[0]=0x35: 35 00 00 00 00 00 00 00 00 00
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.057276] end_request: I/O error, dev sdje, sector 9437192
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.063604] md: super_written_retry for error=-5
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.068893] sd 2:4:0:0: rejecting I/O to offline device
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.074737] md: super_written gets error=-5, uptodate=0
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.080575] syno_md_error: sdje3 has been removed
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.085824] md/raid:md2: Disk failure on sdje3, disabling device.
2020-04-07T00:28:15+08:00 Synology kernel: [ 122.085824] md/raid:md2: Operation continuing on 11 devices.

[2]

2020-04-07T00:31:38+08:00 Synology kernel: [ 71.434928] md: kicking non-fresh sdje3 from array!
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.451639] md: kicking non-fresh sdja3 from array!
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.475308] md: kicking non-fresh sdjb3 from array!
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.495328] md: kicking non-fresh sdjc3 from array!
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.515317] md: kicking non-fresh sdjd3 from array!
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.596768] md/raid:md2: not enough operational devices (5/13 failed)
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.603977] md/raid:md2: raid level 5 active with 8 out of 13 devices, algorithm 2
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.648541] Buffer I/O error on device md2, logical block 0
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.654784] Buffer I/O error on device md2, logical block 0
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.661032] Buffer I/O error on device md2, logical block 0
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.667276] Buffer I/O error on device md2, logical block 0
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.678806] Buffer I/O error on device md2, logical block in range 17567100928 + 0-2(12)
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.687892] Buffer I/O error on device md2, logical block 17567101616
2020-04-07T00:31:38+08:00 Synology spacetool.shared: spacetool.c:1428 Try to force assemble RAID [/dev/md2]. [0x2000 file_get_key_value.c:81]
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.727347] md: md2: set sda3 to auto_remap [0]
2020-04-07T00:31:38+08:00 Synology kernel: [ 71.732457] md: md2: set sdg3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology kernel: [ 71.737533] md: md2: set sdh3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology kernel: [ 71.742606] md: md2: set sde3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology kernel: [ 71.747672] md: md2: set sdf3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology kernel: [ 71.752740] md: md2: set sdd3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology kernel: [ 71.757806] md: md2: set sdc3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology kernel: [ 71.762873] md: md2: set sdb3 to auto_remap [0]
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1156 [Info] maximum superblock.events of RAID /dev/md2 = 296085
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sda3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdb3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdc3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdd3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sde3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdf3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdg3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdh3 (superblock.events = 296085) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdja3 (superblock.events = 296076) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdjb3 (superblock.events = 296076) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdjc3 (superblock.events = 296076) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdjd3 (superblock.events = 296076) for force assemble
2020-04-07T00:31:39+08:00 Synology spacetool.shared: spacetool.c:1162 [Info] add /dev/sdje3 (superblock.events = 296076) for force assemble

I will not replace my expansion unit's disks 4 and 5 because those are brand new 10TB drives that I shucked from WD Easystore drives and are known to be stable with Synology NASes; that's not the issue.

I wanted to specifically understand the 12:27:59 AM event though and I hope you can share your ideas too. If I understand correctly, they're saying that when a UPS comes back on after a power blackout and is still in charging/low battery mode, the NAS detects that low battery condition and will go to a 2nd safe shutdown mode even though there is no FSD signal sent by NUT? If so, then that really isn't good.

-

I think I really need to implement ups.delay.start now. Where do you put it, though? I tried putting it in the "extra arguments to driver" section, and the service wouldn't start afterwards.

-

Output of upsrw ups:

[outlet.desc]
Outlet description
Type: STRING
Maximum length: 20
Value: Main Outlet

[output.voltage.nominal]
Nominal output voltage (V)
Type: ENUM
Option: "200"
Option: "208"
Option: "220"
Option: "230" SELECTED
Option: "240"

[ups.delay.shutdown]
Interval to wait after shutdown with delay command (seconds)
Type: STRING
Maximum length: 10
Value: 20

[ups.start.battery]
Allow to start UPS from battery
Type: STRING
Maximum length: 5
Value: yes

So does that mean that the ups.delay.start variable is not available for my ups? If so, any other idea how to get around the issue then?
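For anyone wanting to check this without reading the listing by eye, here is a minimal Python sketch that parses text in the shape of the `upsrw` output quoted in this post and reports which variables it lists. The function name `writable_variables` and the `SAMPLE` string are my own illustration (the sample is copied from the post); it only inspects text and does not talk to upsd.

```python
import re

def writable_variables(upsrw_output):
    """Map each variable name listed by upsrw to its current value."""
    variables = {}
    current = None
    for raw in upsrw_output.splitlines():
        line = raw.strip()
        header = re.fullmatch(r"\[(.+)\]", line)
        if header:
            # A bracketed line starts a new variable entry.
            current = header.group(1)
            variables[current] = ""
            continue
        if current is None:
            continue
        if line.startswith("Value:"):
            variables[current] = line[len("Value:"):].strip()
        else:
            # ENUM entries mark the active choice with SELECTED.
            selected = re.fullmatch(r'Option: "(.*)" SELECTED', line)
            if selected:
                variables[current] = selected.group(1)
    return variables

# Sample text copied from the upsrw listing in this post.
SAMPLE = """\
[outlet.desc]
Outlet description
Type: STRING
Maximum length: 20
Value: Main Outlet

[output.voltage.nominal]
Nominal output voltage (V)
Type: ENUM
Option: "200"
Option: "208"
Option: "220"
Option: "230" SELECTED
Option: "240"

[ups.delay.shutdown]
Interval to wait after shutdown with delay command (seconds)
Type: STRING
Maximum length: 10
Value: 20

[ups.start.battery]
Allow to start UPS from battery
Type: STRING
Maximum length: 5
Value: yes
"""

found = writable_variables(SAMPLE)
print(sorted(found))
print("ups.delay.start listed:", "ups.delay.start" in found)
```

With the listing above, `ups.delay.start` is not among the four variables reported, which matches the question being asked here.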

-

@kevindd992002 said in NUT package:

I wanted to specifically understand the 12:27:59 AM event though and I hope you can share your ideas too. If I understand correctly, they're saying that when a UPS comes back on after a power blackout and is still in charging/low battery mode, the NAS detects that low battery condition and will go to a 2nd safe shutdown mode even though there is no FSD signal sent by NUT? If so, then that really isn't good.

This makes good sense to me. A low battery condition indicates that power could be removed at any time. In general, you don't want the system to be attempting recovery of RAID in a situation such as this.
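To make the distinction concrete, here is a small Python sketch of two possible policies over NUT-style `ups.status` flags (`OL`, `OB`, `LB`, `FSD`, `CHRG`). The first follows the rule documented for upsmon, where a UPS is critical when it is on battery and low battery at the same time, or when FSD is set. The second, `unsafe_to_recover`, is a hypothetical stricter rule that treats a low battery alone as a reason to stay down; it is only an illustration of the behavior described above, not Synology's actual implementation.

```python
def is_critical(ups_status):
    """upsmon-style rule: FSD set, or on battery AND low battery together."""
    flags = set(ups_status.split())
    return "FSD" in flags or {"OB", "LB"} <= flags

def unsafe_to_recover(ups_status):
    """Hypothetical stricter rule: any low-battery flag means power may drop again."""
    flags = set(ups_status.split())
    return is_critical(ups_status) or "LB" in flags

# Status strings below are illustrative examples, not taken from the logs.
for status in ("OL", "OB", "OB LB", "OL LB CHRG", "OL FSD"):
    print(f"{status!r:14} critical={is_critical(status)} "
          f"unsafe_to_recover={unsafe_to_recover(status)}")
```

The interesting case is `OL LB CHRG`: mains is back but the battery is still low, so the upsmon-style rule is satisfied while the stricter rule still refuses to bring storage back up.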

On a separate note, you have some disk issues as well. I'm not sure it's a good choice to dismiss Synology's advice so casually. If you want a stable NAS, you should stick with enterprise drives. This is one of those "you get what you pay for" situations.

-

@kevindd992002 said in NUT package:

So does that mean that the ups.delay.start variable is not available for my ups? If so, any other idea how to get around the issue then?

It's not available via NUT. It could be controllable via some other method on your UPS.

That your UPS is restarting while still having a low battery is somewhat problematic. You may wish to discuss this with the UPS manufacturer.

-

Failed to install NUT on my SG-3100 today because net-snmp couldn't create a temp file.

>>> Installing pfSense-pkg-nut...
Updating pfSense-core repository catalogue...
pfSense-core repository is up to date.
Updating pfSense repository catalogue...
pfSense repository is up to date.
All repositories are up to date.
Checking integrity... done (0 conflicting)
The following 4 package(s) will be affected (of 0 checked):
New packages to be INSTALLED:
pfSense-pkg-nut: 2.7.4_7 [pfSense]
nut: 2.7.4_13 [pfSense]
neon: 0.30.2_4 [pfSense]
net-snmp: 5.7.3_20,1 [pfSense]
Number of packages to be installed: 4
The process will require 15 MiB more space.
[1/4] Installing neon-0.30.2_4...
[1/4] Extracting neon-0.30.2_4: .......... done
[2/4] Installing net-snmp-5.7.3_20,1...
[2/4] Extracting net-snmp-5.7.3_20,1: ......
pkg-static: Fail to create temporary file: /usr/local/lib/perl5/site_perl/mach/5.30/.SNMP.pm.DbwkOhQlQnQU:Input/output error
[2/4] Extracting net-snmp-5.7.3_20,1... done
Failed

-

@sauce That looks like a generic package install problem, failing during install of net-snmp. You might re-try the install, perhaps following a reboot, and if it fails again follow up in the general pfSense packages forum.

-

@dennypage Rebooting pfSense fixed the installation of NUT. Thank you for your help.

-

I'm having a bit of a problem with nut and pfSense.

I want to have pfSense shut down when low battery is at 30%. I tried at the default 20% (Eaton 5px) and I found it just did not leave enough time for pfSense to gracefully shut down.

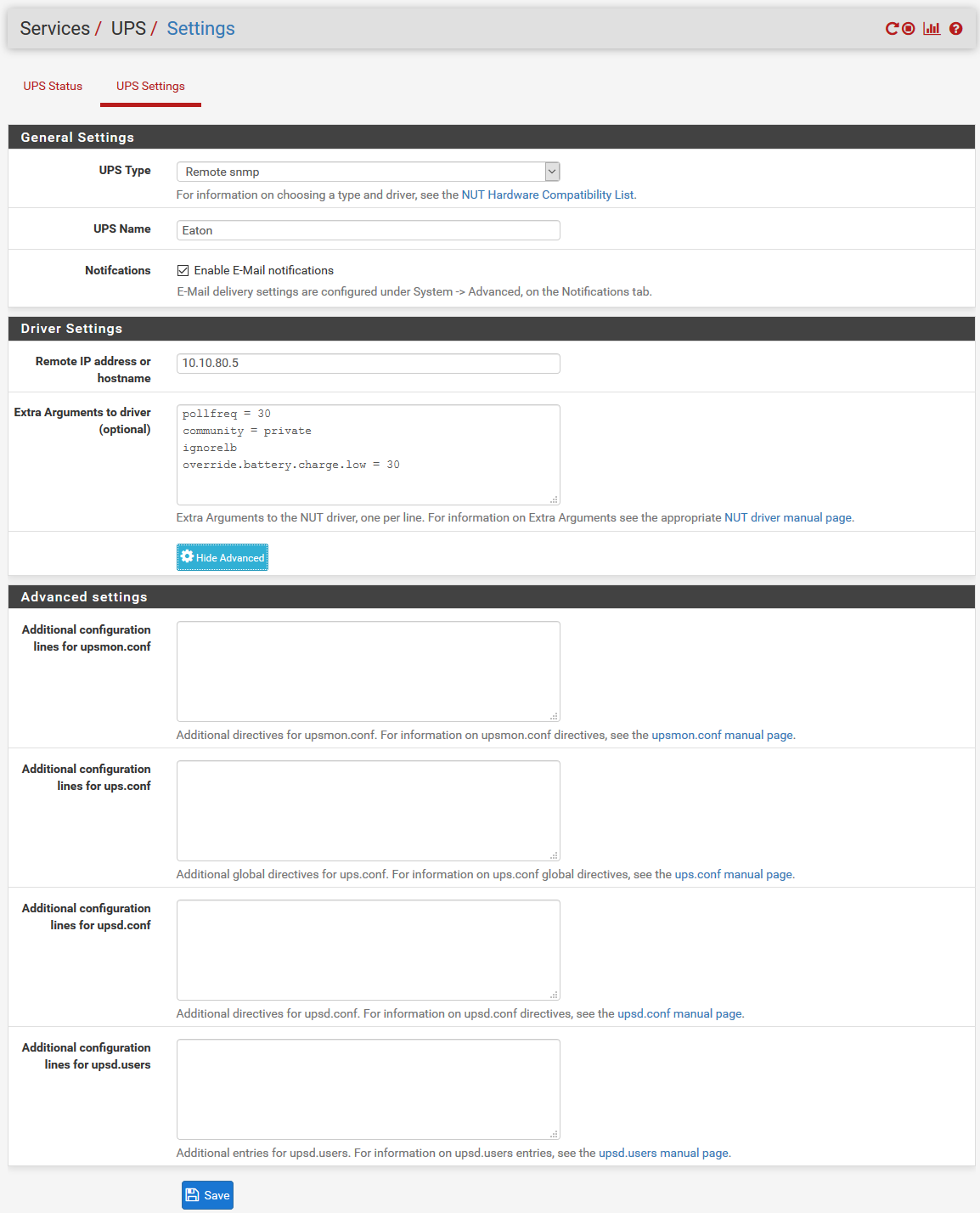

I have configured nut as per below:

However, in my logs I get the error "dstate_setflags: base variable (battery.charge.low) is immutable". It is literally flooding my system logs.

Am I doing something wrong? Nut seems to ignore the ignorelb command and is still waiting until low battery gets to 20% (as reported by the ups).

I have also placed the below arguments in the ups.conf section to no avail. However, there were no error messages in the system logs.

community = private

ignorelb

override.battery.charge.low = 30

I'm guessing I am doing something wrong but can't work it out. If it helps, I am running an Eaton 5PX with a Network-MS card.

-

@Sir_SSV said in NUT package:

20 % - it just did not leave enough time for pfSense to gracefully shut down.

pfSense shuts down relatively fast.

"20 %" should leave you with 30 seconds or so, probably far more.

If not, the battery isn't in good shape, or the UPS is overloaded, which decreases battery life. I advise you to check out the NUT support forum.

-

@Sir_SSV Few quick comments

The "ignorelb" option literally means that you want to ignore a low battery condition signaled by the UPS. Unless your UPS sends a low battery indication immediately upon going to battery, this probably isn't something you want.

battery.charge.low is a value managed in the UPS. You are trying to set it, and don't have SNMP privs to do so. This probably isn't something you want either.

SNMP is polled every 30 seconds. This means there is a delay of up to 30 seconds before NUT knows it is on battery, and up to 30 seconds before NUT knows that the battery is low.

In general, if you want to shut down sooner it's better to look at runtime remaining than battery charge.
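As a rough illustration of why runtime is the more useful trigger, here is a simplified Python model of a threshold check: the low-battery condition is raised when either the charge or the remaining runtime falls below its configured limit. As far as I recall, this mirrors how the ups.conf documentation describes `ignorelb` used together with `override.battery.charge.low` and `override.battery.runtime.low`, but treat that as an assumption and check the man page. The function name and the 30% / 180 second thresholds are illustrative values, not recommendations.

```python
def low_battery(charge_pct, runtime_s, charge_low=None, runtime_low=None):
    """Return True when either configured threshold has been crossed."""
    if charge_low is not None and charge_pct < charge_low:
        return True
    if runtime_low is not None and runtime_s < runtime_low:
        return True
    return False

# Charge-only rule: a lightly loaded UPS at 25% may still have plenty of runtime.
print(low_battery(25, 1500, charge_low=30))      # True, triggers early
# Runtime-only rule: the same reading does not trigger until runtime is short.
print(low_battery(25, 1500, runtime_low=180))    # False
print(low_battery(25, 150, runtime_low=180))     # True
```

A charge percentage says little about how long the load can actually be carried, which is why the runtime figure tends to be the better basis for a shutdown decision.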

-

@Gertjan said in NUT package:

@Sir_SSV said in NUT package:

20 % - it just did not leave enough time for pfSense to gracefully shut down.

pfSense shuts down relatively fast.

"20 %" should leave you with 30 seconds or so, probably far more.

If not, the battery isn't in good shape, or the UPS is overloaded, which decreases battery life. I advise you to check out the NUT support forum.

The UPS is new; I've only had it for approximately 1 month. It is not overloaded: it powers pfSense, FreeNAS and a couple of UniFi switches.

@dennypage said in NUT package:

@Sir_SSV Few quick comments

The "ignorelb" option literally means that you want to ignore a low battery condition signaled by the UPS. Unless your UPS sends a low battery indication immediately upon going to battery, this probably isn't something you want.

battery.charge.low is a value managed in the UPS. You are trying to set it, and don't have SNMP privs to do so. This probably isn't something you want either.

SNMP is polled every 30 seconds. This means there is a delay of up to 30 seconds before NUT knows it is on battery, and up to 30 seconds before NUT knows that the battery is low.

In general, if you want to shut down sooner it's better to look at runtime remaining than battery charge.

Thanks. Should I set the default polling time to a lower value?

How would I go about setting a runtime remaining configuration in nut?

-

@Sir_SSV said in NUT package:

Should I set the default polling time to a lower value?

How would I go about setting a runtime remaining configuration in nut?

As to the NUT config, it all depends upon how much runtime the UPS has in general, and how much runtime is remaining when the UPS signals a low battery situation. If the UPS has 3 or more minutes of runtime when entering low battery, I would simply let the UPS handle things. If it has less, you may want to shorten the pollfreq.
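The trade-off can be put into rough numbers. The Python sketch below is only arithmetic under stated assumptions: the UPS is polled every `pollfreq_s` seconds, so a low-battery event may go unnoticed for up to one polling interval, and `shutdown_duration_s` is a hypothetical figure for how long the host needs to shut down (the thread does not give one).

```python
def shutdown_margin(runtime_at_low_battery_s, pollfreq_s, shutdown_duration_s):
    """Seconds of runtime left after worst-case detection delay and shutdown."""
    worst_case_detection_s = pollfreq_s  # event happens just after a poll
    return runtime_at_low_battery_s - worst_case_detection_s - shutdown_duration_s

# 3 minutes of runtime at low battery, 30 s polling, assumed 60 s shutdown.
print(shutdown_margin(180, 30, 60))   # 90 seconds to spare
# Only 1 minute of runtime at low battery: 30 s polling leaves no margin.
print(shutdown_margin(60, 30, 60))    # -30, power is lost mid-shutdown
# Shortening the polling interval helps but does not fix it in this case.
print(shutdown_margin(60, 10, 60))    # -10
```

In the last case, polling faster is not enough on its own; the low-battery threshold in the UPS would also need to be raised.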

Given that you have a snmp management card, you likely have a good deal of configuration available in the UPS to control when low battery state is entered. The UPS is generally a better place to drive decisions than in the NUT configuration.

-

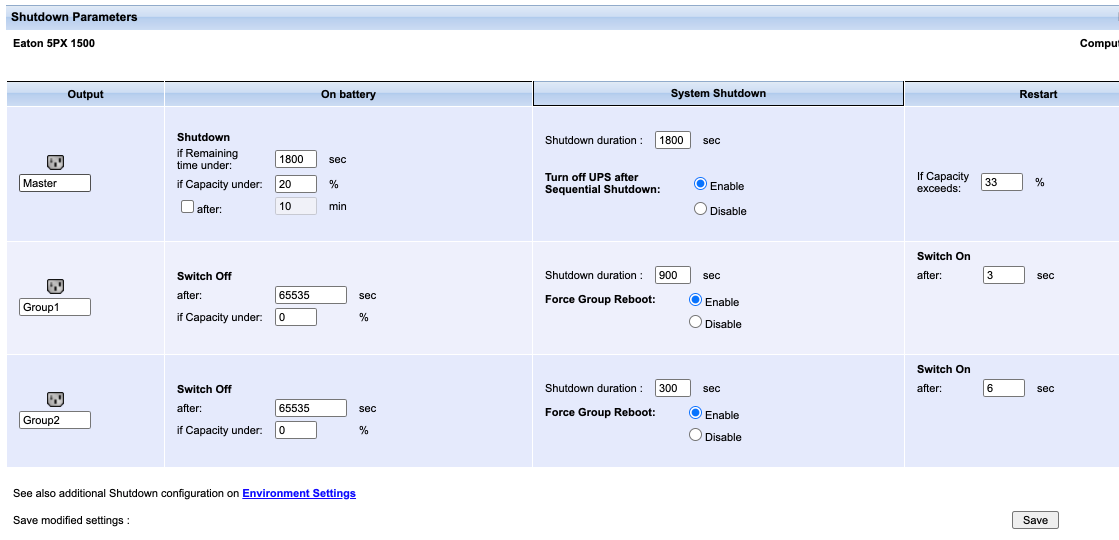

I recall my Eaton 5PX throwing OB (on battery) and LB (low battery) at the same time, even with a large runtime remaining. In your UPS configuration you can configure the shutdown timers as Denny mentioned. Here are my 5PX shutdown parameters.

If your UPS is failing to shut down pfSense at 20%, it's likely your batteries are toast. Even a new UPS can sit on a shelf for some time, so it's worth testing before putting it into production use.

-

@q54e3w said in NUT package:

I recall my Eaton 5PX throwing OB (on battery) and LB (low battery) at the same time, even with a large runtime remaining. In your UPS configuration you can configure the shutdown timers as Denny mentioned. Here are my 5PX shutdown parameters.

If your UPS is failing to shut down pfSense at 20%, it's likely your batteries are toast. Even a new UPS can sit on a shelf for some time, so it's worth testing before putting it into production use.

Thank you for the screen shot.

Under "on battery", there are values of 1800 seconds and 20%. Does that mean both of those values have to be met before the UPS initiates a shutdown?

If I wanted pfSense to shut down at 30% battery, would I pull the mains, note the time remaining at 30%, and enter that value into remaining time and shutdown duration?

-

@Sir_SSV It's been quite some time since I set this up, so my memory may be fuzzy, but I recall it's an 'OR', not an 'AND'. You could put 30% in the percentage field and a small number in the time field, and it should shut down (or flag LB & OB) at 30%.

Set it to 95% or something to test with, to save running the batteries up and down.

EDIT: I just checked the manual. TL;DR:

The first shutdown criteria initiates the restart of the shutdown sequence.

- If remaining time is under (0 to 99999 seconds, 180 by default) is the minimum remaining backup time from which the shutdown sequence is launched.

- If battery capacity is under (0 to 100%); this value cannot be less than that of the UPS and is the minimum remaining battery capacity level from which the shutdown sequence is launched.

- Shutdown after (0 to 99999 minutes, not validated by default) is the operating time in minutes left for users after a switch to backup before starting the shutdown sequence.

- Shutdown duration (120 seconds by default) is the time required for complete shutdown of systems when a switch to backup time is long enough to trigger the shutdown sequences. It is calculated automatically at the maximum of Shutdown duration of subscribed clients but can be modified in the Advanced mode.

- If battery capacity exceeds Minimum battery level to reach before restarting the UPS after utility restoration
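Read that way, the two "is under" criteria behave as an OR: whichever is reached first starts the shutdown sequence. A minimal Python sketch of that reading, assuming my recollection of the OR behavior is right; the function and parameter names are made up for illustration, and the 1800 s / 20% values are the ones mentioned for the screenshot in this thread.

```python
def shutdown_sequence_starts(remaining_time_s, capacity_pct,
                             time_threshold_s, capacity_threshold_pct):
    """True as soon as EITHER criterion is met (OR, not AND)."""
    return (remaining_time_s < time_threshold_s
            or capacity_pct < capacity_threshold_pct)

# Settings of 1800 s and 20%:
print(shutdown_sequence_starts(2400, 25, 1800, 20))  # False, neither reached
print(shutdown_sequence_starts(1700, 40, 1800, 20))  # True, time criterion alone
# To act at 30% charge, use 30% with a small time value so charge decides:
print(shutdown_sequence_starts(900, 29, 60, 30))     # True, capacity criterion
print(shutdown_sequence_starts(900, 31, 60, 30))     # False
```

Note that with a large time threshold such as 1800 seconds, the time criterion will usually be reached long before the percentage one on a lightly loaded UPS.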

Copyright 2025 Rubicon Communications LLC (Netgate). All rights reserved.