Playing with fq_codel in 2.4

-

@tman222 I've tested similarly, albeit lower configured pipes, and I actually find that using queues lowers latency.

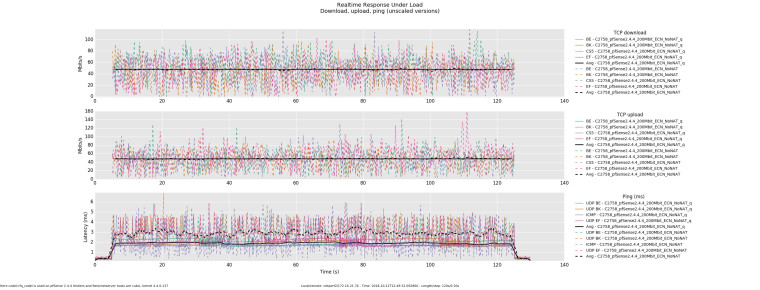

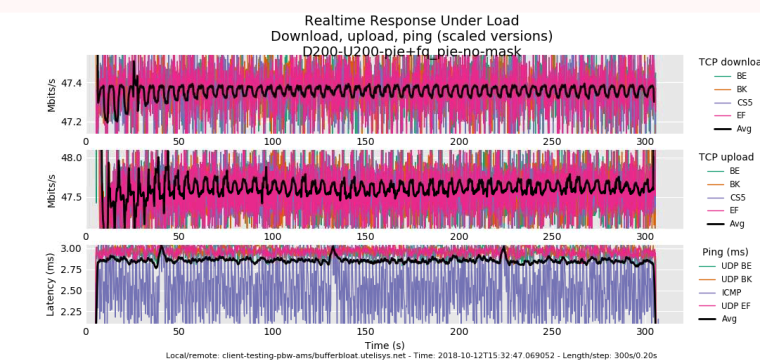

Here codel+fq_codel is used on pfSense 2.4.4 limiters and flent/netserver hosts are cubic, kernel 4.4.0-137. (q denotes that one up and one down queue was used)

-

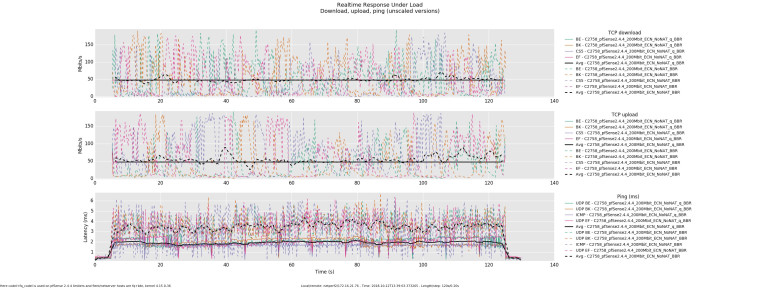

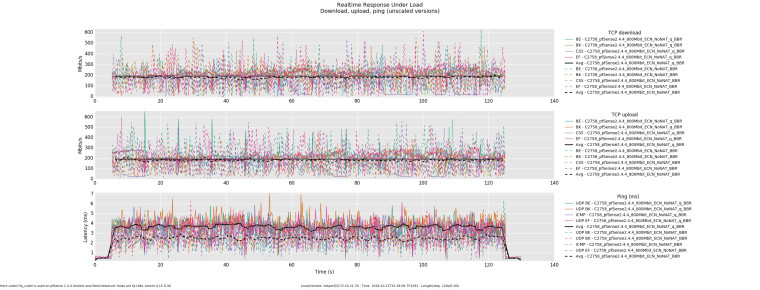

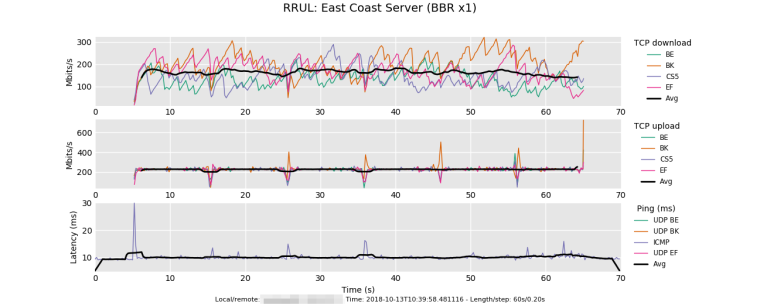

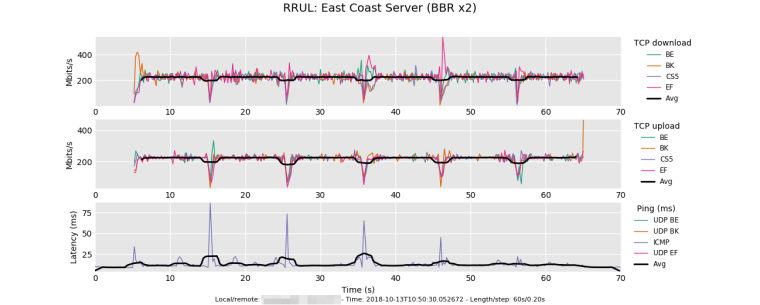

Here codel+fq_codel is used on pfSense 2.4.4 limiters and flent/netserver hosts are fq+bbr, kernel 4.15.0-36. (q denotes that one up and one down queue was used)

-

And then the opposite occurs as throughput increases: latency becomes higher when utilizing queues.

@dtaht any thoughts on what we might be running into here?

-

My first thought, this morning, when asked about my thoughts, was to get some food, a sixpack of beer, and go sailing. :)

Loving seeing y'all doing the comparison plot thing now. You needn't post your *.flent.gz files here if you don't want to (my browser plugin dumps them into flent automatically), but feel free to tarball 'em and email dave dot taht at gmail.com.

I don't get what you mean by codel + fq_codel. did you post a setup somewhere?

bb tomorrow. PDT.

-

@tman222 said in Playing with fq_codel in 2.4:

Since people have started sharing flent test results, I figured I'd share a couple of interesting ones as well from some 10Gbit testing that I have done.

these results were so lovely that I figured the internet didn't need me today.

Test 3) BQL, TSQ, and BBR are amazing. One of my favorite little tweaks is that in aiming for 98.x of the rate it just omits the ip headers from its cost estimate. As for "perfect", one thing you might find ironic is that the interpacket latency you are getting for this load is about what you'd get at a physical rate of 100mbits with cubic cake + a 3k bql. Or sch_fq bbr (possibly cubic also) with 3k bql. That's basically an emulation of the original decchip tulip ethernet card from the good ole days. Loved that card ( https://web.archive.org/web/20000831052812/http://www.rage.net:80/wireless/wireless-howto.html )

If cpu context switch time had improved over the years, well, ~5usec would be "perfect" at 10gige. So, while things are about, oh, 50-100x better than they were 6 years back, it really would be nice to have cpus that could context switch in 5 clocks.
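To put those rates on a common footing, here is a rough sketch of wire serialization time. It ignores preamble, inter-frame gap, and all queueing, and both the 1514-byte frame and the reading of "3k bql" as 3000 bytes are assumptions made for illustration, not measurements from the tests above.

```python
def serialization_delay_us(frame_bytes: int, rate_bps: float) -> float:
    """Time to clock frame_bytes onto a link running at rate_bps, in microseconds."""
    return frame_bytes * 8 / rate_bps * 1e6


FRAME_BYTES = 1514       # assumed full-size Ethernet frame
BQL_LIMIT_BYTES = 3000   # assumed meaning of "3k bql"

if __name__ == "__main__":
    for name, rate in (("100 Mbit/s", 100e6), ("10 Gbit/s", 10e9)):
        one_frame = serialization_delay_us(FRAME_BYTES, rate)
        full_bql = serialization_delay_us(BQL_LIMIT_BYTES, rate)
        print(f"{name}: one frame {one_frame:.2f} us, full BQL {full_bql:.2f} us")
```

Under these assumptions one full-size frame takes about 121 usec at 100 Mbit/s and about 1.2 usec at 10 Gbit/s, so at 10gige the wire itself is no longer the dominant term.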

Still: ship it! we won the internets.

could you rerun this test with cubic instead? (--te=CC=cubic)

sch_fq instead of fq_codel on the client/server? (or fq_codel if you are already using fq)

Test 2) OSX uses reno, with a low IW, and I doubt they've (post your netstat -I thatdevice -qq ?) fq'd that driver.

So (probly?) their transmit queue has filled with acks and there's not a lot of space to fit full size tcp packets. Apple's "innovation" in their stack is stretch acks, so after a while they send fewer acks per transfer. I think this is tunable on/off but they don't call the option stretch ack. (It may be the delack option you'll find via googling.) You can clearly see it kick in with tcptrace though on the relevant xplot.org tsg plot. But 10 sec? Weird. bbr has a 10 sec probe phase... hmmm.... Everybody here uses tcptrace -G + xplot.org daily on their packet caps, yes?

Test 1) you are pegging it with tcpdump? There are faster alternatives out there. Does the ids need more than 128 bytes?

-

@xciter327 said in Playing with fq_codel in 2.4:

Any chance You can share if You are configuring the bufferbloat testing servers in a special way(sysctl tweaks or something else). The only thing I do over vanilla ubuntu is to set-up iptables and set fq_codel as default qdisc.

I've also sorted my weird results. Turns out there was a bottleneck between me and my netperf server.

-

tail-drop + fq_codel

-

pie + fq_pie

I will share my bufferbloat.net configuration if you gimme these fq_pie vs fq_codel flent files!!! fq_pie shares the same fq algo as fq_codel, but the aqm is different, swiping the rate estimator from codel while retaining a random drop probability like RED (or pie). So the "wobblyness" of the fq_pie U/D graphs is possibly due to the target 20ms and longer tcp rtt in pie or some other part of the algo... but that's hard to judge by eyeball unless you lock the plots to the exact same scale when posting them (yep, there's a flent option for this). Compare bandwidth via bar chart. Even then, the tcp_nup --socket-stats option (we really need to add that to rrul) makes it easier to look harder at the latency being experienced by tcp.

Is ecn on at the hosts? ecn on pie is very different.

Pure pie result?

PS

I don't get what anyone means when they say pie + fq_pie or codel + fq_codel. I really don't. I was thinking you were basically doing the FAIRQ -> lots of codel or pie queues method?

-


@gsakes said in Playing with fq_codel in 2.4:

Three more tests; all were run with the same up/down b/w parameters, within a few minutes of each other.

1) PFSense - no shaping, baseline:

2) PFSense - codel + fq_codel:

3) Linux - sch_cake, noecn + ack-filter:

your second plot means one of three things:

A) you uploaded the wrong plot

B) you didn't actually manage to reset the config between test runs

C) you did try to reset the config and the reset didn't work

I've been kind of hoping for C on several tests here, notably @strangegopher 's last result.

I cannot even begin to describe how many zillion times I've screwed up per B and C. B: I sometimes go weeks without noticing I left an inbound or outbound shaper on or off. In fact, I just did that yesterday - while testing the singapore box and scratching my head. My desktop had a 40mbit inbound shaper on for, I dunno, at least a month. C: even after years of trying to get it right, sqm-scripts does not always succeed in flushing the rules. pfsense?

so, like, trust, but verify! with the ipfw(?) utility.

(flent captures this on linux (see the metadata browser in the gui), but I didn't even know the command existed on bsd.) flent -x captures way more data.

added a feature request to: https://github.com/tohojo/flent/issues/151

-

@dtaht said in Playing with fq_codel in 2.4:

@tman222 said in Playing with fq_codel in 2.4:

Since people have started sharing flent test results, I figured I'd share a couple of interesting ones as well from some 10Gbit testing that I have done.

these results were so lovely that I figured the internet didn't need me today.

Test 3) BQL, TSQ, and BBR are amazing. One of my favorite little tweaks is that in aiming for 98.x of the rate it just omits the ip headers from its cost estimate. As for "perfect", one thing you might find ironic is that the interpacket latency you are getting for this load is about what you'd get at a physical rate of 100mbits with cubic cake + a 3k bql. Or sch_fq bbr (possibly cubic also) with 3k bql. That's basically an emulation of the original decchip tulip ethernet card from the good ole days. Loved that card ( https://web.archive.org/web/20000831052812/http://www.rage.net:80/wireless/wireless-howto.html )

If cpu context switch time had improved over the years, well, ~5usec would be "perfect" at 10gige. So, while things are about, oh, 50-100x better than they were 6 years back, it really would be nice to have cpus that could context switch in 5 clocks.

Still: ship it! we won the internets.

could you rerun this test with cubic instead? (--te=CC=cubic)

sch_fq instead of fq_codel on the client/server? (or fq_codel if you are already using fq)

this is one place where you would see a difference in fq with --te=upload_streams=200 on the 10gige test,

but, heh, ever wonder what happens when you hit a 5mbit link with more flows than it could rationally handle?

What should happen?

What do you want to happen?

What actually happens?

It's a question of deep protocol design and value, with behaviors specified in various rfcs.
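A back-of-the-envelope sketch of why this gets interesting. It assumes perfect per-flow fairness, every flow permanently backlogged, and 1514-byte packets; the 200-flow count is borrowed from the upload_streams=200 suggestion above and applied to the 5mbit case purely as an illustration.

```python
def per_flow_share(link_bps: float, flows: int, packet_bytes: int = 1514):
    """Return (fair share per flow in bit/s, seconds for one packet from every flow)."""
    share_bps = link_bps / flows
    round_seconds = flows * packet_bytes * 8 / link_bps
    return share_bps, round_seconds


if __name__ == "__main__":
    share, round_s = per_flow_share(5e6, 200)
    print(f"fair share: {share / 1e3:.0f} kbit/s per flow")
    print(f"one full-size packet per flow takes {round_s * 1e3:.0f} ms of link time")
```

With these numbers each flow gets about 25 kbit/s, and servicing one full-size packet from every flow takes roughly 484 ms, far longer than most path RTTs. A TCP flow cannot shrink its window much below a packet or two, so the link is oversubscribed even at the minimum window, which is where the "what should happen?" question bites.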

-

@dtaht ...uploaded the wrong plot:) thx for pointing it out, I posted the proper plot just now.

-

Hi @dtaht - a couple more fun Flent Charts to add to the mix:

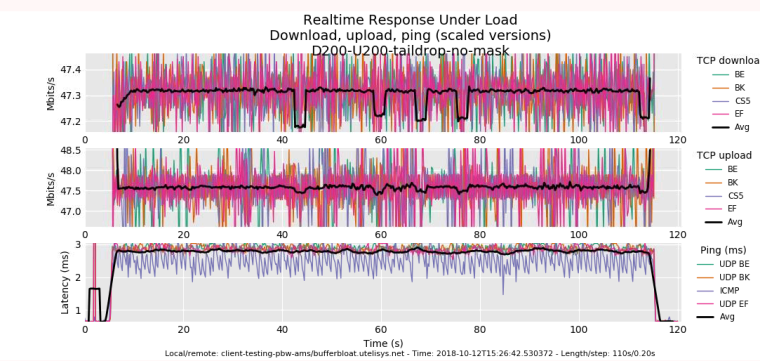

These Flent tests were done through my WAN connection (1Gbit symmetric Fiber) to a Google Cloud VM. The tests were done from East Coast US --> East Coast US. Traffic shaping via fq_codel enabled on pfSense router using default algorithm parameters and 950Mbit up/down limiters.

Test 1: Local host runs Linux (Debian 9) with BBR enabled and the fq qdisc. Remote host also runs Linux (Debian 9), but with no changes to the default TCP congestion control configuration:

Test 2: Local host runs Linux (Debian 9) with BBR enabled and the fq qdisc. Remote host also runs Linux (Debian 9), and this time also has BBR and the fq qdisc enabled, just like the local host:

The differences here are substantial: An overall throughput increase (up and down combined) of almost 15% while download alone saw an increase of approximately 36%. BBR is quite impressive!

-

The unified drops every 10 sec in the last test are an artifact of BBR's PROBE_RTT operation. In the real world, people shouldn't be opening 4 connections at the exact same time to the exact same place (rrul IS a stress test). The PROBE_RTT thing also happens during natural "pauses" in the conversation, so it can happen during crypto setup for https, as one example; dash-style video streaming is another.
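A simplified sketch of why flows started together dip together. It uses the two BBRv1 constants (a 10 second min-RTT filter window and a minimum of 200 ms spent in the state BBRv1 calls PROBE_RTT) and assumes the path stays busy, so the min-RTT sample is never refreshed between probes. Real BBR refreshes that sample whenever it sees a lower RTT, so treat the timings as an approximation.

```python
MIN_RTT_WINDOW_S = 10.0      # BBRv1 min-RTT filter window
PROBE_RTT_DURATION_S = 0.2   # BBRv1 minimum time spent in PROBE_RTT


def probe_rtt_starts(flow_start_s: float, test_length_s: float) -> list:
    """Approximate PROBE_RTT entry times for a flow on a continuously busy path."""
    times = []
    t = flow_start_s + MIN_RTT_WINDOW_S
    while t < flow_start_s + test_length_s:
        times.append(round(t, 3))
        # The window restarts when the probe ends, so the period is a bit over 10 s.
        t += MIN_RTT_WINDOW_S + PROBE_RTT_DURATION_S
    return times


if __name__ == "__main__":
    # Four flows opened at the same instant probe at the same instants.
    print([probe_rtt_starts(0.0, 60.0) for _ in range(4)])
    # Staggering the starts spreads the dips out.
    print([probe_rtt_starts(offset, 60.0) for offset in (0.0, 2.5, 5.0, 7.5)])
```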

The flent squarewave test showing bbr vs cubic behavior is revealing, however, with and without the shaper enabled. It's not all glorious....

tcp_4up_squarewave

To test a bbr download on that test type, use flent's --swap-up-down option (and note you did that in the title)

the 75ms (!!) induced latency spike is bothersome (the other side of the co-joined recovery phase), and it looks to my eye like you dropped all the udp traffic on both tests for some reason. ?? Great tcp is one thing; not being able to get dns is kind of problematic.

you can get more realistic real-world bbr behavior by switching to the rtt_fair tests and hitting 4 different servers at the same time.

-

@gsakes the download even-ness between flows on your last cake plot either shows diffserv priorities being stripped "out there" (common at the ISP and MPLS layer), or it shows cake "besteffort" being used on the ifb device.

tc -s qdisc show

Some ISPs re-mark all traffic they don't recognize the diffserv bits on to something else (comcast remarks to cs1). This is where you want to wash their bits out and re-mark them how you want.

If you have diffserv3 set on cake, your isp is stripping the bits. (complain), and you can save on cpu by switching inbound cake to "besteffort" mode.

To figure out whether your isp is stripping those bits, you can switch the current cake instance over to diffserv3 via

tc qdisc change dev ifbwhichever root cake diffserv3

and re-run the test.

For those in the video distribution biz, diffserv4 gives much more bandwidth to flows with the right markings and nearly nothing to best effort.
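When comparing what was sent with what arrives in a packet capture, it helps to split the IPv4 TOS (or IPv6 traffic class) byte into its DSCP and ECN parts. A minimal sketch; the helper names are made up for illustration, and the example bytes are just standard code points (EF is DSCP 46, CS1 is DSCP 8).

```python
def split_tos(tos: int):
    """Split a TOS / traffic class byte into (dscp, ecn)."""
    if not 0 <= tos <= 0xFF:
        raise ValueError("TOS byte must be in 0..255")
    return tos >> 2, tos & 0b11   # upper six bits are DSCP, lower two are ECN


def dscp_was_remarked(sent_tos: int, received_tos: int) -> bool:
    """True if the DSCP changed in transit; ECN changes alone do not count."""
    return split_tos(sent_tos)[0] != split_tos(received_tos)[0]


if __name__ == "__main__":
    print(split_tos(0xB8))                 # EF as sent
    print(split_tos(0x20))                 # CS1, e.g. after an ISP re-mark
    print(dscp_was_remarked(0xB8, 0x20))
```

Ignoring the ECN bits in the comparison matters, because an AQM along the path may legitimately set CE without touching the DSCP.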

to try and bring this back to pfsense: can it do stuff based on these bits? wash? classify? While I certainly think that all most everyone needs is a single fq_aqm qdisc, others really want classification, port number, etc.

http://snapon.lab.bufferbloat.net/~d/draft-taht-home-gateway-best-practices-00.html

-

On the BBR front, more progress.

https://lwn.net/ml/netdev/20180921155154.49489-1-edumazet@google.com/

-

@dtaht said in Playing with fq_codel in 2.4:

I don't get what you mean by codel + fq_codel. did you post a setup somewhere?

@dtaht said in Playing with fq_codel in 2.4:

I don't get what anyone means when they say pie + fq_pie or codel + fq_codel. I really don't. I was thinking you were basically doing the FAIRQ -> lots of codel or pie queues method?

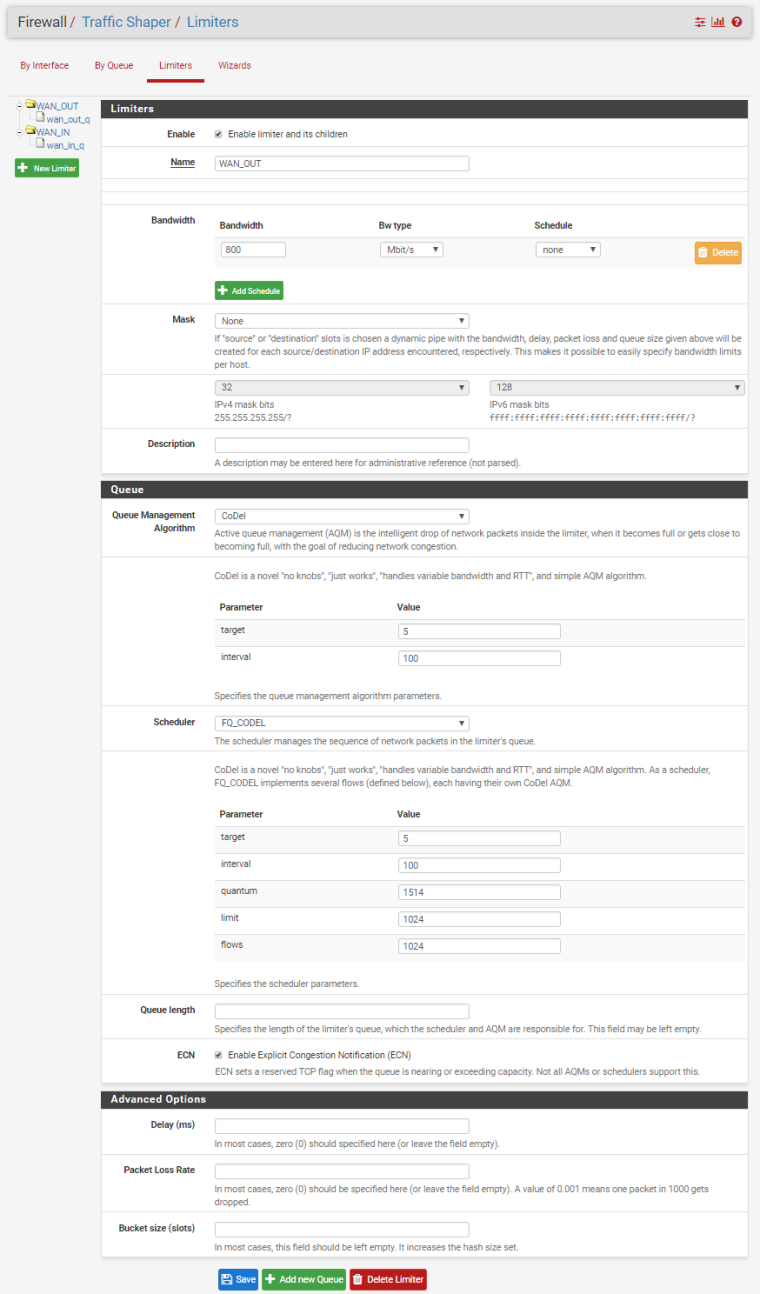

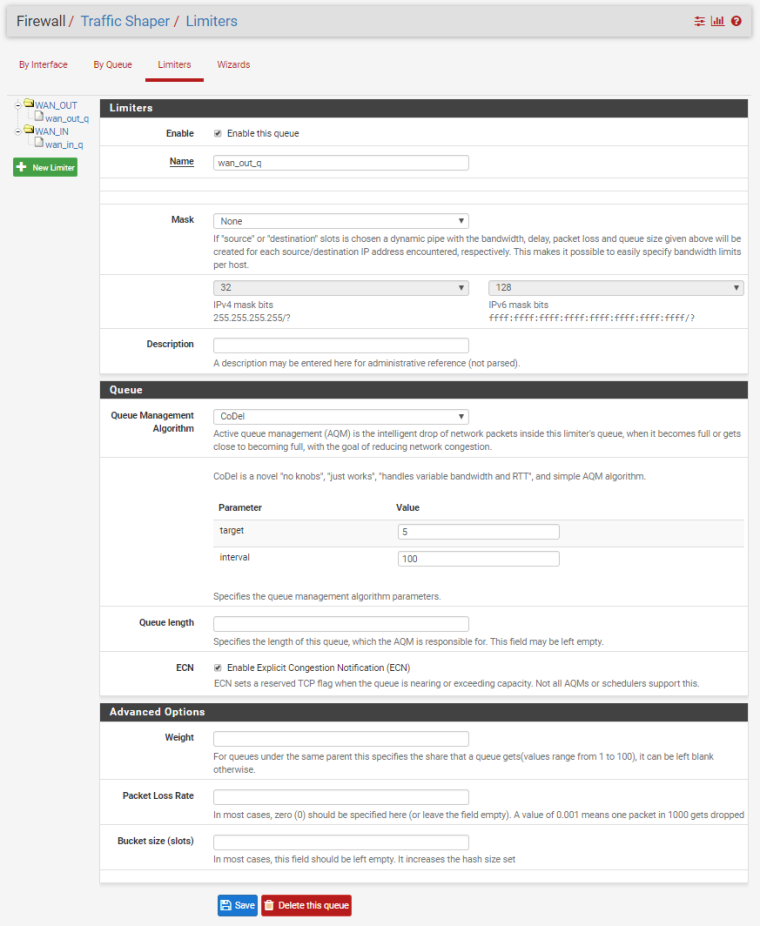

Sorry, I'm trying to keep this straight as well. I'm finding little inconsistencies in the implementation/documentation that I, and others, may be perpetuating as "how it is". The screenshot below shows the limiter configuration screen where you can choose your AQM and scheduler in the pfSense WebUI. What I see now though is that even if I choose CoDel as the pipe AQM, and FQ_CODEL as the scheduler, it appears that ipfw is using droptail as the AQM. Anyone else seeing this? Another bug?
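One way to check this mechanically is to scan `ipfw sched show` output for whatever follows the `pri N` field on each flowset (`q...`) line. A small sketch in Python; the sample text is abridged from the output in this post, and the assumption that the AQM description is everything after `pri N` is inferred from that output alone, not from the dummynet source.

```python
SAMPLE = """\
00001: 800.000 Mbit/s    0 ms burst 0
q65537  50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
 sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
 FQ_CODEL target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ECN
00002: 800.000 Mbit/s    0 ms burst 0
q65538  50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
 sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
 FQ_CODEL target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ECN
"""


def flowset_aqm(output: str) -> dict:
    """Map each flowset id (e.g. 'q65537') to the text after its 'pri N' field."""
    result = {}
    for line in output.splitlines():
        tokens = line.split()
        if not tokens or not tokens[0].startswith("q") or "pri" not in tokens:
            continue
        after_pri = tokens.index("pri") + 2   # skip 'pri' and its value
        result[tokens[0]] = " ".join(tokens[after_pri:])
    return result


if __name__ == "__main__":
    for flowset, aqm in flowset_aqm(SAMPLE).items():
        print(flowset, "->", aqm)
```

Feeding it the real command output after every config change makes the "droptail where CoDel was requested" case hard to miss.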

    [2.4.4-RELEASE][admin@pfSense.localdomain]/root: cat /tmp/rules.limiter
    pipe 1 config bw 800Mb codel target 5ms interval 100ms ecn
    sched 1 config pipe 1 type fq_codel target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ecn
    queue 1 config pipe 1 codel target 5ms interval 100ms ecn
    pipe 2 config bw 800Mb codel target 5ms interval 100ms ecn
    sched 2 config pipe 2 type fq_codel target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ecn
    queue 2 config pipe 2 codel target 5ms interval 100ms ecn
    [2.4.4-RELEASE][admin@pfSense.localdomain]/root:
    [2.4.4-RELEASE][admin@pfSense.localdomain]/root: ipfw sched show
    00001: 800.000 Mbit/s    0 ms burst 0
    q65537  50 sl. 0 flows (1 buckets) sched 1 weight 0 lmax 0 pri 0 droptail
     sched 1 type FQ_CODEL flags 0x0 0 buckets 1 active
     FQ_CODEL target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ECN
       Children flowsets: 1
    BKT Prot ___Source IP/port____ ____Dest. IP/port____ Tot_pkt/bytes Pkt/Byte Drp
      0 ip           0.0.0.0/0             0.0.0.0/0     39516 47775336 134 174936   0
    00002: 800.000 Mbit/s    0 ms burst 0
    q65538  50 sl. 0 flows (1 buckets) sched 2 weight 0 lmax 0 pri 0 droptail
     sched 2 type FQ_CODEL flags 0x0 0 buckets 1 active
     FQ_CODEL target 5ms interval 100ms quantum 1514 limit 1024 flows 1024 ECN
       Children flowsets: 2
      0 ip           0.0.0.0/0             0.0.0.0/0     384086 482821168 163 232916   0
    [2.4.4-RELEASE][admin@pfSense.localdomain]/root:
    [2.4.4-RELEASE][admin@pfSense.localdomain]/root: pfctl -vvsr | grep limiter
    @86(1539633367) match out on igb0 inet all label "USER_RULE: WAN_OUT limiter" dnqueue(1, 2)
    @87(1539633390) match in on igb0 inet all label "USER_RULE: WAN_IN limiter" dnqueue(2, 1)
    [2.4.4-RELEASE][admin@pfSense.localdomain]/root:

-

@dtaht I noticed that I get tons of bufferbloat when on 2.4 ghz, when compared to 5 ghz. On 5ghz I get no bufferbloat and it maxes out the speed of 150 mbits down. On 2.4 ghz I get a B score with 500+ ms in bufferbloat and speed is limited to 80 mbits down. Upload of 16 mbits works just fine on either band. Any way to create a tiered system for 2.4 ghz clients to use a different queue?

-

EDIT:

Turns out you CAN configure these queues, but you have to do so after the fact. We don't know the ID ahead of time, these are generated automatically. So we would have to make the patch handle a new number space, possibly reserved or pre-calculated, to use for configuring these queues.

Here's a re-iteration of the flow diagram for dummynet:

         (flow_mask|sched_mask)  sched_mask
         +---------+   weight Wx  +-------------+
         |         |->-[flow]-->--|             |-+
    -->--| QUEUE x |   ...        |             | |
         |         |->-[flow]-->--| SCHEDuler N | |
         +---------+              |             | |
             ...                  |             +--[LINK N]-->--
         +---------+   weight Wy  |             | +--[LINK N]-->--
         |         |->-[flow]-->--|             | |
    -->--| QUEUE y |   ...        |             | |
         |         |->-[flow]-->--|             | |
         +---------+              +-------------+ |
                                    +-------------+

-

@strangegopher said in Playing with fq_codel in 2.4:

@dtaht I noticed that I get tons of bufferbloat when on 2.4 ghz, when compared to 5 ghz. On 5ghz I get no bufferbloat and it maxes out the speed of 150 mbits down. On 2.4 ghz I get a B score with 500+ ms in bufferbloat and speed is limited to 80 mbits down. Upload of 16 mbits works just fine on either band. Any way to create a tiered system for 2.4 ghz clients to use a different queue?

The problem with wifi is that it can have a wildly variable rate. (Move farther from the AP.) We wrote up that fq_codel design (for linux) here: https://arxiv.org/pdf/1703.00064.pdf and it was covered in English here: https://lwn.net/Articles/705884/

There was a bsd dev working on it last I heard. OSX has it, I'd love it if they pushed their solution back into open source.

So while you can attach an 80 mbit limiter to the wifi device, that won't work if your rate falls below that as you move farther away.
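A toy model of that failure mode: once the wifi rate drops below the limiter rate, the limiter stops being the bottleneck and the backlog forms in the wifi device instead, where the router's fq_codel cannot see it. The sketch assumes a sender that keeps the limiter saturated, unlimited buffering, and no TCP reaction, so it shows the direction of the effect rather than a prediction.

```python
def wifi_backlog_delay_ms(limiter_mbit: float, wifi_mbit: float, seconds: float) -> float:
    """Queueing delay sitting in the wifi device after `seconds` of saturating traffic."""
    excess_mbit_per_s = max(0.0, limiter_mbit - wifi_mbit)  # arrives faster than wifi drains
    backlog_mbit = excess_mbit_per_s * seconds
    return backlog_mbit / wifi_mbit * 1000.0


if __name__ == "__main__":
    # Close to the AP: the 80 mbit limiter is the bottleneck, nothing piles up in the wifi queue.
    print(wifi_backlog_delay_ms(80, 150, 0.5))
    # Farther away: wifi drains at 40 mbit, and half a second of traffic leaves a deep queue.
    print(wifi_backlog_delay_ms(80, 40, 0.5))
```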

-

@mattund said in Playing with fq_codel in 2.4:

EDIT:

Turns out you CAN configure these queues, but you have to do so after the fact. We don't know the ID ahead of time, these are generated automatically. So we would have to make the patch handle a new number space, possibly reserved or pre-calculated, to use for configuring these queues.

Here's a re-iteration of the flow diagram for dummynet:

         (flow_mask|sched_mask)  sched_mask
         +---------+   weight Wx  +-------------+
         |         |->-[flow]-->--|             |-+
    -->--| QUEUE x |   ...        |             | |
         |         |->-[flow]-->--| SCHEDuler N | |
         +---------+              |             | |
             ...                  |             +--[LINK N]-->--
         +---------+   weight Wy  |             | +--[LINK N]-->--
         |         |->-[flow]-->--|             | |
    -->--| QUEUE y |   ...        |             | |
         |         |->-[flow]-->--|             | |
         +---------+              +-------------+ |
                                    +-------------+

Even with that diagram I'm confused. :) I think some of the intent here is to get per host and per flow fair queuing + aqm, which to me is something that uses the fairq scheduler per IP, each instance of which has a fq_codel qdisc.

But I still totally don't get what folk are describing as codel + fq_codel.

...

My biggest open question however is that we are hitting cpu limits on various higher speeds, and usually the way to improve that is to increase the token bucket size. Is there a way to do that here?

-

@dtaht @strangegopher There's also this https://www.bufferbloat.net/projects/make-wifi-fast/wiki/

-

@pentangle said in Playing with fq_codel in 2.4:

@dtaht @strangegopher There's also this https://www.bufferbloat.net/projects/make-wifi-fast/wiki/

That website (I'm the co-author) is a bit out of date. We didn't get funding last year... or this year. Still, the google doc at the bottom of that page is worth reading.... lots more can be done to make wifi better.