IPSec Dashboard Widget not displaying proper status

-

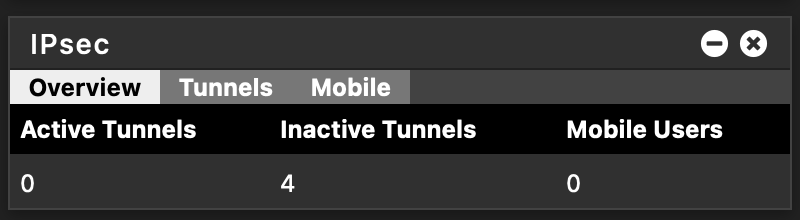

Running pfSense 2.5 RC on both ends with an IPsec site-to-site (S2S) tunnel between them. The tunnel is working and is reported as connected on the status page, and the shared resources at each site are reachable. However, the dashboard widget on both ends shows the tunnel as down even though it isn't. Is there something I need to change so the widget correctly shows the tunnel as active?

-

@mxnpd Having the same problem on 2.5 RC

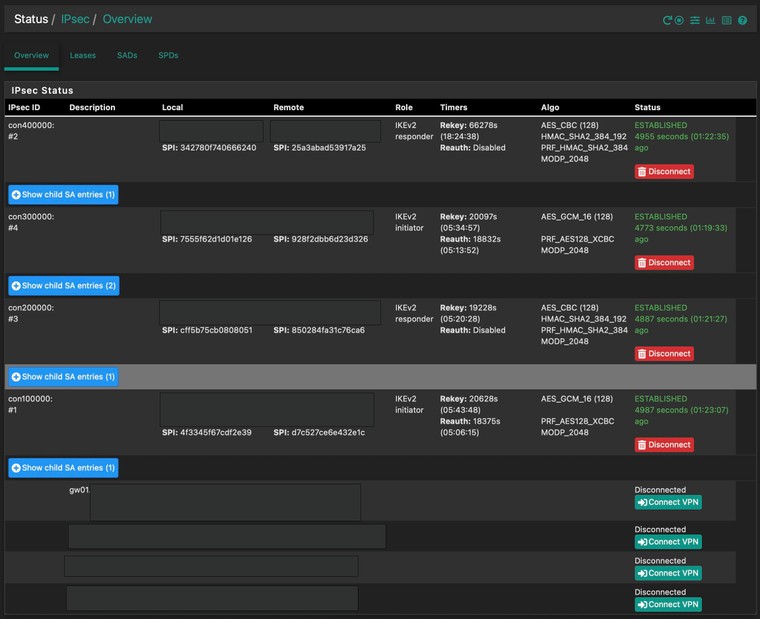

When I go into IPSec --> Status, I see duplicate entries for the tunnels, half of which are offline. I can ping across all tunnels without problems.

-

Status is correctly reflected here on the 2.5.0 systems I checked. Will need a lot more information than "it doesn't work".

Start with screenshots of the widget and Status > IPsec, along with the output of `swanctl --list-conns` and `swanctl --list-sas`. You can redact some info from it, but don't redact so much that it's impossible to tell what's what in the output.
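One way to redact without losing the ability to tell what's what is to replace each public IP address with a consistent placeholder, so the same peer stays recognizable across both commands. A minimal Python 3.9+ sketch, assuming you have saved the command output as text; the function name `redact_public_ipv4` and the `PUBLIC-n` placeholder format are made up for this example, it only handles IPv4, and hostnames and IKE identities are left for you to edit by hand:

```python
import ipaddress
import re

# Dotted-quad candidates; real validity is checked with the ipaddress module.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact_public_ipv4(text: str) -> str:
    """Replace each distinct public IPv4 address with a stable placeholder.

    The same address always maps to the same placeholder (PUBLIC-1, PUBLIC-2, ...),
    so SAs can still be matched to peers. Private, loopback and other non-global
    addresses (such as the 10.x tunnel networks) are left untouched.
    """
    mapping: dict[str, str] = {}

    def substitute(match: re.Match) -> str:
        candidate = match.group(0)
        try:
            addr = ipaddress.IPv4Address(candidate)
        except ipaddress.AddressValueError:
            return candidate  # not a valid address, e.g. 999.1.1.1
        if not addr.is_global:
            return candidate
        if candidate not in mapping:
            mapping[candidate] = f"PUBLIC-{len(mapping) + 1}"
        return mapping[candidate]

    return IPV4_RE.sub(substitute, text)


sample = "remote 'djt-mountains.dyndns.org' @ 73.78.141.7[500] local 10.0.0.0/16|/0 peer 73.78.141.7"
print(redact_public_ipv4(sample))
```

Review the result before posting, since anything the pattern does not recognize (hostnames, IPv6 addresses, IDs) passes through unchanged.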

-

@jimp said in IPSec Dashboard Widget not displaying proper status:

swanctl --list-conns

swanctl --list-sas

```
[2.5.0-RC][admin@gw01.tree.example.org]/root: swanctl --list-conns
bypass: IKEv1/2, no reauthentication, rekeying every 14400s
  local: %any
  remote: 127.0.0.1
  local unspecified authentication:
  remote unspecified authentication:
  bypasslan: PASS, no rekeying
    local: 10.0.0.0/16|/0 fc00::/64|/0
    remote: 10.0.0.0/16|/0 fc00::/64|/0
con100000: IKEv2, no reauthentication, rekeying every 25920s, dpd delay 10s
  local: 102.5.11.12
  remote: 32.161.158.23
  local pre-shared key authentication:
    id: djt-tree-nw.dyndns.org
  remote pre-shared key authentication:
    id: gw01.aws.example.org
  con100000: TUNNEL, rekeying every 3240s, dpd action is restart
    local: 10.0.0.0/16|/0
    remote: 10.10.0.0/24|/0
con200000: IKEv2, no reauthentication, rekeying every 25920s, dpd delay 10s
  local: 102.5.11.12
  remote: djt-mountains.dyndns.org
  local pre-shared key authentication:
    id: djt-tree.dyndns.org
  remote pre-shared key authentication:
    id: djt-mountains.dyndns.org
  con200000: TUNNEL, rekeying every 3240s, dpd action is restart
    local: 10.0.0.0/16|/0
    remote: 10.1.0.0/16|/0
con300000: IKEv2, no reauthentication, rekeying every 25920s, dpd delay 10s
  local: 102.5.11.12
  remote: fyre.dyndns.org
  local pre-shared key authentication:
    id: djt-tree.dyndns.org
  remote pre-shared key authentication:
    id: fyre.dyndns.org
  con300000: TUNNEL, rekeying every 77760s, dpd action is restart
    local: 10.0.0.0/16|/0
    remote: 10.5.0.0/16|/0
con400000: IKEv2, no reauthentication, rekeying every 77760s, dpd delay 10s
  local: 102.5.11.12
  remote: friendhome.net
  local pre-shared key authentication:
    id: djt-tree.dyndns.org
  remote pre-shared key authentication:
    id: friendhome.net
  con400000: TUNNEL, rekeying every 77760s, dpd action is restart
    local: 10.0.0.0/16|/0
    remote: 10.3.0.0/16|/0
[2.5.0-RC][admin@gw01.tree.example.org]/root: swanctl --list-sas
con400000: #2, ESTABLISHED, IKEv2, 25a3abad53917a25_i 342780f740666240_r*
  local 'djt-tree.dyndns.org' @ 102.5.11.12[4500]
  remote 'friendhome.net' @ 142.147.101.171[4500]
  AES_CBC-128/HMAC_SHA2_384_192/PRF_HMAC_SHA2_384/MODP_2048
  established 4733s ago, rekeying in 66500s
  con400000: #6, reqid 4, INSTALLED, TUNNEL, ESP:AES_GCM_16-128
    installed 4733s ago, rekeying in 71086s, expires in 81667s
    in c796477f, 598152356 bytes, 414268 packets, 3s ago
    out cbf1b204, 9060628 bytes, 76144 packets, 3s ago
    local 10.0.0.0/16|/0
    remote 10.3.0.0/16|/0
con300000: #4, ESTABLISHED, IKEv2, 7555f62d1d01e126_i* 928f2dbb6d23d326_r
  local 'djt-tree.dyndns.org' @ 102.5.11.12[500]
  remote 'fyre.dyndns.org' @ 71.105.247.162[500]
  AES_GCM_16-128/PRF_AES128_XCBC/MODP_2048
  established 4551s ago, rekeying in 20319s, reauth in 19054s
  con300000: #17, reqid 3, INSTALLED, TUNNEL, ESP:AES_GCM_16-128/MODP_2048
    installed 951s ago, rekeying in 72551s, expires in 85449s
    in c49e803b, 0 bytes, 0 packets, 200s ago
    out c4db7612, 0 bytes, 0 packets
    local 10.0.0.0/16|/0
    remote 10.5.0.0/16|/0
  con300000: #18, reqid 3, INSTALLED, TUNNEL, ESP:AES_GCM_16-128/MODP_2048
    installed 951s ago, rekeying in 71259s, expires in 85449s
    in c75c664e, 1008 bytes, 12 packets, 200s ago
    out c7b972e4, 1680 bytes, 12 packets, 200s ago
    local 10.0.0.0/16|/0
    remote 10.5.0.0/16|/0
con200000: #3, ESTABLISHED, IKEv2, 850284fa31c76ca6_i cff5b75cb0808051_r*
  local 'djt-tree.dyndns.org' @ 102.5.11.12[500]
  remote 'djt-mountains.dyndns.org' @ 73.78.141.7[500]
  AES_CBC-128/HMAC_SHA2_384_192/PRF_HMAC_SHA2_384/MODP_2048
  established 4665s ago, rekeying in 19450s
  con200000: #15, reqid 2, INSTALLED, TUNNEL, ESP:AES_CBC-128/HMAC_SHA2_384_192/MODP_2048
    installed 1671s ago, rekeying in 1254s, expires in 1929s
    in c305582b, 4368 bytes, 52 packets, 171s ago
    out c7734f0c, 8528 bytes, 52 packets, 171s ago
    local 10.0.0.0/16|/0
    remote 10.1.0.0/16|/0
con100000: #1, ESTABLISHED, IKEv2, 4f3345f67cdf2e39_i* d7c527ce6e432e1c_r
  local 'djt-tree-nw.dyndns.org' @ 68.233.179.107[4500]
  remote 'gw01.aws.example.org' @ 32.161.158.23[4500]
  AES_GCM_16-128/PRF_AES128_XCBC/MODP_2048
  established 4765s ago, rekeying in 20850s, reauth in 18597s
  con100000: #16, reqid 1, INSTALLED, TUNNEL-in-UDP, ESP:AES_GCM_16-128/MODP_2048
    installed 1669s ago, rekeying in 1243s, expires in 1931s
    in c1411d6b, 758071 bytes, 9486 packets, 1s ago
    out c5e4b34b, 19357148 bytes, 20233 packets, 1s ago
    local 10.0.0.0/16|/0
    remote 10.10.0.0/24|/0
```

Note that in the Status > IPsec, duplicate tunnels appear. The bottom 4 match the top 4 but are "offline."

-

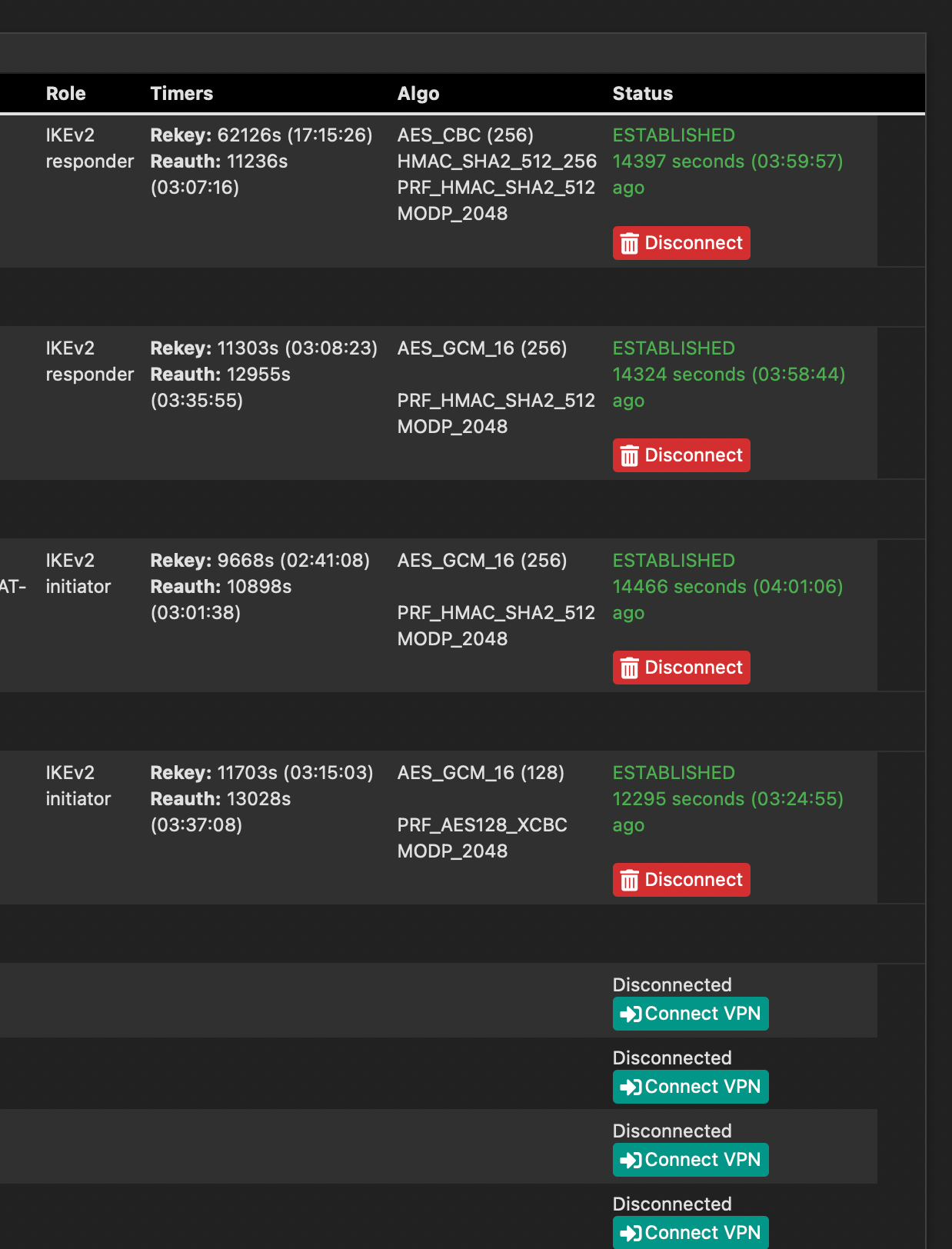

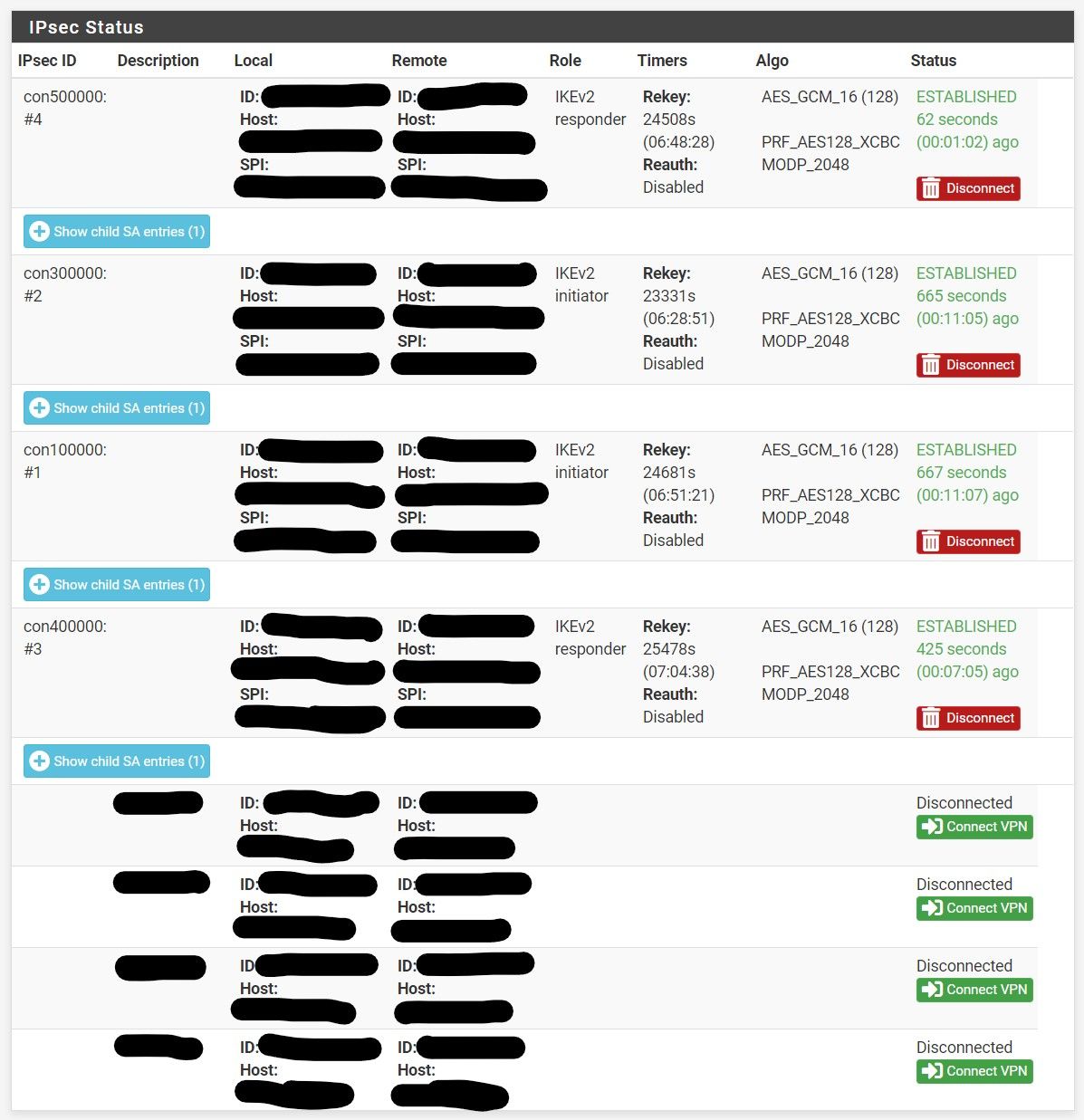

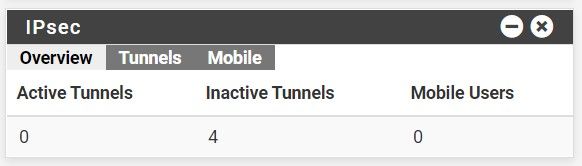

Also, this issue, whether it comes from strongSwan 5.9.1 or not, is reflected in the IPsec widget, which shows inaccurate information:

All these tunnels are up.

-

If you look at the output, none of the connection definitions exactly match what is in the active SA list, due to differences in remote entries (hostnames vs IP addresses) and so on. It's no surprise it can't line them up since it has no reliable way to do so. IIRC it can't just assume the conXXXX name matches for one reason or another.
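To make the mismatch concrete, here is a simplified Python model (not the actual pfSense widget code, which this thread does not show) that marks a connection as up only when its configured remote string appears verbatim as the remote address of an active SA. The `ConnDef`/`IkeSa` classes and `match_by_remote` are hypothetical; the values are taken from the `swanctl` output posted above, where con200000 is configured with `djt-mountains.dyndns.org` but its SA reports `73.78.141.7`:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConnDef:
    """One IKE connection as shown by `swanctl --list-conns` (hypothetical model)."""
    name: str
    remote: str  # may be a hostname such as a dynamic DNS name


@dataclass(frozen=True)
class IkeSa:
    """One active IKE SA as shown by `swanctl --list-sas` (hypothetical model)."""
    name: str
    remote_ip: str  # the address actually in use, never a hostname


def match_by_remote(conns: list[ConnDef], sas: list[IkeSa]) -> dict[str, bool]:
    """Mark a connection up only if some SA's remote IP equals its configured remote string."""
    sa_remotes = {sa.remote_ip for sa in sas}
    return {conn.name: conn.remote in sa_remotes for conn in conns}


conns = [
    ConnDef("con100000", "32.161.158.23"),
    ConnDef("con200000", "djt-mountains.dyndns.org"),
]
sas = [
    IkeSa("con100000", "32.161.158.23"),
    IkeSa("con200000", "73.78.141.7"),
]
print(match_by_remote(conns, sas))
```

With these inputs it prints `{'con100000': True, 'con200000': False}`: the connection configured by IP address lines up, while the one configured by hostname cannot, even though both SAs are ESTABLISHED.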

-

@jimp Not sure I follow. Are you saying if I use a dynamic host name as the remote endpoint, it will never "line up" because the host name does not match the resolved IP address? Both sides use host names and have "matching" configurations. If so, what is the proposed solution? As a reference, this is a new problem as of 2.5 RC. 2.4.5 works fine. Thank you.

-

That's right, because the IKE connection configuration has the hostname but no knowledge of what it resolved to (and you don't want it to, because it could change, and strongSwan re-resolves it when attempting to match connections).

The active SA list knows what the current remote endpoint is because it has to, it's actually communicating with it right now, but it doesn't retain knowledge of the hostname.

There were major changes to strongSwan backend behavior in 2.5.0 so it's no surprise there are some other differences here. Primarily in this realm we changed from the deprecated strongSwan ipsec.conf/stroke configuration system to their current swanctl.conf/VICI method.

It may be safer to make assumptions based on the con name now to improve that matching, but I'm not sure we'd want to 100% of the time since there could be use cases which benefit from them being separate.
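If the matching were keyed on the connection name instead, a rough Python sketch could look like the following. It assumes the usual `swanctl --list-sas` layout, where each IKE SA header starts at column 0 and its child SAs are indented beneath it, and it groups children under their parent IKE SA because the IKEv1 outputs posted later in this thread name the child SAs `con0`/`con1` rather than reusing the parent name. The function names and the up/down counting rule are illustrative assumptions, not pfSense's implementation, and as noted above there may be setups where name-based matching is not what you want:

```python
import re

# IKE SA header at column 0, e.g. "con200000: #8, ESTABLISHED, IKEv1, ..."
IKE_RE = re.compile(r"^(?P<name>[^\s:]+): #\d+, (?P<state>[A-Z_]+), IKEv[12]")
# CHILD SA header, indented under its IKE SA, e.g. "  con0: #25, reqid 1, INSTALLED, ..."
CHILD_RE = re.compile(r"^\s+(?P<name>[^\s:]+): #\d+, reqid \d+, (?P<state>[A-Z_]+),")


def tunnels_by_ike_name(list_sas_output: str) -> dict:
    """Map each IKE SA name to its state and the child SAs listed under it."""
    tunnels: dict = {}
    current = None
    for line in list_sas_output.splitlines():
        ike = IKE_RE.match(line)
        if ike:
            current = tunnels.setdefault(ike["name"], {"ike_up": False, "children": []})
            # Several IKE SAs may share one name (e.g. duplicates); any established one counts.
            current["ike_up"] = current["ike_up"] or ike["state"] == "ESTABLISHED"
            continue
        child = CHILD_RE.match(line)
        if child and current is not None:
            current["children"].append((child["name"], child["state"]))
    return tunnels


def widget_counts(configured_names: list, list_sas_output: str) -> tuple:
    """Return (up, down) for the configured connection names, matched purely by name."""
    tunnels = tunnels_by_ike_name(list_sas_output)
    up = sum(1 for name in configured_names if tunnels.get(name, {}).get("ike_up"))
    return up, len(configured_names) - up


SAMPLE = """\
con200000: #8, ESTABLISHED, IKEv1, b41c00ada96daa27_i d683f0dbe7a9c89a_r*
  local '81.174.148.33' @ 81.174.148.33[500]
  remote '116.203.86.130' @ 116.203.86.130[500]
  con0: #25, reqid 1, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    local 172.20.0.0/24|/0
    remote 172.16.28.0/28|/0
  con1: #26, reqid 2, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    local 10.0.0.0/8|/0
    remote 172.16.28.0/28|/0
"""

print(tunnels_by_ike_name(SAMPLE))
print(widget_counts(["con200000", "con300000"], SAMPLE))
```

Here `widget_counts(["con200000", "con300000"], SAMPLE)` returns `(1, 1)`, since only con200000 has an established IKE SA in the sample, and both of its child SAs are attributed to it despite their different names.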

-

@jimp Understood, and thanks for the explanation. In that case an obvious suggestion: why not resolve the hostname to IP before generating swanctl.conf, and update on IP changes?

Or, is there a workaround? Because it appears in the current implementation, there is no way to get a site-to-site tunnel displaying properly when remote_addrs is a hostname. Thank you

-

@ensnare said in IPSec Dashboard Widget not displaying proper status:

@jimp Understood, and thanks for the explanation. In that case an obvious suggestion: why not resolve the hostname to IP before generating swanctl.conf, and update on IP changes?

Then we have to have another daemon constantly updating the config when strongSwan can do it itself automatically in a more reliable way. Also strongSwan can check it when new connections are coming in so it's more responsive to changes than waiting on a daemon to do it periodically.

Or, is there a workaround? Because it appears in the current implementation, there is no way to get a site-to-site tunnel displaying properly when remote_addrs is a hostname. Thank you

The status is correct in the status page, just not the widget count. There is likely some room for improvement there for future versions but for the moment it's just a fact of life if you must use hostnames instead of IP addresses.

-

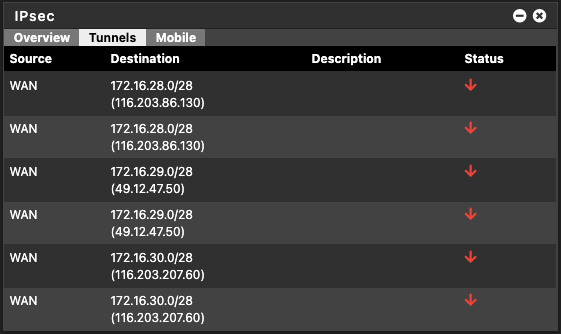

I'm experiencing the same issue, but my site-to-site configuration uses IP addresses, not hostnames. The widget and the status page both show tunnels as not connected, and there also seems to be a substantial amount of log spam related to dead peer detection (DPD).

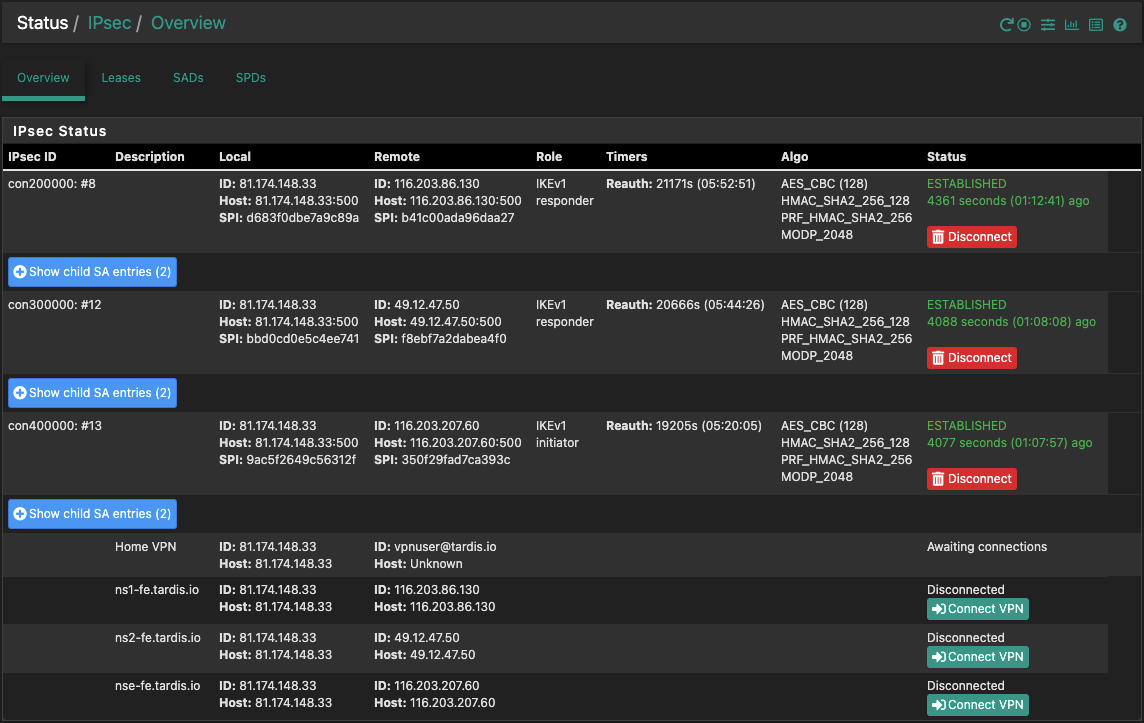

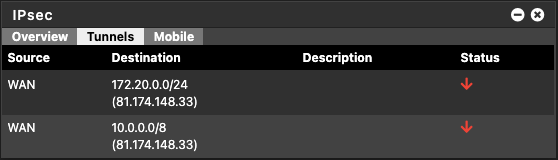

Host A (Primary):

Widget:

IPSec --> Status

```
swanctl --list-conns
bypass: IKEv1/2, no reauthentication, rekeying every 14400s
  local: %any
  remote: 127.0.0.1
  local unspecified authentication:
  remote unspecified authentication:
  bypasslan: PASS, no rekeying
    local: 10.0.0.0/8|/0 2001:470:6a3c:1115::/64|/0
    remote: 10.0.0.0/8|/0 2001:470:6a3c:1115::/64|/0
con-mobile: IKEv1/2, no reauthentication, rekeying every 25920s, dpd delay 10s
  local: 81.174.148.33
  remote: 0.0.0.0/0
  remote: ::/0
  local pre-shared key authentication:
    id: 81.174.148.33
  remote pre-shared key authentication:
  remote XAuth authentication:
  con-mobile: TUNNEL, rekeying every 3240s, dpd action is clear
    local: 0.0.0.0/0|/0
    remote: dynamic
con200000: IKEv1, reauthentication every 25920s, dpd delay 10s
  local: 81.174.148.33
  remote: 116.203.86.130
  local pre-shared key authentication:
    id: 81.174.148.33
  remote pre-shared key authentication:
    id: 116.203.86.130
  con0: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 172.20.0.0/24|/0
    remote: 172.16.28.0/28|/0
  con1: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 10.0.0.0/8|/0
    remote: 172.16.28.0/28|/0
con300000: IKEv1, reauthentication every 25920s, dpd delay 10s
  local: 81.174.148.33
  remote: 49.12.47.50
  local pre-shared key authentication:
    id: 81.174.148.33
  remote pre-shared key authentication:
    id: 49.12.47.50
  con0: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 172.20.0.0/24|/0
    remote: 172.16.29.0/28|/0
  con1: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 10.0.0.0/8|/0
    remote: 172.16.29.0/28|/0
con400000: IKEv1, reauthentication every 25920s, dpd delay 10s
  local: 81.174.148.33
  remote: 116.203.207.60
  local pre-shared key authentication:
    id: 81.174.148.33
  remote pre-shared key authentication:
    id: 116.203.207.60
  con0: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 172.20.0.0/24|/0
    remote: 172.16.30.0/28|/0
  con1: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 10.0.0.0/8|/0
    remote: 172.16.30.0/28|/0
swanctl --list-sas
con200000: #8, ESTABLISHED, IKEv1, b41c00ada96daa27_i d683f0dbe7a9c89a_r*
  local '81.174.148.33' @ 81.174.148.33[500]
  remote '116.203.86.130' @ 116.203.86.130[500]
  AES_CBC-128/HMAC_SHA2_256_128/PRF_HMAC_SHA2_256/MODP_2048
  established 4125s ago, reauth in 21407s
  con0: #25, reqid 1, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 1147s ago, rekeying in 1740s, expires in 2453s
    in cfe26eb3, 1655745 bytes, 1264 packets, 6s ago
    out c20430ee, 6392 bytes, 42 packets, 83s ago
    local 172.20.0.0/24|/0
    remote 172.16.28.0/28|/0
  con1: #26, reqid 2, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 1054s ago, rekeying in 1921s, expires in 2546s
    in c91c01bb, 1434954 bytes, 1407 packets, 99s ago
    out c7bf2c9f, 97128 bytes, 698 packets, 54s ago
    local 10.0.0.0/8|/0
    remote 172.16.28.0/28|/0
con300000: #12, ESTABLISHED, IKEv1, f8ebf7a2dabea4f0_i bbd0cd0e5c4ee741_r*
  local '81.174.148.33' @ 81.174.148.33[500]
  remote '49.12.47.50' @ 49.12.47.50[500]
  AES_CBC-128/HMAC_SHA2_256_128/PRF_HMAC_SHA2_256/MODP_2048
  established 3852s ago, reauth in 20902s
  con0: #28, reqid 3, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 869s ago, rekeying in 2267s, expires in 2731s
    in c8c59fdf, 1286776 bytes, 1050 packets, 3s ago
    out c966e53c, 80724 bytes, 123 packets, 107s ago
    local 172.20.0.0/24|/0
    remote 172.16.29.0/28|/0
  con1: #29, reqid 4, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 852s ago, rekeying in 2150s, expires in 2748s
    in cf22683f, 2016 bytes, 24 packets, 134s ago
    out c433623f, 3744 bytes, 24 packets, 134s ago
    local 10.0.0.0/8|/0
    remote 172.16.29.0/28|/0
con400000: #13, ESTABLISHED, IKEv1, 9ac5f2649c56312f_i* 350f29fad7ca393c_r
  local '81.174.148.33' @ 81.174.148.33[500]
  remote '116.203.207.60' @ 116.203.207.60[500]
  AES_CBC-128/HMAC_SHA2_256_128/PRF_HMAC_SHA2_256/MODP_2048
  established 3841s ago, reauth in 19441s
  con0: #27, reqid 5, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 876s ago, rekeying in 2070s, expires in 2724s
    in cf53a3e5, 1164684 bytes, 897 packets, 8s ago
    out c86a97be, 5704 bytes, 38 packets, 66s ago
    local 172.20.0.0/24|/0
    remote 172.16.30.0/28|/0
  con1: #30, reqid 6, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 846s ago, rekeying in 2058s, expires in 2754s
    in cbef0452, 2016 bytes, 24 packets, 137s ago
    out c52f80c4, 3744 bytes, 24 packets, 137s ago
    local 10.0.0.0/8|/0
    remote 172.16.30.0/28|/0
```

Host B (Remote):

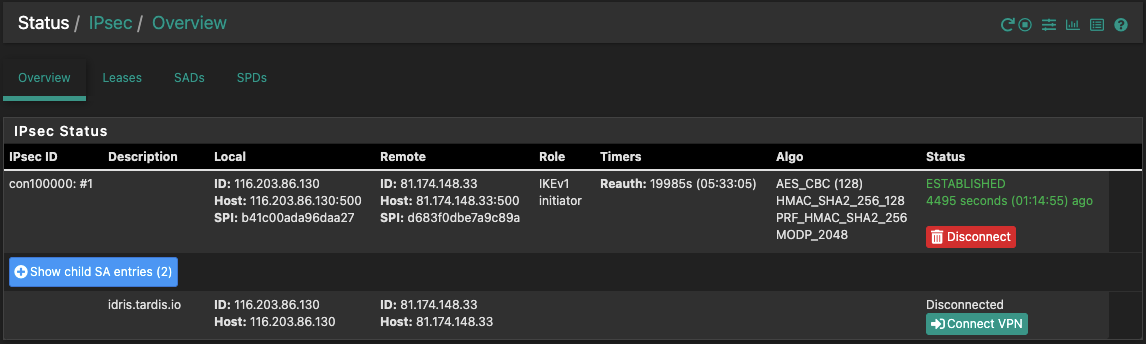

Widget:

IPSec --> Status:

```
swanctl --list-conns
bypass: IKEv1/2, no reauthentication, rekeying every 14400s
  local: %any
  remote: 127.0.0.1
  local unspecified authentication:
  remote unspecified authentication:
  bypasslan: PASS, no rekeying
    local: 172.16.28.2/32|/0
    remote: 172.16.28.2/32|/0
con100000: IKEv1, reauthentication every 25920s, dpd delay 10s
  local: 116.203.86.130
  remote: 81.174.148.33
  local pre-shared key authentication:
    id: 116.203.86.130
  remote pre-shared key authentication:
    id: 81.174.148.33
  con0: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 172.16.28.0/28|/0
    remote: 172.20.0.0/24|/0
  con1: TUNNEL, rekeying every 3240s, dpd action is hold
    local: 172.16.28.0/28|/0
    remote: 10.0.0.0/8|/0
swanctl --list-sas
con100000: #1, ESTABLISHED, IKEv1, b41c00ada96daa27_i* d683f0dbe7a9c89a_r
  local '116.203.86.130' @ 116.203.86.130[500]
  remote '81.174.148.33' @ 81.174.148.33[500]
  AES_CBC-128/HMAC_SHA2_256_128/PRF_HMAC_SHA2_256/MODP_2048
  established 4634s ago, reauth in 19846s
  con0: #5, reqid 1, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 1656s ago, rekeying in 1316s, expires in 1944s
    in c20430ee, 5248 bytes, 64 packets, 3s ago
    out cfe26eb3, 2517760 bytes, 1828 packets, 3s ago
    local 172.16.28.0/28|/0
    remote 172.20.0.0/24|/0
  con1: #6, reqid 2, INSTALLED, TUNNEL, ESP:AES_CBC-256/HMAC_SHA2_256_128/MODP_3072
    installed 1563s ago, rekeying in 1415s, expires in 2037s
    in c7bf2c9f, 102181 bytes, 1271 packets, 12s ago
    out c91c01bb, 1752956 bytes, 2037 packets, 12s ago
    local 172.16.28.0/28|/0
    remote 10.0.0.0/8|/0
```

As for the log spam, this is what I'm seeing (taken from Host B, but it is present on all instances). This never happened on 2.4.5 before the upgrade:

```
Feb 17 19:47:31 charon 99460 12[CFG] vici client 23 connected
Feb 17 19:47:31 charon 99460 09[CFG] vici client 23 registered for: list-conn
Feb 17 19:47:31 charon 99460 14[CFG] vici client 23 requests: list-conns
Feb 17 19:47:31 charon 99460 14[CFG] vici client 23 disconnected
Feb 17 19:47:36 charon 99460 07[CFG] vici client 24 connected
Feb 17 19:47:36 charon 99460 09[CFG] vici client 24 registered for: list-sa
Feb 17 19:47:36 charon 99460 09[CFG] vici client 24 requests: list-sas
Feb 17 19:47:36 charon 99460 09[CFG] vici client 24 disconnected
Feb 17 19:47:39 charon 99460 07[CFG] vici client 25 connected
Feb 17 19:47:39 charon 99460 13[CFG] vici client 25 registered for: list-sa
Feb 17 19:47:39 charon 99460 09[CFG] vici client 25 requests: list-sas
Feb 17 19:47:39 charon 99460 09[CFG] vici client 25 disconnected
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> sending DPD request
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> queueing ISAKMP_DPD task
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> activating new tasks
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> activating ISAKMP_DPD task
Feb 17 19:47:46 charon 99460 09[ENC] <con100000|1> generating INFORMATIONAL_V1 request 2019671338 [ HASH N(DPD) ]
Feb 17 19:47:46 charon 99460 09[NET] <con100000|1> sending packet: from 116.203.86.130[500] to 81.174.148.33[500] (108 bytes)
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> activating new tasks
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> nothing to initiate
Feb 17 19:47:46 charon 99460 09[NET] <con100000|1> received packet: from 81.174.148.33[500] to 116.203.86.130[500] (108 bytes)
Feb 17 19:47:46 charon 99460 09[ENC] <con100000|1> parsed INFORMATIONAL_V1 request 3098609444 [ HASH N(DPD_ACK) ]
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> activating new tasks
Feb 17 19:47:46 charon 99460 09[IKE] <con100000|1> nothing to initiate
Feb 17 19:47:47 charon 99460 13[CFG] vici client 26 connected
Feb 17 19:47:47 charon 99460 10[CFG] vici client 26 registered for: list-sa
Feb 17 19:47:47 charon 99460 09[CFG] vici client 26 requests: list-sas
Feb 17 19:47:47 charon 99460 09[CFG] vici client 26 disconnected
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> sending DPD request
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> queueing ISAKMP_DPD task
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> activating new tasks
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> activating ISAKMP_DPD task
Feb 17 19:47:56 charon 99460 10[ENC] <con100000|1> generating INFORMATIONAL_V1 request 3217847920 [ HASH N(DPD) ]
Feb 17 19:47:56 charon 99460 10[NET] <con100000|1> sending packet: from 116.203.86.130[500] to 81.174.148.33[500] (108 bytes)
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> activating new tasks
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> nothing to initiate
Feb 17 19:47:56 charon 99460 10[NET] <con100000|1> received packet: from 81.174.148.33[500] to 116.203.86.130[500] (108 bytes)
Feb 17 19:47:56 charon 99460 10[ENC] <con100000|1> parsed INFORMATIONAL_V1 request 866177057 [ HASH N(DPD_ACK) ]
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> activating new tasks
Feb 17 19:47:56 charon 99460 10[IKE] <con100000|1> nothing to initiate
Feb 17 19:48:00 newsyslog 39991 logfile turned over due to size>500K
Feb 17 19:48:00 newsyslog 39991 logfile turned over due to size>500K
Feb 17 19:48:00 charon 99460 15[CFG] vici client 27 connected
Feb 17 19:48:00 charon 99460 10[CFG] vici client 27 registered for: list-sa
Feb 17 19:48:00 charon 99460 11[CFG] vici client 27 requests: list-sas
Feb 17 19:48:00 charon 99460 10[CFG] vici client 27 disconnected
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> sending DPD request
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> queueing ISAKMP_DPD task
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> activating new tasks
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> activating ISAKMP_DPD task
Feb 17 19:48:06 charon 99460 10[ENC] <con100000|1> generating INFORMATIONAL_V1 request 3767522245 [ HASH N(DPD) ]
Feb 17 19:48:06 charon 99460 10[NET] <con100000|1> sending packet: from 116.203.86.130[500] to 81.174.148.33[500] (108 bytes)
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> activating new tasks
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> nothing to initiate
Feb 17 19:48:06 charon 99460 10[NET] <con100000|1> received packet: from 81.174.148.33[500] to 116.203.86.130[500] (108 bytes)
Feb 17 19:48:06 charon 99460 10[ENC] <con100000|1> parsed INFORMATIONAL_V1 request 3843431717 [ HASH N(DPD_ACK) ]
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> activating new tasks
Feb 17 19:48:06 charon 99460 10[IKE] <con100000|1> nothing to initiate
Feb 17 19:48:14 charon 99460 11[CFG] vici client 28 connected
Feb 17 19:48:14 charon 99460 08[CFG] vici client 28 registered for: list-sa
Feb 17 19:48:14 charon 99460 10[CFG] vici client 28 requests: list-sas
Feb 17 19:48:14 charon 99460 10[CFG] vici client 28 disconnected
Feb 17 19:48:27 charon 99460 06[CFG] vici client 29 connected
Feb 17 19:48:27 charon 99460 08[CFG] vici client 29 registered for: list-sa
Feb 17 19:48:27 charon 99460 08[CFG] vici client 29 requests: list-sas
Feb 17 19:48:27 charon 99460 16[CFG] vici client 29 disconnected
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> sending DPD request
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> queueing ISAKMP_DPD task
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> activating new tasks
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> activating ISAKMP_DPD task
Feb 17 19:48:31 charon 99460 16[ENC] <con100000|1> generating INFORMATIONAL_V1 request 485220965 [ HASH N(DPD) ]
Feb 17 19:48:31 charon 99460 16[NET] <con100000|1> sending packet: from 116.203.86.130[500] to 81.174.148.33[500] (108 bytes)
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> activating new tasks
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> nothing to initiate
Feb 17 19:48:31 charon 99460 16[NET] <con100000|1> received packet: from 81.174.148.33[500] to 116.203.86.130[500] (108 bytes)
Feb 17 19:48:31 charon 99460 16[ENC] <con100000|1> parsed INFORMATIONAL_V1 request 1050251960 [ HASH N(DPD_ACK) ]
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> activating new tasks
Feb 17 19:48:31 charon 99460 16[IKE] <con100000|1> nothing to initiate
```

-

OK after checking dozens of my local systems I finally found one that has the problem.

I opened https://redmine.pfsense.org/issues/11435 to track it. Not sure how easy it will be to fix, but doesn't look too hard.

-

I committed a fix for this which is working OK for me.

You can install the System Patches package and then create an entry for `ead6515637a34ce6e170e2d2b0802e4fa1e63a00` to fix the status page and `57beb9ad8ca11703778fc483c7cba0f6770657ac` to fix the widget.

-

@jimp Thanks so much. I just upgraded to the new version and saw these same issues. Your patch definitely resolved them on 21.02.

-

@jimp Thanks for the quick update. Those patches fixed my setup too.

-

@jimp Works great. Thanks so much. Also noticed that Status > IPSec loads more quickly now.

-

Works for me too. Thanks.

-

I get this only on the firewall I did a fresh install of 2.5.0 on. All of the ones that were upgraded don't do it. I'll just wait for 2.5.1.

-

The status problem is already known and fixed. To ensure all of the currently known and fixed IPsec issues are corrected, you can install the System Patches package and then create entries for the following commit IDs to apply the fixes: