Average writes to Disk

-

There's no way to get write data from eMMC AFAIK.

-

@fireodo said in Average writes to Disk:

Thank you! Those settings do the trick! Maybe @jimp can take a look at this thread and maybe it's something for the next version ...?

I changed it a few years ago because I was annoyed by the constant blinking of the LED when the SSD was working :). But I used those options with only very basic knowledge of zfs. Now I know it a little better, so I understand the downsides of this configuration, and I guess it is far from optimal. Also I'm not sure if setting it by default on zfs for next version would be good. There are too many variables across different devices to be sure this is completely safe. Fortunately it is easy to restore the configuration if something breaks, and I don't store important information on pfSense's drive.

-

@tomashk said in Average writes to Disk:

Also I'm not sure if setting it by default on zfs for next version would be good.

That's why I thought it would be good if one of the developers with far more knowledge about ZFS took a look at this situation and maybe found a more robust solution...

-

@stephenw10 if zfs does, at least in its default config, cause more writes to the eMMC or SSD.. You mentioned something about

There was a bug at one time that was not mounting root as noatime

That increased drive writes in normal use. Is there some change to the zfs config that might be prudent to make? As mentioned in this thread, for example:

System -> Advanced -> System Tunables - set vfs.zfs.txg.timeout to 180

I mean writing 20GB a day for not really doing anything does seem like a lot, it's possible there is some error in the math, or in the interpretation of the info, that leads to an exaggerated understanding of the amount written.. The lifetime write limit of eMMC is a concern if zfs is in fact drastically increasing the amount of data written..

-

@johnpoz said in Average writes to Disk:

I mean writing 20GB a day for not really doing anything does seem like a lot, it's possible there is some error in the math

This cannot be excluded. I was searching and testing this matter for approx. a week before I came to the forum with it.

-

I always disable DNS Reply Logging, check if that helps..

-

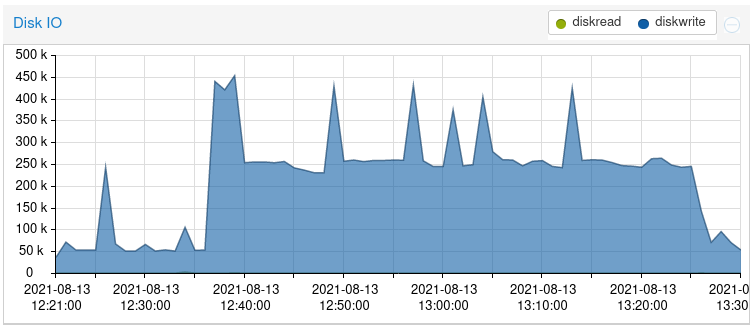

One thing I've noticed here is that having the dashboard open with the system information widget up will cause significantly more disk writes. Something that can easily happen when you are testing:

That's a 21.09 snapshot running ZFS.

Steve

-

@fireodo said in Average writes to Disk:

This cannot be excluded.

I didn't mean to sound like I don't trust your work or how much data is being written. But I personally don't understand this in enough depth to correlate what the iostat output shows with what the actual writes to the disk amount to.

Even with SMART data from an SSD, these numbers can be misinterpreted, because the values shown in SMART have to be read with the specific maker of the SSD in mind, etc.. I have seen this myself on my NAS, where it reports an insane amount of data written to the NVMe cache. But if you do the math a bit differently, the numbers seem more sane..

Either way I feel your information does warrant a further look into validating how much data is actually being written.. Since if 20GB a day is being written, and you have a small SSD or eMMC, which have a limited amount of writes over their life, the typical life could be shortened by a drastic amount..
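One way to sanity-check a daily figure is to convert an average write rate into GB per day. The following is a minimal sketch, assuming the rate is an average in KB/s (for example a value read from the kw/s column of `iostat -x`) and that 1 GB is counted as 1024 * 1024 KB. The function name `kbps_to_gb_per_day` is made up for illustration.

```shell
# Convert an average write rate in KB/s into GB written per day.
# Hypothetical helper; assumes 1 GB = 1024 * 1024 KB.
kbps_to_gb_per_day() {
    awk -v rate="$1" 'BEGIN { printf "%.2f\n", rate * 86400 / 1024 / 1024 }'
}

# Example: a steady 240 KB/s average write rate
kbps_to_gb_per_day 240
```

Under these assumptions a sustained average of roughly 240 KB/s already works out to about 20 GB/day, so a figure of that size does not require any obviously heavy disk activity.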

edit: @stephenw10 that looks interesting.. Must be something new for 21.09, I do not see any sort of graph available in 21.05.1... Or is that something outside of pfsense, like vm host where you have pfsense running?

edit2: It would help if we could pull such info from the eMMC... From the limited looking into it I have done so far, mmc-utils can be installed on pfsense.. but I have not been able to glean any info from /dev/da0 using it.. The only thing I have managed to do is get this using smartctl

    [21.05.1-RELEASE][admin@sg4860.local.lan]/: smartctl -d scsi -x /dev/da0
    smartctl 7.2 2020-12-30 r5155 [FreeBSD 12.2-STABLE amd64] (local build)
    Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org
    === START OF INFORMATION SECTION ===
    Vendor:               Generic
    Product:              Ultra HS-COMBO
    Revision:             1.98
    User Capacity:        30,601,641,984 bytes [30.6 GB]
    Logical block size:   512 bytes
    >> Terminate command early due to bad response to IEC mode page

I would have hoped this would have provided some info like the SMART info you can get from an SSD

    [21.05.1-RELEASE][admin@sg4860.local.lan]/: mmc --help
    Usage:
            mmc extcsd read <device>
                    Print extcsd data from <device>.

But just asking for status returns

    [21.05.1-RELEASE][admin@sg4860.local.lan]/: mmc status get /dev/da0
    open: Operation not permitted

-

IMO, disabling sync means you put yourself in danger of not having a consistent filesystem on disk which is critical for a firewall. If sync is disabled it means ZFS lies to the OS about what has been committed to disk.

Maybe it could be disabled for just the log dataset, or /tmp for example, or something along those lines, but disabling it globally is a bad idea. Even log data could be critical in a situation that needs debugging.

If it bugs you enough to disable it on a disk, I'd say that's a personal choice, but I'm not sure I could get behind recommending anyone do that by default.

I've been running ZFS on SSDs for years and so far haven't killed a disk with it.

-

ZFS is designed to be a highly resilient file system. That means it keeps multiple copies of some things spread around the disk to hopefully be able to patch around a future bad block. It also, by default, writes information to the disk every 5 seconds. Some of its behavior can be tweaked. Do a Google search for "zfs topology" and you will find some technical details.

Just my own WAG (wild-as*** guess), but ZFS is going to be much chattier writing to disk than a less robust file system such as UFS. It has to constantly flush things out to physical media, and write critical file system details to multiple locations. If you tweak it harshly and drastically alter the intervals between these disk writes, you may save your SSD from premature wear, but you will be losing some of the resiliency that drove you to install ZFS in the first place. This is because some critical piece of file allocation table update was still in RAM when the system crashed or lost power and had not gotten written to disk yet.

This may be a classic case of you can't "have your cake and eat it, too" ...

-

@jimp said in Average writes to Disk:

IMO, disabling sync means you put yourself in danger of not having a consistent filesystem on disk which is critical for a firewall. If sync is disabled it means ZFS lies to the OS about what has been committed to disk.

Maybe it could be disabled for just the log dataset, or /tmp for example, or something along those lines, but disabling it globally is a bad idea. Even log data could be critical in a situation that needs debugging.

If it bugs you enough to disable it on a disk, I'd say that's a personal choice, but I'm not sure I could get behind recommending anyone do that by default.

Thanks for looking in and clarifying things! Much appreciated!

Regards,

fireodo

-

@jimp said in Average writes to Disk:

I've been running ZFS on SSDs for years and so far haven't killed a disk with it.

Exactly - you would think if zfs drastically shortened the life of SSD, or eMMC there would be loads of info about it all over.

Since the early days of SSDs there has always been info about limiting unwarranted writes in an attempt to extend their life.. But I have a few SSDs that have had constant writes for years and have never had any issues.. I used an SSD in my esxi host with multiple VMs on it, with constant writes.. The box is still working, the SSD still works and looks healthy, etc.

Much of the info you can find is just not good on such details.. Even for small SSDs rated at 60TBW, that is a lot of freaking writes.. So even with large amounts per day you should have no problem with the SSD outliving the useful life of the device.. Sure not going to be using that SSD 20 years from now, etc..

But if in fact some 20GB of data is being written per day and you have some small, say 4GB, eMMC.. that amount of writes could burn through its life in an amount of time that is shorter than what you would expect for the life of the device.. Could it not? I am just not clear on whether the interpretation of what iostat shows actually works out to the amount of data actually written. If so, I would think there would be more than a few people bringing up that the 2GB or 4GB eMMC on their device has died, etc..
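The arithmetic behind that worry can be sketched in a few lines of shell. This is only a rough estimate: it ignores write amplification and wear leveling, the function name `days_until_worn` is made up for illustration, and the 3000 program/erase cycles used for the eMMC case is an assumed figure, not a spec for any particular part.

```shell
# Rough endurance estimate: days until a rated write budget is used up.
# $1 = rated endurance in GB written, $2 = average writes in GB/day.
# Hypothetical helper; integer math, ignores write amplification.
days_until_worn() {
    echo $(( $1 / $2 ))
}

# 60 TBW SSD (60000 GB) at 20 GB/day
days_until_worn 60000 20

# 4 GB eMMC, ASSUMING 3000 P/E cycles: 4 * 3000 = 12000 GB budget
days_until_worn $(( 4 * 3000 )) 20
```

Under those assumptions the SSD lasts about 3000 days (roughly eight years) at 20 GB/day, while the small eMMC lasts about 600 days, which is why the same write rate matters far more on a small eMMC.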

-

For my system I took these settings (considering also jimp's opinion)

zfs set sync=disabled zroot/tmp (pfSense/tmp on new 2.5.2 install)

zfs set sync=standard zroot/var (pfSense/var)

and fine tuning: vfs.zfs.txg.timeout=120

I hope to get a balance between security and the write intensity to disk. I will report in the next 24 hours.
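To confirm that settings like these actually took effect, something along the following lines should work from a shell. It is a sketch that assumes the pool is named zroot as in the commands above (pfSense on a newer install), so the dataset names may need adjusting.

```shell
# Pool name is an assumption taken from the commands above;
# use "pfSense" instead on newer installs.
POOL=zroot

# Show the current sync property for the two datasets
zfs get sync "$POOL/tmp" "$POOL/var"

# Show the current transaction group timeout in seconds
sysctl vfs.zfs.txg.timeout
```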

Thank you all for your patience and help!

fireodo

-

@johnpoz said in Average writes to Disk:

edit: @stephenw10 that looks interesting.. Must be something new for 21.09, I do not see any sort of graph available in 21.05.1... Or is that something outside of pfsense, like vm host where you have pfsense running?

Yeah, sorry, that's a VM in Proxmox. Waaaay easier to see the actual disk use there IMO.

Steve

-

@stephenw10 said in Average writes to Disk:

Yeah, sorry, that's a VM in Proxmox.

For a second there - thought we were getting some new information graphs ;) In light of this discussion, such info in the monitor graphs would be slick.. Especially if it could show the total amount of data written over the lifetime, etc.

-

As I promised:

After 24 hours the main system (with the settings above) reduced its writes from about 20 GB/day to about 1.8 GB/day :-)

edit1: after another 24h the writes have settled at 0.89 GB/day ;-)

edit2: after about 2 weeks the writes average 0.70 GB/day

(for those who are interested)

-

That is a significant change to be sure..

How much does UFS write?

-

@bmeeks said in Average writes to Disk:

This may be a classic case of you can't "have your cake and eat it, too" ...

You're right, it's always a balance, and making the decision goes hand in hand with bearing the consequences :-)

-

@johnpoz said in Average writes to Disk:

That is a significant change to be sure..

Indeed.

How much does UFS write?

I have no unit with UFS so I cannot say. The unit I had set up with UFS has in the meantime been moved to ZFS. But I remember the writes were much lower ...

-

Well, my question is then: if you're going to drastically reduce the sync to disk, which is one of the advantages of zfs, and until such time that some of the other things zfs could bring to the table are available for easy use, like snapshots and, say, rollback of updates..

If you're concerned about the amount of writes because of SSD or eMMC, wouldn't it make more sense to just use ufs?