Pfsense not responding to large packet pings

-

@johnpoz said in Pfsense not responding to large packet pings:

I have a really hard time believing it's 65k either.

Yep, even with jumbo frames you're not likely to see that. The largest frame I've seen a switch handle is 16K, and 9K is typical.

-

IIRC, we had 4K frames on Token Ring when I was at IBM.

-

@gemeenaapje said in Pfsense not responding to large packet pings:

70 fragments received

That doesn't look like enough...

What are the NICs you are passing through? How do they appear in pfSense in the output of, for example:

pciconf -lv
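On a VM the `pciconf -lv` list is long (dozens of virtual PCIe root ports), so it can help to pick out the NICs mechanically. A minimal sketch, assuming each device starts with a header like `vmx0@pci0:3:0:0:` and that its `device = '...'` and `class = ...` details follow before the next header; the function name `network_devices` is hypothetical:

```python
import re

# A device header such as "vmx0@pci0:3:0:0:" (driver name, then PCI selector).
HEADER = re.compile(r"(\w+)@(pci[\d:]+?):\s")
DEVICE = re.compile(r"device\s*=\s*'([^']*)'")
NETWORK = re.compile(r"\bclass\s*=\s*network\b")


def network_devices(text: str) -> list[tuple[str, str, str]]:
    """Return (driver name, PCI selector, device description) for every NIC.

    Each device's details are taken from the text between its header and the
    next header, so this works on normal multi-line `pciconf -lv` output and on
    a copy that has been flattened onto a single line.
    """
    headers = list(HEADER.finditer(text))
    nics = []
    for i, m in enumerate(headers):
        end = headers[i + 1].start() if i + 1 < len(headers) else len(text)
        details = text[m.end():end]
        if NETWORK.search(details):
            desc = DEVICE.search(details)
            nics.append((m.group(1), m.group(2), desc.group(1) if desc else ""))
    return nics
```

Fed the output posted later in this thread, it should reduce the list to vmx0, vmx1, bxe0 and bxe1.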

I could definitely believe this is some NIC hardware offloading or driver issue. The frame size is not really relevant here. I would expect to be able to pass a 65K packet in fragments over a link of any MTU size (within reason!), as long as it's correctly fragmented and reassembled, which seems to be failing here.
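To put the fragment counts in perspective, the number of IPv4 fragments one large ping needs can be worked out from the MTU. A minimal sketch, assuming IPv4 with a 20-byte header and no IP options, and that `ping -s N` sends N data bytes plus an 8-byte ICMP header; the function name `ipv4_fragments` is hypothetical:

```python
import math

IPV4_HEADER = 20  # bytes, assuming no IP options
ICMP_HEADER = 8   # bytes of ICMP echo header in front of the ping data


def ipv4_fragments(payload: int, mtu: int = 1500) -> int:
    """Fragments needed for one ICMP echo carrying `payload` data bytes."""
    datagram = payload + ICMP_HEADER  # everything that follows the IP header
    if datagram + IPV4_HEADER > 65535:
        raise ValueError("exceeds the 65535-byte IPv4 maximum")
    # Every fragment except the last must carry a multiple of 8 data bytes.
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    return math.ceil(datagram / per_fragment)
```

For example, `ipv4_fragments(65500)` gives 45 at a 1500-byte MTU and `ipv4_fragments(65500, 9000)` gives 8, so every one of those fragments has to arrive and be reassembled for a single reply.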

Steve

-

@stephenw10 said in Pfsense not responding to large packet pings:

The frame size is not really relevant here

Very true..

-

@stephenw10 Hi Steve.

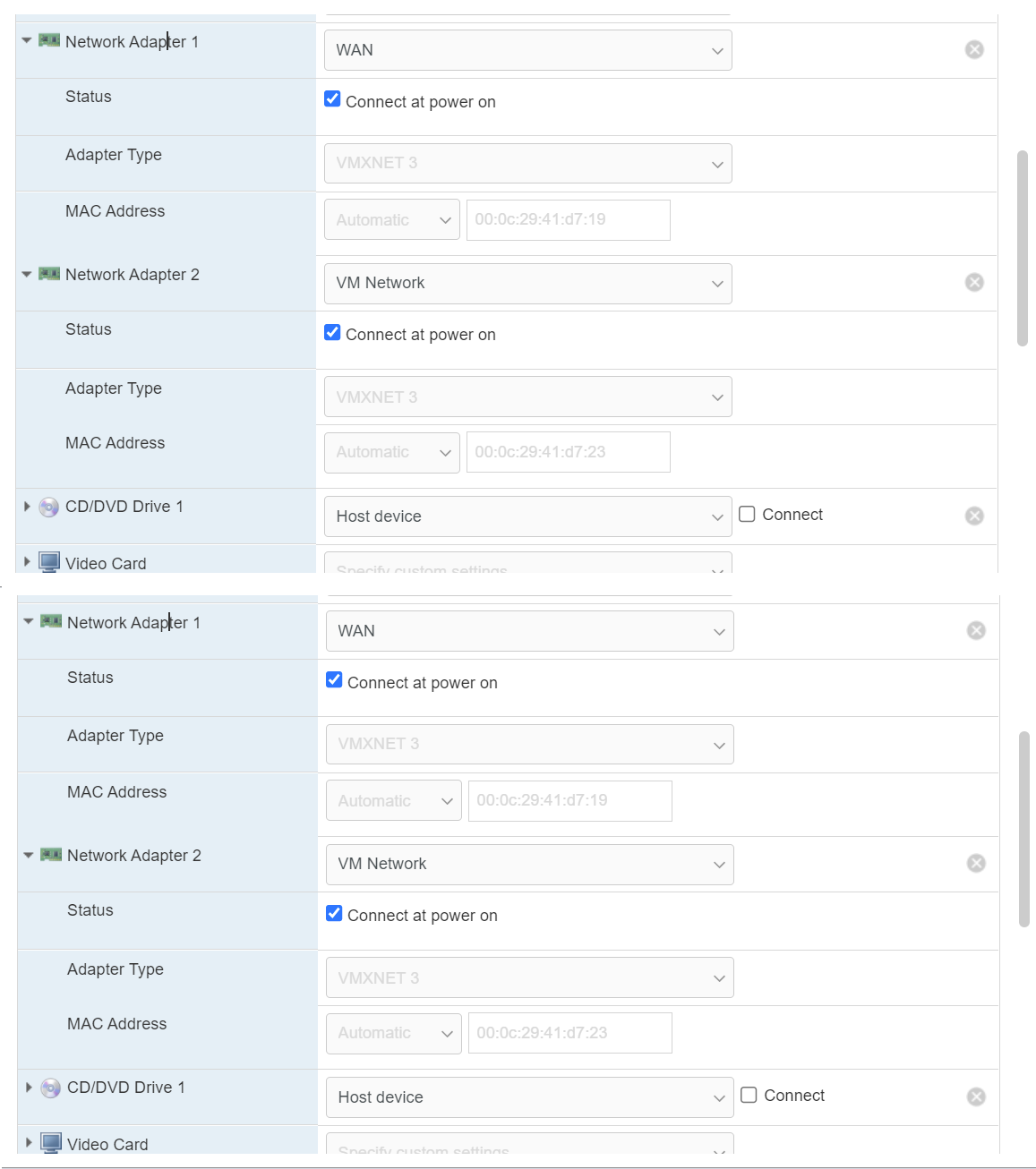

Here are the results of that command:

    hostb0@pci0:0:0:0: class=0x060000 card=0x197615ad chip=0x71908086 rev=0x01 hdr=0x00 vendor = 'Intel Corporation' device = '440BX/ZX/DX - 82443BX/ZX/DX Host bridge' class = bridge subclass = HOST-PCI
    pcib1@pci0:0:1:0: class=0x060400 card=0x00000000 chip=0x71918086 rev=0x01 hdr=0x01 vendor = 'Intel Corporation' device = '440BX/ZX/DX - 82443BX/ZX/DX AGP bridge' class = bridge subclass = PCI-PCI
    isab0@pci0:0:7:0: class=0x060100 card=0x197615ad chip=0x71108086 rev=0x08 hdr=0x00 vendor = 'Intel Corporation' device = '82371AB/EB/MB PIIX4 ISA' class = bridge subclass = PCI-ISA
    atapci0@pci0:0:7:1: class=0x01018a card=0x197615ad chip=0x71118086 rev=0x01 hdr=0x00 vendor = 'Intel Corporation' device = '82371AB/EB/MB PIIX4 IDE' class = mass storage subclass = ATA
    intsmb0@pci0:0:7:3: class=0x068000 card=0x197615ad chip=0x71138086 rev=0x08 hdr=0x00 vendor = 'Intel Corporation' device = '82371AB/EB/MB PIIX4 ACPI' class = bridge
    none0@pci0:0:7:7: class=0x088000 card=0x074015ad chip=0x074015ad rev=0x10 hdr=0x00 vendor = 'VMware' device = 'Virtual Machine Communication Interface' class = base peripheral
    vgapci0@pci0:0:15:0: class=0x030000 card=0x040515ad chip=0x040515ad rev=0x00 hdr=0x00 vendor = 'VMware' device = 'SVGA II Adapter' class = display subclass = VGA
    mpt0@pci0:0:16:0: class=0x010000 card=0x197615ad chip=0x00301000 rev=0x01 hdr=0x00 vendor = 'Broadcom / LSI' device = '53c1030 PCI-X Fusion-MPT Dual Ultra320 SCSI' class = mass storage subclass = SCSI
    pcib2@pci0:0:17:0: class=0x060401 card=0x079015ad chip=0x079015ad rev=0x02 hdr=0x01 vendor = 'VMware' device = 'PCI bridge' class = bridge subclass = PCI-PCI
    pcib3@pci0:0:21:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib4@pci0:0:21:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib5@pci0:0:21:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib6@pci0:0:21:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib7@pci0:0:21:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib8@pci0:0:21:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib9@pci0:0:21:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib10@pci0:0:21:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib11@pci0:0:22:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib12@pci0:0:22:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib13@pci0:0:22:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib14@pci0:0:22:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib15@pci0:0:22:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib16@pci0:0:22:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib17@pci0:0:22:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib18@pci0:0:22:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib19@pci0:0:23:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib20@pci0:0:23:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib21@pci0:0:23:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib22@pci0:0:23:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib23@pci0:0:23:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib24@pci0:0:23:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib25@pci0:0:23:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib26@pci0:0:23:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib27@pci0:0:24:0: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib28@pci0:0:24:1: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib29@pci0:0:24:2: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib30@pci0:0:24:3: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib31@pci0:0:24:4: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib32@pci0:0:24:5: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib33@pci0:0:24:6: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    pcib34@pci0:0:24:7: class=0x060400 card=0x07a015ad chip=0x07a015ad rev=0x01 hdr=0x01 vendor = 'VMware' device = 'PCI Express Root Port' class = bridge subclass = PCI-PCI
    uhci0@pci0:2:0:0: class=0x0c0300 card=0x197615ad chip=0x077415ad rev=0x00 hdr=0x00 vendor = 'VMware' device = 'USB1.1 UHCI Controller' class = serial bus subclass = USB
    ehci0@pci0:2:3:0: class=0x0c0320 card=0x077015ad chip=0x077015ad rev=0x00 hdr=0x00 vendor = 'VMware' device = 'USB2 EHCI Controller' class = serial bus subclass = USB
    vmx0@pci0:3:0:0: class=0x020000 card=0x07b015ad chip=0x07b015ad rev=0x01 hdr=0x00 vendor = 'VMware' device = 'VMXNET3 Ethernet Controller' class = network subclass = ethernet
    vmx1@pci0:11:0:0: class=0x020000 card=0x07b015ad chip=0x07b015ad rev=0x01 hdr=0x00 vendor = 'VMware' device = 'VMXNET3 Ethernet Controller' class = network subclass = ethernet
    bxe0@pci0:19:0:0: class=0x020000 card=0x3382103c chip=0x168e14e4 rev=0x10 hdr=0x00 vendor = 'Broadcom Inc. and subsidiaries' device = 'NetXtreme II BCM57810 10 Gigabit Ethernet' class = network subclass = ethernet
    bxe1@pci0:19:0:1: class=0x020000 card=0x3382103c chip=0x168e14e4 rev=0x10 hdr=0x00 vendor = 'Broadcom Inc. and subsidiaries' device = 'NetXtreme II BCM57810 10 Gigabit Ethernet' class = network subclass = ethernet

Sorry, I was also mistaken: the VM NICs (vmx0 and vmx1) are connected in the guest too. I think they're there as backups; it's been so long since I set them up that I've forgotten.

On the HP SFP+ NIC, one port connects the server to the main switch (fibre); the other connects directly to my fibre ISP.

-

Do you see the same thing if you use the vmx interfaces instead or is it just on the bxe NICs?

Do you have all the hardware off-loading disabled?

-

@stephenw10 said in Pfsense not responding to large packet pings:

Do you see the same thing if you use the vmx interfaces instead or is it just on the bxe NICs?

Do you have all the hardware off-loading disabled?

I tried unchecking the "disable" options one by one (i.e. enabling each offload), but that didn't help, so I disabled offloading again.

The router serves a web server I run at home, so I can't risk changing settings or swapping NICs without upsetting my customers. Also, it took me so long just to get this working with all the VLAN tags and so on coming from the ISP.

Is there anything else I could test?

-

@gemeenaapje said in Pfsense not responding to large packet pings:

Is there anything else I could test?

Yeah ;)

Change the title of the thread, as it is pretty clear by now that "big packets" is a hardware/driver issue.

There's plenty of proof above that pfSense itself can go to 65xxx.

@gemeenaapje said in Pfsense not responding to large packet pings:

Also took me so long ....

Well, let's say you're not finished yet.

Go bare metal with pfSense, take the VM out of the configuration, and you'll be OK.

VMware support might be able to tell you whether very big packets are possible.

-

@gertjan I can't change the title; it says too much time has passed.

Other VMs respond fine to 65500-byte pings over the network. It's only pfSense, which uses direct passthrough of the additional NIC card, that doesn't.

It must be the drivers, then. I just don't know where to start with it :-/ FreeBSD is so difficult.

Having the VM gives me a huge advantage over bare metal: if necessary, I can quickly roll back broken upgrades or changes. I've done this many times and it reduces downtime for my business.

-

OK, something just came back to me!

I'm using the drivers in pfSense itself, not the VM's, so the pfSense OS controls the NIC drivers.

The driver's man page is here:

https://www.freebsd.org/cgi/man.cgi?query=bxe&sektion=4

I see settings there for offloading too.

Do I need to change some of these settings myself, or should the normal pfSense web configuration page control them too?

Here's a dump of some settings:

    hw.bxe.udp_rss: 0
    hw.bxe.autogreeen: 0
    hw.bxe.mrrs: -1
    hw.bxe.max_aggregation_size: 32768
    hw.bxe.rx_budget: -1
    hw.bxe.hc_tx_ticks: 50
    hw.bxe.hc_rx_ticks: 25
    hw.bxe.max_rx_bufs: 4080
    hw.bxe.queue_count: 4
    hw.bxe.interrupt_mode: 2
    hw.bxe.debug: 0
    dev.bxe.1.queue.3.nsegs_path2_errors: 0
    dev.bxe.1.queue.3.nsegs_path1_errors: 0
    dev.bxe.1.queue.3.tx_mq_not_empty: 0
    dev.bxe.1.queue.3.bd_avail_too_less_failures: 0
    dev.bxe.1.queue.3.tx_request_link_down_failures: 1
    dev.bxe.1.queue.3.bxe_tx_mq_sc_state_failures: 0
    dev.bxe.1.queue.3.tx_queue_full_return: 0
    dev.bxe.1.queue.3.mbuf_alloc_tpa: 64
    dev.bxe.1.queue.3.mbuf_alloc_sge: 1020
    dev.bxe.1.queue.3.mbuf_alloc_rx: 4080
    dev.bxe.1.queue.3.mbuf_alloc_tx: 0
    dev.bxe.1.queue.3.mbuf_rx_sge_mapping_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_sge_alloc_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_tpa_mapping_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_tpa_alloc_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_bd_mapping_failed: 0
    dev.bxe.1.queue.3.mbuf_rx_bd_alloc_failed: 0
    dev.bxe.1.que

-

@gemeenaapje said in Pfsense not responding to large packet pings:

Here's a dump of some settings:

Looks like these settings belong in /boot/loader.conf.local
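The `hw.bxe.*` entries in that dump are the driver's boot-time tunables, which is why they go in `/boot/loader.conf.local` and only take effect after a reboot. A minimal sketch, assuming the dump is a run of `name: value` pairs as pasted above, that turns the `hw.bxe.*` entries into loader.conf syntax as a starting template to edit one value at a time; the function name `loader_conf_lines` is hypothetical, and the `dev.bxe.*` entries, which appear to be per-queue statistics counters, are skipped:

```python
import re

# One "name: value" pair as printed by sysctl, possibly many on one line.
PAIR = re.compile(r"([a-z][\w.]*):\s+(-?\w+)")


def loader_conf_lines(dump: str, prefix: str = "hw.bxe.") -> list[str]:
    """Convert tunables starting with `prefix` into name="value" lines."""
    lines = []
    for name, value in PAIR.findall(dump):
        if name.startswith(prefix):  # drops the dev.bxe.* counters
            lines.append(f'{name}="{value}"')
    return lines
```

Copying the current values unchanged is a no-op; the point is to have the exact tunable names (including the driver's own spelling `autogreeen`) ready to change deliberately.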

-

@gertjan Looks like it's already disabled....

    bxe1: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        description: LAN
        options=120b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_MAGIC,VLAN_HWFILTER>
        ether 10:60:4b:b4:a0:44
        inet6 fe80xxxxxxxxxxxa044%bxe1 prefixlen 64 scopeid 0x4
        inet6 200xxxxxxxxxxxxx prefixlen 64
        inet 192.168.2.2 netmask 0xffffff00 broadcast 192.168.2.255
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

-

Strange, it looks like offloading (and other) settings are different for WAN vs LAN.

I've no idea what I should be using though.
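The `options=` value on each interface is a hexadecimal bitmask, so decoding it shows exactly which offloads differ between bxe0 (0x527bb) and bxe1 (0x120b8). A minimal sketch, assuming the IFCAP_* bit values from FreeBSD's `net/if.h` listed in the table (checked against both masks in this thread, which decode to exactly the names ifconfig printed); `decode` and `offload_off_args` are hypothetical helper names:

```python
# IFCAP_* bit values from FreeBSD's net/if.h, limited to the bits relevant here.
IFCAP_BITS = {
    0x00001: "RXCSUM",
    0x00002: "TXCSUM",
    0x00004: "NETCONS",
    0x00008: "VLAN_MTU",
    0x00010: "VLAN_HWTAGGING",
    0x00020: "JUMBO_MTU",
    0x00040: "POLLING",
    0x00080: "VLAN_HWCSUM",
    0x00100: "TSO4",
    0x00200: "TSO6",
    0x00400: "LRO",
    0x00800: "WOL_UCAST",
    0x01000: "WOL_MCAST",
    0x02000: "WOL_MAGIC",
    0x04000: "TOE4",
    0x08000: "TOE6",
    0x10000: "VLAN_HWFILTER",
    0x40000: "VLAN_HWTSO",
}

# Checksum, segmentation and large-receive offloads, each mapped to the
# ifconfig(8) argument that switches it off.
OFFLOAD_OFF = {
    "RXCSUM": "-rxcsum",
    "TXCSUM": "-txcsum",
    "TSO4": "-tso4",
    "TSO6": "-tso6",
    "LRO": "-lro",
    "VLAN_HWTSO": "-vlanhwtso",
}


def decode(options: int) -> list[str]:
    """Names of the capability bits set in an ifconfig options= value."""
    names = [name for bit, name in sorted(IFCAP_BITS.items()) if options & bit]
    unknown = options & ~sum(IFCAP_BITS)  # bits missing from the table above
    if unknown:
        names.append(f"UNKNOWN_{unknown:#x}")
    return names


def offload_off_args(options: int) -> list[str]:
    """ifconfig arguments that would turn off the offloads enabled in `options`."""
    return [OFFLOAD_OFF[name] for name in decode(options) if name in OFFLOAD_OFF]


wan = decode(0x527bb)  # bxe0
lan = decode(0x120b8)  # bxe1
print("enabled on bxe0 only:", [n for n in wan if n not in lan])
print("ifconfig bxe0", " ".join(offload_off_args(0x527bb)))
```

With these two masks it reports RXCSUM, TXCSUM, TSO4, TSO6, LRO and VLAN_HWTSO as enabled on bxe0 only, and suggests `ifconfig bxe0 -rxcsum -txcsum -tso4 -tso6 -lro -vlanhwtso` as a temporary test. Changes made with ifconfig at the shell are not saved to the pfSense configuration, and they affect live traffic on that interface.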

    bxe0: flags=8843<UP,BROADCAST,RUNNING,SIMPLEX,MULTICAST> metric 0 mtu 1508
        options=527bb<RXCSUM,TXCSUM,VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,TSO4,TSO6,LRO,WOL_MAGIC,VLAN_HWFILTER,VLAN_HWTSO>
        ether 10:60:4b:b4:a0:40
        inet6 fe80:***************40%bxe0 prefixlen 64 scopeid 0x3
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>
    bxe1: flags=8943<UP,BROADCAST,RUNNING,PROMISC,SIMPLEX,MULTICAST> metric 0 mtu 1500
        description: LAN
        options=120b8<VLAN_MTU,VLAN_HWTAGGING,JUMBO_MTU,VLAN_HWCSUM,WOL_MAGIC,VLAN_HWFILTER>
        ether 10:60:4b:b4:a0:44
        inet6 fe80xxxxxxxxxxxa044%bxe1 prefixlen 64 scopeid 0x4
        inet6 200xxxxxxxxxxxxx prefixlen 64
        inet 192.168.2.2 netmask 0xffffff00 broadcast 192.168.2.255
        media: Ethernet autoselect (10Gbase-SR <full-duplex>)
        status: active
        nd6 options=21<PERFORMNUD,AUTO_LINKLOCAL>

-

Do they have the same capabilities? Try:

ifconfig -vvvma

Are those vmxnet NICs currently assigned in the pfSense VM?

If not, try assigning one to something and see whether it responds to large packets.

This seems likely to be an issue with the bxe driver or the NIC itself but we need to confirm that by, for example, showing vmx is not affected.

Steve
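To compare a bxe interface with a vmx one, it helps to find the exact payload size at which replies stop rather than testing a single 65500-byte ping. A minimal sketch, assuming a `ping` that accepts `-c` (count) and `-s` (data bytes) and exits 0 when a reply arrives, as FreeBSD and Linux iputils ping do (some systems restrict large `-s` values to root), and assuming that once a size fails every larger size fails too; `ping_ok` and `largest_working_size` are hypothetical helper names:

```python
import subprocess
from typing import Callable, Optional


def ping_ok(host: str, size: int) -> bool:
    """Send one ICMP echo with `size` data bytes; True if a reply came back."""
    try:
        result = subprocess.run(
            ["ping", "-c", "1", "-s", str(size), host],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=5,  # seconds; the ping process is killed if it hangs
        )
    except subprocess.TimeoutExpired:
        return False
    return result.returncode == 0


def largest_working_size(probe: Callable[[int], bool],
                         lo: int = 56, hi: int = 65507) -> Optional[int]:
    """Binary-search the largest size for which probe(size) succeeds.

    Assumes failures are monotonic (if N fails, every size above N fails).
    Returns None if even `lo` fails.
    """
    if not probe(lo):
        return None
    if probe(hi):
        return hi
    # Invariant: probe(lo) succeeded and probe(hi) failed.
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if probe(mid):
            lo = mid
        else:
            hi = mid
    return lo
```

From a LAN host, `largest_working_size(lambda n: ping_ok("192.168.2.2", n))` would search against the bxe1 LAN address in about 16 pings; repeating it against an address on a vmx interface shows whether that limit follows the bxe NICs.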