VMware Workstation VMs Web Traffic Being Blocked

-

@stephenw10

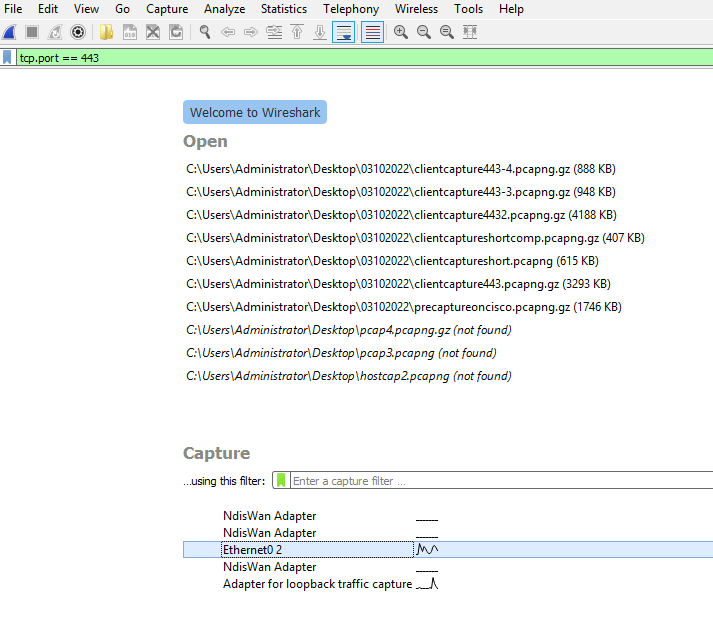

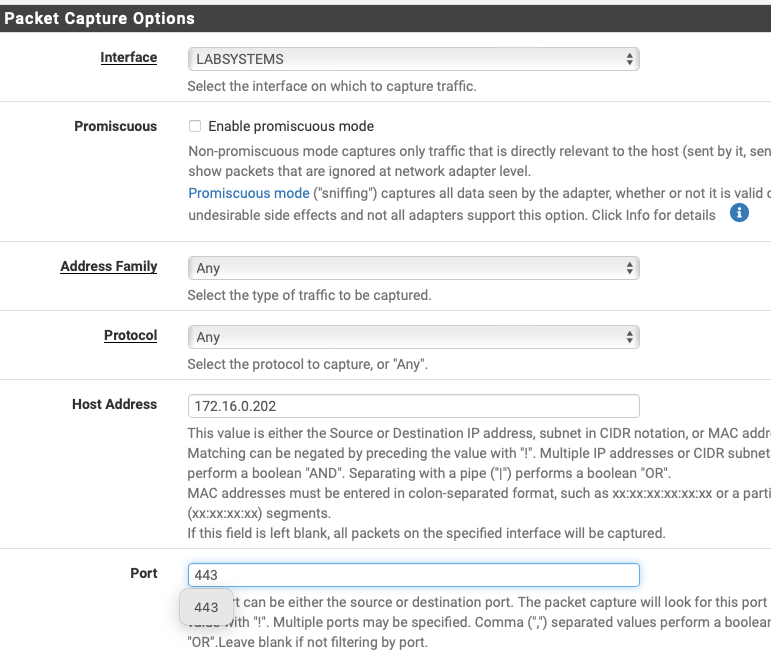

That is interesting about the packet size being on the virtual side only. Yep, they're as simultaneous as I can think to make them. The capture from pfSense will be longer, as I do these steps:

1. pfSense > Packet Capture: set the interface to labsystems (VLAN 2000, 172.16.0.0/24), host 172.16.0.202, port 443, and start the capture.
2. Jump to the VM and start Wireshark listening for TCP 443.
3. Bring up Chrome and browse to the sites that aren't playing ball.
4. Let the capture run for about 8-10 seconds, then stop it and "Save As" with compression.
5. Stop the pfSense capture.
6. Upload both to this forum.

I'd be happy to run another capture. This site has a hard 2 MB upload limit, so I was intentionally trying to keep these small. I can upload a bigger packet capture elsewhere; just let me know where.

Can you lay out for me exactly how you want me to kick off the capture, how long to run it, and what to do while it runs? I just want to make sure I meet the exact criteria you need to see.

-

Yeah, that's absolutely what's happening here. Look at the second pair of captures and filter by `ip.addr == 151.101.2.219`.

That IP sends the Server Hello in 4 TCP segments, but the client only receives the last, smallest segment.
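The same check can be scripted outside Wireshark by listing the size of every packet a given server sent, then comparing that list between the pfSense-side and VM-side captures. A minimal sketch, assuming a classic microsecond libpcap file with Ethernet framing (the kind tcpdump on pfSense typically writes); pcapng files such as test2.pcapng would need converting first, for example with `editcap -F pcap`. The function name is illustrative, not part of any tool:

```python
import socket
import struct

def ipv4_lengths_from(path, src_ip):
    """Yield (timestamp, IPv4 total length) for each packet sent by src_ip.

    Sketch only: assumes a classic microsecond libpcap file (not pcapng)
    with Ethernet link type 1, optionally carrying one 802.1Q VLAN tag.
    """
    with open(path, "rb") as f:
        data = f.read()
    # The global header magic tells us the byte order the file was written in.
    if data[:4] == b"\xd4\xc3\xb2\xa1":
        endian = "<"
    elif data[:4] == b"\xa1\xb2\xc3\xd4":
        endian = ">"
    else:
        raise ValueError("not a classic microsecond pcap file")
    (linktype,) = struct.unpack(endian + "I", data[20:24])
    if linktype != 1:
        raise ValueError(f"unsupported link type {linktype}")
    wanted = socket.inet_aton(src_ip)
    offset = 24  # skip the 24-byte global header
    while offset + 16 <= len(data):
        ts_sec, ts_usec, incl_len, _ = struct.unpack(
            endian + "IIII", data[offset:offset + 16])
        frame = data[offset + 16:offset + 16 + incl_len]
        offset += 16 + incl_len
        if len(frame) < 14:
            continue  # truncated Ethernet header
        l3 = 14
        (ethertype,) = struct.unpack("!H", frame[12:14])
        if ethertype == 0x8100 and len(frame) >= 18:  # skip an 802.1Q tag
            (ethertype,) = struct.unpack("!H", frame[16:18])
            l3 = 18
        ip = frame[l3:]
        # Keep only IPv4 packets whose source address matches.
        if ethertype != 0x0800 or len(ip) < 20 or ip[12:16] != wanted:
            continue
        (total_len,) = struct.unpack("!H", ip[2:4])
        yield ts_sec + ts_usec / 1_000_000, total_len
```

For example, iterating `ipv4_lengths_from("packetcapture-5.cap", "151.101.2.219")` would list the segment sizes pfSense saw; running it over the VM-side capture shows which of those sizes never arrived.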

Connection fails.

-

@stephenw10

So are you thinking that traffic gets out from the client to the internet server, the Server Hello is sent back, and then the larger packets aren't surviving? What do you think could cause that?

-

Can you pcap on the VMware host interface to see if it arrives there?

-

@dfinjr said in VMware Workstation VMs Web Traffic Being Blocked:

What do you think could cause that?

As Stephen says, "Can you pcap on the VMware host interface?"

Please plug the laptop directly into port 4 of the SG and start a packet capture on both the box and the laptop.

BTW: if the .pcap is too big, give us a Dropbox, MEGA, or similar link.

-

I want to make sure I capture this right, so I'll eliminate the switch and go direct from the host to the Netgate.

Start a capture on the VM targeting this:

Then also run a capture like this from pfSense:

If that is all correct, how long of a capture would you like?

Please tune this before I capture; I want to get you all what you need on the first shot.

-

FYI, I have removed the switch from the scenario, so it is now direct from the Netgate to the VM-hosting laptop.

I'm still seeing the browsing issue. I know we're about to capture that; I just wanted to let you know.

-

You should filter by the destination IP in pfSense if you can; otherwise we see all the local traffic. Also make sure you set the packet count to 1000.

On the other side, try to capture on the VMware host, not the VM. We already have caps from the VM showing the large packets failing to arrive. We need to see whether they arrive at the VM host interface.

Steve

-

@stephenw10

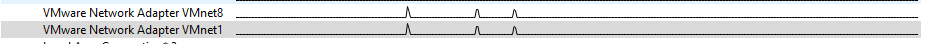

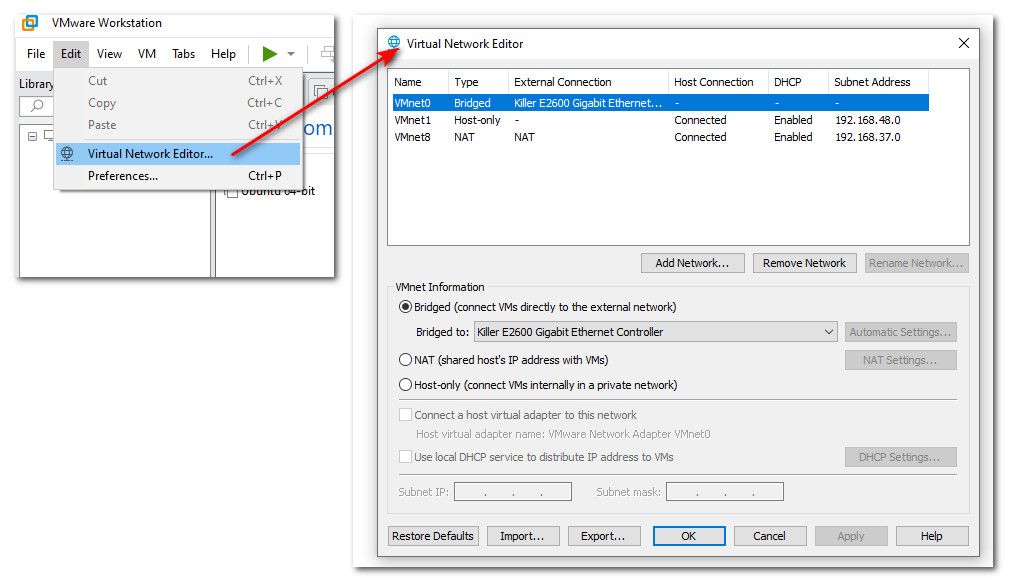

So, some difficulty here. Attempting to capture packets, I have these two virtual adapters on the hosting system:

Very interesting: sniffing these two interfaces yields nothing. I go to .202 and browse to netgate.com, and 0 packets show up on either of them.

Did you mean the literal physical adapter on the host machine?

-

@dfinjr Those are like I showed you: they are for use with NAT, or for a host-only network where the VMs can only talk to each other.

For someone who has multiple workstations running VMware Workstation, you seem not to know much about how it works ;)

I am really curious where exactly you sniffed and on what, because when I sniff on the physical interface of a host that is doing bridging, like you said you're doing, I see duplicated packets, which is common in such a setup.

https://ask.wireshark.org/question/8712/duplicate-packets-from-vmware-host/

There was actually a somewhat recent thread around here where a user was seeing the dupe packets, and it was because of his VM setup, which interface he was sniffing on, etc. But that is not actually what is going on the wire, as you can see if you sniff elsewhere on the network.

If you look at the timestamps, they are nanoseconds apart: you are seeing the packet when it enters the bridge and when it leaves the bridge. You can see the same sort of thing when sniffing on a VLAN interface on a higher-end switch: you see the packet when it enters the VLAN and when it leaves, because you're sniffing on the VLAN and not on a specific interface. The same thing happens here when you're sniffing on a bridged interface.
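One rough way to tell these bridge artifacts apart from real retransmissions is to look for byte-identical frames that reappear within a very short window. A minimal sketch; the `(timestamp, raw_bytes)` input shape and the 1 ms window are assumptions for illustration, not anything VMware or Wireshark defines (Wireshark's `editcap -d` does a similar de-duplication on whole files):

```python
def flag_bridge_duplicates(frames, window=0.001):
    """Return indices of frames that repeat an earlier frame's exact bytes
    within `window` seconds.

    `frames` is an iterable of (timestamp_seconds, raw_bytes) pairs, in
    capture order. Real TCP retransmissions usually arrive much later and
    often differ (e.g. in IP ID or timestamps), so they are not flagged.
    """
    dupes = []
    last_seen = {}  # raw bytes -> timestamp of the most recent copy
    for i, (ts, raw) in enumerate(frames):
        prev = last_seen.get(raw)
        if prev is not None and ts - prev <= window:
            dupes.append(i)
        last_seen[raw] = ts
    return dupes
```

The first frame of each pair is kept and only the near-instant copy is flagged, which matches the "enters the bridge, leaves the bridge" pattern described above.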

-

@dfinjr said in VMware Workstation VMs Web Traffic Being Blocked:

Did you mean the literal physical adapter on the host machine?

Yes, I would start there. Make sure the replies from the external hosts are making it that far first. Since the only change was swapping the Cisco for pfSense, something must be different, though it's hard to see what!

-

@stephenw10

Sorry, got held up with work; attempting the capture pieces now.

-

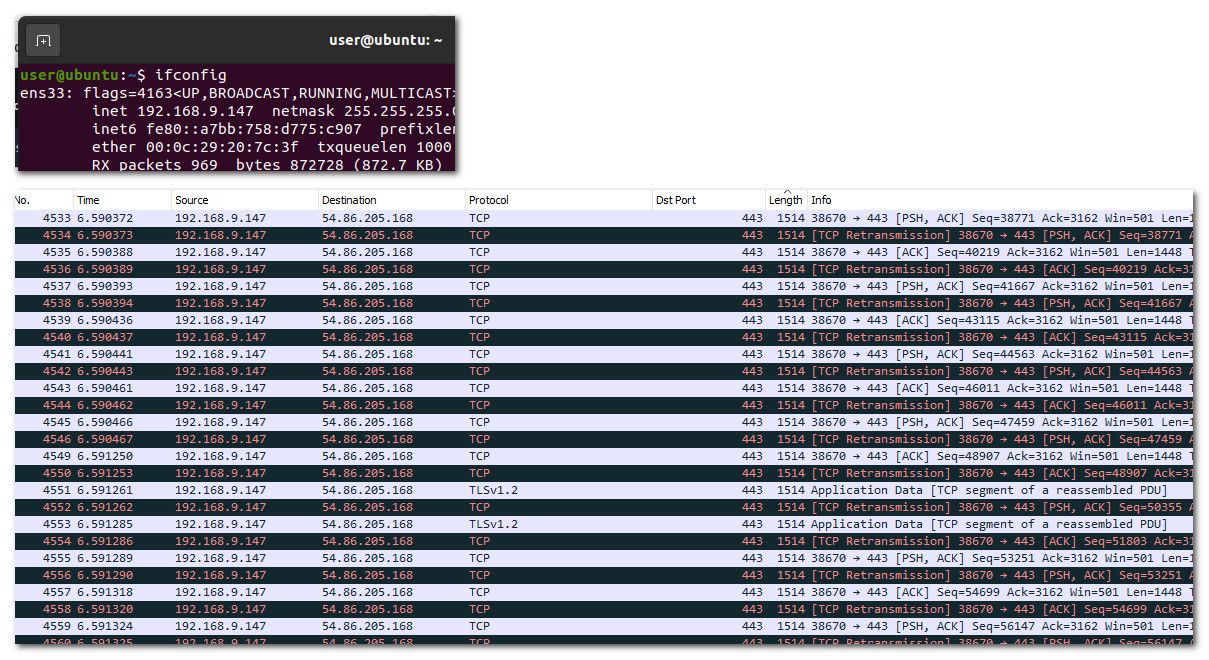

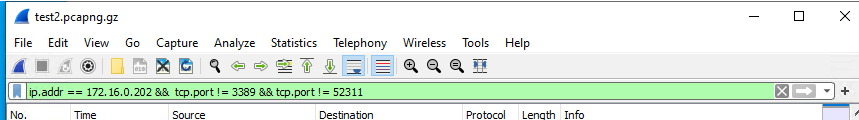

Apologies to everyone that this took me so long. Some work stuff hit me between the eyes, and beyond that I wanted to get a good, clean capture, filtering out most of the noise from ports that don't matter here. I think I have a good capture. There are 2 files:

- test2 was captured on the VM-hosting system, listening only for the IP address 172.16.0.202 and excluding anything on ports 3389 and 52311 (the BigFix port).
- packetcapture-5 is from pfSense, listening on the interface where the system resides, again filtering out 3389 and 52311.

I haven't analyzed these myself yet, but I think this is the most solid capture I've taken so far. With the filters I was able to capture for far longer: I captured until the browser on the VM gave up and returned "ERR_TIMED_OUT". Please let me know what you think.

packetcapture-5.cap test2.pcapng.gz

-

Think I finally got a good capture. I've added it to the forum.

I'm doing my best with the VMware Workstation software. It has only ever been a means to an end for me, and I don't have any real professional experience with it. It hosts my VMs, but I am far from an expert. Any educational points you can throw my way are of course appreciated.

-

Ah, it looks like on the test2 cap, on the VMware host, you filtered by 172.16.0.202 as the destination, so we don't see any of the traffic 172.16.0.202 is sending.
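In capture-filter (BPF) syntax, as used by tcpdump and Wireshark capture filters, the difference is a single qualifier: `dst host X` matches only traffic addressed to X, while `host X` matches both directions. A small sketch that builds the filter for the capture described earlier (host 172.16.0.202, excluding ports 3389 and 52311); the function name is hypothetical:

```python
def build_capture_filter(host, excluded_ports=(), direction=None):
    """Build a BPF capture-filter string.

    direction=None matches traffic both to and from `host`;
    'src' or 'dst' restricts it to one direction.
    """
    if direction not in (None, "src", "dst"):
        raise ValueError("direction must be None, 'src' or 'dst'")
    qualifier = f"{direction} host" if direction else "host"
    parts = [f"{qualifier} {host}"]
    parts += [f"not port {port}" for port in excluded_ports]
    return " and ".join(parts)
```

`build_capture_filter("172.16.0.202", (3389, 52311))` yields `host 172.16.0.202 and not port 3389 and not port 52311`, which keeps what .202 sends as well as what it receives.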

But that does mean we can see the large packets leaving the pfSense LAN and arriving at the VMware host. If you filter by, for example, `ip.addr == 151.101.2.219` again, you can see that. So, given that those packets do not arrive at the actual VM, the virtual networking must be dropping them.

It looks like none of the traffic leaving pfSense toward the VM host is flagged do-not-fragment, whereas previously it was. You might want to uncheck that setting in pfSense if it's still enabled, because it should not be required.
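The do-not-fragment observation can also be checked programmatically: the DF flag sits in bytes 6-7 of the IPv4 header, together with the More Fragments flag and the 13-bit fragment offset (counted in 8-byte units). A minimal sketch that decodes that word from a raw header; the function name is illustrative:

```python
import struct

def ipv4_fragment_flags(ip_header: bytes) -> dict:
    """Decode the flags/fragment-offset word (bytes 6-7) of an IPv4 header."""
    if len(ip_header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes")
    (word,) = struct.unpack("!H", ip_header[6:8])
    return {
        "df": bool(word & 0x4000),      # Don't Fragment
        "mf": bool(word & 0x2000),      # More Fragments
        "offset": (word & 0x1FFF) * 8,  # fragment offset, in bytes
    }
```

A packet with `df` set that is too big for the next hop must be dropped (with an ICMP "fragmentation needed" sent back) rather than fragmented, which is why the flag matters for this symptom.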

Steve

-

@stephenw10

Thank you for the information, Steve. I'll look at what I can change in the VMware configuration to allow the traffic to flow. I'm not sure what to do there, but at least I can focus on it to see if I can get it going. The question is: knowing that this is how the traffic was flowing from the ASA before, do you think the Cisco appliance was shaping the traffic, or doing something else that let it flow?

-

@stephenw10

Also, this is the filter I used in Wireshark. I did have the .202 IP, but it was destination only:

-

If it's an MTU issue, try testing that with large pings. You can just ping out from a VM to pfSense with do-not-fragment set and see at what size it fails, or whether it fails at all.
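The large-ping test amounts to a binary search on the ICMP payload size with DF set. The payload that exactly fills a packet is the MTU minus 28 bytes (20 for the IPv4 header, 8 for the ICMP header), so a clean 1500-byte path passes 1472. A minimal sketch, assuming Windows (`ping -f -l`) or Linux iputils (`ping -M do -s`) syntax; the helper names are hypothetical:

```python
import platform
import subprocess

IPV4_HEADER = 20  # bytes, no IP options
ICMP_HEADER = 8   # bytes, ICMP echo header

def payload_for_mtu(mtu):
    """ICMP payload size that fills exactly one packet of the given MTU."""
    return mtu - IPV4_HEADER - ICMP_HEADER

def ping_df(host, payload):
    """Send one echo request with Don't Fragment set; True if ping exits 0.

    Exit status is only a rough signal (Windows ping can exit 0 on some
    error replies), so check the printed output when in doubt.
    """
    if platform.system() == "Windows":
        cmd = ["ping", "-n", "1", "-f", "-l", str(payload), host]
    else:  # Linux iputils ping; macOS/BSD use different flags
        cmd = ["ping", "-c", "1", "-M", "do", "-s", str(payload), host]
    return subprocess.run(cmd, capture_output=True).returncode == 0

def largest_payload(probe, low=0, high=payload_for_mtu(1500)):
    """Binary-search the largest payload size for which probe(size) is True."""
    if not probe(low):
        return None  # even the smallest probe failed: not an MTU problem
    while low < high:
        mid = (low + high + 1) // 2  # round up so the range always shrinks
        if probe(mid):
            low = mid
        else:
            high = mid - 1
    return low
```

Usage would be something like `largest_payload(lambda n: ping_df("172.16.0.1", n))`, where 172.16.0.1 is a placeholder for the pfSense address on that VLAN; adding 28 to the result gives the effective path MTU.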

It seems like the Cisco was reducing the path MTU somehow. Maybe it was setting the MSS? That might show in the cap we have from when it was in place...
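Why an MSS setting could hide a path-MTU problem, as arithmetic: each TCP endpoint advertises its maximum segment size in the SYN, and a device doing MSS clamping rewrites that value downward so the server never sends segments larger than the path can carry. Whether the ASA was actually doing this is unconfirmed; this is a sketch of the technique only, and the 1400-byte MTU below is a made-up example:

```python
IPV4_HEADER = 20  # bytes, no IP options
TCP_HEADER = 20   # bytes, no TCP options

def mss_for_mtu(mtu):
    """Largest TCP payload per segment that fits one IPv4 packet of this MTU."""
    return mtu - IPV4_HEADER - TCP_HEADER

def clamp_mss(announced_mss, path_mtu):
    """Mimic MSS clamping on a SYN: never advertise more than the path carries."""
    return min(announced_mss, mss_for_mtu(path_mtu))
```

With the usual 1460-byte MSS, a full Server Hello segment is a 1500-byte IP packet; for a hypothetical 1400-byte path, `clamp_mss(1460, 1400)` gives 1360, so every segment would fit without fragmentation.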

-

Or maybe we don't have a pcap taken when the Cisco was routing?

-

@stephenw10

packetcapture-6.cap VMhostSpeedTest.pcapng VMSpeedTest.pcapng

Does this by chance show anything different? You're much faster at the analysis than I am. What I did was start a generic capture on the VLAN in pfSense, and run captures on the VM host and on the VM itself, all focused on speedtest.net.

I started with a ping and then attempted to browse via IP over 443.