Slow DNS after 22.05

-

@steveits I find this problem really hard to troubleshoot, as it affects only certain domains, which fail to resolve at sporadic times. Restarting the unbound service solves the problem for a while, but that's not sustainable: yesterday alone I had to restart unbound 4 times.

-

@bmeeks said in Slow DNS after 22.05:

One thing the Netgate team could consider is bringing the current 1.16.2 version of unbound into their 22.05 FreeBSD Ports tree and thus making it available for manual installation (or upgrade) for those users impacted by the bug. Or put the older 1.13.2 version into the pfSense Plus 22.05 package repo. That would be a more complicated "update" for users, though, as they would likely need to manually remove the unbound package and then add it back as pkg would not normally see 1.13.2 as an "upgrade" for 1.15.0.

This would be great: people who don't have any problems could stay on the default unbound version, and those with problems could manually install the latest.

Unfortunately I live in Japan, where lots of users use yahoo.co.jp. From my observations it is the worst offender, breaking 1 to 5 times a day, and other co.jp sites also break far more often than .com, .net, etc. Yesterday amazon.co.jp broke while amazon.com worked perfectly. Restarting unbound every day is becoming a chore. Waiting 2-3 months for the 22.11 release would be hell.

-
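One way to catch these sporadic failures without watching a browser is to probe a fixed list of names on a schedule and record which ones fail. The following is a minimal Python sketch, not anything shipped with pfSense. The function names `resolve_with_system` and `probe` are made up for illustration, and it assumes the machine running it uses the firewall as its DNS server.

```python
import socket
import time
from typing import Callable, Dict, Iterable, List, Tuple


def resolve_with_system(name: str) -> List[str]:
    """Resolve a name through the OS resolver and return its addresses."""
    infos = socket.getaddrinfo(name, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the IP.
    return sorted({info[4][0] for info in infos})


def probe(
    names: Iterable[str],
    resolver: Callable[[str], List[str]] = resolve_with_system,
) -> Dict[str, Tuple[bool, float]]:
    """Try each name once; return name -> (resolved?, seconds taken)."""
    results = {}
    for name in names:
        start = time.monotonic()
        try:
            ok = bool(resolver(name))
        except OSError:  # socket.gaierror (lookup failure) is a subclass of OSError
            ok = False
        results[name] = (ok, time.monotonic() - start)
    return results
```

Calling `probe(["yahoo.co.jp", "amazon.co.jp", "amazon.com"])` every minute or so and logging the entries whose first element is `False` would show whether the failures really cluster on co.jp names, and at what times. Local caching on the client can hide some failures, so the results are only indicative.

-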

I have a major issue with unbound on the latest stable version of pfSense Plus:

DNS lookups are slow because unbound (the DNS Resolver) frequently restarts

I do not know what to do, as system logs show no useful information

I'm on unbound 1.15.0.

I see strange hotplug events regarding igc0 in the General tab simultaneously, which may cause new dhcp lease and unbound restart.

Should I open a ticket? Is it yet another Intel NIC driver / hardware issue?

(SG-6100)

-

@yellowrain EDIT : I do not use the igc0 port anymore.

Just realized ix0 is smoother. Too bad I did not do that earlier. -

@yellowrain said in Slow DNS after 22.05:

I see strange hotplug events regarding igc0 in the General tab simultaneously, which may cause new dhcp lease and unbound restart.

Is igc0 your WAN?

That would be a very valid reason for unbound, or actually any process that uses interfaces, to restart.

3 things to test: the cable, the interface on the other side, and the igc0 port on your 6100.

The cable test is easy ;)

You could use another WAN interface on your 6100, it has plenty of interfaces ;)

Testing the other side: use another NIC if the upstream device has more than one, or put a switch between your WAN (igc0) and your upstream device. This will only hide the problem; you still have to check why the upstream device pulls its interface down. If this is a modem-type device, it does so because your uplink went down.

-

@gertjan my config at the time was igc0 for LAN and ix2/ix3 for WAN.

I had time to fully investigate all the logs this summer. The interface on the other side has been rock stable (trusty business-grade Zyxel XG1930-10 switches).

In my experience, 2.5 Gbps on igc0 is still not as stable as I would wish: the connection is lost even at max power, green ethernet does not work, and the short cable setting is unreliable. That makes unbound restart, and the restart process takes time.

The cable may be one reason, you're right. I had Cat 6A, though even another fully compatible 10 Gbps cable made igc0 exhibit the same symptoms (maybe less often, but I rushed those tests...).

So in the end, for today, I use only ix0 for LAN and ix2/ix3 for WAN. Those interfaces are based on server-grade Intel chipsets. The other interfaces I have in my homelab are almost all X550 (NAS, server), and that works well. The only exception is an Aquantia Thunderbolt 3 interface for my laptop, which is also great for this type of device.

I also fully investigated the wireless part, thanks to OpenWrt on a WRT3200ACM (6 GHz wifi routers are still not widely available here in Europe). There I found some IoT smart plugs disrupting the 2.4 GHz network, which other devices sometimes land on. I had to add a separate 2.4 GHz radio to isolate these IoT devices. 5 GHz was already optimized, but the latest OpenWrt 22.03 build brought long-uptime stability (or at least uncluttered the logs).

That way, conference calls over Teams, VoWiFi calls and DNS resolution are now stable.

-

I installed BIND on my 3100 given the issues I'm still having with Unbound, expecting it to be able to behave as a resolver on my network.

However, devices using DHCP are issued with the IP addresses of DNS servers set in the "general settings" rather than the IP address of the 3100 itself as happens when you use the native DNS Resolver (Unbound). This means any locally set DNS records (and I only have one that I use) are ignored as all devices are going out to Google's DNS.

Appreciate this might be considered slightly off-topic, but based on my reading, BIND should offer a viable alternative to Unbound as a resolver.

-

@istacey said in Slow DNS after 22.05:

BIND should offer a viable alternative to Unbound as a resolver.

Like unbound, bind doesn't need "8.8.8.8". Both are resolvers.

8.8.8.8 is a DNS resolver that you can forward to. If you want to deal with 8.8.8.8 because you have to give them your private DNS requests, use the forwarder (dnsmasq); you won't need any local resolver.

-

@gertjan I don't want 8.8.8.8 issued via DHCP to devices, but it is, and I can't see how/where this is set. Switching back to Unbound goes back to what I'd expected/wanted (that is, DHCP issuing 192.168.1.2 as the DNS server).

I can't see how I stop a BIND setup doing this.

-

@istacey said in Slow DNS after 22.05:

I can't see how I stop a BIND setup doing this.

Bind has nothing to do with the DNS server IP sent by the DHCP server to a client that requests a lease.

See for example here: a DHCP request and answer:

If your LAN clients receive "8.8.8.8" as a DNS server IP during the lease negotiation, check your DHCP server settings.

The DHCP server doesn't know what '8.8.8.8' is unless you've instructed it.

-

@gertjan Yes, my fault! I'd left DNS servers configured in the General Settings. Removed these now and DHCP is issuing my firewall address as DNS... but now I need to work out how to make BIND work properly because that's left nothing resolving!

-

@istacey Have you tried the DNS Forwarder feature instead of the Resolver?

-

@steveits I'm using the forwarder only because the Unbound resolver is unreliable. But the forwarder doesn't give me the things I need so I wanted something like the resolver.

-

@istacey said in Slow DNS after 22.05:

But the forwarder doesn't give me the things I need

What is that exactly?

-

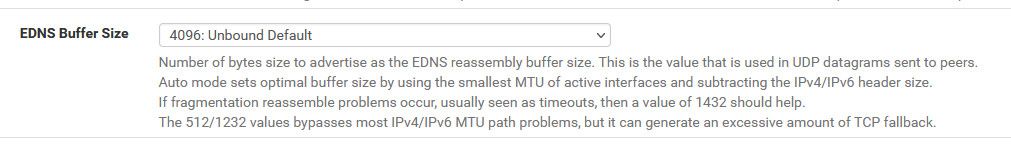

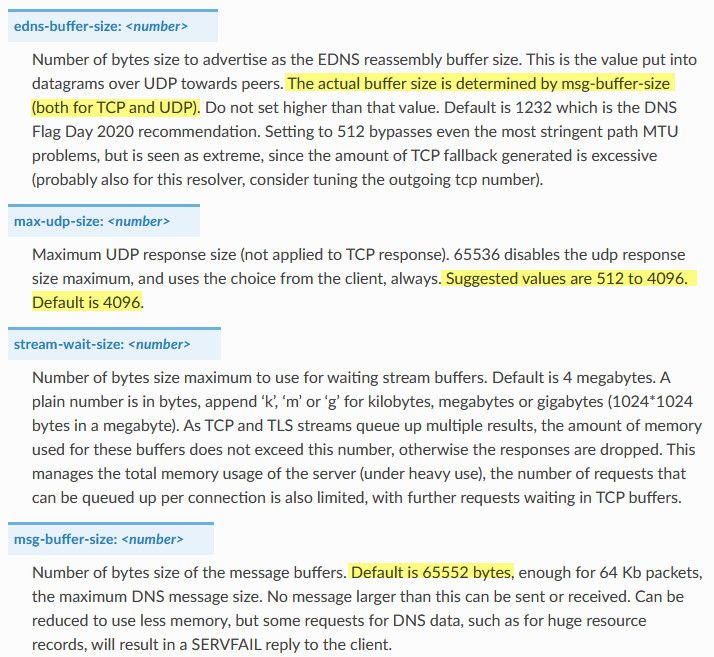

The problem of my unbound DNS resolver randomly failing to resolve certain domains seems to be reduced or even solved by setting the Unbound EDNS Buffer Size to "4096 - Unbound default" in the advanced settings.

It needs more testing, but currently it looks promising.

-

@vbjp what was it before? 4096 is the unbound default, is it not? That is what mine says.

And if I look at my 2.6 VM, which I would not have touched, it shows automatic based on MTU. I probably edited my main system a long time ago to 4096, which per the docs is the default max UDP size.

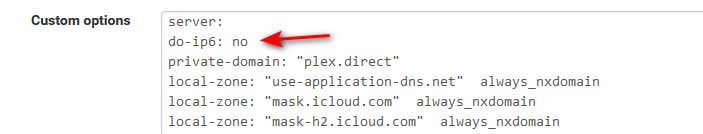

If that is a problem, then it would explain why I have never seen any of the issues others have reported, along with my setting of not doing IPv6 for DNS, which I think also came up as being problematic, for this version of unbound at least.

-

@johnpoz

I never changed that setting, and 2.6 and 22.01 worked without any problems. The problem started with the 22.05 update.

I did not check what it was in previous versions, but in 22.05 that setting was set to "Automatic value based on active interface MTUs".

I think it caused all the intermittent DNS resolution failures in 22.05. Mostly it failed to resolve co.jp domains, while I rarely saw problems with .com domains: I haven't seen amazon.com fail, while amazon.co.jp failed at least a few times a day.

I also had IPv6 disabled in custom options, as per some suggestions in this thread, but it didn't solve my problem.

-

@vbjp if I had to guess, it is something about fallback to TCP in this version of unbound, and if your UDP size is set lower it's quite possible you are switching over to TCP more often.

IPv6 is quite likely to switch over to TCP more often, especially if the UDP size is derived from a lower MTU, and IPv6 does normally have a lower MTU of around 1280 or so.

It will be good when the unbound version updates on pfsense to see if the issues people are seeing go away.
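The UDP size and TCP fallback discussed above can be made concrete. In EDNS0 (RFC 6891) a client advertises the largest UDP response it accepts in the CLASS field of an OPT record. A server whose answer does not fit sets the TC (truncated) bit, and the client retries over TCP. A rough Python sketch of both pieces follows. It is not how unbound implements this, and `build_query` and `is_truncated` are names invented for this example.

```python
import struct


def build_query(name: str, udp_payload_size: int = 4096, query_id: int = 0x1234) -> bytes:
    """Build a DNS A query carrying an EDNS0 OPT record."""
    # Header: ID, flags (RD set), QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=1 (the OPT record)
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 1)
    # QNAME: each label prefixed by its length, terminated by a zero byte
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    question = qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN
    # OPT pseudo-record: root name, TYPE=41, CLASS=advertised UDP size, TTL=0, RDLEN=0
    opt = b"\x00" + struct.pack("!HHIH", 41, udp_payload_size, 0, 0)
    return header + question + opt


def is_truncated(response: bytes) -> bool:
    """True when the TC bit (0x0200) is set in the response header flags."""
    (flags,) = struct.unpack("!H", response[2:4])
    return bool(flags & 0x0200)
```

Sending `build_query("yahoo.co.jp", udp_payload_size=1232)` and then the same query with 4096 to an upstream server over UDP, and comparing `is_truncated()` on the replies, would be one way to check whether a smaller buffer size pushes lookups for a given domain onto TCP.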