Weird Routing behaviour that I may need to work around [solved]

-

@justconfused

A few general things to look at:

- Everything on 192.168.38.0 should be able to reach each other without the firewall knowing about it. Are the firewall and switch separate? Can you unplug the pfSense from the switch and have the remaining devices ping/traceroute each other? How are the other devices configured, DHCP, static? Is the switch managed, does it have any VLANs, port isolation, or routed ports? Ping/traceroute from the TN Scale hosts, if possible.

- The first hop from the container is 172.16.0.1. Thus the container must have an address 172.16.0.X, right? Is that address visible to the rest of the network, or is TN performing NAT? If so, is that desired? Is there a way to look at TN's routing table (perhaps netstat -nr)?

- I'm assuming you haven't subnetted 192.168.38.0? Are all the machines using the same netmask 255.255.255.0?

- Does the traceroute change when you have the VPN on or off?

-

@mariatech - some good questions

"Everything on 192.168.38.0 should be able to reach each other without the firewall knowing about it." - I agree - and this works normally for everything else

"Are the firewall and switch separate?" - Yes - everything here is physical (apart from the containers). There is VMWare - but not involved.

"Can you unplug the pfSense from the switch and have the remaining devices ping/traceroute each other?" - good logical question - I will test - see below

"How are the other devices configured, DHCP, static?" - All these physical devices are static. There is DHCP, but not involved

"Is the switch managed, does it have any VLANs, port isolation, or routed ports?" - all switches are manageable (but I do very little with them). There are VLANs (iSCSI is on its own VLAN & switch) - but none are involved here. No port isolation or routed ports. The reason for multiple switches is that some of this kit is in the garage and the firewall is in the house. Some kit is 10Gb, some kit is 1Gb. There is both fibre and copper between the house and the garage

"Ping/traceroute from the TN Scale hosts, if possible." - normal and correct behaviour - goes straight to the desired host and not via the firewall.

"The first hop from the container is 172.16.0.1. Thus the container must have an address 172.16.0.X, right?" - Yes

"Is that address visible to the rest of the network, or is TN performing NAT?" - TN is performing NAT - at least that was my understanding

"If so, is that desired?" - there is no option for anything else. This is not portainer (perhaps unfortunately)

"Is there a way to look at TN's routing table (perhaps netstat -nr)?" - Yes - nothing unusual here

    root@NewNAS[~]# netstat -nr
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags   MSS Window  irtt Iface
    0.0.0.0         192.168.38.15   0.0.0.0         UG        0 0          0 enp7s0f4
    172.16.0.0      0.0.0.0         255.255.0.0     U         0 0          0 kube-bridge
    172.22.16.0     0.0.0.0         255.255.255.0   U         0 0          0 enp7s0f4d1
    192.168.38.0    0.0.0.0         255.255.255.0   U         0 0          0 enp7s0f4
    root@NewNAS[~]#

172.22.16.0 = iSCSI network

172.16.0.0 is reserved for all things kubernetes / containery

"I'm assuming you haven't subnetted 192.168.38.0?" - Correct. No weird subnetting. I am lazy and don't like to work that out if I can avoid it, and I have no need for it

"Are all the machines using the same netmask 255.255.255.0?" - Yes

"Does the traceroute change when you have the VPN on or off?" - Yes. I am assuming by VPN On/Off that you mean when I toggle the gateway - see below

Longer Answers below

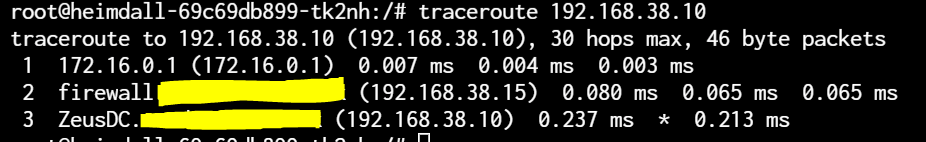

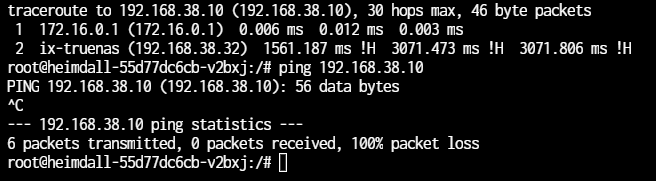

Traceroute to 192.168.38.10 with Gateway On

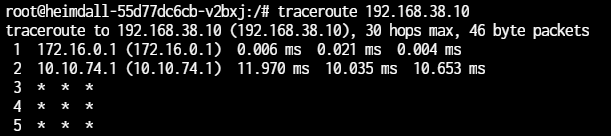

Traceroute to 192.168.38.10 with Gateway disabled

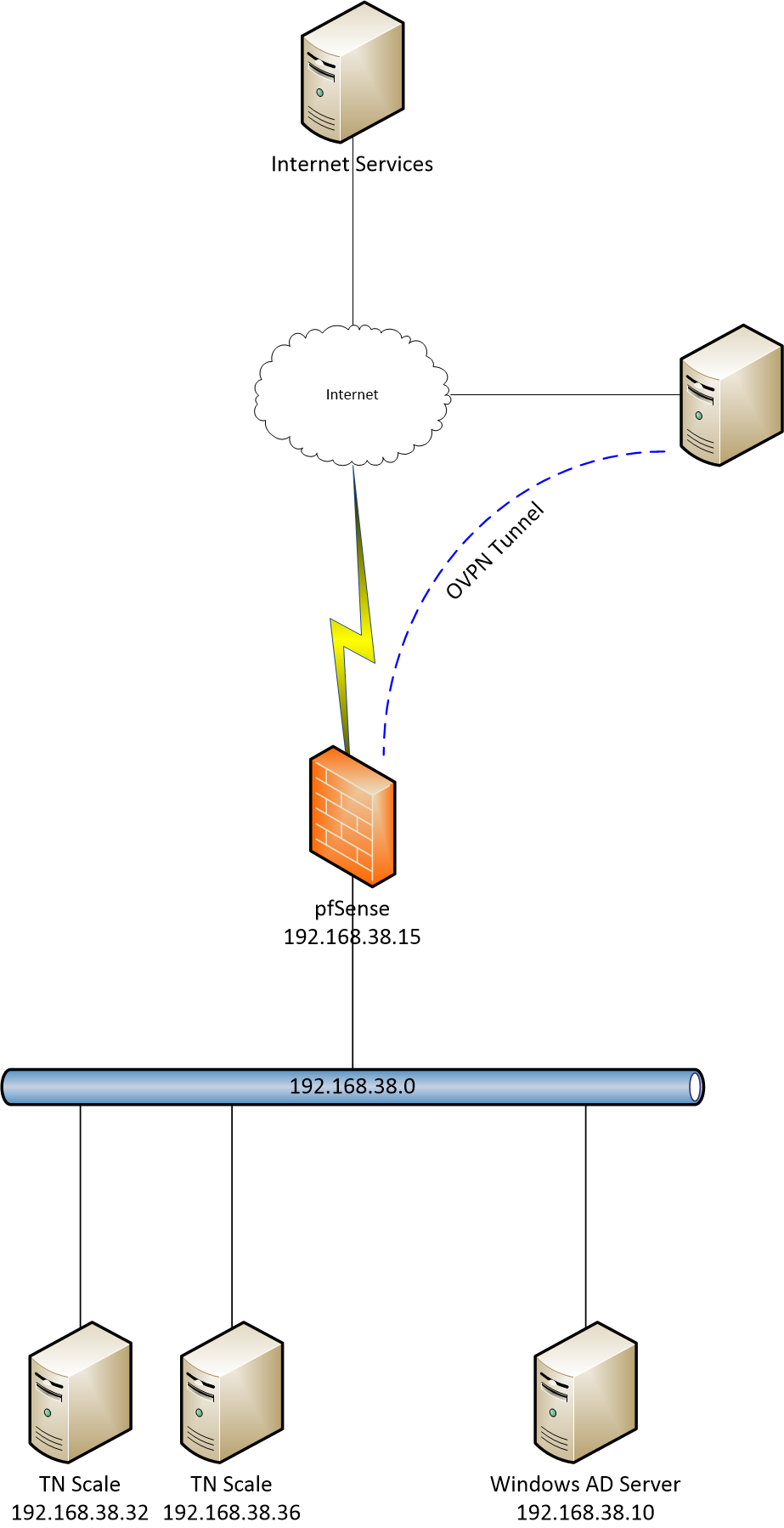

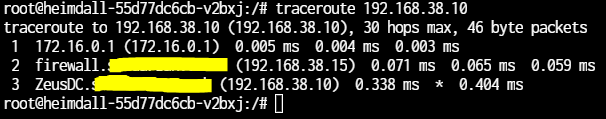

Testing removing the firewall (physical unplug of internal NIC)

Traceroute (and ping) to 192.168.38.10

Heimdall container is 172.16.3.165 with a 255.255.0.0 subnet. Gateway is 172.16.0.1
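To make the container's side of this concrete, here is a minimal Python sketch using the standard `ipaddress` module and only the three addresses quoted in this thread. It just checks which addresses are on-link from the container's point of view; it does not model anything TrueNAS or kubernetes does after the packet reaches the bridge.

```python
import ipaddress

# Values quoted in the post: container address, netmask and gateway.
container = ipaddress.ip_interface("172.16.3.165/255.255.0.0")
gateway = ipaddress.ip_address("172.16.0.1")
target = ipaddress.ip_address("192.168.38.10")

print(container.network)             # 172.16.0.0/16
print(gateway in container.network)  # True: the gateway is on-link for the container
print(target in container.network)   # False: the container must hand the packet to its gateway
```

So a first hop of 172.16.0.1 is expected in every traceroute from the container. The open question is what the host does with the packet after that hop.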

My current conclusion is still the same as in my OP. The routing of the container traffic is / must be a bug in TrueNAS, as it's highly suboptimal and doubles traffic to the firewall that doesn't need to be there. This bug is shown up by the PBR in pfSense grabbing the traffic (correctly, really) and pushing it into the VPN
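For comparison, this is roughly what the host's main routing table says should happen. The sketch below is a plain longest-prefix-match lookup in Python over the four routes from the `netstat -nr` output earlier in this post. The function names `lookup` and `next_hop` are made up for illustration, and the sketch deliberately ignores NAT and any policy rules the kubernetes layer may add, which is exactly where the suspected bug would live.

```python
import ipaddress

# Routes copied from the netstat -nr output: (destination, genmask, gateway, interface).
ROUTES = [
    ("0.0.0.0", "0.0.0.0", "192.168.38.15", "enp7s0f4"),
    ("172.16.0.0", "255.255.0.0", "0.0.0.0", "kube-bridge"),
    ("172.22.16.0", "255.255.255.0", "0.0.0.0", "enp7s0f4d1"),
    ("192.168.38.0", "255.255.255.0", "0.0.0.0", "enp7s0f4"),
]


def to_network(dest, mask):
    """Build a network object from a destination and a dotted netmask."""
    prefix_len = bin(int(ipaddress.IPv4Address(mask))).count("1")
    return ipaddress.ip_network(f"{dest}/{prefix_len}")


def lookup(dst, routes=ROUTES):
    """Return (network, gateway, iface) of the most specific route matching dst."""
    addr = ipaddress.ip_address(dst)
    best = None
    for dest, mask, gateway, iface in routes:
        net = to_network(dest, mask)
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway, iface)
    return best


def next_hop(dst):
    """On-link routes (gateway 0.0.0.0) deliver straight to dst; others use the gateway."""
    _net, gateway, iface = lookup(dst)
    return (dst if gateway == "0.0.0.0" else gateway), iface


print(next_hop("192.168.38.10"))  # ('192.168.38.10', 'enp7s0f4')
print(next_hop("8.8.8.8"))        # ('192.168.38.15', 'enp7s0f4')
```

By this table alone, a packet for 192.168.38.10 should be delivered directly on enp7s0f4 and never be handed to 192.168.38.15.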

-

@justconfused said in Weird Routing behaviour that I may need to work around:

However the bigger question is why is all the local traffic being sent to the firewall rather than being dumped onto the LAN properly - which I feel must be a bug in TrueNAS

Yeah, your 172.16.0.1 is the container network, but that should be NATed to your docker host's IP..

So I run a heimdall container, and if I grab a console on it..

    root@heimdall:/# ifconfig
    eth0      Link encap:Ethernet  HWaddr 02:42:AC:11:00:02
              inet addr:172.17.0.2  Bcast:172.17.255.255  Mask:255.255.0.0
              UP BROADCAST RUNNING MULTICAST  MTU:1500  Metric:1
              RX packets:22 errors:0 dropped:0 overruns:0 frame:0
              TX packets:3 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:1916 (1.8 KiB)  TX bytes:238 (238.0 B)

    lo        Link encap:Local Loopback
              inet addr:127.0.0.1  Mask:255.0.0.0
              UP LOOPBACK RUNNING  MTU:65536  Metric:1
              RX packets:0 errors:0 dropped:0 overruns:0 frame:0
              TX packets:0 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:1
              RX bytes:0 (0.0 B)  TX bytes:0 (0.0 B)

This is on a host 192.168.9.10. If I traceroute to something on the 192.168.9/24 network, I do show hitting 172.17.0.1, but then it goes directly to the 192.168.9.100 address, for example..

    traceroute to 192.168.9.100 (192.168.9.100), 30 hops max, 46 byte packets
     1  172.17.0.1 (172.17.0.1)  0.008 ms  0.007 ms  0.004 ms
     2  i9-win.local.lan (192.168.9.100)  0.505 ms  *  0.337 ms

If I ping the pfsense box on 192.168.9 from the heimdall container, it looks like it's coming from the docker host 192.168.9.10

If I had to guess (and I am no docker guru, I just run them on my Synology NAS), this seems to be something odd in your docker networking..

-

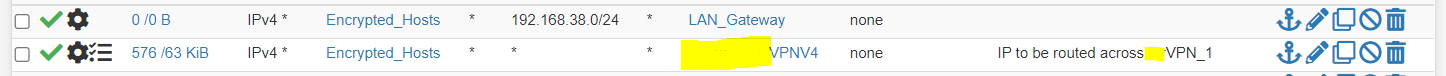

So, how can I use pfSense to work around this issue? (if I can)

I have discovered that if I disable the policy based routing down the tunnel then the router diverts the traffic back where it needs to go correctly, although it shouldn't need to

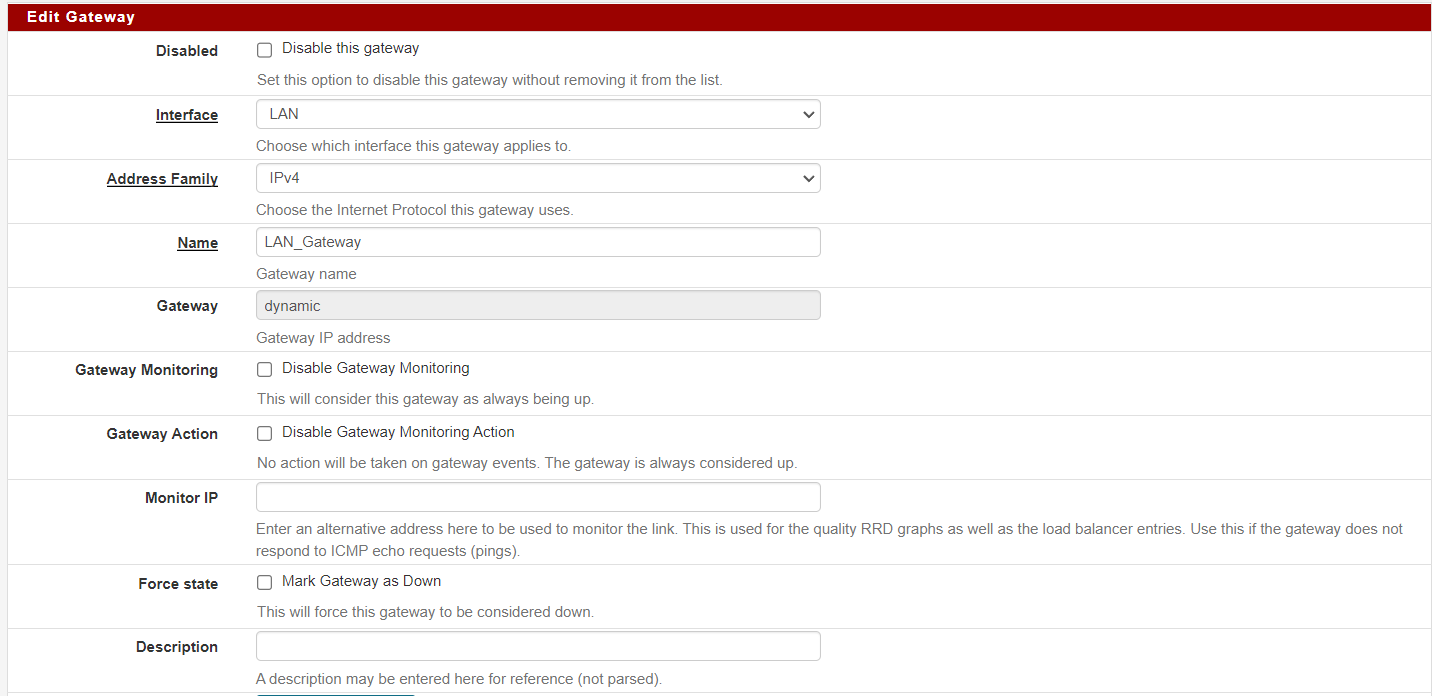

Try 1:

I have tried adding a LAN Gateway (sort of a dummy really)

and then adding a new PBR in front of the rule causing the issue

But this doesn't work - I suspect because the LAN_Gateway isn't really a gateway as it has no address

Try 2:

Basically the same but point the gateway at 192.168.38.11 (a different AD Server)

I wasn't expecting this to work - and I cannot say that I am surprised that it doesn't

That unfortunately tapped me out for ideas at the moment (barring a major network redesign). Maybe put another firewall/router in the middle with no PBR and shove my real firewall out 1 network step. I have the kit available (the good news), could just use pfSense with the FW turned off.

-

@justconfused not sure why you think this has anything to do with pfsense.

I think maybe you're coming at it from the wrong direction. Because you're having problems accessing it from your network?

With your example of heimdall - this is accessed from the network, it doesn't normally access stuff directly - does it? Mine sure isn't for anything I am doing with it. Well, maybe it does, since it shows things like how much my overseerr is processing, and whether any streams are running in my tautulli. But those are just set up in heimdall with the fqdn of those services. I have never looked into exactly how it gets that info - whether it initiates the connection itself, or just tells my browser how to get that info.

So for example I access it from my networks via the docker host's fqdn on port 80

http://nas.local.lan:8056/

This presents me with the interface..

Are you trying to run something on this heimdall docker that needs to access something on its own? A connection it started? Is something not specifically working?

Again, not a docker guru - but if the docker creates a connection to anything outside the host, be it the host's network or some other network.. that should be NATed to the host's IP..

For example if I get a ping going from heimdall docker to something on the hosts network 192.168.9 pfsense never even sees that traffic.. Because the docker host sends it on to my host on the 192.168.9.100 directly..

You have something odd in your docker setup in my opinion. And zero to do with pfsense.. Sure, if pfsense gets sent traffic it will try and route it.. But your host on your network X should never send traffic to pfsense if some docker is trying to talk to some IP the docker host is directly connected to.

If you're trying to get to something from your docker on a network other than the docker host's network, then sure, it would be sent to pfsense, from the docker host IP.. And pfsense would route it as best it can. If you have a policy route sending traffic somewhere, then it would go there vs just some other network on pfsense.

You need to allow normal routing on pfsense. This would be a policy route bypass

https://docs.netgate.com/pfsense/en/latest/multiwan/policy-route.html#bypassing-policy-routing

But from your drawing, the 192.168.38 devices talking to each other should never send any traffic to pfsense.. Devices on the same network talking to each other have nothing to do with pfsense - the gateway to get off said network.
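The bypass idea from the linked docs relies on pfSense evaluating an interface's rules top-down, with the first match winning. A minimal Python sketch of that ordering follows. The rule set, the descriptions and the `VPN_GW` label are hypothetical, not taken from anyone's actual config, and real rules match on far more than the destination.

```python
import ipaddress
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rule:
    description: str
    destination: str          # CIDR the rule matches on
    gateway: Optional[str]    # None means "use the normal routing table"


# Hypothetical LAN rule set: the bypass rule sits above the policy-routing rule.
RULES = [
    Rule("bypass policy routing for local networks", "192.168.38.0/24", None),
    Rule("policy route everything else via VPN", "0.0.0.0/0", "VPN_GW"),
]


def route_decision(dst: str, rules=RULES) -> str:
    """Return how a packet to dst is forwarded; the first matching rule wins."""
    addr = ipaddress.ip_address(dst)
    for rule in rules:
        if addr in ipaddress.ip_network(rule.destination):
            return rule.gateway or "routing table"
    return "blocked"  # nothing matched, so the default deny applies


print(route_decision("192.168.38.10"))  # routing table
print(route_decision("1.1.1.1"))        # VPN_GW
```

If the two rules are swapped, the catch-all policy rule matches first and local destinations are pushed down the VPN as well, which is the behaviour described in this thread.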

-

@johnpoz

I don't think this is pfSense's fault at all. pfSense is doing nothing wrong. Also don't get hung up on Heimdall, it's just an available container with nslookup, ping and traceroute available inside the container (most don't have that much)

The fault IS with the kubernetes setup - but that isn't very configurable by the user (aka Me) and whilst I have logged a ticket with IX I suspect this will take a while.

I am just hoping I can bodge around the issue with pfsense (which is configurable) until TN gets fixed.

I shall give your idea a try. Bypassing Policy Routing

And it works - it was what I was trying to achieve - but:

- Simpler

- Works - at least in initial testing

Thank you

-

@justconfused I agree. The only way pfSense could get hold of that traffic is by poisoning Scale's ARP cache, or MAC flooding the switch, and by physically unplugging it we have eliminated even that! A packet with 192.168.38.10 as a destination somehow ends up with pfSense's MAC address. There must be some policy in Scale that's bypassing the host's routing table.

Speaking of not being configurable, something like this must be happening on my Chromebook. Crostini has an address that looks like it's on the local prefix, but Chrome inserts itself as an extra hop between it and the rest of the LAN. And it's not reachable from the LAN until I create some state by initiating an outward connection from the VM.

Anyhoo, Good Luck!

-

Can this thread be marked as solved somehow?

From pfSense's PoV it is solved by enabling a workaround. The problem still exists - but it's not pfSense's issue

Thanks to all who contributed

-

@justconfused I'm on my mobile right now, so I'll admit I didn't read this with a fine-tooth comb, but I will say: I ran into a very strange and similar-sounding issue some months ago involving docker containers and networking. It boiled down to the fact that I wasn't aware that the default internal docker network was 172.17.0.0/16. This was causing routing and VPN issues for me that I could not figure out for days. Eventually I ended up changing some of my VPN transit networks to work around that, and everything was smooth.

Not sure if this applies at all, but wanted to throw it out there.
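A quick way to rule this kind of clash in or out is to check the container ranges against every other prefix in use. The Python sketch below does that with the standard `ipaddress` module. The docker default bridge, the kube-bridge range, the LAN and the iSCSI network come from this thread; the two VPN transit networks are invented purely to show one clash and one non-clash, and `find_overlaps` is an illustrative helper name.

```python
import ipaddress

# Container-side ranges mentioned in this thread.
container_nets = {
    "docker default bridge": "172.17.0.0/16",
    "TrueNAS kube-bridge": "172.16.0.0/16",
}

# LAN and iSCSI are from the thread; the VPN transit networks are made up.
other_nets = {
    "LAN": "192.168.38.0/24",
    "iSCSI": "172.22.16.0/24",
    "hypothetical VPN transit A": "172.17.5.0/30",
    "hypothetical VPN transit B": "10.99.0.0/30",
}


def find_overlaps(a, b):
    """Return sorted (name_a, name_b) pairs whose networks share addresses."""
    hits = []
    for name_a, cidr_a in a.items():
        for name_b, cidr_b in b.items():
            if ipaddress.ip_network(cidr_a).overlaps(ipaddress.ip_network(cidr_b)):
                hits.append((name_a, name_b))
    return sorted(hits)


for pair in find_overlaps(container_nets, other_nets):
    print("overlap:", pair)
```

With these inputs only the invented transit network A collides with the docker default bridge. The real LAN and iSCSI ranges from this thread do not overlap either container range.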

-

@justconfused I can mark it solved for you. So you're currently working? While, as I said, I really don't think this is a pfsense issue, we want to help in any way we can to get you working.