23.1 using more RAM

-

@jimp said in 23.1 using more RAM:

I'd have to look over the code but one thing it does during the daily periodic script is check if ZFS pools need to be scrubbed. I wouldn't expect that to trigger high memory usage in general, but it's possible. That wouldn't explain why some people see it and others don't, though.

Someone could reboot and then run periodic script by hand to see if doing so also triggers the increase in memory usage. Or even just try

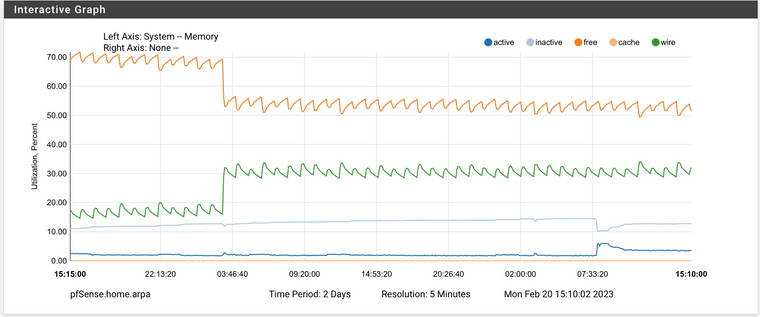

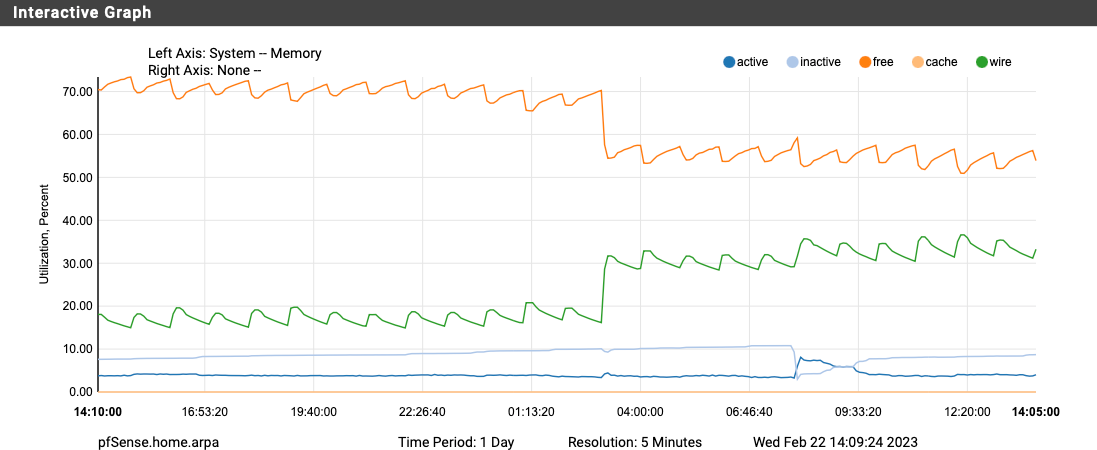

zpool scrub pfSenseand check before/after that finishes. You can runzpool status pfSenseto check on the status of an active scrub operation.Hi Jim, I will try that when I get some free time today. This is a daily occurrence on my 6100 MAX. Here's 2-day graph from a few days ago. The memory never gets returned after the spike occurs.
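The before/after check suggested above could be sketched roughly as follows. This assumes a FreeBSD-based shell where `sysctl` exposes `vm.stats.vm.v_wire_count` and `hw.pagesize`; the helper names `pages_to_mib` and `wired_mib` are hypothetical, and the pool name `pfSense` is taken from the quoted post.

```shell
#!/bin/sh
# Convert a page count ($1) to whole MiB for a page size in bytes ($2).
# pages_to_mib is a hypothetical helper, not something pfSense ships.
pages_to_mib() {
    echo $(( $1 * $2 / 1048576 ))
}

# Print the current wired memory in MiB (uses FreeBSD sysctl names).
wired_mib() {
    pages_to_mib "$(sysctl -n vm.stats.vm.v_wire_count)" "$(sysctl -n hw.pagesize)"
}

# On the firewall itself, the sequence would be roughly:
#   wired_mib                 # note the value before
#   zpool scrub pfSense       # start the scrub
#   zpool status pfSense      # repeat until the scrub is no longer in progress
#   wired_mib                 # compare with the first value
```

Nothing here starts a scrub on its own; the functions are only defined, so the comparison can be run by hand at a convenient time.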

-

@jimp: I'm seeing that memory jump too, and I don't use ZFS!?

Regards

-

If it's ZFS ARC usage that's what I'd expect to see. It won't give it up right away but if something else needs the RAM the kernel should reduce the cache as needed under memory pressure. That doesn't mean it would never swap, but it should mean it doesn't fill up swap.

You could also experiment with setting an upper bound on the ARC size (`vfs.zfs.arc_max=<bytes>` as a tunable, doesn't need to be a loader tunable) for example, but the exact value for that depends a lot on your system (RAM size/Kernel memory, disk size, etc.). There are likely other ZFS parameters that could be tuned if that does turn out to be related.

If you aren't using ZFS then we'll need to keep digging into what exactly is happening at that time that might also be contributing.
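Since `vfs.zfs.arc_max` takes a byte count, a small conversion helper can avoid arithmetic slips. This is a minimal sketch: `mib_to_bytes` is a hypothetical helper name, and the 512 MiB figure is an arbitrary example, not a recommendation for any particular system.

```shell
#!/bin/sh
# Convert MiB ($1) to bytes, because vfs.zfs.arc_max expects bytes.
# mib_to_bytes is a hypothetical helper name.
mib_to_bytes() {
    echo $(( $1 * 1024 * 1024 ))
}

# Example value only: a 512 MiB cap. Pick a size that suits the system.
ARC_CAP_BYTES="$(mib_to_bytes 512)"
echo "vfs.zfs.arc_max=${ARC_CAP_BYTES}"

# To apply at runtime on a ZFS system (as root):
#   sysctl vfs.zfs.arc_max="${ARC_CAP_BYTES}"
# To read the current ARC size in bytes for comparison:
#   sysctl -n kstat.zfs.misc.arcstats.size
```

The `sysctl` lines are left as comments so the snippet can be read and tested anywhere without changing a live system.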

-

It's an SG-3100 and I never set up this appliance with ZFS.

Regards

-

@jimp Running periodic was done in this thread https://forum.netgate.com/topic/178023/1100-upgrade-22-05-23-01-high-mem-usage/30 and triggered it.

-

@fsc830 said in 23.1 using more RAM:

It's an SG-3100 and I never set up this appliance with ZFS.

The 3100 isn't capable of ZFS anyhow (it's 32-bit ARM). But just to be certain, do you see any messages referring to ZFS in the system log (Status > System Logs, General tab), boot log (Status > System Logs, OS Boot tab), or kernel message buffer (From a shell or Diagnostics > Command, run `dmesg`)?

Even if the system doesn't use ZFS for its filesystem something may be inadvertently triggering the modules to load.
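One way to run that check from a shell is sketched below. `mentions_zfs` is a hypothetical helper name; `dmesg` and `kldstat` are the stock FreeBSD commands for the kernel message buffer and the list of loaded kernel modules.

```shell
#!/bin/sh
# Succeeds (exit status 0) if any line on stdin mentions ZFS,
# matched case-insensitively. mentions_zfs is a hypothetical helper name.
mentions_zfs() {
    grep -qi 'zfs'
}

# On the firewall, from a shell:
#   dmesg   | mentions_zfs && echo "kernel messages mention ZFS"
#   kldstat | mentions_zfs && echo "a ZFS-related module is loaded"
```

If neither command prints anything, that suggests the ZFS modules were not loaded, though the system and boot logs in the GUI are still worth a look.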

-

@jimp said in 23.1 using more RAM:

I'd have to look over the code but one thing it does during the daily periodic script is check if ZFS pools need to be scrubbed. I wouldn't expect that to trigger high memory usage in general, but it's possible. That wouldn't explain why some people see it and others don't, though.

Someone could reboot and then run periodic script by hand to see if doing so also triggers the increase in memory usage. Or even just try `zpool scrub pfSense` and check before/after that finishes. You can run `zpool status pfSense` to check on the status of an active scrub operation.

No change after launching those commands.

-

@jimp: Nothing about ZFS in any log.

I am aware that the SG-3100 does not use ZFS. Still, I did see some posts here in which people say they had set up the appliance with ZFS!? But I didn't investigate this further, as I don't intend to use ZFS.

Regards

-

It would require an extremely unorthodox install to get ZFS on a 3100. I won't say it's impossible because I'm sure someone would prove me wrong! But it would require a significant level of FreeBSD knowledge to even know where to start. So it's probably just a misunderstanding.

-

Looks like from the periodic scripts the `pkg` related ones in `/usr/local/etc/periodic/security` are the ones that seem to trigger it for me. They use `pkg` to validate parts of the system in various ways, but we don't do anything with the output so it is really unnecessary.

Someone who is seeing this, try creating `/etc/periodic.conf` with the following content:

    security_status_baseaudit_enable="NO"
    security_status_pkg_checksum_enable="NO"
    security_status_pkgaudit_enable="NO"

And then reboot and watch it overnight (or try `periodic daily` from a shell prompt, I suppose).

You can also knock the ARC usage back down by setting a lower limit than you have now, which is almost certainly 0 (unlimited), the default. So you can do this to set a 256M limit:

    sysctl vfs.zfs.arc_max='268435456'

You can set it back to `0` to remove the limit after making sure the ARC usage dropped back down.

All that said, I still can't reproduce it being a problem source here. I have some small VMs with ~1GB RAM and ZFS and their wired usage is high but drops immediately when the system needs active memory.
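Creating the override file described above could be scripted along these lines. This is only a sketch: `write_periodic_overrides` is a hypothetical helper, it overwrites whatever file it is pointed at, and the demonstration writes to a scratch file rather than `/etc/periodic.conf`.

```shell
#!/bin/sh
# Write the three overrides to the file named in $1, replacing its contents.
# write_periodic_overrides is a hypothetical helper. On a live system the
# target would be /etc/periodic.conf; review any existing file first,
# because this overwrites it.
write_periodic_overrides() {
    cat > "$1" <<'EOF'
security_status_baseaudit_enable="NO"
security_status_pkg_checksum_enable="NO"
security_status_pkgaudit_enable="NO"
EOF
}

# Demonstration against a scratch file instead of /etc/periodic.conf.
demo_conf="${TMPDIR:-/tmp}/periodic.conf.demo.$$"
write_periodic_overrides "$demo_conf"
cat "$demo_conf"
rm -f "$demo_conf"
```

After writing the real file, running `periodic daily` by hand (or waiting overnight) is the way to see whether the memory jump still occurs.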

-

@jimp said in 23.1 using more RAM:

their wired usage is high but drops immediately when the system needs active memory.

I think this is part of the problem to be honest - users hate to see all their memory being used for whatever reason ;) Even if something is only using it because nothing else needs it, and it will be freed as soon as something else does.

Just seeing high memory use seems to signal that something is wrong, from the user's perspective.

-

@johnpoz said in 23.1 using more RAM:

@jimp said in 23.1 using more RAM:

their wired usage is high but drops immediately when the system needs active memory.

I think this is part of the problem to be honest - users hate to see all their memory being used for whatever reason ;) Even if something is only using it because nothing else needs it, and it will be freed as soon as something else does.

Just seeing high memory use seems to signal that something is wrong, from the user's perspective.

Right, "Free RAM is wasted RAM" and all, but some people are implying that it isn't giving up the memory on their systems, or that it's causing other problems such as interfering with traffic. It's not clear yet whether there is a direct causal link. But if we can reduce/eliminate the impact of ARC as a potential cause it will at least help narrow down what those other issues might be.

-

@jimp all true..

X doesn't work - oh, my memory use is showing 90%, that must be it.. when that most likely has nothing to do with X. But sure, if you can reduce it so memory is only showing 10% used and they still have X, then they're more likely to look deeper into what is actually causing their X issue.

edit:

Just an attempt to put it another way, not trying to mansplain it to you hehehe ;) ROFL..

-

OMG... you mean...

the memory is sucked by extra terrestrials?

Or why do you refer to the X-files?

SCNR

Regards

-

@fsc830 Maybe they have balloons....

-

The facts are something changed on my 6100 MAX between 22.05 and 23.01 that has essentially doubled my memory utilization at 3AM and never returns to normal without a reboot only to repeat itself again at 3AM.

Is it causing me a problem today? No, but it does bother me when I go from 18% to almost 40% for no apparent reason. This is clearly happening on other Netgate appliances too. Thanks, guys.

-

Right but we still don't know if you have a problem or the perception of a problem. It's almost certainly the latter.

It looks like on 22.05 those periodic jobs were all entirely disabled but it's not clear where that change got lost, since the file hasn't changed upstream.

For now you can edit `/etc/crontab` and remove the `periodic` lines or comment them out -- do not touch the `rc.periodic` lines as those are OK.

Before:

    # Perform daily/weekly/monthly maintenance.
    1 3 * * * root periodic daily
    15 4 * * 6 root periodic weekly
    30 5 1 * * root periodic monthly

After:

    # Perform daily/weekly/monthly maintenance.
    #1 3 * * * root periodic daily
    #15 4 * * 6 root periodic weekly
    #30 5 1 * * root periodic monthly

-

@jimp said in 23.1 using more RAM:

/etc/crontab

Just made those edits to the crontab file and will reboot this evening. Thanks, Mr. P!
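For anyone who would rather not hand-edit the file, the crontab change described above can be sketched as a `sed` filter. `comment_periodic` is a hypothetical helper name, and the `rc.periodic` sample line in the demonstration is illustrative only, not copied from a real pfSense crontab.

```shell
#!/bin/sh
# Read crontab text on stdin and comment out lines whose command is
# "periodic daily", "periodic weekly" or "periodic monthly".
# Lines that run rc.periodic do not match (no whitespace directly before
# "periodic") and pass through unchanged, as do already-commented lines.
# comment_periodic is a hypothetical helper name.
comment_periodic() {
    sed -E 's/^([^#].*[[:space:]]periodic[[:space:]]+(daily|weekly|monthly)[[:space:]]*)$/#\1/'
}

# Demonstration on sample text; the rc.periodic line is illustrative only.
printf '%s\n' \
    '# Perform daily/weekly/monthly maintenance.' \
    '1 3 * * * root periodic daily' \
    '15 4 * * 6 root periodic weekly' \
    '30 5 1 * * root periodic monthly' \
    '1 3 * * * root /etc/rc.periodic daily' \
    | comment_periodic
```

On a real system, one cautious approach would be to write the filtered output to a temporary file, compare it with `/etc/crontab`, and only then replace the original.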

-

@jimp said in 23.1 using more RAM:

try creating /etc/periodic.conf with the following content

@jrey said in 1100 upgrade, 22.05->23.01, high mem usage:

Yup I went a little further in testing, and pin pointed the exact step.

But I also then just disabled the 450.status-security in the config.

as it was configured out of box as (mail root) no one would ever see the output anyway.

following through I also sent the output to log files so in case I might like to look at it one day (NOT)

this thread details all the steps I took and at what point it breaks, the settings quoted on the reference thread will do the same as disabling 450.status-security

there is nothing to see there anyway, especially as configured out of box.

security_status_baseaudit_enable="NO"

security_status_pkg_checksum_enable="NO"

security_status_pkgaudit_enable="NO"

Agree it's not a "problem" unless one needs the memory. However, 1100 users are probably more sensitive to memory usage...that's one reason we sold 2100s instead. :) It looks alarming on the graph but at least with 4 GB, for most people it's a bit irrelevant if 30% or 50% is in use.

However if as jrey says it's an unused feature then it shouldn't hurt to disable it.
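To confirm which knobs a given `periodic.conf` actually turns off, a small check like the one below may help. It is a sketch under assumptions: `knob_disabled` is a hypothetical helper, and `daily_status_security_enable` is the stock FreeBSD variable that controls `450.status-security`; whether pfSense behaves identically is assumed here, not confirmed by the thread.

```shell
#!/bin/sh
# Succeed if the file in $1 sets the knob named in $2 to NO
# (quotes optional, case-insensitive, trailing comment allowed).
# knob_disabled is a hypothetical helper name.
knob_disabled() {
    grep -Eiq "^[[:space:]]*$2=\"?NO\"?[[:space:]]*(#.*)?\$" "$1"
}

# Example usage on a live system:
#   knob_disabled /etc/periodic.conf security_status_pkgaudit_enable \
#       && echo "pkg audit check is disabled"
#   knob_disabled /etc/periodic.conf daily_status_security_enable \
#       && echo "450.status-security is disabled as a whole"
```

The helper only inspects the one file it is given; it does not account for defaults or other override files.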

-

@steveits said in 23.1 using more RAM:

@jimp said in 23.1 using more RAM:

try creating /etc/periodic.conf with the following content

@jrey said in 1100 upgrade, 22.05->23.01, high mem usage:

Yup I went a little further in testing, and pin pointed the exact step.

But I also then just disabled the 450.status-security in the config.

as it was configured out of box as (mail root) no one would ever see the output anyway.

following through I also sent the output to log files so in case I might like to look at it one day (NOT)

this thread details all the steps I took and at what point it breaks, the settings quoted on the reference thread will do the same as disabling 450.status-security

there is nothing to see there anyway, especially as configured out of box.

security_status_baseaudit_enable="NO"

security_status_pkg_checksum_enable="NO"

security_status_pkgaudit_enable="NO"

Agree it's not a "problem" unless one needs the memory. However, 1100 users are probably more sensitive to memory usage...that's one reason we sold 2100s instead. :) It looks alarming on the graph but at least with 4 GB, for most people it's a bit irrelevant if 30% or 50% is in use.

However if as jrey says it's an unused feature then it shouldn't hurt to disable it.

Just rebooted after making those changes. I will report back here tomorrow morning!