Major DNS Bug 23.01 with Quad9 on SSL

-

-

@cmcdonald

@stephenw10

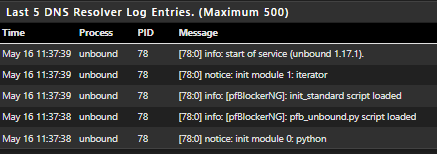

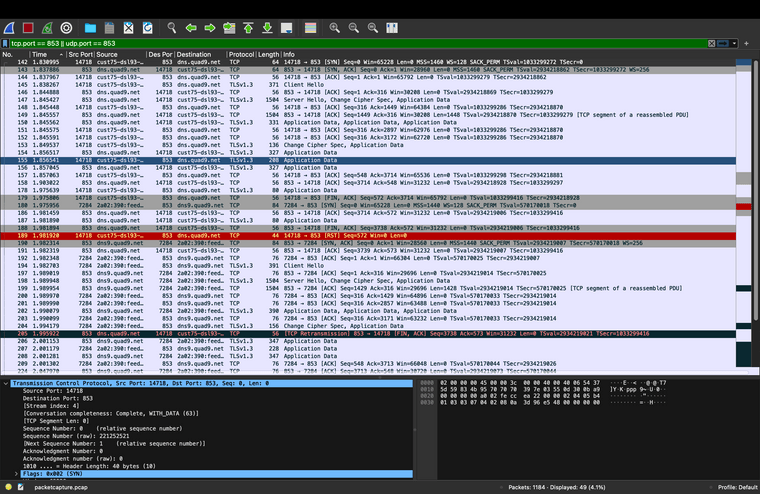

In an effort to explain why DoT is so slow on pfSense I have run multiple pcaps to try and understand how the resolver is handling forwarded queries to the servers set in 'General Setup'. The findings are illuminating and I now understand why slow queries are selected, compounded and compounded again by TLS to the point of failure, whilst faster name servers are ignored.

On this simple successful test I am using 4 name servers from dns.quad9.net. Two are ipv4 servers and two more are on ipv6:

- 9.9.9.9

- 149.112.112.112

- 2620:fe::fe

- 2620:fe::9

From these servers a typical fast response is 7ms but can be as high as 12ms. Clearly if there is a problem with a name server the response can be much slower, up to 300ms or more.

In this single-lookup example I used kia.com (as something unlikely to be used and therefore cached). The sequence:

- pfSense sends a single query to just 1 ipv4 server - 149.112.112.112

- All other servers ignored

- Answered to unbound in 151ms

- pfSense sends a single query to just 1 ipv6 server - 2620:fe::9

- All other servers ignored

- Answered to unbound in 297ms

- DNS answered to client in 448ms

- This is the sum of the 2 queries, 151 + 297ms, as they are asked and answered sequentially

- The ipv6 query does not start until the ipv4 query is fully answered
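To put numbers on the sequence above, here is a quick sketch in Python using the two timings from the capture. The concurrent case is purely hypothetical; nothing in the capture suggests unbound can be made to behave that way.

```python
# Timings taken from the capture described above (milliseconds).
first_query_ms = 151   # answered by 149.112.112.112 (ipv4)
second_query_ms = 297  # answered by 2620:fe::9 (ipv6)

# Observed behaviour: the second query starts only after the first is answered,
# so the client waits for the sum of both.
sequential_ms = first_query_ms + second_query_ms

# Hypothetical alternative: both queries in flight at once, so the client
# would wait only for the slower of the two.
concurrent_ms = max(first_query_ms, second_query_ms)

print(f"sequential: {sequential_ms} ms")
print(f"concurrent: {concurrent_ms} ms")
```

The sequential figure matches the 448ms seen by the client; the concurrent figure is only what the same two answers would have cost if they had overlapped.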

The forwarded query does not go to all servers, one is simply picked at random. It does not matter how fast or slow a server is; as long as it is deemed valid and returning an answer in under 400ms it can be picked. If a server normally capable of returning an answer in 7ms is struggling, but still under 400ms, it will continue to be used. Multiples of this added latency will then pollute the back-and-forth of the DoT TCP and TLS handshakes, leading to a considerable delay or potentially a failure.
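As a rough illustration of what a random pick costs, the sketch below compares the average of a uniform random choice with always using the fastest server. The 400ms threshold is the one described above. The per-server figures are illustrative numbers borrowed from this post and are not measured per-server RTTs. This is a simplified model of the behaviour as I understand it, not unbound's actual selection code.

```python
from statistics import mean

BAND_MS = 400  # acceptance threshold as described in the post

# Illustrative figures only (ms); the addresses are the four Quad9 servers.
rtt_ms = {
    "9.9.9.9": 7,
    "149.112.112.112": 151,
    "2620:fe::fe": 12,
    "2620:fe::9": 297,
}

# Every server answering inside the band is a candidate for the random pick.
eligible = {server: rtt for server, rtt in rtt_ms.items() if rtt < BAND_MS}

# A uniform random pick costs, on average, the mean of the candidates.
expected_random_ms = mean(eligible.values())

# Always using the quickest candidate would cost this instead.
fastest_ms = min(eligible.values())

print(f"candidates: {len(eligible)}")
print(f"average of a random pick: {expected_random_ms:.2f} ms")
print(f"fastest server: {fastest_ms} ms")
```

With these made-up figures all four servers qualify, so the two struggling servers drag the average far above the 7ms the best server can manage.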

I have no answer as to why the attempt at using an ipv6 server only starts once the ipv4 DoT sequence is completed. Hopefully someone with more unbound insight can answer this element?

For those of us with upstream servers normally operating in the 7 to 12ms range the acceptance of up to 400ms seems ridiculous. The random choice of server used does little for the client but clearly eases the load at the upstream provider. Not having an option to ask all servers and utilise the fastest compounds matters further. Only starting an ipv6 query once ipv4 has completed is another unhealthy delay. Added all together along with the additional handshakes of TCP/TLS we are left with a slow and potentially unreliable DoT capability.
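The handshake amplification can be sketched with simple round-trip counting. This assumes a fresh connection needs one round trip for the TCP handshake, one for a TLS 1.3 handshake (two for TLS 1.2) and one for the query itself. It ignores server processing time and any packet loss, and the function name and parameters are my own, purely for illustration.

```python
def dot_lookup_ms(rtt_ms: float, tls_handshake_rtts: int = 1,
                  reuse_connection: bool = False) -> float:
    """Rough time for one DoT lookup, counting network round trips only."""
    if reuse_connection:
        # Connection already established: only the query/response exchange.
        return rtt_ms
    tcp_rtts = 1    # SYN / SYN-ACK
    query_rtts = 1  # DNS query / response over the established session
    return rtt_ms * (tcp_rtts + tls_handshake_rtts + query_rtts)


# A healthy 7 ms server versus one that is struggling at 100 ms.
print(dot_lookup_ms(7))                          # fresh TLS 1.3 connection
print(dot_lookup_ms(100))                        # same, slow server
print(dot_lookup_ms(100, tls_handshake_rtts=2))  # TLS 1.2 style handshake
print(dot_lookup_ms(7, reuse_connection=True))   # session kept open
```

Under these assumptions every extra millisecond of server latency is paid three or four times on a new connection, which is why a server that is merely slow over plain UDP can look much worse over DoT.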

The example pcap snapshot, for those that like data:

Ref:

https://nlnetlabs.nl/documentation/unbound/info-timeout/

[As an aside, for Quad9 users only, the ipv6 response fqdn is shown as dns9.quad9.net, rather than dns.quad9.net as shown on the Quad9 help pages.]

-

Hmm, well I guess that explains why using IPv6 servers makes it more likely to hit this.

-

@robbiett So if you remove the IPv6 (or v4) servers from the DNS (forwarding) list that cuts the time more or less in half?

-

@steveits said in Major DNS Bug 23.01 with Quad9 on SSL:

> @robbiett So if you remove the IPv6 (or v4) servers from the DNS (forwarding) list that cuts the time more or less in half?

Yep, that seems to be the case.

I'd like someone else to check my work though. I think I have a bog-standard pfSense resolver setup (albeit now with ASLR unset) but until it is peer-reviewed by someone nothing is proven.

-

@stephenw10 said in Major DNS Bug 23.01 with Quad9 on SSL:

> Hmm, well I guess that explains why using IPv6 servers makes it more likely to hit this.

Indeed, especially if reply latency had been compounded already. Throw in multiple near simultaneous requests, say when rendering a typical 'noisy' webpage and you probably have to be thankful that it works at all.

The unbound man page does have some optional parameters that may help but are not currently used in the pfSense version, such as:

- fast-server-permil: <number>

  Specify how many times out of 1000 to pick from the set of fastest servers. 0 turns the feature off. A value of 900 would pick from the fastest servers 90 percent of the time, and would perform normal exploration of random servers for the remaining time. When prefetch is enabled (or serve-expired), such prefetches are not sped up, because there is no one waiting for it, and it presents a good moment to perform server exploration. The fast-server-num option can be used to specify the size of the fastest servers set. The default for fast-server-permil is 0.

- fast-server-num: <number>

  Set the number of servers that should be used for fast server selection. Only use the fastest specified number of servers with the fast-server-permil option, that turns this on or off. The default is to use the fastest 3 servers.

I've no direct experience with these options though and I've not found anything that suggests an option to send ipv4 and ipv6 concurrently.
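For what it's worth, here is a back-of-envelope model in Python of how those two options might change the average latency. It takes the man page wording at face value: with probability permil/1000 the pick comes from the fastest `num` servers, otherwise from all of them at random. The function and the RTT figures are hypothetical and this is not how unbound is implemented internally.

```python
from statistics import mean


def expected_latency_ms(rtts_ms, permil=0, num=3):
    """Expected RTT of one pick under a simplified fast-server model."""
    if not 0 <= permil <= 1000:
        raise ValueError("permil must be between 0 and 1000")
    fastest = sorted(rtts_ms)[:num]  # the 'fast server' set
    p_fast = permil / 1000           # share of picks taken from that set
    return p_fast * mean(fastest) + (1 - p_fast) * mean(rtts_ms)


# Illustrative RTTs in ms: two healthy servers and two struggling ones.
rtts = [7, 12, 151, 297]

print(f"{expected_latency_ms(rtts):.1f} ms")                       # feature off
print(f"{expected_latency_ms(rtts, permil=900, num=2):.1f} ms")    # mostly fastest two
print(f"{expected_latency_ms(rtts, permil=1000, num=2):.1f} ms")   # fastest two only
```

If the model is anywhere near right, a high fast-server-permil with a small fast-server-num would pull the average close to the healthy servers while still leaving some picks for exploration. It does nothing for the ipv4-then-ipv6 ordering.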

Still learning.

-

It would be best to split off any non-ASLR performance/tuning discussion to a new thread so this can stay relevant to the central underlying problem here.

-

@jimp said in Major DNS Bug 23.01 with Quad9 on SSL:

> It would be best to split off any non-ASLR performance/tuning discussion to a new thread so this can stay relevant to the central underlying problem here.

It's your house so happy to do whatever but my only caution is that these issues are already intertwined. ASLR became a partial fix but perhaps not the whole story on the DNS issues observed by the OP and others.

-

It's hard to know for sure since there are multiple discussions happening in this one thread. The original failures seem to be solved by disabling ASLR. Any slowness/performance issues where it's not acting as fast as you expect are not failures. If disabling ASLR is degrading performance (which is unlikely) that is still a separate discussion because it's still working, not failing to resolve.

-

It's hard to know Jim, especially until we have some more verified proof.

My observations and issues were as the OP described, with things timing out, failing to load, becoming intermittent and then suddenly ok again, for no apparent reason.

Now that we are deeper in, I am positive that the ASLR change made a significant difference but not an outright fix, especially for those running ipv6. I think we are closer to working out why cases such as mine are still hovering at the 'timing-out', 'intermittent failure' cliff-edge. The raw DNS performance is there, but it does not take much to go wrong for the pfSense / Unbound combination to fail, certainly with the way things are working right now. DNS being slow can in itself cause a failure to resolve.

Latency amplification through TLS, using a slow server over a faster one and only running ipv6 look-ups when ipv4 has been completed don't appear to be ideal, even though unsetting ASLR collectively moved us all a bit further back from that cliff-edge.

Again, I'm still learning as this has thrown a few surprises along the way.

-

It's hard to know that your situation is even the same or similar to OP's in this case. You can't properly isolate things by changing so many variables at the same time in multiple different environments and chasing all these different potential threads.

There are multiple confirmations that disabling ASLR has corrected the original reported problem behavior for people (between here and the other various reports), even on FreeBSD 13.2 directly where the only real relevant change was that ASLR was turned on by default.

Anything else you're observing is unlikely to be directly relevant to that change. There is likely room for performance improvement in your environment in various ways but it's unlikely to be the same root cause here.

-

@jimp Ok, I'm back in my box.

-

If you want to keep discussing various ways to optimize the resolver, feel free to do so, just in a new thread where others can join in who maybe were not even hitting this original issue but might have other relevant observations.

-

@jimp That's ok, I'll just drop the subject so no need for another thread.

-

@johnpoz

So. Even though I understand and agree with your opinion, how do you explain that many users, including me, are still sticking with a DoT configuration? Aren't you, then, preaching in the desert?

If you could convince me to drop this setting, it would be remarkable. Otherwise, your opinion is like the saying: "everybody has an opinion, and it's like an ass****, everybody has one and it stinks". Please enlighten us further?

-

@marchand-guy said in Major DNS Bug 23.01 with Quad9 on SSL:

> DoT configuration? Aren't you, then, preaching in the desert?

I couldn't care less if I'm preaching to nothing - you go ahead and send all your info to whoever you want, I have no desire ever to forward.. I will resolve thank you very much ;)

I see no point forwarding - it sure isn't hiding anything from anyone, and it has its own complications.. If you had some isp that was intercepting your dns, ok.. I would then run my own vps, and then resolve from there and forward to my vps.

If you like the filtering they do - hey, you're more than welcome to trust them.. but you sure are not hiding where you're going from your isp like you think you are. Until such time as ech is everywhere (ie the sni is encrypted), since esni is dead, you're not hiding anything from your isp if they want to see it.

Each to their own.. These guys are good sales folks and love to scaremonger, etc.. If you think sending all your dns to company X is in your best interest.. Have at it.. I don't really care where you send your dns, I know where I am not going to send it ;) I will just talk to the owning NSs for the domains and tlds I want to look up..

If my isp was messing with my dns, I would for starters be looking for another isp. If I was in some country where they all did it, then I would use a vpn, and that vpn wouldn't be any of these services, it would be my own vps that I run a vpn to.

-

If you want to discuss the merits/worth of DoT that should also be moved to a new thread. It's not relevant to solving this problem. Let's keep this on topic.

-

@jimp Yessir! I'm done though.

-


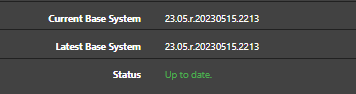

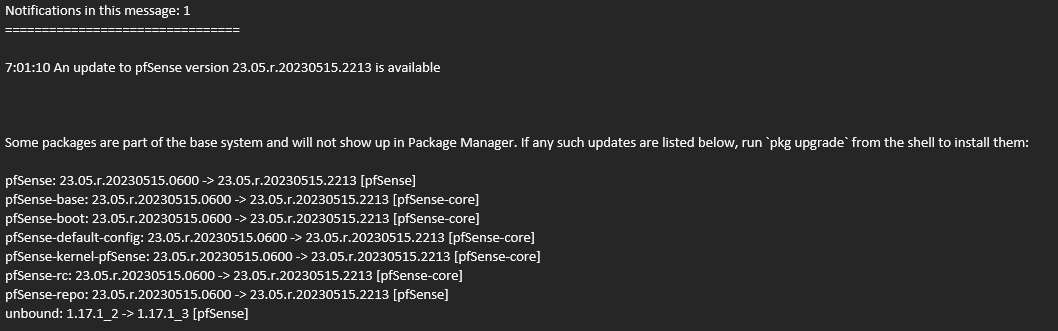

There seems to be some good news:

"Jaap Akkerhuis 2023-06-01 12:41:18 UTC

A fix is developed by upstairs. There will be a new release within weeks with this fix. For the inpatients among us, a prerelease is made available https://github.com/NLnetLabs/unbound/issues/887#issuecomment-1570136710."

-

A potential upstream 'fix' or improvement for ASLR:

https://www.freebsd.org/security/advisories/FreeBSD-EN-23:15.sanitizer.asc

II. Problem Description

Some of the Sanitizers cannot work correctly when ASLR is enabled. Therefore, at the initialization of such Sanitizers, ASLR is detected via procctl(2). If ASLR is enabled, it is first disabled, and then the main executable containing the Sanitizer is re-executed, after printing an appropriate message. However, the Sanitizers work by intercepting various function calls, and by mistake the already-intercepted procctl(2) function was used. This causes an internal error, which usually results in a segfault.

VI. Correction details

This issue is corrected as of the corresponding Git commit hash or Subversion revision number in the following stable and release branches:

| Branch/path | Hash | Revision |
|---|---|---|
| stable/14/ | 1e4798e9677f | stable/14-n265803 |
| releng/14.0/ | 78b4c762b20b | releng/14.0-n265381 |
| stable/13/ | 7c25a53a2cb9 | stable/13-n256726 |