(yet another) IPsec throughput help request

-

@michmoor said in (yet another) IPsec throughput help request:

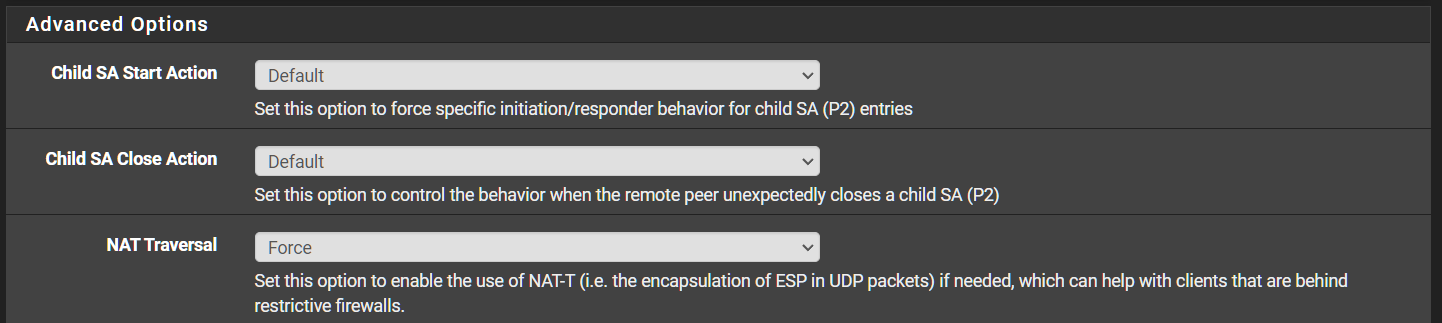

It was recommended that I enable NAT-T Force

Interesting... can you say more about how you enabled it? Does this mean you are using IKEv1 and not IKEv2?

-

@michmoor

Thanks for the tip. Unfortunately, it didn't make any difference in my case.

-

@SpaceBass In that case, what's the hardware terminating the VPN tunnel at each site?

Perhaps there is a limitation there.

-

Europe: 2 x Intel(R) Xeon(R) E-2386G CPU @ 3.50GHz, 128 GB RAM, SSD ZFS RAID 1

US: 2 x Intel(R) Xeon(R) CPU E3-1270 v5 @ 3.60GHz, 64 GB RAM, SSD ZFS RAID 1

-

@SpaceBass Intel NICs?

-

@michmoor

Thanks for the continued troubleshooting help!

US: Intel, bare metal

Europe: VirtIO (the host NIC is Intel)

-

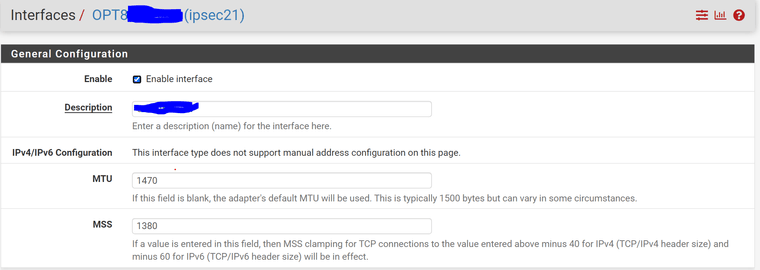

You could try setting MTU/MSS identically on both sides, to exactly the values in the screenshot.

-

@pete35 I don't (currently) use an interface for IPsec.

-

@SpaceBass Do you have any Cryptographic Acceleration? Is it on?

-

@michmoor AES-NI, yes; it is active on both pfSense machines.

-

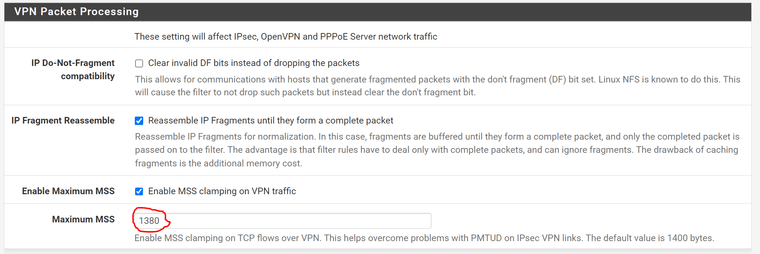

You can try setting MSS clamping under System > Advanced > Firewall & NAT.

Why don't you use a routed VTI?

-

For tunnel mode, an MSS of 1328 is most effective:

https://packetpushers.net/ipsec-bandwidth-overhead-using-aes/
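The per-packet overhead behind such MSS figures can be estimated by subtracting the ESP tunnel-mode headers from the link MTU. Below is a minimal Python sketch, assuming IPv4 without options, AES-CBC (16-byte IV and block), HMAC-SHA1-96 (12-byte ICV), and optional NAT-T UDP encapsulation (8 bytes). These cipher parameters are assumptions, not confirmed settings for the tunnels in this thread; other suites change the result. The function name `esp_tunnel_max_mss` is hypothetical.

```python
# Sketch: largest TCP MSS that fits in one ESP tunnel-mode packet (IPv4).
# Assumed defaults (NOT confirmed for the tunnels in this thread):
# AES-CBC (16-byte IV, 16-byte block), HMAC-SHA1-96 (12-byte ICV).

IPV4_HEADER = 20   # outer and inner IPv4 header, no options
TCP_HEADER = 20    # inner TCP header, no options
ESP_HEADER = 8     # SPI (4 bytes) + sequence number (4 bytes)
ESP_TRAILER = 2    # pad length (1 byte) + next header (1 byte)
NAT_T_UDP = 8      # UDP header added when NAT-T encapsulation is used


def esp_tunnel_max_mss(link_mtu: int = 1500, iv_len: int = 16, icv_len: int = 12,
                       block_size: int = 16, nat_t: bool = False) -> int:
    """Return the largest inner TCP MSS that avoids fragmenting the outer packet."""
    fixed = IPV4_HEADER + ESP_HEADER + iv_len + icv_len
    if nat_t:
        fixed += NAT_T_UDP
    # Bytes left for the encrypted part: inner packet + padding + ESP trailer.
    room = link_mtu - fixed
    # The encrypted part must be a whole number of cipher blocks.
    room -= room % block_size
    inner_packet = room - ESP_TRAILER
    return inner_packet - IPV4_HEADER - TCP_HEADER


if __name__ == "__main__":
    print("ESP tunnel, no NAT-T:", esp_tunnel_max_mss())
    print("ESP tunnel, NAT-T:   ", esp_tunnel_max_mss(nat_t=True))
```

Under these assumptions a 1500-byte link allows an MSS of 1398 (1382 with NAT-T). Both are above 1328, so the value recommended here leaves some extra headroom; treat the calculated number as an upper bound rather than a target.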

@NOCling said in (yet another) IPsec throughput help request:

For Tunnel mode MSS 1328 is most effective:

https://packetpushers.net/ipsec-bandwidth-overhead-using-aes/

WOAH! Massive difference (in only one direction)...

From US -> Europe:

[SUM] 0.00-10.00 sec 252 MBytes 211 Mbits/sec 9691 sender

[SUM] 0.00-10.20 sec 233 MBytes 192 Mbits/sec receiver

Europe -> US:

[SUM] 0.00-10.20 sec 22.0 MBytes 18.1 Mbits/sec 0 sender

[SUM] 0.00-10.00 sec 20.5 MBytes 17.2 Mbits/sec receiver

-

Nice, but now you have to find your way through the peering jungle to make it fast in both directions.

It looks like US -> EU traffic takes a different path than EU -> US. We talked about that in our last meeting, and the solution is not easy.

One option is to use a cloud service provider that is present on both sides, so you can use the interconnect between those cloud instances.

-

@NOCling Unfortunately, my success was very short-lived...

iperf3 traffic still looks improved, but I'm only moving data at 500 kB/s to 1.5 MB/s.

-

@SpaceBass If you temporarily switch to WireGuard, does the issue follow?

If it does, it may not be MTU-related.

-

How do you move your Data?

SMB is a very bad choice for high-latency paths; you need rsync or another WAN-optimized protocol.
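The latency point can be made concrete with two standard back-of-the-envelope ceilings for a single TCP stream: throughput cannot exceed window / RTT, and with packet loss the Mathis et al. approximation gives roughly (MSS / RTT) * C / sqrt(p), with C = sqrt(3/2). This is a minimal Python sketch; the function names are hypothetical, and the RTT, window, and loss values in the usage lines are made up, since the thread never reports the actual US <-> Europe figures.

```python
import math

# Sketch: rough ceilings on a single TCP stream over a long path.
# The RTT, window, and loss figures below are HYPOTHETICAL examples.


def window_limited_mbps(window_bytes: int, rtt_s: float) -> float:
    """At most one window of data can be delivered per round trip."""
    return window_bytes * 8 / rtt_s / 1e6


def mathis_limited_mbps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Mathis et al. approximation: (MSS / RTT) * C / sqrt(p), C = sqrt(3/2)."""
    c = math.sqrt(3 / 2)
    return (mss_bytes * 8 / rtt_s) * c / math.sqrt(loss_rate) / 1e6


def bdp_bytes(target_mbps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to sustain target_mbps."""
    return target_mbps * 1e6 / 8 * rtt_s


if __name__ == "__main__":
    rtt = 0.100  # hypothetical 100 ms transatlantic round trip
    print(f"64 KiB window: {window_limited_mbps(65536, rtt):.1f} Mbit/s")
    print(f"MSS 1328, 0.1% loss: {mathis_limited_mbps(1328, rtt, 0.001):.1f} Mbit/s")
    print(f"In flight for 200 Mbit/s: {bdp_bytes(200, rtt) / 1e6:.1f} MB")
```

With these made-up inputs, a 64 KiB window alone caps one stream at about 5.2 Mbit/s, and even modest loss pulls the ceiling down further. Note that iperf3 prints [SUM] lines when it runs several parallel streams, whereas a single rsync transfer typically rides on one TCP connection, which may be part of why the two measurements disagree.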

@NOCling said in (yet another) IPsec throughput help request:

How do you move your Data?

rsync. I have tried it both over an NFSv4 mount and over SSH (for testing purposes).