BGP - K3S Kubernetes

-

@vacquah said in BGP - K3S Kubernetes:

cilium bgp peering policy?

K3S Deployment

Install Cilium and K3S: https://docs.k3s.io/cli/server

CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)

CLI_ARCH=amd64

if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi

curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum

sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin

rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC='--flannel-backend=none --disable-network-policy --disable=servicelb --disable=traefik --tls-san=172.16.100.110 --disable-kube-proxy --node-label bgp-policy=pandora' sh -

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

echo "export KUBECONFIG=/etc/rancher/k3s/k3s.yaml" >> ~/.bashrc

sudo -E cilium install --version 1.14.5 --set ipam.operator.clusterPoolIPv4PodCIDRList=10.43.0.0/16 --set bgpControlPlane.enabled=true --set k8sServiceHost=172.16.100.110 --set k8sServicePort=6443 --set kubeProxyReplacement=true --set ingressController.enabled=true --set ingressController.loadbalancerMode=dedicated

vi /etc/rancher/k3s/k3s.yaml

Replace 127.0.0.1 with the host IP 172.16.100.110.
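The manual edit of the kubeconfig can also be scripted. A minimal sketch, assuming the file contains the loopback `server: https://127.0.0.1:6443` entry; `set_kubeconfig_server` is a hypothetical helper name, not part of K3s or Cilium:

```shell
# Hypothetical helper: point the kubeconfig's API server URL at the host IP
# instead of the loopback address. A .bak copy of the original file is kept.
set_kubeconfig_server() {
    file=$1
    host_ip=$2
    sed -i.bak "s#https://127\.0\.0\.1:#https://${host_ip}:#" "$file"
}

# Usage (run as root; path and IP are the ones used in this thread):
#   set_kubeconfig_server /etc/rancher/k3s/k3s.yaml 172.16.100.110
```

Only the host part of the URL is rewritten, so the port stays whatever the file already had.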

sudo -E cilium status --wait

sudo cilium hubble enable # need to run as root.. sudo profile issue

sudo -E cilium connectivity test

sudo -E kubectl get svc --all-namespaces

kubectl get services -A

sudo cilium hubble enable

Then apply policy

sudo su - admin

cd /media/md0/containers/

vi cilium_policy.yaml

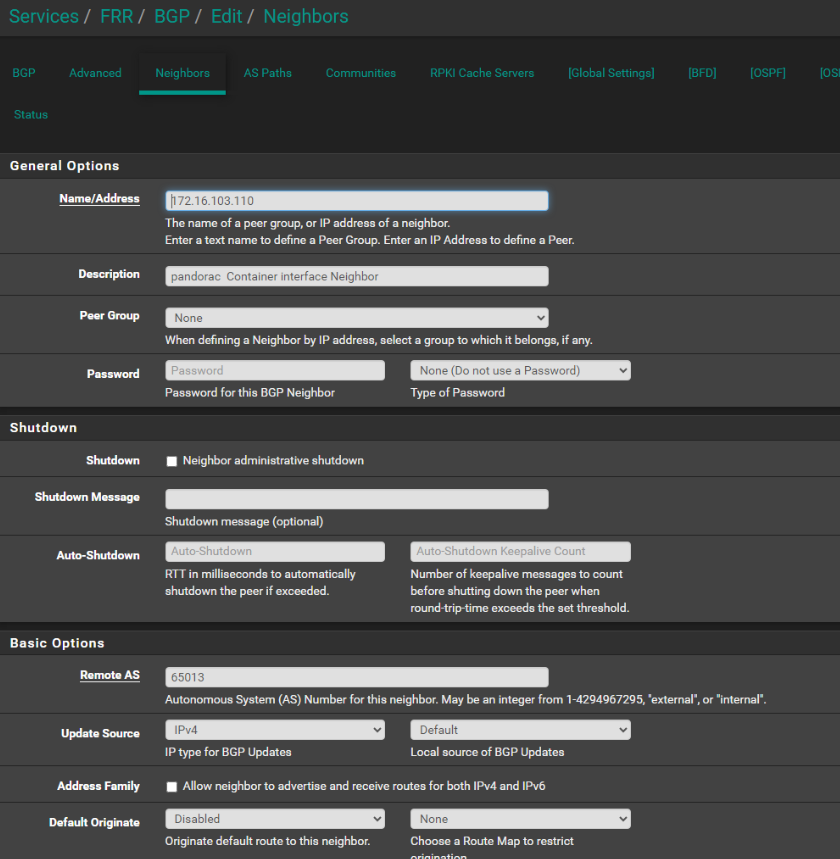

######################apiVersion: "cilium.io/v2alpha1"

kind: CiliumBGPPeeringPolicy

metadata:

name: rt1

spec:

nodeSelector:

matchLabels:

bgp-policy: pandora

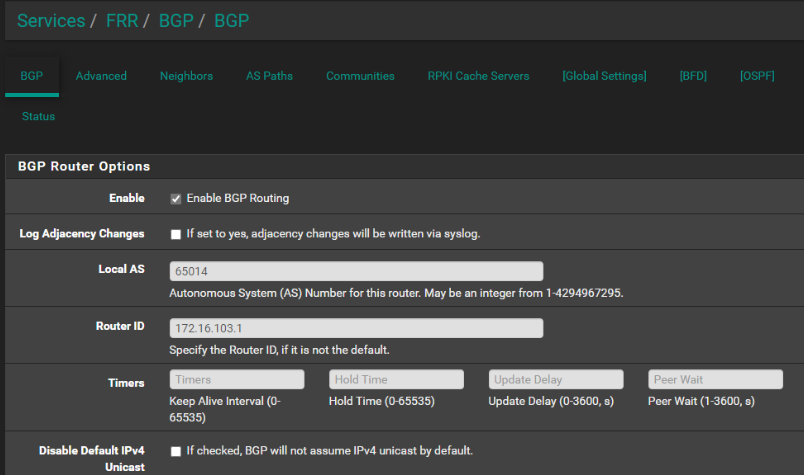

virtualRouters:- localASN: 65013

exportPodCIDR: true

neighbors:- peerAddress: 172.16.100.1/24

peerASN: 65014

eBGPMultihopTTL: 10

connectRetryTimeSeconds: 120

holdTimeSeconds: 90

keepAliveTimeSeconds: 30

gracefulRestart:

enabled: true

restartTimeSeconds: 120

serviceSelector:

matchExpressions:- {key: somekey, operator: NotIn, values: ['never-used-value']}

- peerAddress: 172.16.100.1/24

apiVersion: "cilium.io/v2alpha1"

kind: CiliumLoadBalancerIPPool

metadata:

name: "pandorac"

spec:

cidrs:- cidr: "172.16.103.0/24"

##########
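The policy only takes effect on nodes whose labels match its `nodeSelector`; here that is the `bgp-policy=pandora` label set with `--node-label` in the K3S install command. A small sketch for checking the match before applying, using only the standard `kubectl` label selector (`-l`); `nodes_matching` and `has_matching_node` are hypothetical helper names:

```shell
# Hypothetical helper: list nodes carrying the given label (key=value).
nodes_matching() {
    kubectl get nodes -l "$1" -o name
}

# Hypothetical helper: succeed only if at least one node carries the label.
has_matching_node() {
    [ -n "$(nodes_matching "$1")" ]
}

# Usage:
#   has_matching_node bgp-policy=pandora || echo "no node matches the nodeSelector"
```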

root@pandora:/media/md0/containers# kubectl apply -f cilium_policy.yaml

root@pandora:/media/md0/containers# kubectl get ippools -A

    NAME       DISABLED   CONFLICTING   IPS AVAILABLE   AGE
    pandorac   false      False         253             4s
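With the pool in place, a `LoadBalancer` service should be assigned an address from 172.16.103.0/24. A sketch for reading that address from a script, assuming the `cilium-ingress` service in `kube-system` that the Cilium ingress controller creates in this setup; `lb_ip` is a hypothetical helper name:

```shell
# Hypothetical helper: print the first external IP assigned to a LoadBalancer service.
lb_ip() {
    namespace=$1
    service=$2
    kubectl -n "$namespace" get svc "$service" \
        -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Usage:
#   lb_ip kube-system cilium-ingress
```

An empty result would suggest the service has not been given an address from the pool yet.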

root@pandora:~# kubectl get svc -A

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 6m

kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 5m54s

kube-system metrics-server ClusterIP 10.43.76.245 <none> 443/TCP 5m53s

kube-system hubble-peer ClusterIP 10.43.10.37 <none> 443/TCP 5m50s

kube-system hubble-relay ClusterIP 10.43.124.90 <none> 80/TCP 2m53s

cilium-test echo-same-node NodePort 10.43.151.50 <none> 8080:30525/TCP 2m3s

cilium-test cilium-ingress-ingress-service NodePort 10.43.4.143 <none> 80:31000/TCP,443:31001/TCP 2m3s

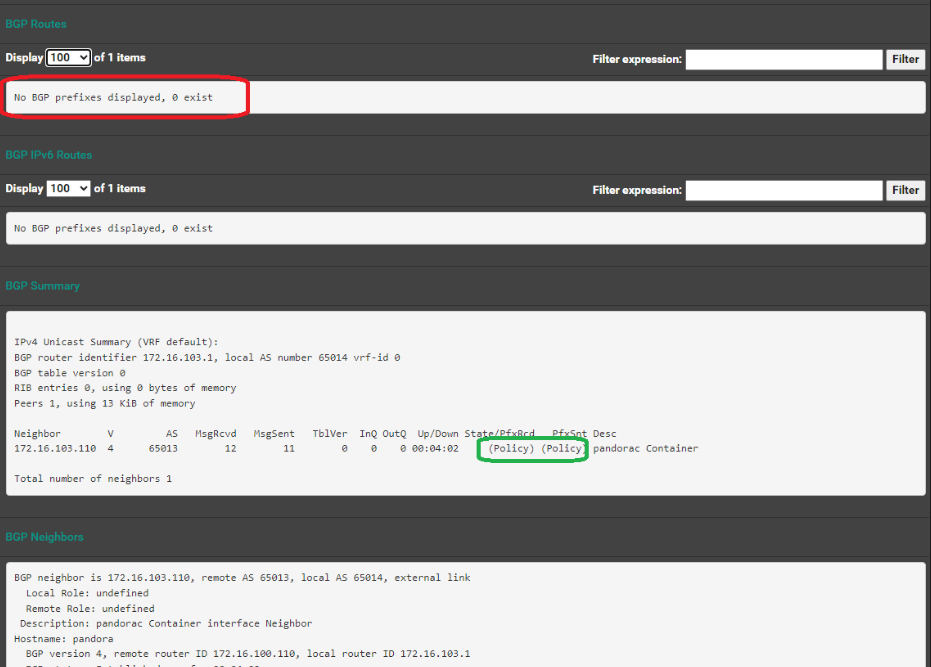

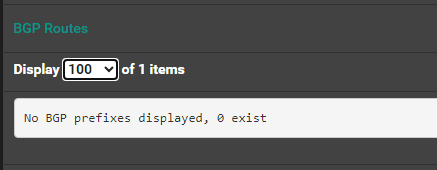

kube-system cilium-ingress LoadBalancer 10.43.36.151 172.16.103.248 80:32261/TCP,443:32232/TCP 5m50sNote Router BGP Status: Note state = Active Ex: in web ui of rt1 (pfsense router -> status -> frr -> BGP -> Neighbor)BGP neighbor is 172.16.100.110, remote AS 65013, local AS 65014, external link

Local Role: undefined

Remote Role: undefined

Description: pandorac Container interface Neighbor

Hostname: pandora

BGP version 4, remote router ID 172.16.100.110, local router ID 172.16.100.1

BGP state = Established, up for 00:01:12

Last read 00:00:12, Last write 00:00:12

Optional: Test that external routing to the example test website works. Ex: from a Windows host on 172.16.100.0/24:

PS C:\Users\Jerem> curl http://172.16.103.248

StatusCode : 200

StatusDescription : OK

Content : <!DOCTYPE html>

<html lang="en-US">

<head>

<meta charset="UTF-8" />

<meta name="viewport" content="width=device-width, initial-scale=1" />

<meta name='robots' content='max-image-preview:large' />

<t...

RawContent : HTTP/1.1 200 OK
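The same check can be run from a Linux host on 172.16.100.0/24. A sketch using standard `curl` options (`-s`, `-o`, `--max-time`, `-w '%{http_code}'`); `http_status` is a hypothetical helper name and the 5 second timeout is an arbitrary choice:

```shell
# Hypothetical helper: print only the HTTP status code returned by a URL.
http_status() {
    curl -s -o /dev/null --max-time 5 -w '%{http_code}' "$1"
}

# Usage: expect 200 when the route learned via BGP is working.
#   [ "$(http_status http://172.16.103.248)" = "200" ] && echo "ingress reachable"
```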

-

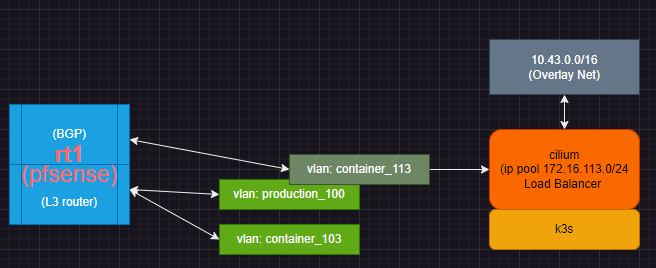

@penguinpages Thanks for sharing. I am getting confused / lost with all the IPs.

Is 172.16.100.1/24 your pfsense router ip? Is 172.16.100.110 a specific kubernetes controlplane or worker node? I am having a hard time getting the big picture.

-

Is 172.16.100.1/24 your pfsense router ip? --> Yes. The router is the default gateway (DGW) for the host, and that interface is also used for BGP communication.

Is 172.16.100.110 a specific kubernetes controlplane or worker node? --> Yes. It is the host that speaks BGP and runs the Cilium CNI, with the Cilium network 172.16.103.0/24 (IP pool) and the overlay network 10.43.0.0/16.

I am having a hard time getting the big picture. --> See the before/after diagram above.

Working design:

99821352-79e2-47f3-8a16-807ac924ad2a-image.png