BGP - K3S Kubernetes

-

Not sure what you mean.

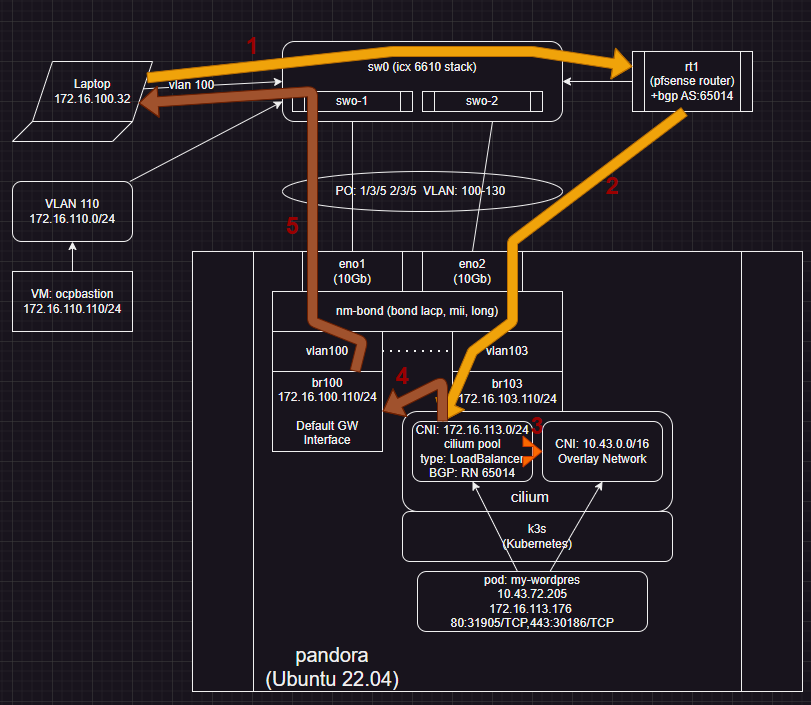

If my systems (Windows / Linux) are on 172.16.100.0/24 and know nothing about how to route to 172.16.113.0/24, they just use the default gateway 172.16.100.1, which is pfSense.

The router (pfSense) then consults its routing table and, based on BGP, knows the path to 172.16.113.0/24 is via 172.16.103.110.

    PS C:\Users\user> route -p delete 172.16.113.0 MASK 255.255.255.0 172.16.103.110 METRIC 1
     OK!
    PS C:\Users\user> route -p add 172.16.113.0 MASK 255.255.255.0 172.16.100.1 METRIC 1
     OK!
    PS C:\Users\user> curl 172.16.113.176 # --> timeout
    PS C:\Users\user> route -p delete 172.16.113.0 MASK 255.255.255.0 172.16.100.1 METRIC 1
     OK!
    PS C:\Users\user> route -p add 172.16.113.0 MASK 255.255.255.0 172.16.103.110 METRIC 1
     OK!
    PS C:\Users\user> curl 172.16.113.176

    StatusCode        : 200
    StatusDescription : OK
    Content           : <!DOCTYPE html>
                        <html lang="en-US">
                        <head>

-

OK how does the client know how to reach 172.16.103.110? That must also be via pfSense at 172.16.100.1 right?

-

Yes

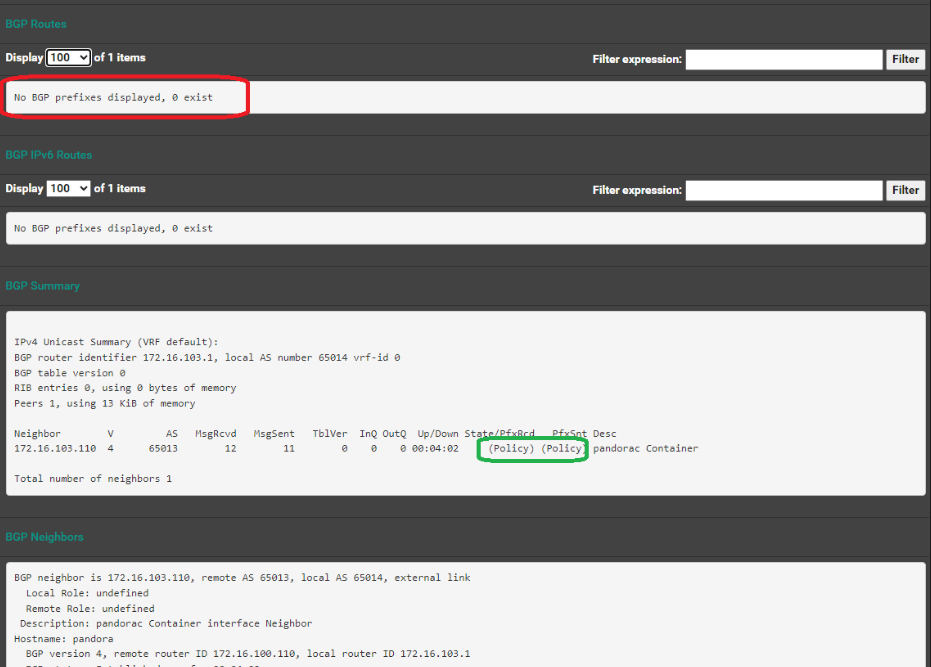

Route table from pfsense:

    Codes: K - kernel route, C - connected, S - static, R - RIP,
           O - OSPF, I - IS-IS, B - BGP, E - EIGRP, T - Table,
           v - VNC, V - VNC-Direct, A - Babel, f - OpenFabric,
           > - selected route, * - FIB route, q - queued, r - rejected, b - backup
           t - trapped, o - offload failure

    K>* 0.0.0.0/0 [0/0] via 108.234.144.1, igc0, 4d02h15m
    C>* 10.10.10.1/32 [0/1] is directly connected, lo0, 4d02h15m
    B>* 10.43.0.0/24 [20/0] via 172.16.103.110, igc1.103, weight 1, 1d17h17m
    C>* 108.234.144.0/22 [0/1] is directly connected, igc0, 4d02h15m
    C>* 172.16.100.0/24 [0/1] is directly connected, igc1.100, 1d17h42m
    C>* 172.16.101.0/24 [0/1] is directly connected, igc1.101, 1d17h42m
    C>* 172.16.102.0/24 [0/1] is directly connected, igc1.102, 1d17h42m
    S   172.16.103.0/24 [1/0] via 172.16.103.110 inactive, weight 1, 1d17h42m
    C>* 172.16.103.0/24 [0/1] is directly connected, igc1.103, 1d17h42m
    C>* 172.16.104.0/24 [0/1] is directly connected, ovpns1, 4d02h15m
    C>* 172.16.110.0/24 [0/1] is directly connected, igc1.110, 1d17h42m
    C>* 172.16.111.0/24 [0/1] is directly connected, igc1.111, 1d17h42m
    C>* 172.16.112.0/24 [0/1] is directly connected, igc1.112, 1d17h42m
    B>* 172.16.113.72/32 [20/0] via 172.16.103.110, igc1.103, weight 1, 1d17h17m
    B>* 172.16.113.176/32 [20/0] via 172.16.103.110, igc1.103, weight 1, 1d02h20m
    C>* 172.16.120.0/24 [0/1] is directly connected, igc1.120, 1d17h42m
    C>* 172.16.121.0/24 [0/1] is directly connected, igc1.121, 1d17h42m
    C>* 172.16.122.0/24 [0/1] is directly connected, igc1.122, 1d17h42m
    C>* 172.16.130.0/24 [0/1] is directly connected, igc1.130, 1d17h42m
    C>* 172.16.131.0/24 [0/1] is directly connected, igc1.131, 1d17h42m
    C>* 172.16.132.0/24 [0/1] is directly connected, igc1.132, 1d17h42m

-

So what did the states show when you try to open it without the static route on the client?

Looking at the pfSense routing table, I wonder if the inactive static route for 172.16.103.0/24, which duplicates the directly connected network, is causing a problem.
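When scanning a routing table like the one posted, it can help to pull out just the BGP-learned entries and their next hops. A minimal sketch, assuming FRR's `vtysh` is available from the pfSense shell and that the output keeps the `B>* prefix [distance/metric] via next-hop, ...` layout seen in this thread; the function name `bgp_routes` is made up for illustration:

```shell
# Print "prefix next-hop" for every selected BGP route read from stdin.
# Assumes FRR "show ip route" lines of the form:
#   B>* 172.16.113.176/32 [20/0] via 172.16.103.110, igc1.103, weight 1, 1d02h20m
bgp_routes() {
    awk '$1 == "B>*" && $4 == "via" {
        nh = $5
        sub(/,$/, "", nh)   # drop the trailing comma after the next hop
        print $2, nh
    }'
}

# Usage on the router (assumption: run from the pfSense shell):
#   vtysh -c 'show ip route' | bgp_routes
```

Every service prefix that prints with the same next hop confirms which node address pfSense will forward that traffic to.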

-

Could be...

But...

Why does it start working when I add the route on the client host?

And why can I reach the site from the hosting system itself, without adding a route?

-

I'd still be checking the states and/or running a ping with packet captures to see where that traffic is actually being sent.

-

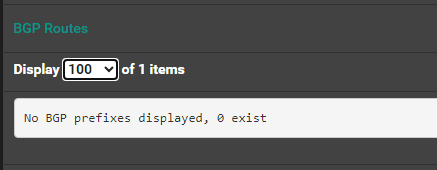

Just to close this out and also post what I learned.

Root cause: on a server with multiple interfaces, binding BGP and Cilium to an interface that is NOT the default-route interface will never work.

Ex:

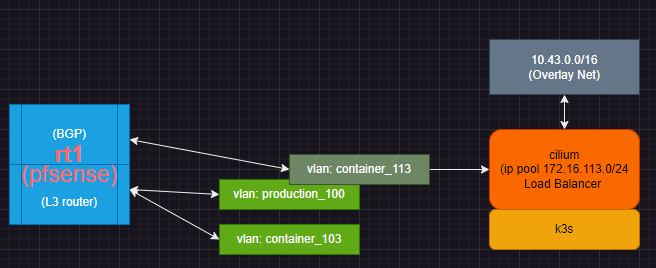

The idea was to have VLAN 103 for all containers, used by various K8s clusters. But Cilium returns traffic according to the underlying Linux routing, which follows the default gateway on 172.16.100.x. That confuses hosts waiting for packets to return from pfSense at 172.16.103.1.

Working design:

Change:

- Remove all L2/L3 subnet configuration for 172.16.103.0.

- Set up the CNI (Cilium) so that its IP pool is now 172.16.103.0/24.

- Route all BGP through the host interface that holds the default gateway (172.16.100.110), with the BGP neighbor defined as 172.16.100.1 (pfSense).

Now BGP traffic no longer takes weird packet paths.

How I found the root cause: watch the packet sessions on the host:

    tcpdump -i br103 -s 0 'tcp port http'

then

    tcpdump -i br100 -s 0 'tcp port http'

Then from the laptop:

    curl http://172.16.113.176

What I saw was incoming packets (10x, because the return failed) on both interfaces, which means the return traffic was going out a different interface.
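The same asymmetry can be cross-checked without a capture by asking the kernel which interface it would use to reach the client. A minimal sketch, assuming iproute2's `ip route get` on the Kubernetes host; the helper name `egress_dev` and the client address 172.16.100.50 are hypothetical:

```shell
# Extract the egress interface from one line of `ip route get` output (stdin).
egress_dev() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Usage on the host (172.16.100.50 stands in for the laptop's address):
#   ip route get 172.16.100.50 | egress_dev
# If this prints br100 while the requests arrive on br103, the replies
# leave through a different interface than the one they came in on.
```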

Thanks to those who helped by responding and posting. Hope this helps others not shave the same yak.

-

Nice catch.

-

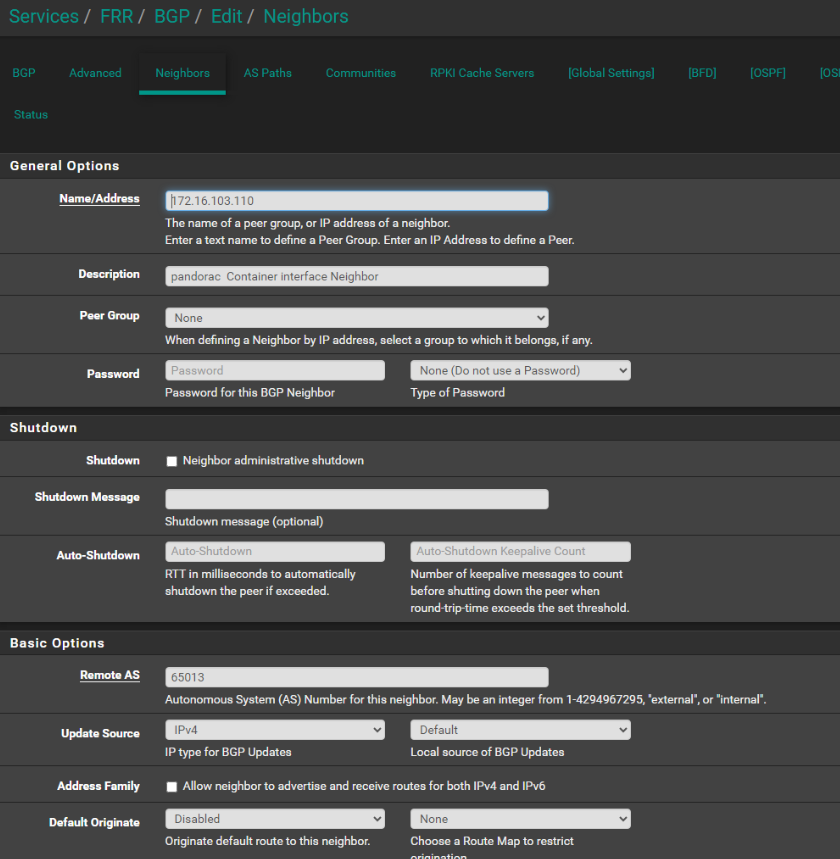

@penguinpages can you share your Cilium BGP peering policy? I'd like to see what it looks like. I am having the same problem. I use almost the same equipment you have: a pfSense doing BGP, a Brocade ICX7250 doing layer 3 routing (all my VLANs are set up here), and a server with 2 NICs. I can't connect to a test nginx demo I have set up on my Kubernetes cluster with Cilium.

-

@vacquah said in BGP - K3S Kubernetes:

cilium bgp peering policy?

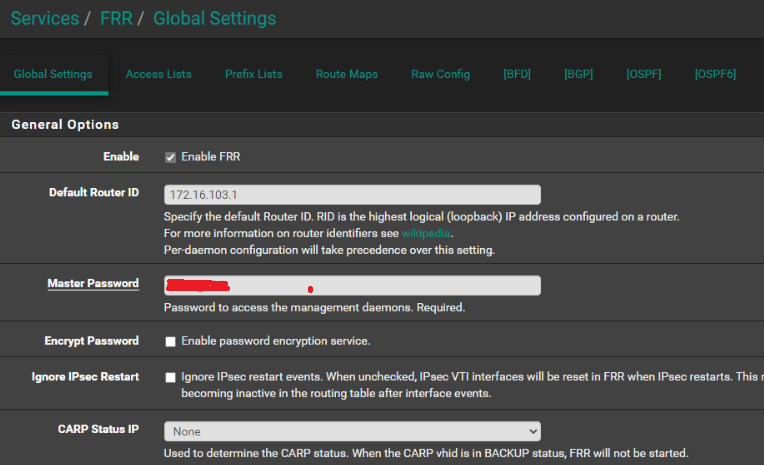

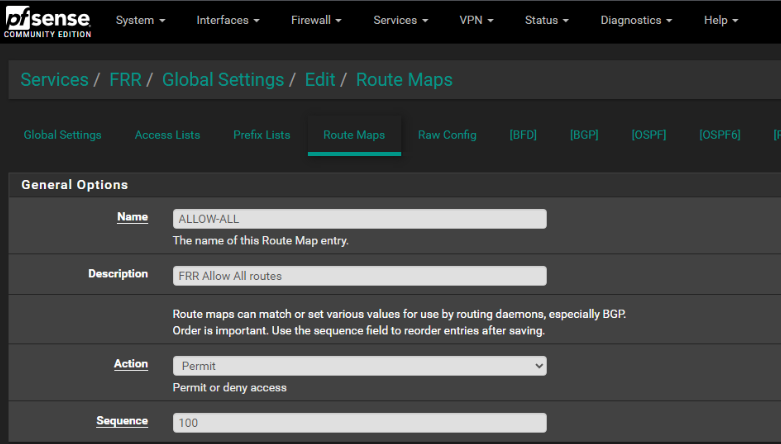

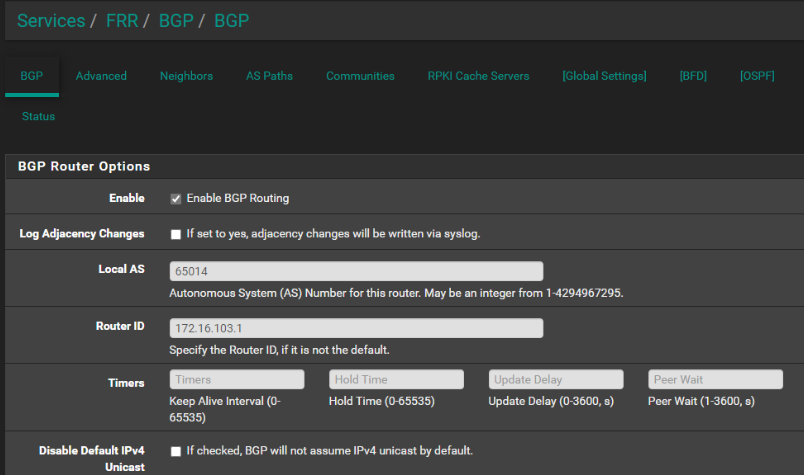

K3S Deployment

Install Cilium and K3S (see https://docs.k3s.io/cli/server):

    CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
    CLI_ARCH=amd64
    if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
    curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
    sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum
    sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
    rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
    curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC='--flannel-backend=none --disable-network-policy --disable=servicelb --disable=traefik --tls-san=172.16.100.110 --disable-kube-proxy --node-label bgp-policy=pandora' sh -
    export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
    echo "export KUBECONFIG=/etc/rancher/k3s/k3s.yaml" >> ~/.bashrc
    sudo -E cilium install --version 1.14.5 --set ipam.operator.clusterPoolIPv4PodCIDRList=10.43.0.0/16 --set bgpControlPlane.enabled=true --set k8sServiceHost=172.16.100.110 --set k8sServicePort=6443 --set kubeProxyReplacement=true --set ingressController.enabled=true --set ingressController.loadbalancerMode=dedicated
    vi /etc/rancher/k3s/k3s.yaml

In the kubeconfig, replace 127.0.0.1 with the host IP 172.16.100.110.
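The kubeconfig edit can also be done non-interactively. A minimal sketch, assuming the file contains the `server: https://127.0.0.1:6443` line that K3S writes by default; the function name `set_kubeconfig_server` is made up for illustration:

```shell
# Rewrite the API server address in a kubeconfig from loopback to the node IP.
# A backup of the original file is kept next to it with a .bak suffix.
set_kubeconfig_server() {
    file="$1"
    host_ip="$2"
    sed -i.bak "s#https://127\.0\.0\.1:#https://${host_ip}:#" "$file"
}

# Usage (as root; path and IP as used in this post):
#   set_kubeconfig_server /etc/rancher/k3s/k3s.yaml 172.16.100.110
```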

    sudo -E cilium status --wait
    sudo cilium hubble enable # need to run as root.. sudo profile issue
    sudo -E cilium connectivity test
    sudo -E kubectl get svc --all-namespaces
    kubectl get services -A
    sudo cilium hubble enable

Then apply the policy:

    sudo su - admin
    cd /media/md0/containers/
    vi cilium_policy.yaml

    ######################
    apiVersion: "cilium.io/v2alpha1"
    kind: CiliumBGPPeeringPolicy
    metadata:
      name: rt1
    spec:
      nodeSelector:
        matchLabels:
          bgp-policy: pandora
      virtualRouters:
      - localASN: 65013
        exportPodCIDR: true
        neighbors:
        - peerAddress: 172.16.100.1/24
          peerASN: 65014
          eBGPMultihopTTL: 10
          connectRetryTimeSeconds: 120
          holdTimeSeconds: 90
          keepAliveTimeSeconds: 30
          gracefulRestart:
            enabled: true
            restartTimeSeconds: 120
        serviceSelector:
          matchExpressions:
          - {key: somekey, operator: NotIn, values: ['never-used-value']}
    ---
    apiVersion: "cilium.io/v2alpha1"
    kind: CiliumLoadBalancerIPPool
    metadata:
      name: "pandorac"
    spec:
      cidrs:
      - cidr: "172.16.103.0/24"
    ##########

    root@pandora:/media/md0/containers# kubectl apply -f cilium_policy.yaml
    root@pandora:/media/md0/containers# kubectl get ippools -A
    NAME       DISABLED   CONFLICTING   IPS AVAILABLE   AGE
    pandorac   false      False         253             4s
    root@pandora:~# kubectl get svc -A
    NAMESPACE     NAME                             TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)                      AGE
    default       kubernetes                       ClusterIP      10.43.0.1      <none>           443/TCP                      6m
    kube-system   kube-dns                         ClusterIP      10.43.0.10     <none>           53/UDP,53/TCP,9153/TCP       5m54s
    kube-system   metrics-server                   ClusterIP      10.43.76.245   <none>           443/TCP                      5m53s
    kube-system   hubble-peer                      ClusterIP      10.43.10.37    <none>           443/TCP                      5m50s
    kube-system   hubble-relay                     ClusterIP      10.43.124.90   <none>           80/TCP                       2m53s
    cilium-test   echo-same-node                   NodePort       10.43.151.50   <none>           8080:30525/TCP               2m3s
    cilium-test   cilium-ingress-ingress-service   NodePort       10.43.4.143    <none>           80:31000/TCP,443:31001/TCP   2m3s
    kube-system   cilium-ingress                   LoadBalancer   10.43.36.151   172.16.103.248   80:32261/TCP,443:32232/TCP   5m50s

Check the router's BGP status and note that the session is up (state = Established in the output below), e.g. in the web UI of rt1 (pfSense: Status -> FRR -> BGP -> Neighbor):

    BGP neighbor is 172.16.100.110, remote AS 65013, local AS 65014, external link
    Local Role: undefined
    Remote Role: undefined
    Description: pandorac Container interface Neighbor
    Hostname: pandora
    BGP version 4, remote router ID 172.16.100.110, local router ID 172.16.100.1
    BGP state = Established, up for 00:01:12
    Last read 00:00:12, Last write 00:00:12

Optional: test that external routing to the example test website works, e.g. from a Windows host on 172.16.100.0/24:

    PS C:\Users\Jerem> curl http://172.16.103.248

    StatusCode        : 200
    StatusDescription : OK
    Content           : <!DOCTYPE html>
                        <html lang="en-US">
                        <head>
                        <meta charset="UTF-8" />
                        <meta name="viewport" content="width=device-width, initial-scale=1" />
                        <meta name='robots' content='max-image-preview:large' />
                        <t...
    RawContent        : HTTP/1.1 200 OK

-

@penguinpages Thanks for sharing. I am getting confused / lost with all the IPs.

Is 172.16.100.1/24 your pfSense router IP? Is 172.16.100.110 a specific Kubernetes control plane or worker node? I am having a hard time getting the big picture.

-

Is 172.16.100.1/24 your pfsense router ip? --> Yes. It is the router address that serves as the host's default gateway and as the interface for BGP communication.

Is 172.16.100.110 a specific kubernetes controlplane or worker node? --> Yes. It is the host that speaks BGP and hosts the CNI: the Cilium network 172.16.103.0/24 (IP pool), with the overlay network 10.43.0.0/16.

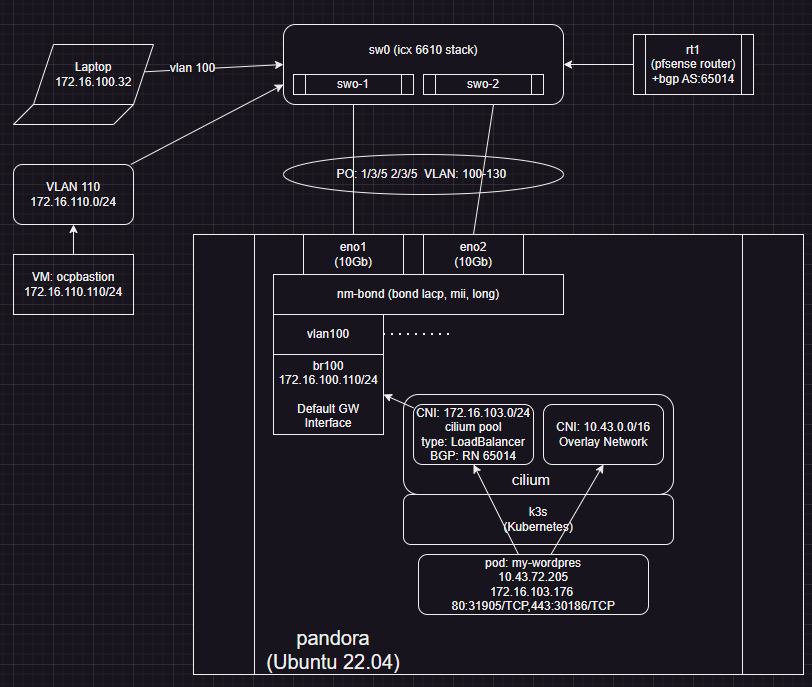

I am having a hard time getting the big picture. --> See the before/after diagram above.

Working design:

![Working design diagram](99821352-79e2-47f3-8a16-807ac924ad2a-image.png)