pfSense not enabling port

-

after the above.

what i have noticed though, my port on the switch has gone red, complaining of packet loss etc.

wonder if we need to go back to auto negotiate.

ip a

i did have to bring the interfaces up, both the vlan30 that sits on vmbr0 and vmbr40 was down.

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host noprefixroute
       valid_lft forever preferred_lft forever
2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
3: enp3s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:02:a3:72 brd ff:ff:ff:ff:ff:ff
4: enp5s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:02:a3:73 brd ff:ff:ff:ff:ff:ff
5: enp6s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:02:a3:74 brd ff:ff:ff:ff:ff:ff
8: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
    inet 172.16.10.50/24 scope global vmbr0
       valid_lft forever preferred_lft forever
    inet6 fe80::aab8:e0ff:fe02:a371/64 scope link
       valid_lft forever preferred_lft forever
9: vmbr40: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether a8:b8:e0:05:f0:91 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::aab8:e0ff:fe05:f091/64 scope link
       valid_lft forever preferred_lft forever
10: tap100i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i0 state UNKNOWN group default qlen 1000
    link/ether c6:2d:5a:7a:41:d7 brd ff:ff:ff:ff:ff:ff
11: fwbr100i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether da:0c:52:e1:39:89 brd ff:ff:ff:ff:ff:ff
12: fwpr100p0@fwln100i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
    link/ether 76:c4:56:d3:6a:93 brd ff:ff:ff:ff:ff:ff
13: fwln100i0@fwpr100p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i0 state UP group default qlen 1000
    link/ether da:0c:52:e1:39:89 brd ff:ff:ff:ff:ff:ff
14: tap100i1: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i1 state UNKNOWN group default qlen 1000
    link/ether 76:c9:86:22:cc:30 brd ff:ff:ff:ff:ff:ff
15: fwbr100i1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 52:9a:1c:4d:76:a6 brd ff:ff:ff:ff:ff:ff
16: fwpr100p1@fwln100i1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
    link/ether 26:2d:af:5a:9a:b4 brd ff:ff:ff:ff:ff:ff
17: fwln100i1@fwpr100p1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i1 state UP group default qlen 1000
    link/ether 52:9a:1c:4d:76:a6 brd ff:ff:ff:ff:ff:ff
18: tap100i2: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i2 state UNKNOWN group default qlen 1000
    link/ether c2:9f:1f:4e:c6:2e brd ff:ff:ff:ff:ff:ff
19: fwbr100i2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 7a:a8:67:a9:29:14 brd ff:ff:ff:ff:ff:ff
20: fwpr100p2@fwln100i2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr40 state UP group default qlen 1000
    link/ether 72:43:cc:4b:b3:40 brd ff:ff:ff:ff:ff:ff
21: fwln100i2@fwpr100p2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i2 state UP group default qlen 1000
    link/ether 7a:a8:67:a9:29:14 brd ff:ff:ff:ff:ff:ff
22: vlan30@vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::aab8:e0ff:fe02:a371/64 scope link
       valid_lft forever preferred_lft forever
23: enp4s0f0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:05:f0:91 brd ff:ff:ff:ff:ff:ff
24: enp4s0f1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:05:f0:92 brd ff:ff:ff:ff:ff:ff

dmesg | grep ixgbe

[19185.217533] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[19185.321424] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[19185.529459] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[19185.633445] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[19185.862491] ixgbe 0000:04:00.1: complete
[19185.862751] ixgbe 0000:04:00.0: removed PHC on enp4s0f0
[19185.922172] ixgbe 0000:04:00.0 enp4s0f0 (unregistering): left allmulticast mode
[19185.922174] ixgbe 0000:04:00.0 enp4s0f0 (unregistering): left promiscuous mode
[19185.984591] ixgbe 0000:04:00.0: complete
[19186.014865] ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver
[19186.014867] ixgbe: Copyright (c) 1999-2016 Intel Corporation.
[19186.183424] ixgbe 0000:04:00.0: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[19186.183731] ixgbe 0000:04:00.0: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[19186.183816] ixgbe 0000:04:00.0: MAC: 2, PHY: 19, SFP+: 5, PBA No: FFFFFF-0FF
[19186.183817] ixgbe 0000:04:00.0: a8:b8:e0:05:f0:91
[19186.184842] ixgbe 0000:04:00.0: Intel(R) 10 Gigabit Network Connection
[19186.195416] ixgbe 0000:04:00.0 enp4s0f0: renamed from eth0
[19187.336359] ixgbe 0000:04:00.1: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[19187.336664] ixgbe 0000:04:00.1: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[19187.336749] ixgbe 0000:04:00.1: MAC: 2, PHY: 1, PBA No: FFFFFF-0FF
[19187.336750] ixgbe 0000:04:00.1: a8:b8:e0:05:f0:92
[19187.337749] ixgbe 0000:04:00.1: Intel(R) 10 Gigabit Network Connection
[19187.353403] ixgbe 0000:04:00.1 enp4s0f1: renamed from eth0

-

on my switch side i run

172.16.10.0/24 as my primary on the physical port

then have the following vlans defined atm

(running on the same above physical port)

vlan20 - 172.16.20.0/24 -> all wifi devices

vlan30 - 172.16.30.0/24 -> will be "vmotion/k8s cluster interconnect"

vlan40 - 172.16.40.0/24 -> will be storage/io to be used by all to access the TrueNAS. 10GbE based on dedicated port.

i've configured vmbr0 and vmbr40.

i ended up configuring vlan30 as a vlan on vmbr0.

G

-

@georgelza Did you unplug the module from the SFP+ port on the Proxmox machine? What happens if you plug it in again, only on the Proxmox side, and leave the switch side hanging? Anything new that shows up from dmesg?

-

did not touch the physical side.

i've since rechecked the switch, reset it to manual and redefined it as 10GbE FDX

re-issued the ethtool command on the pmox level.

G

-

ok, got it back active on the switch.

noticed that in pmox under network it was active=no, switched it to autostart and it seemed to have figured out to start itself.

G

root@ubuntu-01:~# ifconfig -a
docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500
        inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255
        ether 02:42:cb:d9:fb:26 txqueuelen 0 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 0 bytes 0 (0.0 B)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
ens18: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet 172.16.10.51 netmask 255.255.255.0 broadcast 172.16.10.255
        inet6 fe80::be24:11ff:fec0:e81e prefixlen 64 scopeid 0x20<link>
        ether bc:24:11:c0:e8:1e txqueuelen 1000 (Ethernet)
        RX packets 124398 bytes 94163032 (94.1 MB)
        RX errors 0 dropped 64 overruns 0 frame 0
        TX packets 22096 bytes 2425895 (2.4 MB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
ens19: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet6 fe80::be24:11ff:fe81:2ccb prefixlen 64 scopeid 0x20<link>
        ether bc:24:11:81:2c:cb txqueuelen 1000 (Ethernet)
        RX packets 22 bytes 1566 (1.5 KB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 15 bytes 1146 (1.1 KB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
ens20: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500
        inet6 fe80::be24:11ff:fe1e:20ac prefixlen 64 scopeid 0x20<link>
        ether bc:24:11:1e:20:ac txqueuelen 1000 (Ethernet)
        RX packets 0 bytes 0 (0.0 B)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 15 bytes 1146 (1.1 KB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536
        inet 127.0.0.1 netmask 255.0.0.0
        inet6 ::1 prefixlen 128 scopeid 0x10<host>
        loop txqueuelen 1000 (Local Loopback)
        RX packets 128 bytes 11444 (11.4 KB)
        RX errors 0 dropped 0 overruns 0 frame 0
        TX packets 128 bytes 11444 (11.4 KB)
        TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
root@ubuntu-01:~#

ip a

root@ubuntu-01:~# ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether bc:24:11:c0:e8:1e brd ff:ff:ff:ff:ff:ff
    inet 172.16.10.51/24 metric 100 brd 172.16.10.255 scope global dynamic ens18
       valid_lft 5917sec preferred_lft 5917sec
    inet6 fe80::be24:11ff:fec0:e81e/64 scope link
       valid_lft forever preferred_lft forever
3: ens19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether bc:24:11:81:2c:cb brd ff:ff:ff:ff:ff:ff
    inet6 fe80::be24:11ff:fe81:2ccb/64 scope link
       valid_lft forever preferred_lft forever
4: ens20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether bc:24:11:1e:20:ac brd ff:ff:ff:ff:ff:ff
    inet6 fe80::be24:11ff:fe1e:20ac/64 scope link
       valid_lft forever preferred_lft forever
5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:cb:d9:fb:26 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
root@ubuntu-01:~#

dmesg, flapping again

[23193.684199] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23196.284232] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23196.492219] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23196.700248] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23196.804186] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23197.012247] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23197.116191] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23197.325189] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23197.428371] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23199.924253] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23200.028227] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23200.756251] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23200.860223] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23200.964189] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23201.068135] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23201.172183] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23201.276115] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23201.484251] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23201.588222] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23203.668163] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23203.772131] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23204.916258] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23205.020120] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23205.228162] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23205.332137] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23205.540156] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23205.644121] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23205.748154] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23205.956212] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[23206.060156] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[23206.268107] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down

then issued the ethtool command, which made my switch port go red.

then dmesg changed to

[23352.570211] ixgbe 0000:04:00.0: complete
[23352.608227] ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver
[23352.608229] ixgbe: Copyright (c) 1999-2016 Intel Corporation.
[23352.776181] ixgbe 0000:04:00.0: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[23352.776488] ixgbe 0000:04:00.0: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[23352.776573] ixgbe 0000:04:00.0: MAC: 2, PHY: 19, SFP+: 5, PBA No: FFFFFF-0FF
[23352.776574] ixgbe 0000:04:00.0: a8:b8:e0:05:f0:91
[23352.777559] ixgbe 0000:04:00.0: Intel(R) 10 Gigabit Network Connection
[23352.785028] ixgbe 0000:04:00.0 enp4s0f0: renamed from eth0
[23353.930084] ixgbe 0000:04:00.1: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[23353.930388] ixgbe 0000:04:00.1: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[23353.930472] ixgbe 0000:04:00.1: MAC: 2, PHY: 1, PBA No: FFFFFF-0FF
[23353.930473] ixgbe 0000:04:00.1: a8:b8:e0:05:f0:92
[23353.931466] ixgbe 0000:04:00.1: Intel(R) 10 Gigabit Network Connection
[23353.947017] ixgbe 0000:04:00.1 enp4s0f1: renamed from eth0
[23367.436987] ixgbe 0000:04:00.1: complete
[23367.446889] ixgbe 0000:04:00.0: complete
[23367.483630] ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver
[23367.483632] ixgbe: Copyright (c) 1999-2016 Intel Corporation.
[23367.652922] ixgbe 0000:04:00.0: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[23367.653228] ixgbe 0000:04:00.0: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[23367.653313] ixgbe 0000:04:00.0: MAC: 2, PHY: 19, SFP+: 5, PBA No: FFFFFF-0FF
[23367.653314] ixgbe 0000:04:00.0: a8:b8:e0:05:f0:91
[23367.654319] ixgbe 0000:04:00.0: Intel(R) 10 Gigabit Network Connection
[23367.662770] ixgbe 0000:04:00.0 enp4s0f0: renamed from eth0
[23368.800920] ixgbe 0000:04:00.1: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[23368.801226] ixgbe 0000:04:00.1: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[23368.801311] ixgbe 0000:04:00.1: MAC: 2, PHY: 1, PBA No: FFFFFF-0FF
[23368.801311] ixgbe 0000:04:00.1: a8:b8:e0:05:f0:92
[23368.802549] ixgbe 0000:04:00.1: Intel(R) 10 Gigabit Network Connection
[23368.812911] ixgbe 0000:04:00.1 enp4s0f1: renamed from eth0

-

thinking need to figure out how to address the port...

tried

options ixgbe allow_unsupported_sfp=1

and

options ixgbe allow_unsupported_sfp=1.1

and

options ixgbe allow_unsupported_sfp=0.1

and

options ixgbe allow_unsupported_sfp=0.0

-

i'm starting to question if the 10GbE is actually up...

i'm thinking when i ping the gw from pmox or the ubuntu-01 image it actually goes out the default gw over the vmbr0 bridge, and not out via the SFP+.

for some reason i'm also not getting an ip on vlan30 on the guest vm; vlan30 runs over vmbr0.

well let me add... if i make some changes on the pmox side it does break the switch side so the physical link is there, and there is some level of comm, or is that disagreement.

G

-

note to self, when de-configuring something to check something... don't reconfigure your main/only interface... especially if the machines are 10 feet off the ground on a shelf with no KVM in place. ;)

can confirm that the green and orange lights on the SFP+ port are having a party, while the matching port on the switch is just flickering red.

G

-

@Gblenn said in pfSense not enabling port:

ixgbe allow_unsupported_sfp=1

The allow_unsupported_sfp option can only be 1 or 0; it's on or off. It applies to all ixgbe devices attached to the host.
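As a rough sketch of the point above, a small helper could refuse anything other than 0 or 1 before writing the option line. The function name `write_ixgbe_sfp_option` is hypothetical, and the `/etc/modprobe.d/ixgbe.conf` path in the usage comment is only the conventional location, assumed here rather than taken from the thread.

```shell
#!/bin/sh
# Sketch: write the ixgbe module option, accepting only 0 or 1.
# write_ixgbe_sfp_option is a hypothetical helper; the target file is passed
# in as an argument so it can be tried on a scratch file first.
write_ixgbe_sfp_option() {
    value="$1"
    conf_file="$2"
    case "$value" in
        0|1) ;;
        *)
            echo "allow_unsupported_sfp must be 0 or 1, got: $value" >&2
            return 1
            ;;
    esac
    # One option line, in the same form as the ones tried earlier in the thread.
    printf 'options ixgbe allow_unsupported_sfp=%s\n' "$value" > "$conf_file"
}

# Example (as root, path is an assumption):
#   write_ixgbe_sfp_option 1 /etc/modprobe.d/ixgbe.conf
#   rmmod ixgbe && modprobe ixgbe    # reload the driver so the option is read
```

Values such as 1.1 or 0.1 are rejected and the file is left untouched. If the driver is loaded from the initramfs, the image may need rebuilding as well; that depends on the distribution, so treat it as something to check rather than a given.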

Nothing posted here so far looks like it's linking correctly to me.

Did you ever try just linking the two SFP+ ports directly to see if it would link up and be stable?
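To put a number on the flapping in the dmesg output posted so far, something like the following could count the "NIC Link is Down" events for one interface. This is only a sketch: `count_link_down` is a made-up helper name, and it assumes the ixgbe message format stays as shown in the logs above.

```shell
#!/bin/sh
# Sketch: count link-down events for one interface in kernel log text on stdin.
# count_link_down is a hypothetical helper name.
count_link_down() {
    iface="$1"
    # grep -c prints the number of matching lines; it exits non-zero when the
    # count is 0, so "|| true" keeps the function's exit status clean.
    grep -c " ${iface}: NIC Link is Down" || true
}

# Example:
#   dmesg | count_link_down enp4s0f0
```

Running it before and after a change (different transceiver, DAC, negotiation setting) gives a quick comparison; a stable link should stop adding to the count.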

-

ok... my assumption confirmed...

even though there is light travelling down the fiber link it's not working.

the pings were going out the default gw.

i disabled the vlan0/172.16.10.51 interface on ubuntu-01 and tried to ping out on all networks, all failed, the second i re-enabled it all worked.
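A less disruptive way to check the same thing, sketched below, is to ask the kernel which interface it would use for a destination instead of disabling the main interface. `ip route get` is a standard iproute2 command; `route_dev` is a hypothetical helper that just pulls the `dev` field out of its output, and the interface name in the `ping -I` comment is an assumption about which guest NIC carries vlan40.

```shell
#!/bin/sh
# Sketch: print the outgoing interface from "ip route get" output on stdin.
# route_dev is a hypothetical helper name.
route_dev() {
    awk '{
        for (i = 1; i < NF; i++) {
            if ($i == "dev") { print $(i + 1); exit }
        }
    }'
}

# Example:
#   ip route get 172.16.40.1 | route_dev
# To force a test out of a specific interface (ens20 is an assumption here):
#   ping -c 3 -I ens20 172.16.40.1
```

If the first command prints the 172.16.10.x interface for a 172.16.40.x address, the traffic is being routed via the default gateway rather than leaving on the 10GbE path.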

G

-

@stephenw10 said in pfSense not enabling port:

Did you ever try just linking the two SFP+ ports directly to see if it would link up and be stable?

explain.

ok, corrected the modprobe config, set to =1.

executed the reload.

below is the output, port on switch has gone red now.

wondering if it might make sense to get the port and the interface back on auto negotiate.

G

[31585.664382] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31585.769298] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31585.873330] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31585.888669] ixgbe 0000:04:00.1: complete
[31585.889282] ixgbe 0000:04:00.0: removed PHC on enp4s0f0
[31585.949971] ixgbe 0000:04:00.0 enp4s0f0 (unregistering): left allmulticast mode
[31585.949974] ixgbe 0000:04:00.0 enp4s0f0 (unregistering): left promiscuous mode
[31586.012658] ixgbe 0000:04:00.0: complete
[31586.041780] ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver
[31586.041782] ixgbe: Copyright (c) 1999-2016 Intel Corporation.
[31586.217345] ixgbe 0000:04:00.0: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[31586.217650] ixgbe 0000:04:00.0: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[31586.217734] ixgbe 0000:04:00.0: MAC: 2, PHY: 19, SFP+: 5, PBA No: FFFFFF-0FF
[31586.217735] ixgbe 0000:04:00.0: a8:b8:e0:05:f0:91
[31586.218736] ixgbe 0000:04:00.0: Intel(R) 10 Gigabit Network Connection
[31586.234395] ixgbe 0000:04:00.0 enp4s0f0: renamed from eth0
[31587.366524] ixgbe 0000:04:00.1: Multiqueue Enabled: Rx Queue count = 12, Tx Queue count = 12 XDP Queue count = 0
[31587.366829] ixgbe 0000:04:00.1: 16.000 Gb/s available PCIe bandwidth, limited by 5.0 GT/s PCIe x4 link at 0000:00:1c.4 (capable of 32.000 Gb/s with 5.0 GT/s PCIe x8 link)
[31587.366913] ixgbe 0000:04:00.1: MAC: 2, PHY: 1, PBA No: FFFFFF-0FF
[31587.366914] ixgbe 0000:04:00.1: a8:b8:e0:05:f0:92
[31587.367921] ixgbe 0000:04:00.1: Intel(R) 10 Gigabit Network Connection
[31587.378331] ixgbe 0000:04:00.1 enp4s0f1: renamed from eth0

-

:(

disabled auto negotiate on interface,

did an ifreload -a

dmesg results in flapping again. port on switch has gone from red to grey/no errors.

[31747.031303] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31747.135279] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31748.071313] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31748.279274] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31749.007315] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31749.111264] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31751.815311] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31751.919316] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31752.439315] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31752.543250] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31752.751293] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31752.855259] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31753.167301] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31753.271254] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31753.895300] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31753.999229] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31754.935184] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31755.039143] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31756.287172] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31756.391041] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31758.055259] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31758.159230] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31761.591166] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31761.695076] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31761.903216] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31762.007245] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31762.215038] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31762.319061] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31762.943117] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31763.047212] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31764.503186] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31764.607154] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31764.919363] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31765.023138] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down
[31765.127138] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX
[31765.231280] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Down

-

just tried something, disabled the vmbr40 bridge, by removing the physical device.

configured a ip directly onto physical device.

can't ping the 172.16.40.1 gw.

it's saying no_carrier when i do a

ip a

G

-

@georgelza said in pfSense not enabling port:

explain.

I mean just link the two SFP ports on the Topton together to see if it will link without flapping. You obviously won't be able to pass any traffic but if it shows a stable link on both ports in that situation it's probably some low level issue between the ix ports and the switch.

Though thinking more you could set that up in proxmox so you could pass traffic between them by having them on separate bridges.

-

@stephenw10 will try tomorrow.

received a package about 30min ago, haven't opened it, but think it could be the DAC cable and the 2nd Transceiver.

G

-

Ran the following on the pfSense host:

pciconf -lcv ix0
ix0@pci0:4:0:0: class=0x020000 rev=0x01 hdr=0x00 vendor=0x8086 device=0x10fb subvendor=0xffff subdevice=0xffff
    vendor = 'Intel Corporation'
    device = '82599ES 10-Gigabit SFI/SFP+ Network Connection'
    class = network
    subclass = ethernet
    cap 01[40] = powerspec 3 supports D0 D3 current D0
    cap 05[50] = MSI supports 1 message, 64 bit, vector masks
    cap 11[70] = MSI-X supports 64 messages, enabled
                 Table in map 0x20[0x0], PBA in map 0x20[0x2000]
    cap 10[a0] = PCI-Express 2 endpoint max data 256(512) FLR RO NS
                 max read 512
                 link x4(x8) speed 5.0(5.0) ASPM disabled(L0s)
    cap 03[e0] = VPD
    ecap 0001[100] = AER 1 0 fatal 0 non-fatal 1 corrected
    ecap 0003[140] = Serial 1 a8b8e0ffff05ef51
    ecap 000e[150] = ARI 1
    ecap 0010[160] = SR-IOV 1 IOV disabled, Memory Space disabled, ARI disabled
                     0 VFs configured out of 64 supported
                     First VF RID Offset 0x0180, VF RID Stride 0x0002
                     VF Device ID 0x10ed
                     Page Sizes: 4096 (enabled), 8192, 65536, 262144, 1048576, 4194304

-

sweeeeeet... so ye that delivery was 1 x DAC, 1 x other brand SFP+ Transceiver.

plugged the DAC in between switch and pfsense SFP+ port 0

plugged the new Transceiver into pmox SFP+ port0

both started flashing...

go onto unifi network controller, both SFP+ ports immediately changed colour to hey we got something we recognise, vs grey or red.

in pmox console,

dmesg =>

[78378.314597] ixgbe 0000:04:00.0 enp4s0f0: detected SFP+: 5
[78433.681346] ixgbe 0000:04:00.0 enp4s0f0: NIC Link is Up 10 Gbps, Flow Control: RX/TX

and ip a

ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host noprefixroute
       valid_lft forever preferred_lft forever
2: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000
    link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
3: enp3s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:02:a3:72 brd ff:ff:ff:ff:ff:ff
4: enp5s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:02:a3:73 brd ff:ff:ff:ff:ff:ff
5: enp6s0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:02:a3:74 brd ff:ff:ff:ff:ff:ff
8: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether a8:b8:e0:02:a3:71 brd ff:ff:ff:ff:ff:ff
    inet 172.16.10.50/24 scope global vmbr0
       valid_lft forever preferred_lft forever
    inet6 fe80::aab8:e0ff:fe02:a371/64 scope link
       valid_lft forever preferred_lft forever
9: vmbr40: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether a8:b8:e0:05:f0:91 brd ff:ff:ff:ff:ff:ff
    inet6 fe80::aab8:e0ff:fe05:f091/64 scope link
       valid_lft forever preferred_lft forever
10: tap100i0: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i0 state UNKNOWN group default qlen 1000
    link/ether c6:2d:5a:7a:41:d7 brd ff:ff:ff:ff:ff:ff
11: fwbr100i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether da:0c:52:e1:39:89 brd ff:ff:ff:ff:ff:ff
12: fwpr100p0@fwln100i0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
    link/ether 76:c4:56:d3:6a:93 brd ff:ff:ff:ff:ff:ff
13: fwln100i0@fwpr100p0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i0 state UP group default qlen 1000
    link/ether da:0c:52:e1:39:89 brd ff:ff:ff:ff:ff:ff
14: tap100i1: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i1 state UNKNOWN group default qlen 1000
    link/ether 76:c9:86:22:cc:30 brd ff:ff:ff:ff:ff:ff
15: fwbr100i1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 52:9a:1c:4d:76:a6 brd ff:ff:ff:ff:ff:ff
16: fwpr100p1@fwln100i1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr0 state UP group default qlen 1000
    link/ether 26:2d:af:5a:9a:b4 brd ff:ff:ff:ff:ff:ff
17: fwln100i1@fwpr100p1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i1 state UP group default qlen 1000
    link/ether 52:9a:1c:4d:76:a6 brd ff:ff:ff:ff:ff:ff
18: tap100i2: <BROADCAST,MULTICAST,PROMISC,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast master fwbr100i2 state UNKNOWN group default qlen 1000
    link/ether c2:9f:1f:4e:c6:2e brd ff:ff:ff:ff:ff:ff
19: fwbr100i2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 7a:a8:67:a9:29:14 brd ff:ff:ff:ff:ff:ff
20: fwpr100p2@fwln100i2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master vmbr40 state UP group default qlen 1000
    link/ether 72:43:cc:4b:b3:40 brd ff:ff:ff:ff:ff:ff
21: fwln100i2@fwpr100p2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master fwbr100i2 state UP group default qlen 1000
    link/ether 7a:a8:67:a9:29:14 brd ff:ff:ff:ff:ff:ff
39: enp4s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr40 state UP group default qlen 1000
    link/ether a8:b8:e0:05:f0:91 brd ff:ff:ff:ff:ff:ff
40: enp4s0f1: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000
    link/ether a8:b8:e0:05:f0:92 brd ff:ff:ff:ff:ff:ff

now to reconfigure vlan40 from the igc0.40 onto ix0

ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens18: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether bc:24:11:c0:e8:1e brd ff:ff:ff:ff:ff:ff
    inet 172.16.10.51/24 metric 100 brd 172.16.10.255 scope global dynamic ens18
       valid_lft 4206sec preferred_lft 4206sec
    inet6 fe80::be24:11ff:fec0:e81e/64 scope link
       valid_lft forever preferred_lft forever
3: ens19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether bc:24:11:81:2c:cb brd ff:ff:ff:ff:ff:ff
    inet6 fe80::be24:11ff:fe81:2ccb/64 scope link
       valid_lft forever preferred_lft forever
4: ens20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether bc:24:11:1e:20:ac brd ff:ff:ff:ff:ff:ff
    inet6 fe80::be24:11ff:fe1e:20ac/64 scope link
       valid_lft forever preferred_lft forever
5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
    link/ether 02:42:cb:d9:fb:26 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever

happiness, making progress in right direction...

i configured my pfSense console to be accessible via 2nd address on 172.16.40.1. reconfigured that via primary 172.16.10.1 interface to now be serviced/routed via ix0, then connected to console via new 2nd, working so have a back door ;)

connected via back door and went and reconfigured vlan0, vlan20, vlan30 to now be routed via ix0 also. hit refresh on my browser which access console via 172.16.10.1 and did same for 172.16.40.1, still accessible.

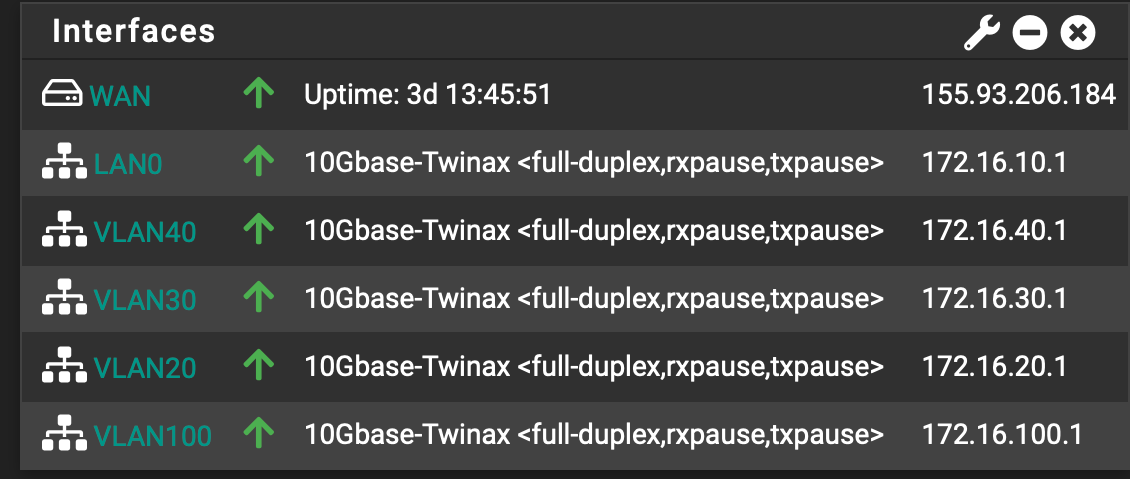

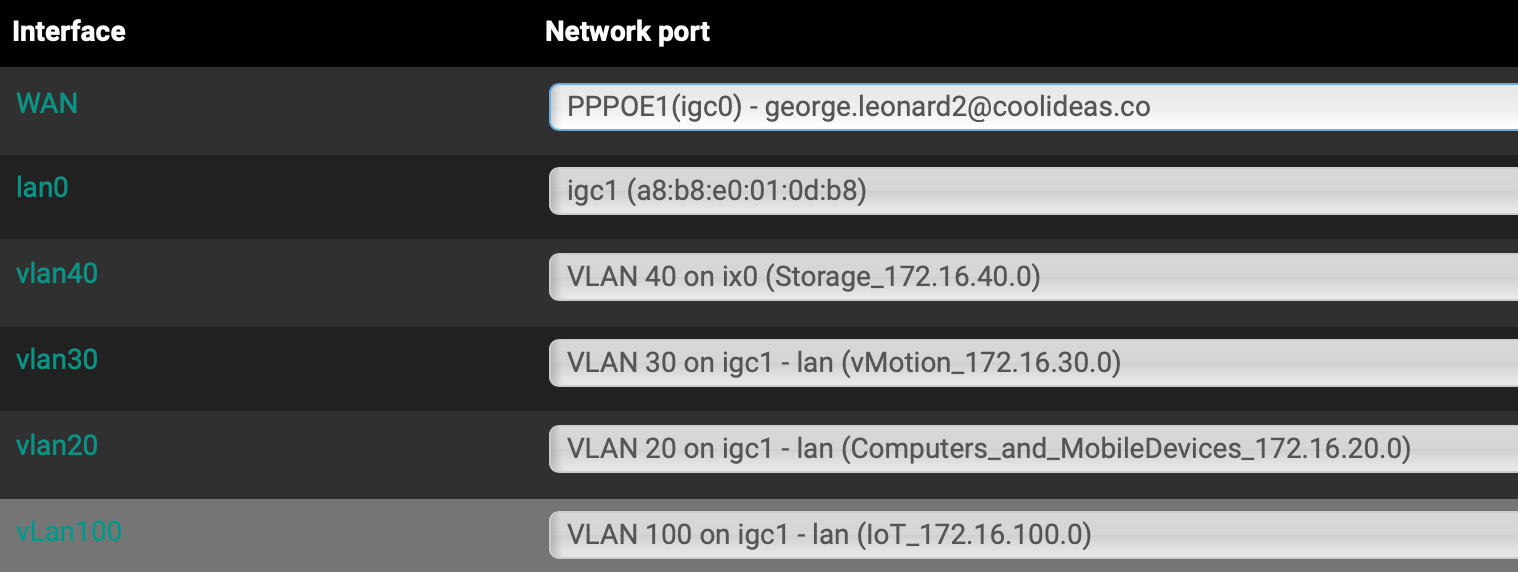

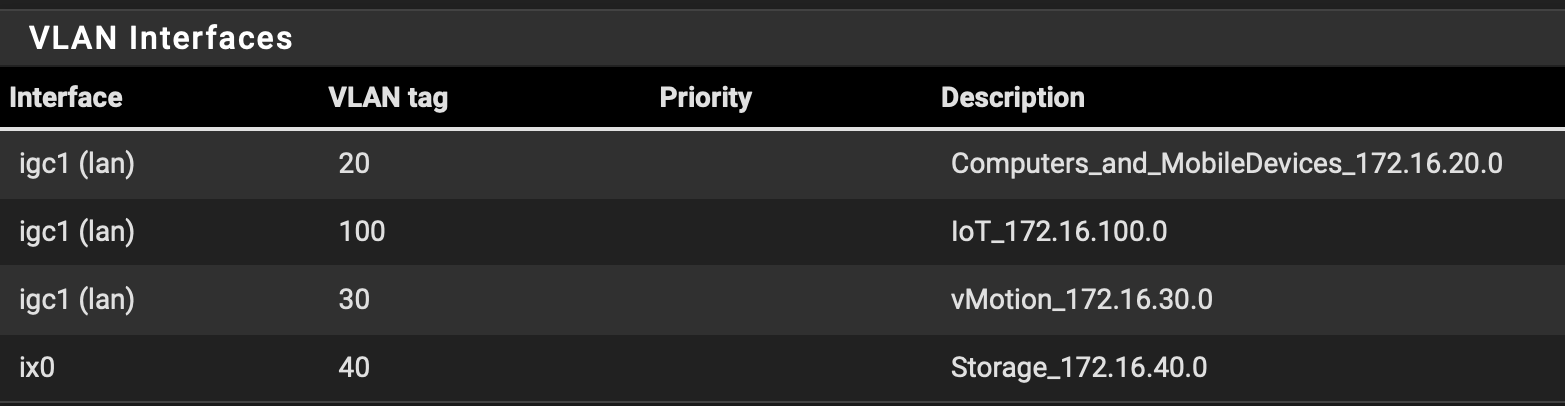

at this point the below picture reflects the pfSense config.

Ok, this seemed good. but (once i unplugged the 2.5 igc1 cat6 cable between ProMax port24 and pfSense ) now found i could not access anything else that sat on 172.16.10.0/24 network.

At this point wondering... am i trying to get something to work and whats the value of it... does it make sense...

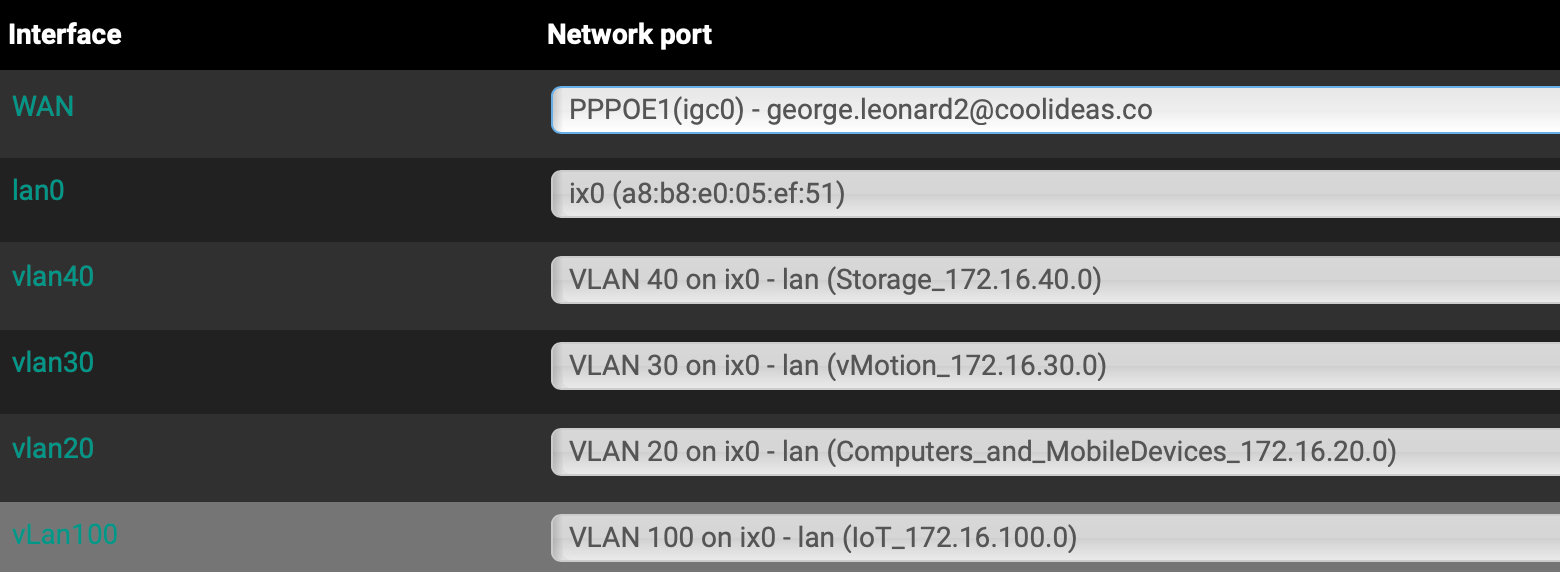

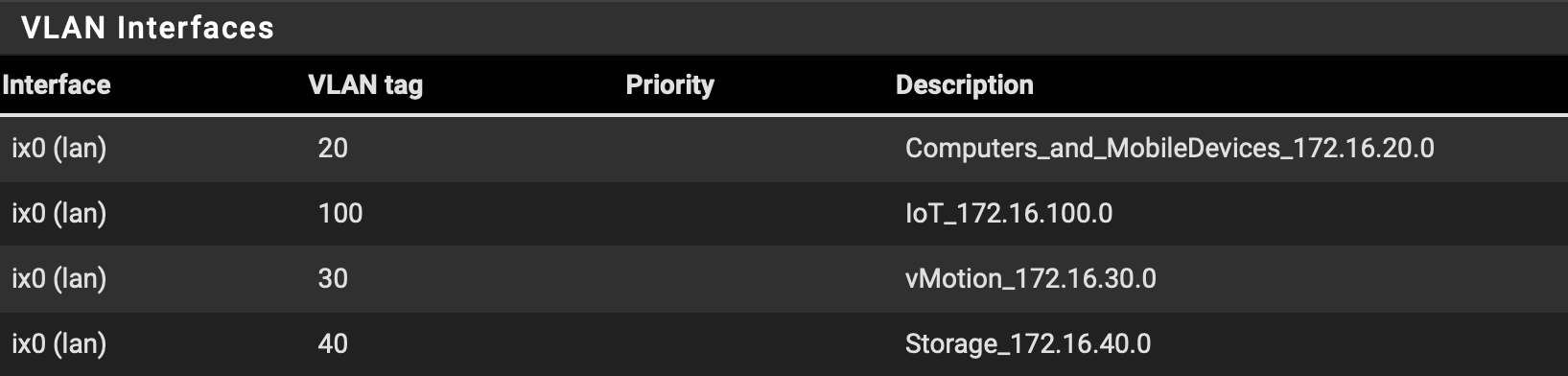

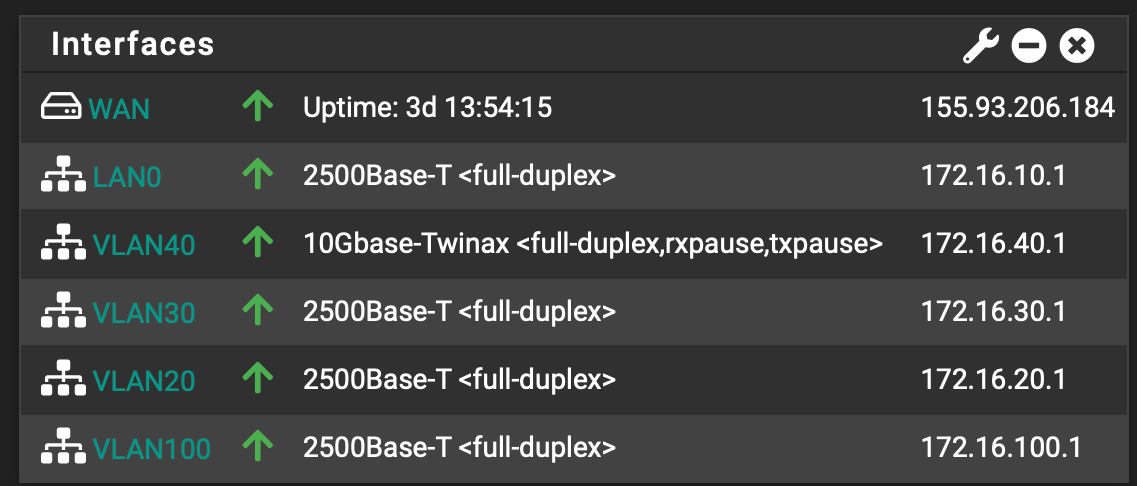

To fix the above I reconfigured onto this.

Maybe leave vlan0, vlan20, vlan30 and vlan100 on igc1, as all the devices that connect to those are themselves either wifi, 1GbE or 2.5GbE based.

then leave vlan40 on ix0, as the devices that will be on there are 10GbE based.

I've got an aggregation on the way anyhow, so SFP+ port 2 on pfSense will go into aggregation and the pmox and TrueNAS will go into that.

any comments, views?

note, on ubuntu-01 i still don't have an ip assigned to the vlan30 and vlan40 interfaces... so that's i guess the last thing to be fixed...

G

-

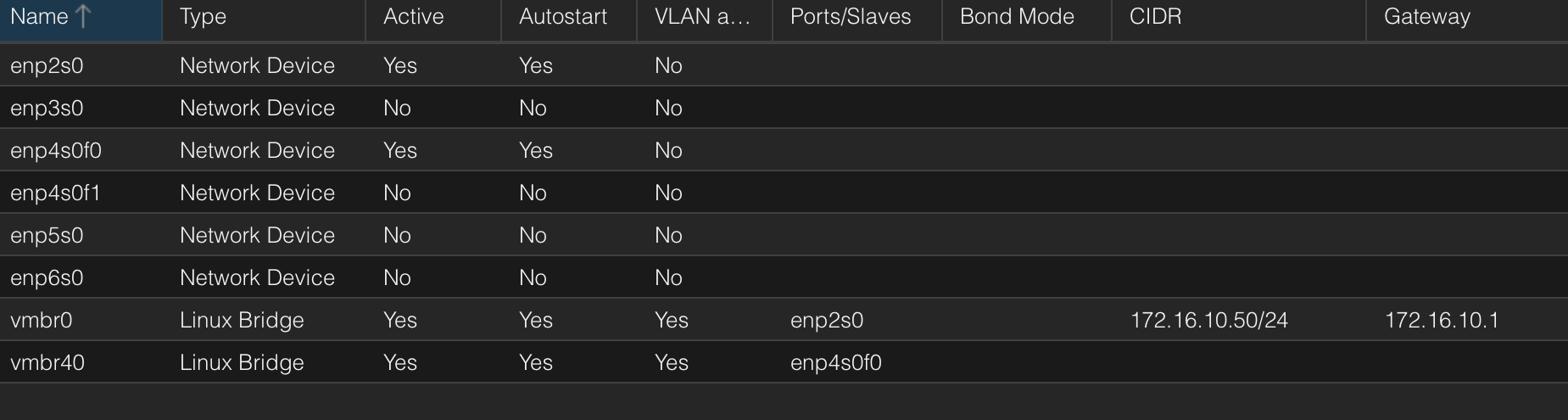

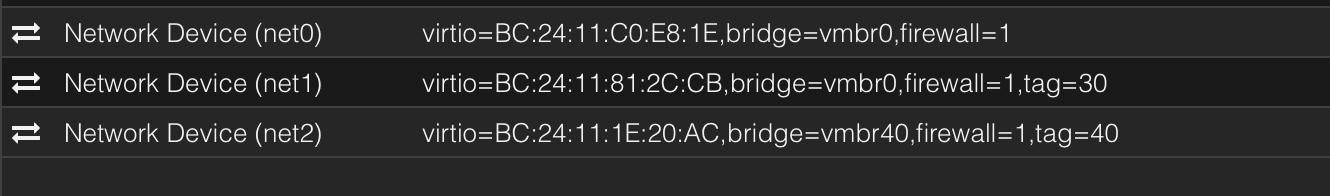

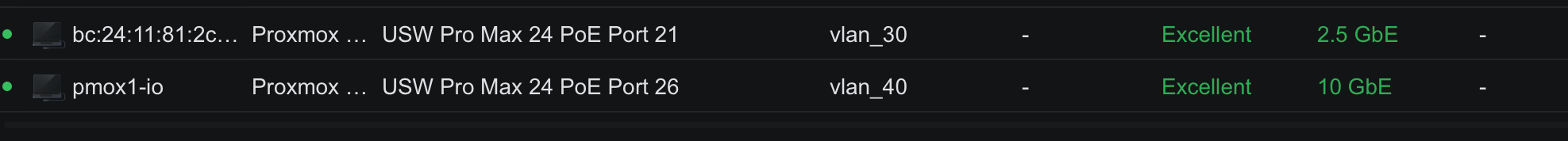

for reference, here is my pmox datacenter

and pmox1 server network configuration.

interesting enough, without telling my unifi environment, this is what it's showing.

<note the MAC addresses assigned to net1 and net2 have been dhcp reserved in pfsense on the respective vlans as 172.16.30.11 and 172.16.40.11>

<interesting takeaway, the unifi has no problem with the Dell/EMC SFP+ (dell emc ftlx8574d3bnl-fc) Transceivers, it's on the Topton side where they are not liked. I have found a thread on lawrenceIT that points to the following transceivers also working>

10Gtek 10GBase-SR SFP+ LC Transceiver, 10G 850nm Multimode SFP Module

dell emc ftlx8574d3bnl-fc
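When comparing transceivers like this, it can help to read what the module itself reports. On Linux, `ethtool -m <iface>` dumps the module EEPROM on drivers that support it; the sketch below only filters the vendor name and part number out of that text. `sfp_vendor_info` is a hypothetical helper, and the "Vendor name" / "Vendor PN" labels are assumed to match what your ethtool version prints.

```shell
#!/bin/sh
# Sketch: extract vendor name and part number from "ethtool -m" output on stdin.
# sfp_vendor_info is a hypothetical helper name.
sfp_vendor_info() {
    awk '
        /^[ \t]*Vendor (name|PN)[ \t]*:/ {
            key = $0
            sub(/[ \t]*:.*$/, "", key)     # keep the label before the colon
            sub(/^[ \t]+/, "", key)
            val = $0
            sub(/^[^:]*:[ \t]*/, "", val)  # keep everything after the first colon
            sub(/[ \t]+$/, "", val)
            print key "=" val
        }'
}

# Example (as root on the Proxmox host):
#   ethtool -m enp4s0f0 | sfp_vendor_info
```

Noting these two fields for the module that flapped and the one that linked cleanly makes it easier to match against compatibility lists later.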

-

@georgelza said in pfSense not enabling port:

Ok, this seemed good. but (once i unplugged the 2.5 igc1 cat6 cable between ProMax port24 and pfSense ) now found i could not access anything else that sat on 172.16.10.0/24 network.

Unclear exactly what you are testing to and from in that scenario. Just disconnecting the cable won't start routing via some other subnet if it still has an IP address directly in the subnet.

-

I wanted all traffic between the ProMax & pfSense to flow over the DAC,

which is why i reconfigured all vlan GWs to sit on the ix0 interface.

in the end what i've got is vlan0, vlan20, vlan30 and vlan100, which are "low" bandwidth traffic, getting to pfSense via igc1 / 2.5GbE, and all traffic from vlan40 sitting on ix0.

G