Mixing different NIC Speeds (1Gb & 10Gb) Performance Problem Question

-

@ngr2001 One would imagine so, as that is a more enterprise-focused switch. But looking at its specs, it's only equipped with 2Gb of memory, distributed as 2MB per port for the 24-port edition, with the rest going to the OS.

So it's actually really low on buffer: when you start a speedtest on even 1GbE, that buffer fills in less than 1/50th of a second when TCP's own flow control is not “holding it back”. So to make that work, you definitely need flow control enabled on the ports and your client. Depending on your pfSense NIC, it should be enabled by default (Intel adapters).
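The arithmetic behind the "less than 1/50th of a second" figure, as a minimal Python sketch. It assumes decimal megabytes (2 MB = 2,000,000 bytes) and the worst case where the queue grows at a full 1 Gbit/s with nothing draining it; `fill_time_seconds` is a hypothetical helper name.

```python
def fill_time_seconds(buffer_bytes: float, fill_rate_bps: float) -> float:
    """Seconds until a buffer of `buffer_bytes` is full when it grows at `fill_rate_bps` bits/s."""
    return buffer_bytes * 8 / fill_rate_bps


# Assumption: 2 MB per-port buffer (decimal MB) filling at a full 1 Gbit/s.
BUFFER_BYTES = 2_000_000
t = fill_time_seconds(BUFFER_BYTES, 1e9)
print(f"{t * 1000:.1f} ms, under 1/50 s: {t < 1 / 50}")
```

That works out to about 16 ms, comfortably under the 20 ms that 1/50th of a second represents; even reading "2MB" as 2 MiB only moves it to roughly 16.8 ms.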

But have a look at the switch management CLI. I know those switches have some configurable buffer settings that let you increase or preallocate more buffer memory, possibly for specific ports.

-

@ngr2001 The ports need to be configured with flow control negotiation enabled. Right now it is disabled.

-

Are there any 48-port enterprise-grade switches that support 10Gb, with more memory and better flow control support, that you would recommend? Perhaps something that still goes for cheap on eBay.

-

@ngr2001 Sorry but I’m not up to speed on used prices and what models can be found used.

I believe the consensus is that a switch needs a 9MB packet buffer to handle a 10GbE port, and a 32MB buffer to handle 25Gbit.

But I would assume you can actually solve your problem on the Brocade simply by going into the CLI and telling it to coalesce the buffer space of all its packet queues into one or two larger queues, combined with enabling flow control.
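Why coalescing queues could help, as a rough sketch: if a port's buffer is split evenly across its queues, each queue only gets a fraction of it. The even split and the count of 8 queues are simplifying assumptions (real ICX buffer allocation is configurable and not necessarily even), and `per_queue_bytes` is a hypothetical helper.

```python
def per_queue_bytes(port_buffer_bytes: int, queues: int) -> float:
    """Buffer available to each queue if the port buffer is split evenly (simplification)."""
    if queues < 1:
        raise ValueError("need at least one queue")
    return port_buffer_bytes / queues


PORT_BUFFER = 2_000_000  # assumed 2 MB per port, as in the spec discussed above
for queues in (8, 2, 1):  # 8 = assumed default queue count; 2 or 1 = coalesced
    kb = per_queue_bytes(PORT_BUFFER, queues) / 1000
    print(f"{queues} queue(s): {kb:.0f} KB each")
```

If all the speedtest traffic lands in a single queue, collapsing to two queues or one gives that flow 4 to 8 times more headroom under these assumptions (1000 KB or 2000 KB instead of 250 KB).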

-

@ngr2001 You don’t actually need to handle 10GbE wire speed from your WAN, so a smaller buffer should be ample, and if flow control works, it really should not be a problem.
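A rough model of that point: a queue only grows at the difference between what arrives and what leaves. Traffic from pfSense is capped by the WAN (assumed here to be about 2 Gbit/s, per the 2Gb ISP connection mentioned later in the thread) rather than 10GbE wire speed, so a 1 Gbit/s client port fills its buffer far more slowly. This ignores TCP's own pacing and pause frames, and `absorbable_burst_ms` is a hypothetical helper.

```python
def absorbable_burst_ms(buffer_bytes: float, ingress_bps: float, egress_bps: float) -> float:
    """How long (ms) a burst arriving at `ingress_bps` can last before a queue
    draining at `egress_bps` overflows a buffer of `buffer_bytes`."""
    excess_bps = ingress_bps - egress_bps
    if excess_bps <= 0:
        return float("inf")  # egress keeps up, so the queue never grows
    return buffer_bytes * 8 / excess_bps * 1000


BUFFER = 2_000_000  # assumed 2 MB per-port buffer
CLIENT = 1e9        # 1 Gbit/s client port
for label, ingress in (("10GbE wire speed", 10e9), ("~2 Gbit/s WAN cap", 2e9)):
    print(f"{label}: {absorbable_burst_ms(BUFFER, ingress, CLIENT):.1f} ms")
```

Under these assumptions the same buffer absorbs a burst for about 1.8 ms at full 10GbE ingress but about 16 ms when ingress is capped at 2 Gbit/s, which is the sense in which a smaller buffer can be "ample".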

-

I managed to get flow control fully enabled on my client ports and the uplink to pfSense. The Brocade manual had a typo, which was very frustrating. Going to re-benchmark my various setups.

Is there an SSH command for pfSense to verify that flow control is working?

The correct syntax for my Brocade was to issue this globally:

symmetrical-flow-control enable

Then on each nic:

flow-control neg-on

This finally gave me the output:

Flow Control is config enabled, oper enabled, negotiation enabled
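The steps above can be scripted, as a minimal Python sketch: one helper builds the command sequence from this post for a list of ports, and another checks the status line quoted above. The port list is taken from the running config posted later in this thread. The `configure terminal`, `exit`, `end` and `write memory` lines are added here as assumptions about FastIron navigation and saving, and since the post doesn't say which show command printed the status line, the parser just searches any text for it. Both helper names are hypothetical.

```python
import re
from typing import Dict, List, Optional

# Commands exactly as given in the post; navigation/save commands are assumptions.
GLOBAL_CMD = "symmetrical-flow-control enable"
PORT_CMD = "flow-control neg-on"

STATUS_RE = re.compile(r"Flow Control is config (\w+), oper (\w+), negotiation (\w+)")


def flow_control_commands(ports: List[str]) -> List[str]:
    """Build the CLI lines to paste into the switch for the given ports."""
    lines = ["configure terminal", GLOBAL_CMD]
    for port in ports:
        lines += [f"interface ethernet {port}", PORT_CMD, "exit"]
    lines += ["end", "write memory"]
    return lines


def parse_flow_control(text: str) -> Optional[Dict[str, str]]:
    """Extract config/oper/negotiation state from a 'Flow Control is ...' line."""
    match = STATUS_RE.search(text)
    if match is None:
        return None
    config, oper, negotiation = match.groups()
    return {"config": config, "oper": oper, "negotiation": negotiation}


def flow_control_active(text: str) -> bool:
    """True only if the status line reports flow control as operationally enabled."""
    status = parse_flow_control(text)
    return status is not None and status["oper"] == "enabled"


if __name__ == "__main__":
    # Ports taken from the running config posted in this thread.
    for line in flow_control_commands(["1/1/4", "1/1/48", "1/2/1", "1/2/8"]):
        print(line)
    sample = "Flow Control is config enabled, oper enabled, negotiation enabled"
    print(flow_control_active(sample))
```

Checking `oper` rather than `config` matters: a port can be configured for flow control while the link partner never agrees to it, in which case the operational state stays disabled.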

-

So enabling flow control by itself did not seem to fix the issue yet. Same exact benchmark problem as before.

You mentioned I probably need to increase my buffer; can you clarify which ports? Is all I need to do increase the buffer on the uplink port that connects to pfSense?

-

@ngr2001 Hmm, well maybe this thread can help you:

https://forum.netgate.com/topic/195289/10gb-lan-causing-strange-performance-issues-goes-away-when-switched-over-to-1gb

I don’t know the CLI of that switch model, so you would need to use Google or the manual to figure out your options.

-

@ngr2001 ohh, that thread was started by you as well…

The SSH command for flow control is in that thread, and there is some inspiration there for changing buffers on a switch.

-

Lol, totally forgot that, must have hit my head. Yes, it seems I had the same issue with my Cisco switch. I moved to a Brocade to get more 10Gb ports. Now I need to reproduce the same success.

-

Mmm, I would also check for MTU/MSS issues. They can present exactly like that.
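For the MTU/MSS check, the expected values are simple subtraction, sketched in Python below. It assumes IPv4 with 20-byte IP and TCP headers and no options; the 1492-byte PPPoE case is an illustrative example, not something reported in this thread.

```python
IPV4_HEADER = 20  # bytes, no IP options
TCP_HEADER = 20   # bytes, no TCP options
ICMP_HEADER = 8   # bytes


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload per segment that fits in one IPv4 packet of size `mtu`."""
    return mtu - IPV4_HEADER - TCP_HEADER


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP echo payload that fits without fragmentation (don't-fragment ping test)."""
    return mtu - IPV4_HEADER - ICMP_HEADER


for mtu in (1500, 1492):  # standard Ethernet, and PPPoE as an example
    print(f"MTU {mtu}: MSS {tcp_mss(mtu)}, max DF ping payload {max_ping_payload(mtu)}")
```

For a standard 1500-byte MTU that gives an MSS of 1460 and a maximum don't-fragment ping payload of 1472; if such a ping fails somewhere along the path, the effective MTU there is smaller than assumed.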

I'd be amazed if the ICX7250 had a problem with that. Though, since it has many config options, it could be misconfigured to do it!

-

So I forgot you solved this issue once for me when I had a Cisco 3650.

It seems like the Brocade ICX-7250 has the same issue, but I find its CLI way more confusing and not as well documented as Cisco's.

The Cisco fix was:

qos queue-softmax-multiplier 1200

Brocade does not seem to have an equivalent that I can find. So far I have tried:

Enabling flow control on all the Brocade ports. Result: no difference.

Enabling "buffer-sharing-full". Result: no difference.

Perhaps Brocade's QoS "ingress-buffer-profile" or "egress-buffer-profile" would do the trick, but the documentation and Google searches are not leading me to anything I can try.

If I can't get this working, I may seriously consider getting a Cisco 3850; however, I would like to get something with 8MB+ port buffers so I don't have to play this tuning game.

My ICX 7250 Config:

SSH@romulus>show run

Current configuration:

!

ver 08.0.95pT213

!

stack unit 1

module 1 icx7250-48-port-management-module

module 2 icx7250-sfp-plus-8port-80g-module

stack-port 1/2/1

stack-port 1/2/3

!

vlan 1 name DEFAULT-VLAN by port

router-interface ve 1

!

!

symmetrical-flow-control enable

!

!

optical-monitor

optical-monitor non-ruckus-optic-enable

aaa authentication web-server default local

aaa authentication login default local

enable aaa console

hostname romulus

ip dhcp-client disable

ip dns server-address 10.0.0.1

ip route 0.0.0.0/0 10.0.0.1

!

no telnet server

!

clock timezone us Eastern

!

!

ntp

disable serve

server time.cloudflare.com

!

!

no web-management http

!

manager disable

!

!

manager port-list 987

!

!

interface ethernet 1/1/4

flow-control neg-on

!

interface ethernet 1/1/48

flow-control neg-on

!

interface ethernet 1/2/1

flow-control neg-on

!

interface ethernet 1/2/8

flow-control neg-on

!

interface ve 1

ip address 10.0.0.3 255.255.255.0

!

!

end

-

I would also try specifically disabling flow control on all interfaces in the path. We have seen cases where flow control itself was the problem. I really wouldn't expect flow control to be an issue here, though, when there are 1G links both upstream and downstream already limiting the flow.

-

Last time I tried, it made no difference, but I'll try again. To me it's clearly a Brocade issue, much like the Cisco issue I had but was able to fix with your help; I just can't find a comparable setting.

I should have just bought a Cisco 3850 with the 12x multigig ports. I am seeing them on ebay for $99. At that price I may just buy one and give up on the brocade.

With the 3850 I could have my WAN, LAN, and Win 11 clients all at 2.5Gb with a few remaining Win 11 clients at 1Gb. With the larger buffer and known QOS tweaks it would likely go a lot smoother for me.

-

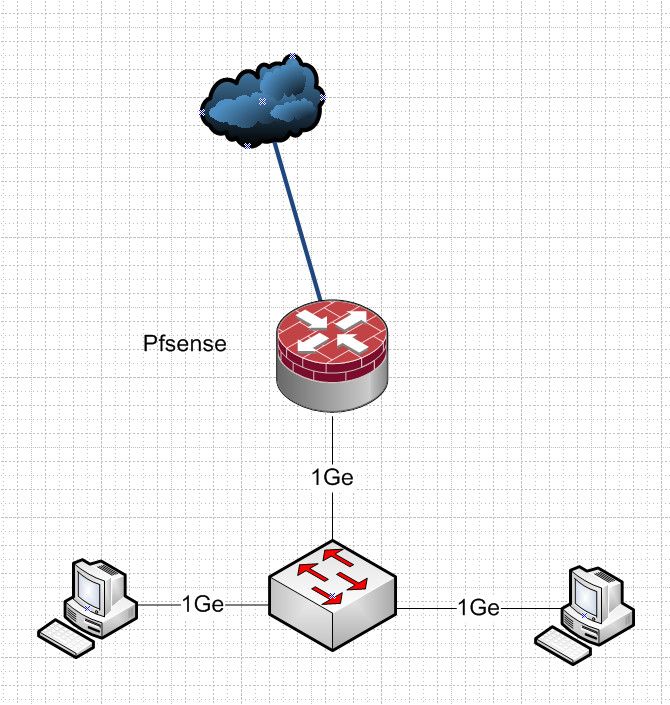

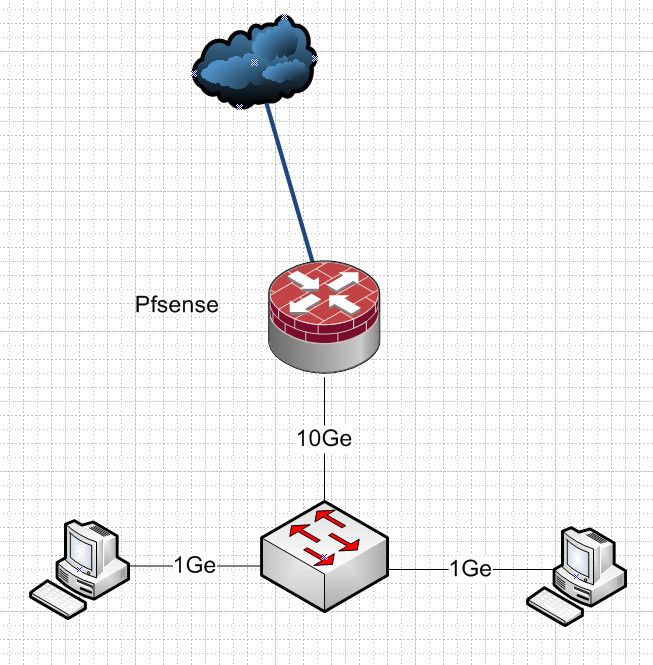

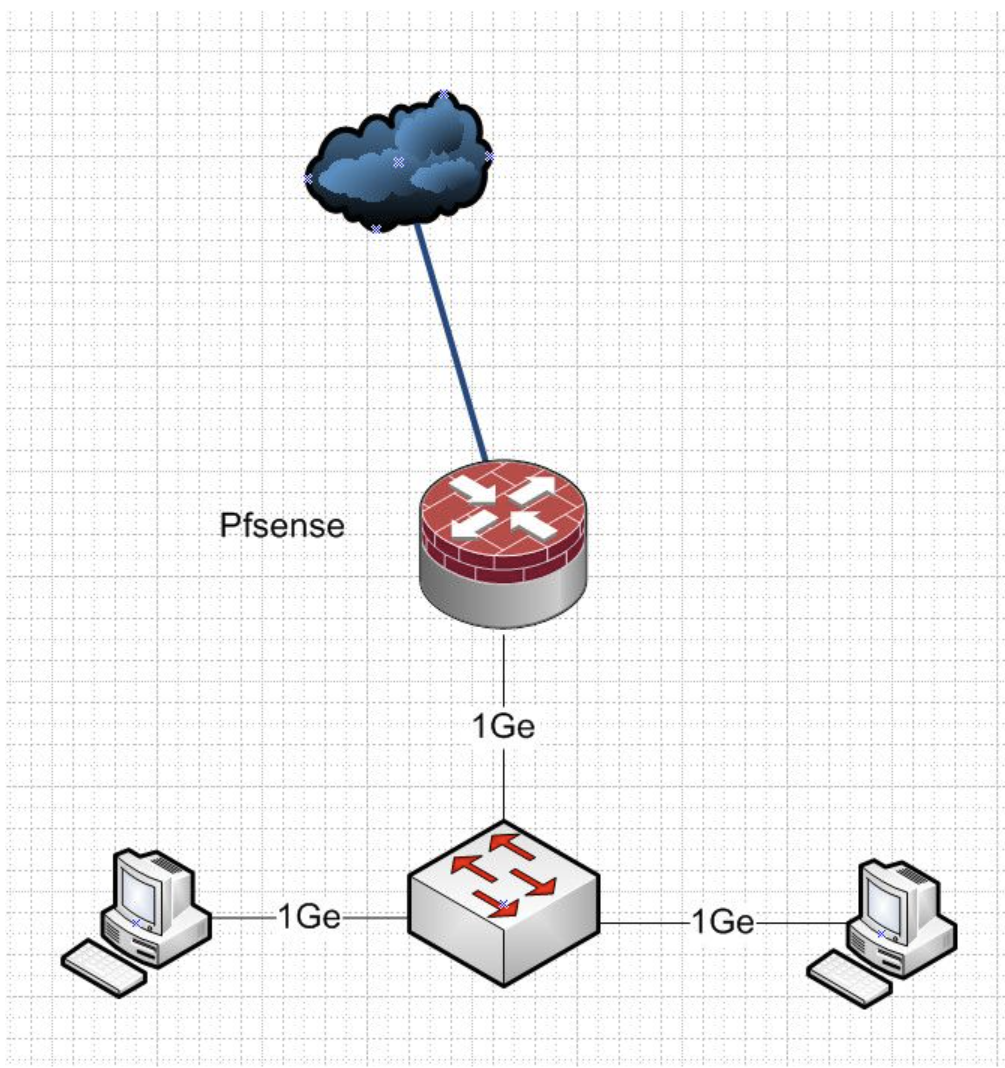

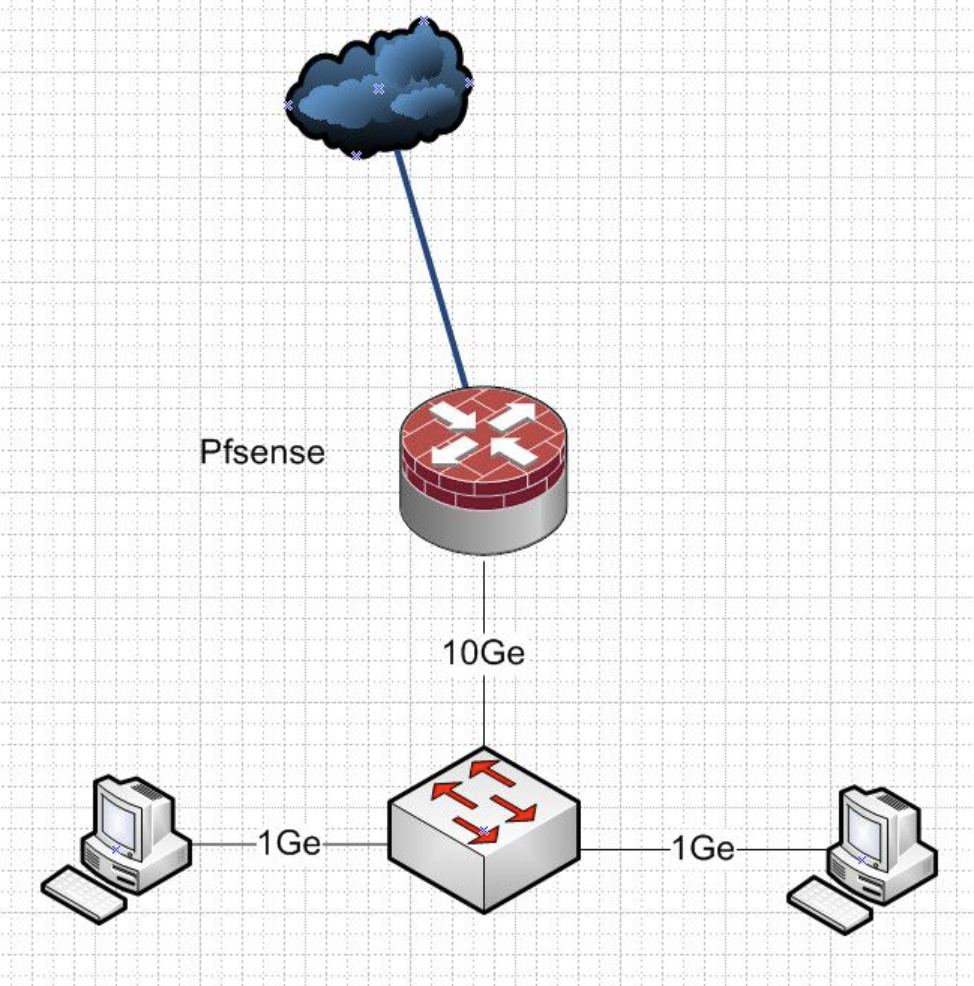

@ngr2001 Ok, just so we are sure we are on the same page...

In this config, where it's 1GbE from your switch to pfSense, a single client is able to get 900ish Mbps.

But in this config, where pfSense has 10GbE to your switch, a single client is only able to get 600Mbps?

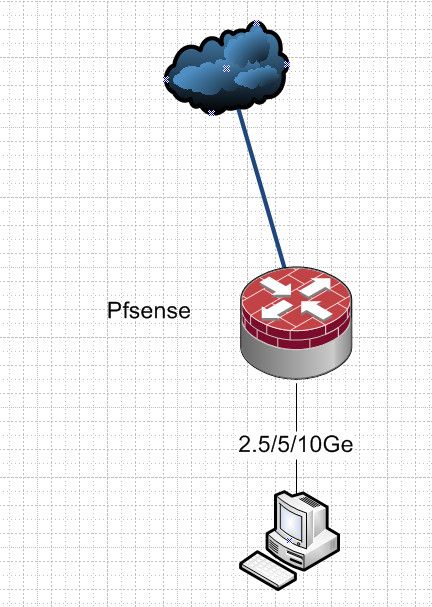

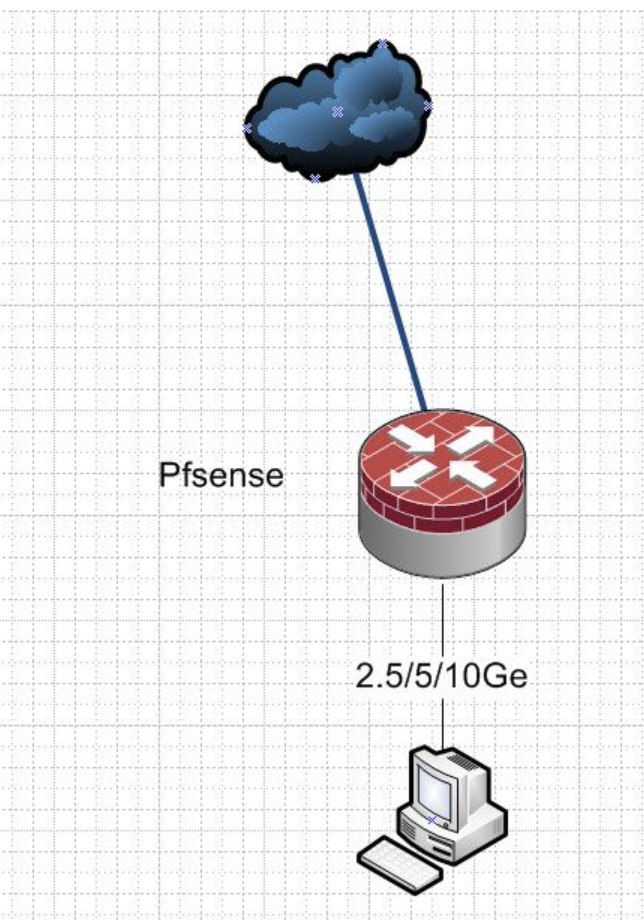

Is there any way you can test this config?

Where the client has a connection that can handle your 2Gb WAN ISP connection, i.e. 2.5, 5, or 10GbE, directly connected to just a single client?

-

@ngr2001 said in Mixing different NIC Speeds (1Gb & 10Gb) Performance Problem Question:

Last time I tried, it made no difference, but I'll try again. To me it's clearly a Brocade issue, much like the Cisco issue I had but was able to fix with your help; I just can't find a comparable setting.

I should have just bought a Cisco 3850 with the 12x multigig ports. I am seeing them on ebay for $99. At that price I may just buy one and give up on the brocade.

With the 3850 I could have my WAN, LAN, and Win 11 clients all at 2.5Gb with a few remaining Win 11 clients at 1Gb. With the larger buffer and known QOS tweaks it would likely go a lot smoother for me.

It's just borderline insane how cheap used Cisco switches are in the US... You really must have a lot of shops that just rotate all their equipment on a schedule instead of actually looking at the value and lifetime the products offer.

-

@keyser said in Mixing different NIC Speeds (1Gb & 10Gb) Performance Problem Question:

With the 3850

hahah, yeah you would also have what sounds like a jet taking off wherever you put it ;)

-

In this setup all clients perform great, 900+Mbps sustained speedtests, no issues.

In this setup those 1Gb clients have issues, speedtest starts off strong and quickly drops to 500-600Mbps.

In this test, both the pfSense LAN NIC and the client are at 10Gb. In this scenario the client's performance is also perfect, hitting 1.9Gbps sustained speedtest scores. The WAN is at 2.5Gb.

-

@ngr2001 Then yeah, that sure seems switch-related to me.

-

So do you see dropped packets in the switch? On which ports?
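One way to answer that: snapshot the per-port drop/discard counters before and after a speedtest and compare them. A minimal Python sketch, assuming the counters have already been read off the switch into dicts keyed by port (parsing depends on the firmware's show output and is left out); `ports_with_new_drops` is a hypothetical helper and the numbers in the example are made up.

```python
from typing import Dict


def ports_with_new_drops(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, int]:
    """Return {port: drops added between snapshots} for ports whose counter went up.

    A counter that went down (e.g. cleared in between) is ignored rather than
    reported as a negative delta.
    """
    deltas = {}
    for port, count in after.items():
        delta = count - before.get(port, 0)
        if delta > 0:
            deltas[port] = delta
    return deltas


# Made-up numbers for illustration only.
before = {"1/1/4": 0, "1/1/48": 12, "1/2/8": 0}
after = {"1/1/4": 350, "1/1/48": 12, "1/2/8": 0}
print(ports_with_new_drops(before, after))
```

If the drops show up on the 1Gb client ports during the bad speedtests rather than on the uplink to pfSense, that would suggest egress congestion toward the clients, which fits the buffer theory discussed earlier in the thread.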