Mixing different NIC Speeds (1Gb & 10Gb) Performance Problem Question

-

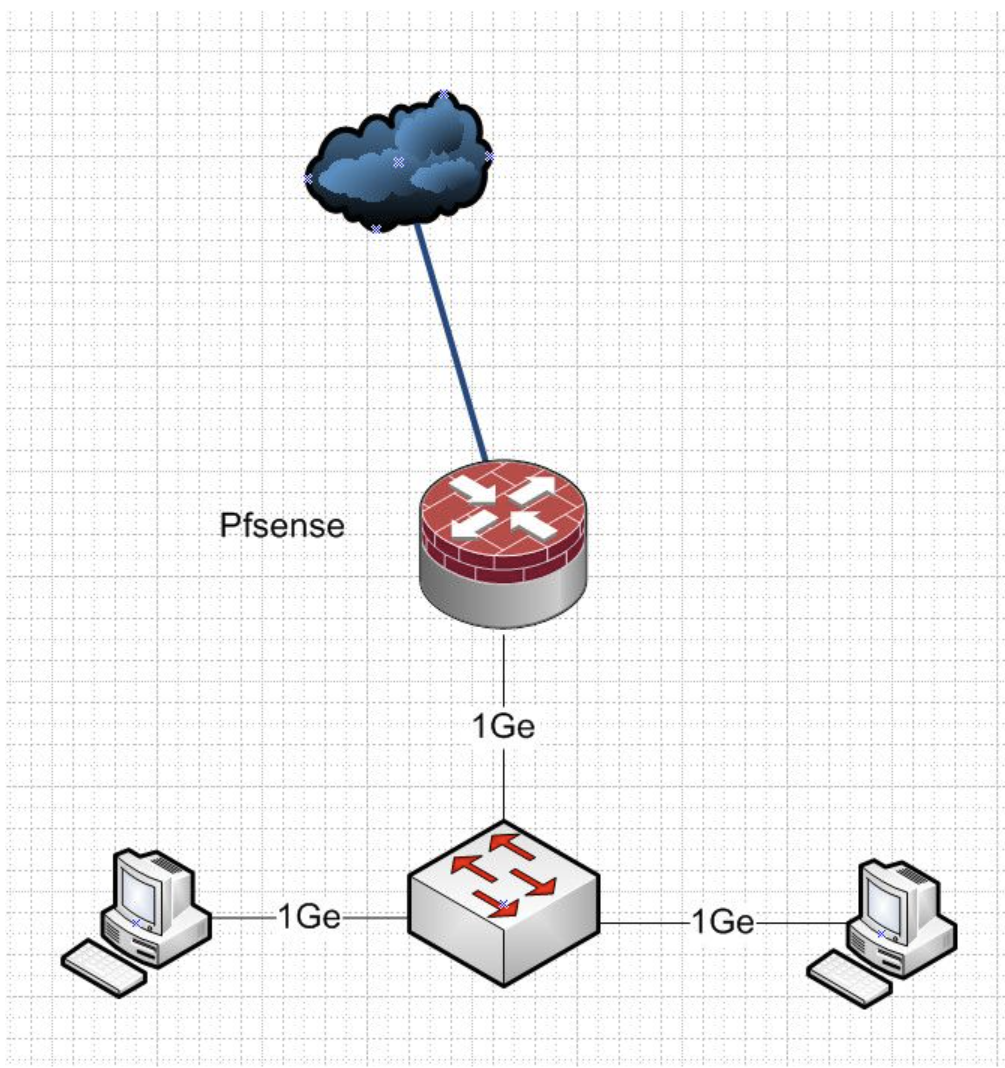

In this setup all clients perform great, 900+Mbps sustained speedtests, no issues.

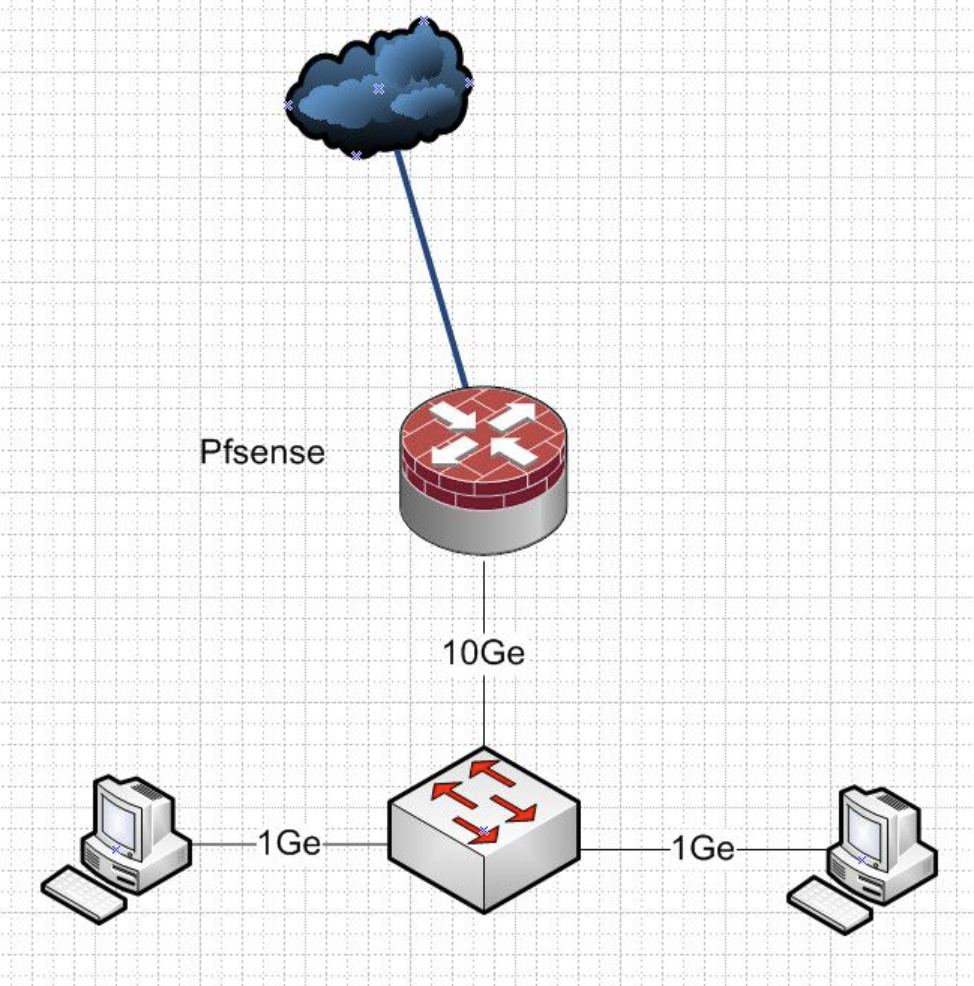

In this setup the 1Gb clients have issues: the speedtest starts off strong and quickly drops to 500-600Mbps.

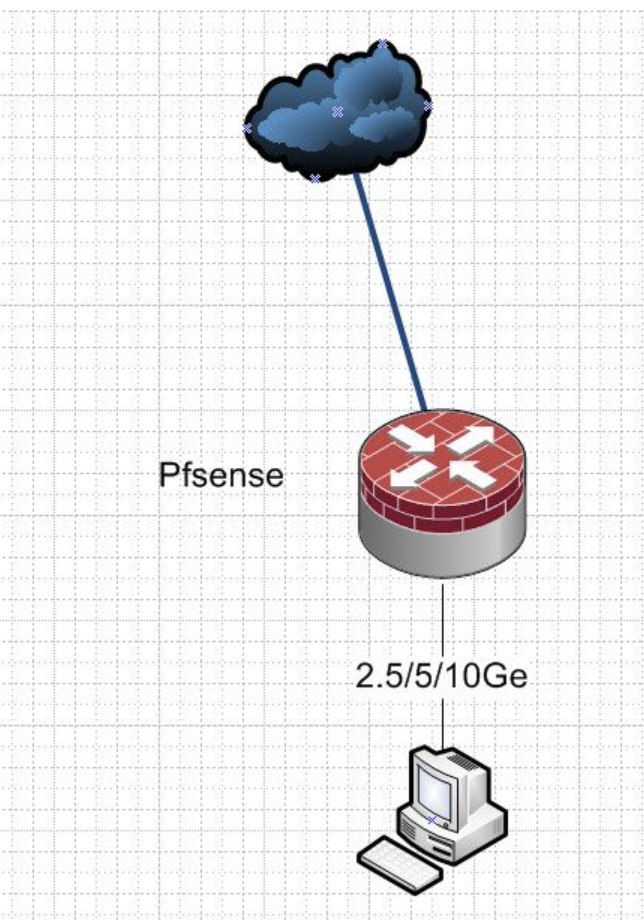

In this test both the pfSense LAN NIC and the client are at 10Gb. In this scenario the client's performance is also perfect, hitting 1.9Gbps sustained speedtest scores. The WAN is at 2.5Gb.

-

@ngr2001 then yeah, that sure seems like it's switch related to me.

-

So do you see dropped packets in the switch? On which ports?

-

Oddly, on the new Brocade switch I am not seeing any dropped packets, even when the performance drops.

If you recall, the Cisco 3650 would record dropped packets like crazy until we modified the config.

-

Are you running the L2 or L3 firmware? L2 doesn't appear to offer the qos values but I'm not sure if that implies it doesn't use it.....

-

If you haven't already you might as well try bumping the ip-qos-session value.

-

On the Brocade ICX 7250 I have the Full Layer 3 Firmware / License.

Just the pure lack of community support and viable example documentation for the Brocade has me about to click buy-it now on a Cisco WS-C3850-12X48U-S.

Since I messed up once already, can anyone think of a better multigig switch than the WS-C3850-12X48U-S? By better I mean larger buffers to handle 10Gb traffic and mixed client speeds.

-

I must say I find it almost impossible to believe that the 7250 can't handle this. I have an older 6450 here and have never seen problems like that with it. I have a 7250 also I just haven't found time to install it. Yet.

-

I agree, I 100% feel it's fixable, there is just not much info or example configs floating around to go off of.

Even their VLAN setup feels confusing compared to Cisco.

-

Ha, well you have to hand it to Cisco, they have successfully convinced the world that their UI is the only and best UI. Including the Cisco terms for things that already had perfectly good names.

But a 10G trunk link between a router and switch with 1G downstream devices is pretty common setup. I'd expect to see numerous threads complaining about this yet....

-

I wonder though, how many people go to the length of properly testing or verifying their performance? Even in my case, I think the average person may have missed it.

I found this useful chart that attempts to document various switches and their buffer sizes, could come in handy for someone.

https://people.ucsc.edu/~warner/buffer.html

I noted that the ICX7250 has only 2MB and the Cisco 3650 and 3850 have 6MB. I got the Brocade only to get additional 10Gb ports, but with the numbers showing it has 1/3 the buffer size, it's probably no wonder I am seeing these issues. Granted, it may be fixable with some type of QoS or buffer tuning, but there is not a whole lot of memory to go around.

The issue with my old 3650 was that it only had 2x 10Gb ports, with the 3850 having 12x I am thinking this may be my best path forward.
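For a rough sense of what those buffer sizes mean with mixed speeds, here is a back-of-the-envelope sketch. It assumes a single 10Gb port bursting at line rate toward a single 1Gb port, and that the whole buffer is available to that one egress queue, which is the best case since these buffers are normally shared across ports. The function name is just illustrative.

```python
def buffer_fill_time_ms(buffer_bytes, ingress_gbps, egress_gbps):
    """Milliseconds until the buffer overflows when ingress outpaces egress."""
    if ingress_gbps <= egress_gbps:
        return float("inf")  # the queue never builds up
    net_fill_bps = (ingress_gbps - egress_gbps) * 1e9  # bits per second
    return buffer_bytes * 8 / net_fill_bps * 1e3


MB = 1_000_000  # assumption: the chart's "MB" treated as 10^6 bytes

for name, size in [("ICX7250 (2MB)", 2 * MB), ("Cisco 3650/3850 (6MB)", 6 * MB)]:
    print(f"{name}: {buffer_fill_time_ms(size, 10, 1):.2f} ms")
# ICX7250 (2MB): 1.78 ms
# Cisco 3650/3850 (6MB): 5.33 ms
```

So under these assumptions even the larger buffer only absorbs a few milliseconds of a line-rate 10Gb burst before it has to drop, which is why TCP's own pacing matters as much as raw buffer size.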

-

Mmm, just no real idea of how much buffer space might be needed...

I'll try and get mine setup for a test.

Do you still see it in a local test or only to the remote speedtest server?

-

Just on remote speed test thus far

Try fast.com and speedtest.net

As dumb as fast.com is, it seems to highlight the issue immediately.

-

Mmm, I hit that exact symptom recently and it turned out to be an MTU/MSS issue. The test at fast.com takes ages to start doing anything, then gives some crappy download figure and errors out on the upload.

Try setting an MSS value on the interface in pfSense as a test. I'd just use, say, 1460 to be sure.

-

Well that's very interesting.

All my MTUs are 1500 though (pfSense WAN & LAN, all my switchports, etc.). Are you suggesting I change that everywhere to 1460? That seems like a pain for sure.

-

Nope, it only needs to be set in one place. pf will force it to that when it passes the traffic as long as pf-scrub is enabled. So I would add it on the pfSense internal interface.

-

Wouldn't that cause packet fragmentation though? All the clients are going to be hitting the pfSense LAN NIC at an MTU of 1500.

-

No, it should cause TCP to just send smaller packets. Where I hit it, doing that completely resolved the issue even though it shouldn't have done anything as far as I could see. But something had broken PMTU. Took waaay too long to find it.

Hard to see how the 1G switch interface could do that but the symptoms you're seeing are so similar it's worth trying. It's trivial to test too.
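To show why clamping MSS shrinks packets rather than fragmenting them, here is a small sketch of the arithmetic. It assumes plain IPv4/IPv6 and TCP headers with no options; the helper names are made up for illustration, and how pfSense maps the value entered in the MSS field to the MSS actually advertised should be checked against that field's help text.

```python
IPV4_HEADER = 20  # bytes, no IP options
IPV6_HEADER = 40  # bytes, fixed header only
TCP_HEADER = 20   # bytes, no TCP options


def mss_for_mtu(mtu, ipv6=False):
    """Largest TCP payload that fits in one packet of the given MTU."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mtu - ip_header - TCP_HEADER


def packet_size(mss, ipv6=False):
    """On-the-wire IP packet size for a full-sized segment with this MSS."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    return mss + ip_header + TCP_HEADER


print(mss_for_mtu(1500))             # 1460, the usual MSS on a 1500 MTU link
print(mss_for_mtu(1500, ipv6=True))  # 1440
print(packet_size(1420))             # 1460, still under the 1500 MTU
```

Because the MSS is negotiated in the TCP handshake, both ends simply build smaller segments. Nothing on the path has to fragment, and the interface MTUs stay at 1500.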

-

I'll try it, but what is "pf-scrub" and how do I enable it?

-

@ngr2001 I was the one that gave you the solution for your Cisco 3650 with the qos setting. This is a TCP Flow Control issue and I have more or less been trying to resolve this issue for 3 years now. I am going to make an educated guess that you are using the Comcast XB8. DOCSIS does not actually support TCP Flow Control which is what you want. You can use Ethernet Flow Control but it is a blunt sledgehammer solution pausing all traffic on the pfSense LAN interface. The XB8 also doesn't truly go into bridge mode as it still reaches out to the Comcast headend and receives its own public IPv4/6 to use with its hidden BSSIDs. Do a quick Google on TCP Flow Control and DOCSIS and you will see what I mean. DOCSIS has its own method for handling congestion.