Cannot Achieve 10g pfsense bottleneck

-

And the different subnets are all VLANs across that LAGG with pfSense routing between them I assume?

-

@stephenw10 exactly

-

Did a quick test in my work lab today.

- Host is ESXI on a 40-core DELL with 192GB memory & 10TB SSD.

- PfSense 2.7.2 running in ESXI with 10G interfaces. WAN Public IP connects to an external Juniper MX80 router.

- Another interface on the MX80 has our speedtest server with iperf3 running under Ubuntu.

- Client PC is windows10 VM with 12 CPU & 32GB memory.

So iperf3 traffic goes as follows, and achieves about 8-9Gbps.

Client-VM <--> PfSense <--> MX80 <--> iperf3_Server.

Downstream Test.

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.01 sec 1.43 GBytes 1.23 Gbits/sec 1279 sender

[ 5] 0.00-10.00 sec 1.43 GBytes 1.23 Gbits/sec receiver

[ 7] 0.00-10.01 sec 1.74 GBytes 1.49 Gbits/sec 408 sender

[ 7] 0.00-10.00 sec 1.74 GBytes 1.49 Gbits/sec receiver

[ 9] 0.00-10.01 sec 1.77 GBytes 1.52 Gbits/sec 604 sender

[ 9] 0.00-10.00 sec 1.77 GBytes 1.52 Gbits/sec receiver

[ 11] 0.00-10.01 sec 1.01 GBytes 868 Mbits/sec 480 sender

[ 11] 0.00-10.00 sec 1.01 GBytes 866 Mbits/sec receiver

[ 13] 0.00-10.01 sec 1.78 GBytes 1.52 Gbits/sec 777 sender

[ 13] 0.00-10.00 sec 1.77 GBytes 1.52 Gbits/sec receiver

[ 15] 0.00-10.01 sec 881 MBytes 739 Mbits/sec 838 sender

[ 15] 0.00-10.00 sec 879 MBytes 738 Mbits/sec receiver

[ 17] 0.00-10.01 sec 1010 MBytes 847 Mbits/sec 522 sender

[ 17] 0.00-10.00 sec 1007 MBytes 845 Mbits/sec receiver

[ 19] 0.00-10.01 sec 908 MBytes 761 Mbits/sec 1038 sender

[ 19] 0.00-10.00 sec 904 MBytes 759 Mbits/sec receiver

[SUM] 0.00-10.01 sec 10.5 GBytes 8.98 Gbits/sec 5946 sender

[SUM] 0.00-10.00 sec 10.4 GBytes 8.97 Gbits/sec receiver

Upstream (without -R) only gets about 4-5Gbps, so not sure about that.

Note: this was a quick & dirty test with no optimisation of the ESXI 10G virtual NIC's etc, and no packages, limiters, etc on PfSense. Will upgrade to 2.8.x and see what happens.
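As a cross-check on the downstream figures above, the per-stream sender lines can be summed and compared with the [SUM] line. This is only a minimal sketch: the regular expression is written for the text layout shown in this post (not for every iperf3 version), and `summarize` is a hypothetical helper name.

```python
import re

# Sender lines copied from the downstream test in this post.
SENDER_LINES = """\
[  5] 0.00-10.01 sec 1.43 GBytes 1.23 Gbits/sec 1279 sender
[  7] 0.00-10.01 sec 1.74 GBytes 1.49 Gbits/sec 408 sender
[  9] 0.00-10.01 sec 1.77 GBytes 1.52 Gbits/sec 604 sender
[ 11] 0.00-10.01 sec 1.01 GBytes 868 Mbits/sec 480 sender
[ 13] 0.00-10.01 sec 1.78 GBytes 1.52 Gbits/sec 777 sender
[ 15] 0.00-10.01 sec 881 MBytes 739 Mbits/sec 838 sender
[ 17] 0.00-10.01 sec 1010 MBytes 847 Mbits/sec 522 sender
[ 19] 0.00-10.01 sec 908 MBytes 761 Mbits/sec 1038 sender
"""

# stream id, interval, transfer, bitrate value, bitrate unit, retransmits
LINE_RE = re.compile(
    r"\[\s*(\d+)\]\s+\S+\s+sec\s+[\d.]+\s+[GM]Bytes"
    r"\s+([\d.]+)\s+([GM])bits/sec\s+(\d+)\s+sender"
)


def summarize(text):
    """Return (total bitrate in Gbit/s, total retransmits) over all sender lines."""
    total_gbps = 0.0
    total_retr = 0
    for match in LINE_RE.finditer(text):
        rate = float(match.group(2))
        if match.group(3) == "M":
            rate /= 1000.0  # Mbit/s -> Gbit/s
        total_gbps += rate
        total_retr += int(match.group(4))
    return total_gbps, total_retr


total_gbps, total_retr = summarize(SENDER_LINES)
print(f"streams add up to {total_gbps:.3f} Gbit/s with {total_retr} retransmits")
```

The totals agree with the reported [SUM] sender line (8.98 Gbits/sec, 5946 retransmits) to within rounding, so the aggregate is consistent with the individual streams.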

-

Upgraded to 2.8.1 but no real difference. With the GUI logged in I'm getting about 4.5-4.7Gbps upstream, but with the GUI logged out it increases to 5.1Gbps.

DS is the same at just over 9Gbps.

-

@pwood999 thanks for that, I suspect the 25G LAG connection to the downstream switch. I will try to disable the LAG and see what happens with another test.

And according to your test even with a simple setup, how come you could not achieve 10g both ways?

-

OK, well I'd try setting 8 queues on that system since I'd expect that NIC to support them:

dev.ixl.0.iflib.override_nrxqs: # of rxqs to use, 0 => use default #

dev.ixl.0.iflib.override_ntxqs: # of txqs to use, 0 => use default #

You might also bump the queue descriptors:

dev.ixl.0.iflib.override_nrxds: list of # of RX descriptors to use, 0 = use default #

dev.ixl.0.iflib.override_ntxds: list of # of TX descriptors to use, 0 = use default #

-

@Laxarus I suspect it's a limitation on the iperf server. I tried WinVM to PfSense and get around 7.5-8.5G both ways.

PFsense client to the server seems limited on upstream to about 6Gbps. Not sure why yet, because we definitely got 8.5Gbps symmetric over XGS-PON using the same server. [UPDATE] just tried another Ubuntu VM with direct connection to the Juniper MX80 and that gets 8.7-9.4Gbps both ways with iperf3.

When I'm next in the lab, I plan to connect our Excentis ByteBlower to the PfSense and try that. It is a much better traffic generator than iperf3, and I can then try UDP / TCP at various DS & US rates, individually and simultaneously. It also allows me to set the packet payload to ensure no fragmentation.
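For reference, the largest payload that avoids fragmentation follows directly from the MTU. The sketch below assumes IPv4 without IP options and TCP without TCP options; `max_payload` is a hypothetical helper, not part of any traffic generator's API.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
UDP_HEADER = 8    # bytes
TCP_HEADER = 20   # bytes, assuming no TCP options


def max_payload(mtu, proto):
    """Largest L4 payload that fits in one IPv4 packet of the given MTU."""
    l4_header = {"udp": UDP_HEADER, "tcp": TCP_HEADER}[proto]
    return mtu - IPV4_HEADER - l4_header


for mtu in (1500, 9000):
    print(f"MTU {mtu}: UDP {max_payload(mtu, 'udp')} bytes, "
          f"TCP {max_payload(mtu, 'tcp')} bytes")
```

Any extra encapsulation between the endpoints reduces these numbers by its own header size.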

-

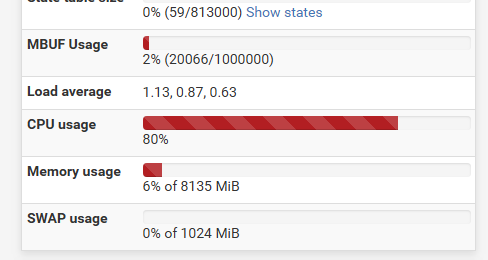

Another thing I noticed when running iperf for 30 seconds, is the PfSense CPU ramps up in stages. It goes to 27% after about 5secs, and then 45% 5 secs later, and finally about 80% for the remainder of the run.

Try adding this option "--get-server-output" and check the retries on the packet flows. That will reduce the effective data payload bitrate.

-

@stephenw10 how to modify these?

By directly creating /boot/loader.conf.local and adding the lines below?

# NIC queues

dev.ixl.0.iflib.override_nrxqs="8"

dev.ixl.0.iflib.override_ntxqs="8"

dev.ixl.1.iflib.override_nrxqs="8"

dev.ixl.1.iflib.override_ntxqs="8"

# Queue descriptors

dev.ixl.0.iflib.override_nrxds="2048"

dev.ixl.0.iflib.override_ntxds="2048"

dev.ixl.1.iflib.override_nrxds="2048"

dev.ixl.1.iflib.override_ntxds="2048"

Also, if I increase the queues on both ix1 and ix2, then won't I be over-committing my CPU cores?
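When several ports and values are being tried, typing these lines by hand gets error-prone. A small generator, sketched here under the assumption that only the tunable names quoted in this thread are wanted, keeps the file consistent. `loader_tunables` is a hypothetical helper; it only builds text and does not write to /boot/loader.conf.local.

```python
def loader_tunables(units, queues, descriptors, driver="ixl"):
    """Build loader.conf.local lines for the iflib overrides quoted in this thread."""
    lines = ["# NIC queues"]
    for unit in units:
        lines.append(f'dev.{driver}.{unit}.iflib.override_nrxqs="{queues}"')
        lines.append(f'dev.{driver}.{unit}.iflib.override_ntxqs="{queues}"')
    lines.append("# Queue descriptors")
    for unit in units:
        lines.append(f'dev.{driver}.{unit}.iflib.override_nrxds="{descriptors}"')
        lines.append(f'dev.{driver}.{unit}.iflib.override_ntxds="{descriptors}"')
    return "\n".join(lines) + "\n"


# Same values as proposed in the post: ixl0 and ixl1, 8 queues, 2048 descriptors.
text = loader_tunables(units=(0, 1), queues=8, descriptors=2048)
print(text, end="")
```

Review the printed text before copying it into place; a reboot is still needed for loader values to take effect.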

@pwood999 said in Cannot Achieve 10g pfsense bottleneck:

Try adding this option "--get-server-output" and check the retries on the packet flows. That will reduce the effective data payload bitrate.

will try that

-

Yes, I would try that. Those values appear to be sysctls, so you can rewrite them at runtime, but I'd expect to need to reload the driver to see the change. So setting them as loader values might make more sense.

7 out of your 8 cores are 50% idle, so adding more load shouldn't really be a bad thing IMO. You might also be able to achieve the same thing using core affinity with 4 queues. Right now it looks like you're limited by 1 core that somehow gets twice the load.

-

@stephenw10

Added these to /boot/loader.conf.local and rebooted the firewall, then:

ixl1: netmap queues/slots: TX 8/2048, RX 8/2048

ixl1: SR-IOV ready

ixl1: PCI Express Bus: Speed 8.0GT/s Width x8

ixl1: Allocating 8 queues for PF LAN VSI; 8 queues active

ixl1: Ethernet address: 3c:ec:ec:dc:b3:a1

ixl1: Using MSI-X interrupts with 9 vectors

ixl1: Using 8 RX queues 8 TX queues

ixl1: Using 2048 TX descriptors and 2048 RX descriptors

ixl1: PF-ID[1]: VFs 64, MSI-X 129, VF MSI-X 5, QPs 768, MDIO & I2C

ixl1: fw 9.121.73281 api 1.15 nvm 9.20 etid 8000e153 oem 1.269.0

ixl1: <Intel(R) Ethernet Controller XXV710 for 25GbE SFP28 - 2.3.3-k> mem 0x38bffd000000-0x38bffdffffff,0x38bfff000000-0x38bfff007fff irq 40 at device 0.1 numa-domain 0 on pci7

ixl0: netmap queues/slots: TX 8/2048, RX 8/2048

ixl0: SR-IOV ready

ixl0: PCI Express Bus: Speed 8.0GT/s Width x8

ixl0: Allocating 8 queues for PF LAN VSI; 8 queues active

ixl0: Ethernet address: 3c:ec:ec:dc:b3:a2

ixl0: Using MSI-X interrupts with 9 vectors

ixl0: Using 8 RX queues 8 TX queues

ixl0: Using 2048 TX descriptors and 2048 RX descriptors

ixl0: PF-ID[0]: VFs 64, MSI-X 129, VF MSI-X 5, QPs 768, MDIO & I2C

ixl0: fw 9.121.73281 api 1.15 nvm 9.20 etid 8000e153 oem 1.269.0

ixl0: <Intel(R) Ethernet Controller XXV710 for 25GbE SFP28 - 2.3.3-k> mem 0x38bffe000000-0x38bffeffffff,0x38bfff008000-0x38bfff00ffff irq 40 at device 0.0 numa-domain 0 on pci7

idle

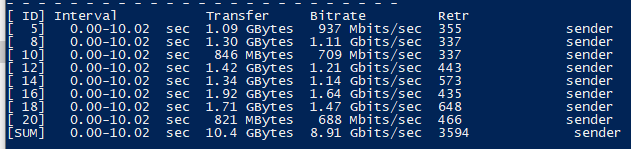

last pid: 28275; load averages: 1.19, 1.84, 1.08 up 0+00:08:07 03:49:25

644 threads: 9 running, 603 sleeping, 32 waiting

CPU 0: 0.8% user, 0.0% nice, 0.4% system, 0.8% interrupt, 98.0% idle

CPU 1: 0.8% user, 0.0% nice, 0.0% system, 0.8% interrupt, 98.4% idle

CPU 2: 0.0% user, 0.0% nice, 2.0% system, 0.8% interrupt, 97.3% idle

CPU 3: 1.2% user, 0.0% nice, 0.0% system, 0.8% interrupt, 98.0% idle

CPU 4: 1.2% user, 0.0% nice, 2.0% system, 0.4% interrupt, 96.5% idle

CPU 5: 0.4% user, 0.0% nice, 0.0% system, 0.4% interrupt, 99.2% idle

CPU 6: 0.4% user, 0.0% nice, 0.4% system, 0.0% interrupt, 99.2% idle

CPU 7: 1.2% user, 0.0% nice, 0.0% system, 0.4% interrupt, 98.4% idle

Mem: 509M Active, 187M Inact, 2135M Wired, 28G Free

ARC: 297M Total, 138M MFU, 148M MRU, 140K Anon, 1880K Header, 8130K Other

228M Compressed, 596M Uncompressed, 2.62:1 Ratio

Swap: 4096M Total, 4096M Free

PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND

11 root 187 ki31 0B 128K CPU1 1 6:12 98.71% [idle{idle: cpu1}]

11 root 187 ki31 0B 128K CPU3 3 6:13 97.83% [idle{idle: cpu3}]

11 root 187 ki31 0B 128K CPU4 4 6:18 97.72% [idle{idle: cpu4}]

11 root 187 ki31 0B 128K RUN 6 6:18 97.53% [idle{idle: cpu6}]

11 root 187 ki31 0B 128K CPU2 2 6:13 97.51% [idle{idle: cpu2}]

11 root 187 ki31 0B 128K RUN 5 6:01 97.14% [idle{idle: cpu5}]

11 root 187 ki31 0B 128K CPU7 7 5:59 97.12% [idle{idle: cpu7}]

11 root 187 ki31 0B 128K CPU0 0 5:49 97.08% [idle{idle: cpu0}]

71284 www 24 0 142M 39M kqread 6 0:31 7.91% /usr/local/sbin/haproxy -f /var/etc/haproxy/haproxy.

12 root -60 - 0B 384K WAIT 0 1:00 1.64% [intr{swi1: netisr 1}]

0 root -60 - 0B 2352K - 0 1:48 1.34% [kernel{if_io_tqg_0}]

12 root -60 - 0B 384K WAIT 5 1:09 1.19% [intr{swi1: netisr 7}]

0 root -60 - 0B 2352K - 2 0:35 0.94% [kernel{if_io_tqg_2}]

12 root -60 - 0B 384K WAIT 4 0:28 0.93% [intr{swi1: netisr 5}]

and testing from win11 pc to a proxmox host

PS D:\Downloads\iperf3> .\iperf3.exe -c 192.168.55.21 -P 8 -w 256K -t 50

Connecting to host 192.168.55.21, port 5201

[ 5] local 192.168.30.52 port 62052 connected to 192.168.55.21 port 5201

[ 7] local 192.168.30.52 port 62053 connected to 192.168.55.21 port 5201

[ 9] local 192.168.30.52 port 62054 connected to 192.168.55.21 port 5201

[ 11] local 192.168.30.52 port 62055 connected to 192.168.55.21 port 5201

[ 13] local 192.168.30.52 port 62056 connected to 192.168.55.21 port 5201

[ 15] local 192.168.30.52 port 62057 connected to 192.168.55.21 port 5201

[ 17] local 192.168.30.52 port 62058 connected to 192.168.55.21 port 5201

[ 19] local 192.168.30.52 port 62059 connected to 192.168.55.21 port 5201

[ ID] Interval Transfer Bitrate

[ 5] 0.00-1.01 sec 79.2 MBytes 659 Mbits/sec

[ 7] 0.00-1.01 sec 75.4 MBytes 627 Mbits/sec

[ 9] 0.00-1.01 sec 106 MBytes 885 Mbits/sec

[ 11] 0.00-1.01 sec 96.5 MBytes 802 Mbits/sec

[ 13] 0.00-1.01 sec 86.1 MBytes 716 Mbits/sec

[ 15] 0.00-1.01 sec 101 MBytes 839 Mbits/sec

[ 17] 0.00-1.01 sec 51.1 MBytes 425 Mbits/sec

[ 19] 0.00-1.01 sec 68.2 MBytes 567 Mbits/sec

[SUM] 0.00-1.01 sec 664 MBytes 5.52 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 1.01-2.01 sec 66.4 MBytes 557 Mbits/sec

[ 7] 1.01-2.01 sec 76.8 MBytes 644 Mbits/sec

[ 9] 1.01-2.01 sec 123 MBytes 1.03 Gbits/sec

[ 11] 1.01-2.01 sec 70.2 MBytes 589 Mbits/sec

[ 13] 1.01-2.01 sec 94.0 MBytes 788 Mbits/sec

[ 15] 1.01-2.01 sec 104 MBytes 873 Mbits/sec

[ 17] 1.01-2.01 sec 63.1 MBytes 529 Mbits/sec

[ 19] 1.01-2.01 sec 65.6 MBytes 550 Mbits/sec

[SUM] 1.01-2.01 sec 663 MBytes 5.56 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 2.01-3.01 sec 86.8 MBytes 729 Mbits/sec

[ 7] 2.01-3.01 sec 99.9 MBytes 840 Mbits/sec

[ 9] 2.01-3.01 sec 106 MBytes 891 Mbits/sec

[ 11] 2.01-3.01 sec 110 MBytes 921 Mbits/sec

[ 13] 2.01-3.01 sec 41.9 MBytes 352 Mbits/sec

[ 15] 2.01-3.01 sec 78.2 MBytes 658 Mbits/sec

[ 17] 2.01-3.01 sec 25.2 MBytes 212 Mbits/sec

[ 19] 2.01-3.01 sec 53.8 MBytes 452 Mbits/sec

[SUM] 2.01-3.01 sec 601 MBytes 5.05 Gbits/sec

- - - - - - - - - - - - - - - - - - - - - - - - -

[ 5] 3.01-4.00 sec 75.4 MBytes 635 Mbits/sec

[ 7] 3.01-4.00 sec 84.6 MBytes 713 Mbits/sec

[ 9] 3.01-4.00 sec 102 MBytes 860 Mbits/sec

[ 11] 3.01-4.00 sec 119 MBytes 1.01 Gbits/sec

[ 13] 3.01-4.00 sec 86.4 MBytes 727 Mbits/sec

[ 15] 3.01-4.00 sec 88.1 MBytes 742 Mbits/sec

[ 17] 3.01-4.00 sec 58.6 MBytes 494 Mbits/sec

[ 19] 3.01-4.00 sec 54.4 MBytes 458 Mbits/sec

[SUM] 3.01-4.00 sec 669 MBytes 5.63 Gbits/sec

still 5G

and the top results

last pid: 1910; load averages: 5.12, 2.69, 1.53 up 0+00:11:13 03:52:31

644 threads: 15 running, 599 sleeping, 30 waiting

CPU 0: 0.0% user, 0.0% nice, 97.7% system, 0.4% interrupt, 2.0% idle

CPU 1: 0.8% user, 0.0% nice, 0.8% system, 31.3% interrupt, 67.2% idle

CPU 2: 1.6% user, 0.0% nice, 28.9% system, 15.2% interrupt, 54.3% idle

CPU 3: 0.8% user, 0.0% nice, 0.0% system, 59.8% interrupt, 39.5% idle

CPU 4: 1.2% user, 0.0% nice, 6.3% system, 74.6% interrupt, 18.0% idle

CPU 5: 0.8% user, 0.0% nice, 0.0% system, 35.9% interrupt, 63.3% idle

CPU 6: 0.8% user, 0.0% nice, 17.6% system, 25.4% interrupt, 56.3% idle

CPU 7: 2.3% user, 0.0% nice, 0.8% system, 44.1% interrupt, 52.7% idle

Mem: 507M Active, 188M Inact, 2136M Wired, 28G Free

ARC: 298M Total, 140M MFU, 146M MRU, 917K Anon, 1882K Header, 8181K Other

228M Compressed, 596M Uncompressed, 2.62:1 Ratio

Swap: 4096M Total, 4096M Free

PID USERNAME PRI NICE SIZE RES STATE C TIME WCPU COMMAND

0 root -60 - 0B 2352K CPU0 0 2:43 97.29% [kernel{if_io_tqg_0}]

12 root -60 - 0B 384K CPU4 4 1:45 94.90% [intr{swi1: netisr 1}]

12 root -60 - 0B 384K CPU3 3 2:15 87.69% [intr{swi1: netisr 7}]

11 root 187 ki31 0B 128K RUN 1 8:23 65.80% [idle{idle: cpu1}]

11 root 187 ki31 0B 128K RUN 5 8:05 63.96% [idle{idle: cpu5}]

11 root 187 ki31 0B 128K CPU2 2 8:25 58.48% [idle{idle: cpu2}]

11 root 187 ki31 0B 128K CPU6 6 8:36 57.28% [idle{idle: cpu6}]

11 root 187 ki31 0B 128K CPU7 7 8:04 51.95% [idle{idle: cpu7}]

12 root -60 - 0B 384K WAIT 1 0:47 44.46% [intr{swi1: netisr 4}]

11 root 187 ki31 0B 128K RUN 3 8:23 36.48% [idle{idle: cpu3}]

12 root -60 - 0B 384K WAIT 1 2:06 27.04% [intr{swi1: netisr 6}]

0 root -60 - 0B 2352K CPU2 2 0:52 26.96% [kernel{if_io_tqg_2}]

12 root -60 - 0B 384K WAIT 7 1:02 25.82% [intr{swi1: netisr 5}]

11 root 187 ki31 0B 128K RUN 4 8:23 16.71% [idle{idle: cpu4}]

0 root -60 - 0B 2352K - 6 0:53 16.47% [kernel{if_io_tqg_6}]

71284 www 28 0 142M 39M CPU5 5 0:48 10.20% /usr/local/sbin/haproxy -f /var/etc/haproxy/haproxy.

12 root -60 - 0B 384K WAIT 6 1:06 7.50% [intr{swi1: netisr 2}]

0 root -60 - 0B 2352K RUN 4 0:58 6.71% [kernel{if_io_tqg_4}]

11 root 187 ki31 0B 128K RUN 0 7:46 1.87% [idle{idle: cpu0}]

I still suspect something is happening on the LAG. It should not be that hard to get 10G across VLANs with the right infrastructure, and I sure do have one.
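To make the imbalance easier to see, the per-CPU lines from the loaded top output in this post can be reduced to a busy percentage per core (100 minus idle). This is a minimal sketch; the regular expression assumes the exact line layout shown here, and `busy_by_cpu` is a hypothetical helper.

```python
import re

# Per-CPU lines copied from the top output taken during the iperf3 run.
TOP_CPU_LINES = """\
CPU 0: 0.0% user, 0.0% nice, 97.7% system, 0.4% interrupt, 2.0% idle
CPU 1: 0.8% user, 0.0% nice, 0.8% system, 31.3% interrupt, 67.2% idle
CPU 2: 1.6% user, 0.0% nice, 28.9% system, 15.2% interrupt, 54.3% idle
CPU 3: 0.8% user, 0.0% nice, 0.0% system, 59.8% interrupt, 39.5% idle
CPU 4: 1.2% user, 0.0% nice, 6.3% system, 74.6% interrupt, 18.0% idle
CPU 5: 0.8% user, 0.0% nice, 0.0% system, 35.9% interrupt, 63.3% idle
CPU 6: 0.8% user, 0.0% nice, 17.6% system, 25.4% interrupt, 56.3% idle
CPU 7: 2.3% user, 0.0% nice, 0.8% system, 44.1% interrupt, 52.7% idle
"""

CPU_RE = re.compile(r"CPU\s+(\d+):.*?([\d.]+)% idle")


def busy_by_cpu(text):
    """Return [(cpu, busy_percent)] sorted from busiest to least busy."""
    rows = [(int(cpu), round(100.0 - float(idle), 1))
            for cpu, idle in CPU_RE.findall(text)]
    return sorted(rows, key=lambda row: row[1], reverse=True)


ranking = busy_by_cpu(TOP_CPU_LINES)
for cpu, busy in ranking:
    print(f"CPU {cpu}: {busy:5.1f}% busy")
```

In this snapshot CPU 0 is almost fully busy (mostly system time) while several other cores are more than half idle.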

-

Hmm, are you able to test without the lagg?

Just between the two ixl NICs directly?

-

@stephenw10 yeah, next step would be that. I need to arrange a time frame for a downtime. Will post the results.

-

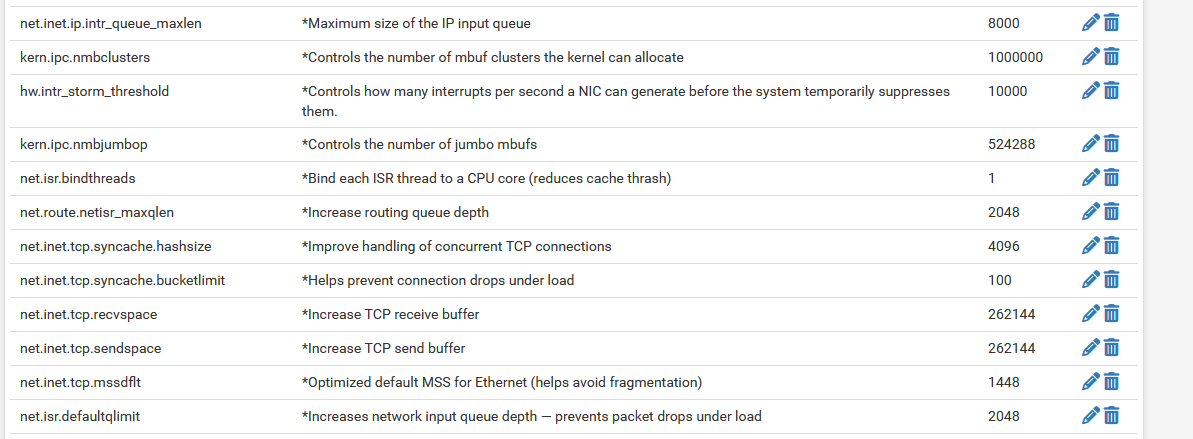

@stephenw10 okay, all the tests done but it was a nightmare

I have done more than disabling the lagg:

- updated the NIC firmware

- updated the BIOS

- reinstalled pfSense with UEFI boot instead of legacy

- set my NIC PCI to EFI instead of legacy

- disabled the lagg; now it is a single SFP28 25G DAC cable

- tuned some system variables

- enabled Hardware TCP Segmentation Offloading

but still cannot get more than 5g.

Next step would be to use the actual 10g onboard NIC to see if the problem is with the 25G card.

any other troubleshooting steps are welcome

maybe this intel NIC does not play nice with pfsense

-

May I suggest using jumbo frames, if you do not use them already?

I changed everything within the 10G part of my network, including the related pfSense lagg, to an MTU of '9000' (net), which did improve the throughput.

I started a discussion about that on the forum a couple of months ago.
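The reason jumbo frames can help is the packet rate: at a fixed bit rate, larger frames mean fewer packets for the firewall to process. The sketch below computes the theoretical upper bound only, assuming the link is saturated with full-size frames; the per-frame overhead is the standard Ethernet header, FCS, preamble and inter-frame gap, and `packets_per_second` is a hypothetical helper.

```python
# Per-frame bytes on the wire beyond the MTU-sized payload:
# 14 (Ethernet header) + 4 (FCS) + 8 (preamble and SFD) + 12 (inter-frame gap)
ETH_OVERHEAD = 14 + 4 + 8 + 12
VLAN_TAG = 4  # extra bytes when the frame carries an 802.1Q tag


def packets_per_second(link_bps, mtu, vlan=False):
    """Upper bound on full-size frames per second at the given line rate."""
    wire_bytes = mtu + ETH_OVERHEAD + (VLAN_TAG if vlan else 0)
    return link_bps / (wire_bytes * 8)


for mtu in (1500, 9000):
    pps = packets_per_second(10e9, mtu)
    print(f"MTU {mtu}: about {pps:,.0f} packets/s at 10 Gbit/s")
```

Going from MTU 1500 to 9000 cuts the packet rate by a factor of roughly six at the same bit rate, which only helps if every device on the path supports the larger MTU.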

-

Do you still see one CPU core hitting 100% while the others are 50% idle?

-

@stephenw10 I think it is hitting all the cores.

-

@stephenw10 said in Cannot Achieve 10g pfsense bottleneck:

I wouldn't expect to see much advantage to anything over 8 streams when you have 8 CPU cores.

The CPU has only 4 real cores; the other 4 are SMT placebos, which don't help in terms of packet processing. Most likely they are slowing things down. I'd suggest disabling SMT / HT entirely in the BIOS and configuring the NIC queues down to 4. Also disable any other unneeded features like SR-IOV. My guess is you won't run any VMs on the machine and pass NICs through to them.

The power management configuration of your system also affects the maximum possible throughput. From your first post I can see that your CPU is running at the 2.2GHz base frequency. This is good for constant latency and throughput. Allowing the CPU to scale down the frequency introduces more variance in latency, but it allows it to run longer at the boost clock (3GHz), which can process more interrupts and deliver more throughput. It may also make things worse: on some systems I had good results in terms of throughput with that, on others I didn't. It depends on the CPU and NICs.

The last thing I would check is how the interrupt distribution of the nic queues is under network load. As far as I remember you can check this with vmstat -i and systat -vmstat 1

Do you have net.isr.dispatch set to deferred? If yes and you don't have any PPPoE connection in use, you want to try the default dispatcher (direct)

-

@Laxarus

No errors logged anywhere?

netstat -I ixl0 -w1

sysctl dev.ixl.0

Try a live Linux distribution, like Ubuntu, RHEL or Debian, or just install pfSense in Proxmox without any PCIe passthrough, use the card ports as virtual switches, one port per switch, and test again with iperf. If you hit the same limit there, you will need to go deeper.

-

@louis2 I tested jumbo frames, but it made no difference, and my network has too many different link speeds, so I have to stay at MTU 1500.

@Averlon said in Cannot Achieve 10g pfsense bottleneck:

The CPU has only 4 real cores; the other 4 are SMT placebos, which don't help in terms of packet processing. Most likely they are slowing things down. I'd suggest disabling SMT / HT entirely in the BIOS and configuring the NIC queues down to 4. Also disable any other unneeded features like SR-IOV. My guess is you won't run any VMs on the machine and pass NICs through to them.

I tried toggling the SR-IOV, did not see any difference.

I enabled "Extended APIC"; "Above 4G Decoding" is enabled by default.

I did not try disabling SMT/HT or playing with power settings. Currently, it is set to the "Energy Efficient" profile, so no custom profiles yet.

@Averlon said in Cannot Achieve 10g pfsense bottleneck:

The last thing I would check is how the interrupt distribution of the nic queues is under network load. As far as I remember you can check this with vmstat -i and systat -vmstat 1

It seems evenly distributed to me. (this was during a running iperf3 test)

2 users Load 1.99 2.31 1.79 Oct 25 22:47:13 Mem usage: 8%Phy 5%Kmem VN PAGER SWAP PAGER Mem: REAL VIRTUAL in out in out Tot Share Tot Share Free count 26 Act 685M 62444K 4613G 93312K 28840M pages 39 All 701M 78508K 4614G 366M ioflt Interrupts Proc: 3847 cow 53387 total r p d s w Csw Trp Sys Int Sof Flt 17K zfod 1129 cpu0:timer 320 132K 21K 14K 43K 23K 21K ozfod 1133 cpu1:timer %ozfod 1131 cpu2:timer 10.1%Sys 39.5%Intr 0.9%User 2.5%Nice 47.0%Idle daefr 1129 cpu3:timer | | | | | | | | | | | 19K prcfr 1132 cpu4:timer =====++++++++++++++++++++- 26K totfr 1129 cpu5:timer dtbuf react 1131 cpu6:timer Namei Name-cache Dir-cache 615219 maxvn pdwak 1134 cpu7:timer Calls hits % hits % 4989 numvn 90 pdpgs 23 xhci0 65 4569 4569 100 3402 frevn 33 intrn ixl0:aq 67 1829M wire 7733 ixl0:rxq0 Disks nda0 pass0 pass1 pass2 220M act 5309 ixl0:rxq1 KB/t 0.00 0.00 0.00 0.00 551M inact 18 ixl0:rxq2 tps 0 0 0 0 0 laund 4948 ixl0:rxq3 MB/s 0.00 0.00 0.00 0.00 28G free 7413 ixl0:rxq4 %busy 0 0 0 0 0 buf 7284 ixl0:rxq5 4568 ixl0:rxq6 6949 ixl0:rxq7 nvme0:admi nvme0:io0 nvme0:io1 nvme0:io2 nvme0:io3

@Averlon said in Cannot Achieve 10g pfsense bottleneck:

Do you have net.isr.dispatch set to deferred? If yes and you don't have any PPPoE connection in use, you want to try the default dispatcher (direct)

It is set to direct by default; I did not touch that. My WAN is PPPoE, but I'm not testing WAN connections anyway.

@w0w said in Cannot Achieve 10g pfsense bottleneck:

No errors logged anywhere?

netstat -I ixl0 -w1

sysctl dev.ixl.0

no errors at all.

dev.ixl.0.mac.xoff_recvd: 0 dev.ixl.0.mac.xoff_txd: 0 dev.ixl.0.mac.xon_recvd: 0 dev.ixl.0.mac.xon_txd: 0 dev.ixl.0.mac.tx_frames_big: 0 dev.ixl.0.mac.tx_frames_1024_1522: 375614975 dev.ixl.0.mac.tx_frames_512_1023: 16690370 dev.ixl.0.mac.tx_frames_256_511: 13612270 dev.ixl.0.mac.tx_frames_128_255: 4052150 dev.ixl.0.mac.tx_frames_65_127: 152914345 dev.ixl.0.mac.tx_frames_64: 500265 dev.ixl.0.mac.checksum_errors: 3 dev.ixl.0.mac.rx_jabber: 0 dev.ixl.0.mac.rx_oversized: 0 dev.ixl.0.mac.rx_fragmented: 0 dev.ixl.0.mac.rx_undersize: 0 dev.ixl.0.mac.rx_frames_big: 0 dev.ixl.0.mac.rx_frames_1024_1522: 377904852 dev.ixl.0.mac.rx_frames_512_1023: 16329494 dev.ixl.0.mac.rx_frames_256_511: 14111348 dev.ixl.0.mac.rx_frames_128_255: 3145926 dev.ixl.0.mac.rx_frames_65_127: 138214041 dev.ixl.0.mac.rx_frames_64: 184281 dev.ixl.0.mac.rx_length_errors: 0 dev.ixl.0.mac.remote_faults: 0 dev.ixl.0.mac.local_faults: 2 dev.ixl.0.mac.illegal_bytes: 0 dev.ixl.0.mac.crc_errors: 0 dev.ixl.0.mac.bcast_pkts_txd: 46661 dev.ixl.0.mac.mcast_pkts_txd: 644978 dev.ixl.0.mac.ucast_pkts_txd: 562692736 dev.ixl.0.mac.good_octets_txd: 593759862696 dev.ixl.0.mac.rx_discards: 0 dev.ixl.0.mac.bcast_pkts_rcvd: 75168 dev.ixl.0.mac.mcast_pkts_rcvd: 280282 dev.ixl.0.mac.ucast_pkts_rcvd: 549534492 dev.ixl.0.mac.good_octets_rcvd: 596407236239 dev.ixl.0.pf.txq07.itr: 122 dev.ixl.0.pf.txq07.bytes: 41143051364 dev.ixl.0.pf.txq07.packets: 47973936 dev.ixl.0.pf.txq07.mss_too_small: 0 dev.ixl.0.pf.txq07.tso: 28225 dev.ixl.0.pf.txq06.itr: 122 dev.ixl.0.pf.txq06.bytes: 66474474763 dev.ixl.0.pf.txq06.packets: 57167819 dev.ixl.0.pf.txq06.mss_too_small: 0 dev.ixl.0.pf.txq06.tso: 101247 dev.ixl.0.pf.txq05.itr: 122 dev.ixl.0.pf.txq05.bytes: 87535602506 dev.ixl.0.pf.txq05.packets: 73720437 dev.ixl.0.pf.txq05.mss_too_small: 0 dev.ixl.0.pf.txq05.tso: 365106 dev.ixl.0.pf.txq04.itr: 122 dev.ixl.0.pf.txq04.bytes: 70329303337 dev.ixl.0.pf.txq04.packets: 58308835 dev.ixl.0.pf.txq04.mss_too_small: 0 dev.ixl.0.pf.txq04.tso: 103427 
dev.ixl.0.pf.txq03.itr: 122 dev.ixl.0.pf.txq03.bytes: 86678345387 dev.ixl.0.pf.txq03.packets: 77585525 dev.ixl.0.pf.txq03.mss_too_small: 0 dev.ixl.0.pf.txq03.tso: 79961 dev.ixl.0.pf.txq02.itr: 122 dev.ixl.0.pf.txq02.bytes: 63402843222 dev.ixl.0.pf.txq02.packets: 61088681 dev.ixl.0.pf.txq02.mss_too_small: 0 dev.ixl.0.pf.txq02.tso: 133040 dev.ixl.0.pf.txq01.itr: 122 dev.ixl.0.pf.txq01.bytes: 97729964394 dev.ixl.0.pf.txq01.packets: 101429107 dev.ixl.0.pf.txq01.mss_too_small: 0 dev.ixl.0.pf.txq01.tso: 1743 dev.ixl.0.pf.txq00.itr: 122 dev.ixl.0.pf.txq00.bytes: 75454052806 dev.ixl.0.pf.txq00.packets: 78235275 dev.ixl.0.pf.txq00.mss_too_small: 0 dev.ixl.0.pf.txq00.tso: 16482 dev.ixl.0.pf.rxq07.itr: 62 dev.ixl.0.pf.rxq07.desc_err: 0 dev.ixl.0.pf.rxq07.bytes: 42061911478 dev.ixl.0.pf.rxq07.packets: 46161672 dev.ixl.0.pf.rxq07.irqs: 18370252 dev.ixl.0.pf.rxq06.itr: 62 dev.ixl.0.pf.rxq06.desc_err: 0 dev.ixl.0.pf.rxq06.bytes: 65114634180 dev.ixl.0.pf.rxq06.packets: 57147031 dev.ixl.0.pf.rxq06.irqs: 17435678 dev.ixl.0.pf.rxq05.itr: 62 dev.ixl.0.pf.rxq05.desc_err: 0 dev.ixl.0.pf.rxq05.bytes: 83977364285 dev.ixl.0.pf.rxq05.packets: 72607299 dev.ixl.0.pf.rxq05.irqs: 24830962 dev.ixl.0.pf.rxq04.itr: 62 dev.ixl.0.pf.rxq04.desc_err: 0 dev.ixl.0.pf.rxq04.bytes: 70413959352 dev.ixl.0.pf.rxq04.packets: 57951469 dev.ixl.0.pf.rxq04.irqs: 13634250 dev.ixl.0.pf.rxq03.itr: 62 dev.ixl.0.pf.rxq03.desc_err: 0 dev.ixl.0.pf.rxq03.bytes: 88489465483 dev.ixl.0.pf.rxq03.packets: 76718350 dev.ixl.0.pf.rxq03.irqs: 20560555 dev.ixl.0.pf.rxq02.itr: 62 dev.ixl.0.pf.rxq02.desc_err: 0 dev.ixl.0.pf.rxq02.bytes: 64640643506 dev.ixl.0.pf.rxq02.packets: 60418291 dev.ixl.0.pf.rxq02.irqs: 28675547 dev.ixl.0.pf.rxq01.itr: 62 dev.ixl.0.pf.rxq01.desc_err: 0 dev.ixl.0.pf.rxq01.bytes: 73019041590 dev.ixl.0.pf.rxq01.packets: 75989766 dev.ixl.0.pf.rxq01.irqs: 30805377 dev.ixl.0.pf.rxq00.itr: 62 dev.ixl.0.pf.rxq00.desc_err: 0 dev.ixl.0.pf.rxq00.bytes: 104282185425 dev.ixl.0.pf.rxq00.packets: 102756379 
dev.ixl.0.pf.rxq00.irqs: 34272354 dev.ixl.0.pf.rx_errors: 3 dev.ixl.0.pf.bcast_pkts_txd: 46661 dev.ixl.0.pf.mcast_pkts_txd: 652008 dev.ixl.0.pf.ucast_pkts_txd: 562692736 dev.ixl.0.pf.good_octets_txd: 591509013288 dev.ixl.0.pf.rx_discards: 4294964835 dev.ixl.0.pf.bcast_pkts_rcvd: 76284 dev.ixl.0.pf.mcast_pkts_rcvd: 142100 dev.ixl.0.pf.ucast_pkts_rcvd: 549533766 dev.ixl.0.pf.good_octets_rcvd: 596393803623 dev.ixl.0.admin_irq: 4 dev.ixl.0.link_active_on_if_down: 1 dev.ixl.0.eee.rx_lpi_count: 0 dev.ixl.0.eee.tx_lpi_count: 0 dev.ixl.0.eee.rx_lpi_status: 0 dev.ixl.0.eee.tx_lpi_status: 0 dev.ixl.0.eee.enable: 0 dev.ixl.0.fw_lldp: 1 dev.ixl.0.fec.auto_fec_enabled: 1 dev.ixl.0.fec.rs_requested: 1 dev.ixl.0.fec.fc_requested: 1 dev.ixl.0.fec.rs_ability: 1 dev.ixl.0.fec.fc_ability: 1 dev.ixl.0.dynamic_tx_itr: 0 dev.ixl.0.dynamic_rx_itr: 0 dev.ixl.0.rx_itr: 62 dev.ixl.0.tx_itr: 122 dev.ixl.0.unallocated_queues: 760 dev.ixl.0.fw_version: fw 9.153.78577 api 1.15 nvm 9.53 etid 8000fcfe oem 1.270.0 dev.ixl.0.current_speed: 25 Gbps dev.ixl.0.supported_speeds: 22 dev.ixl.0.advertise_speed: 22 dev.ixl.0.fc: 0 dev.ixl.0.iflib.rxq7.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq7.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq7.rxq_fl0.cidx: 1800 dev.ixl.0.iflib.rxq7.rxq_fl0.pidx: 1799 dev.ixl.0.iflib.rxq7.cpu: 6 dev.ixl.0.iflib.rxq6.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq6.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq6.rxq_fl0.cidx: 1693 dev.ixl.0.iflib.rxq6.rxq_fl0.pidx: 1692 dev.ixl.0.iflib.rxq6.cpu: 4 dev.ixl.0.iflib.rxq5.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq5.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq5.rxq_fl0.cidx: 1603 dev.ixl.0.iflib.rxq5.rxq_fl0.pidx: 1602 dev.ixl.0.iflib.rxq5.cpu: 2 dev.ixl.0.iflib.rxq4.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq4.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq4.rxq_fl0.cidx: 1261 dev.ixl.0.iflib.rxq4.rxq_fl0.pidx: 1260 dev.ixl.0.iflib.rxq4.cpu: 0 dev.ixl.0.iflib.rxq3.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq3.rxq_fl0.credits: 2047 
dev.ixl.0.iflib.rxq3.rxq_fl0.cidx: 270 dev.ixl.0.iflib.rxq3.rxq_fl0.pidx: 269 dev.ixl.0.iflib.rxq3.cpu: 6 dev.ixl.0.iflib.rxq2.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq2.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq2.rxq_fl0.cidx: 243 dev.ixl.0.iflib.rxq2.rxq_fl0.pidx: 242 dev.ixl.0.iflib.rxq2.cpu: 4 dev.ixl.0.iflib.rxq1.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq1.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq1.rxq_fl0.cidx: 774 dev.ixl.0.iflib.rxq1.rxq_fl0.pidx: 773 dev.ixl.0.iflib.rxq1.cpu: 2 dev.ixl.0.iflib.rxq0.rxq_fl0.buf_size: 2048 dev.ixl.0.iflib.rxq0.rxq_fl0.credits: 2047 dev.ixl.0.iflib.rxq0.rxq_fl0.cidx: 27 dev.ixl.0.iflib.rxq0.rxq_fl0.pidx: 26 dev.ixl.0.iflib.rxq0.cpu: 0 dev.ixl.0.iflib.txq7.r_abdications: 0 dev.ixl.0.iflib.txq7.r_restarts: 0 dev.ixl.0.iflib.txq7.r_stalls: 0 dev.ixl.0.iflib.txq7.r_starts: 47971641 dev.ixl.0.iflib.txq7.r_drops: 0 dev.ixl.0.iflib.txq7.r_enqueues: 48007467 dev.ixl.0.iflib.txq7.ring_state: pidx_head: 0299 pidx_tail: 0299 cidx: 0299 state: IDLE dev.ixl.0.iflib.txq7.txq_cleaned: 48236471 dev.ixl.0.iflib.txq7.txq_processed: 48236479 dev.ixl.0.iflib.txq7.txq_in_use: 8 dev.ixl.0.iflib.txq7.txq_cidx_processed: 1983 dev.ixl.0.iflib.txq7.txq_cidx: 1975 dev.ixl.0.iflib.txq7.txq_pidx: 1983 dev.ixl.0.iflib.txq7.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq7.txd_encap_efbig: 0 dev.ixl.0.iflib.txq7.tx_map_failed: 0 dev.ixl.0.iflib.txq7.no_desc_avail: 0 dev.ixl.0.iflib.txq7.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq7.m_pullups: 1377 dev.ixl.0.iflib.txq7.mbuf_defrag: 0 dev.ixl.0.iflib.txq7.cpu: 6 dev.ixl.0.iflib.txq6.r_abdications: 0 dev.ixl.0.iflib.txq6.r_restarts: 0 dev.ixl.0.iflib.txq6.r_stalls: 0 dev.ixl.0.iflib.txq6.r_starts: 57165056 dev.ixl.0.iflib.txq6.r_drops: 0 dev.ixl.0.iflib.txq6.r_enqueues: 57232644 dev.ixl.0.iflib.txq6.ring_state: pidx_head: 1285 pidx_tail: 1285 cidx: 1285 state: IDLE dev.ixl.0.iflib.txq6.txq_cleaned: 57913397 dev.ixl.0.iflib.txq6.txq_processed: 57913406 dev.ixl.0.iflib.txq6.txq_in_use: 9 
dev.ixl.0.iflib.txq6.txq_cidx_processed: 65 dev.ixl.0.iflib.txq6.txq_cidx: 58 dev.ixl.0.iflib.txq6.txq_pidx: 68 dev.ixl.0.iflib.txq6.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq6.txd_encap_efbig: 0 dev.ixl.0.iflib.txq6.tx_map_failed: 0 dev.ixl.0.iflib.txq6.no_desc_avail: 0 dev.ixl.0.iflib.txq6.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq6.m_pullups: 127 dev.ixl.0.iflib.txq6.mbuf_defrag: 0 dev.ixl.0.iflib.txq6.cpu: 4 dev.ixl.0.iflib.txq5.r_abdications: 0 dev.ixl.0.iflib.txq5.r_restarts: 0 dev.ixl.0.iflib.txq5.r_stalls: 0 dev.ixl.0.iflib.txq5.r_starts: 73716508 dev.ixl.0.iflib.txq5.r_drops: 0 dev.ixl.0.iflib.txq5.r_enqueues: 73782066 dev.ixl.0.iflib.txq5.ring_state: pidx_head: 0818 pidx_tail: 0818 cidx: 0818 state: IDLE dev.ixl.0.iflib.txq5.txq_cleaned: 76713335 dev.ixl.0.iflib.txq5.txq_processed: 76713343 dev.ixl.0.iflib.txq5.txq_in_use: 8 dev.ixl.0.iflib.txq5.txq_cidx_processed: 1407 dev.ixl.0.iflib.txq5.txq_cidx: 1399 dev.ixl.0.iflib.txq5.txq_pidx: 1407 dev.ixl.0.iflib.txq5.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq5.txd_encap_efbig: 0 dev.ixl.0.iflib.txq5.tx_map_failed: 0 dev.ixl.0.iflib.txq5.no_desc_avail: 0 dev.ixl.0.iflib.txq5.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq5.m_pullups: 716 dev.ixl.0.iflib.txq5.mbuf_defrag: 0 dev.ixl.0.iflib.txq5.cpu: 2 dev.ixl.0.iflib.txq4.r_abdications: 0 dev.ixl.0.iflib.txq4.r_restarts: 0 dev.ixl.0.iflib.txq4.r_stalls: 0 dev.ixl.0.iflib.txq4.r_starts: 58307559 dev.ixl.0.iflib.txq4.r_drops: 0 dev.ixl.0.iflib.txq4.r_enqueues: 58369606 dev.ixl.0.iflib.txq4.ring_state: pidx_head: 1606 pidx_tail: 1606 cidx: 1606 state: IDLE dev.ixl.0.iflib.txq4.txq_cleaned: 59003490 dev.ixl.0.iflib.txq4.txq_processed: 59003498 dev.ixl.0.iflib.txq4.txq_in_use: 8 dev.ixl.0.iflib.txq4.txq_cidx_processed: 618 dev.ixl.0.iflib.txq4.txq_cidx: 610 dev.ixl.0.iflib.txq4.txq_pidx: 618 dev.ixl.0.iflib.txq4.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq4.txd_encap_efbig: 0 dev.ixl.0.iflib.txq4.tx_map_failed: 0 dev.ixl.0.iflib.txq4.no_desc_avail: 0 
dev.ixl.0.iflib.txq4.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq4.m_pullups: 86 dev.ixl.0.iflib.txq4.mbuf_defrag: 0 dev.ixl.0.iflib.txq4.cpu: 0 dev.ixl.0.iflib.txq3.r_abdications: 0 dev.ixl.0.iflib.txq3.r_restarts: 0 dev.ixl.0.iflib.txq3.r_stalls: 0 dev.ixl.0.iflib.txq3.r_starts: 77583619 dev.ixl.0.iflib.txq3.r_drops: 0 dev.ixl.0.iflib.txq3.r_enqueues: 77663516 dev.ixl.0.iflib.txq3.ring_state: pidx_head: 1308 pidx_tail: 1308 cidx: 1308 state: IDLE dev.ixl.0.iflib.txq3.txq_cleaned: 78294052 dev.ixl.0.iflib.txq3.txq_processed: 78294060 dev.ixl.0.iflib.txq3.txq_in_use: 8 dev.ixl.0.iflib.txq3.txq_cidx_processed: 1068 dev.ixl.0.iflib.txq3.txq_cidx: 1060 dev.ixl.0.iflib.txq3.txq_pidx: 1068 dev.ixl.0.iflib.txq3.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq3.txd_encap_efbig: 0 dev.ixl.0.iflib.txq3.tx_map_failed: 0 dev.ixl.0.iflib.txq3.no_desc_avail: 0 dev.ixl.0.iflib.txq3.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq3.m_pullups: 409 dev.ixl.0.iflib.txq3.mbuf_defrag: 0 dev.ixl.0.iflib.txq3.cpu: 6 dev.ixl.0.iflib.txq2.r_abdications: 0 dev.ixl.0.iflib.txq2.r_restarts: 0 dev.ixl.0.iflib.txq2.r_stalls: 0 dev.ixl.0.iflib.txq2.r_starts: 61084731 dev.ixl.0.iflib.txq2.r_drops: 0 dev.ixl.0.iflib.txq2.r_enqueues: 61139632 dev.ixl.0.iflib.txq2.ring_state: pidx_head: 0688 pidx_tail: 0688 cidx: 0688 state: IDLE dev.ixl.0.iflib.txq2.txq_cleaned: 61925883 dev.ixl.0.iflib.txq2.txq_processed: 61925891 dev.ixl.0.iflib.txq2.txq_in_use: 8 dev.ixl.0.iflib.txq2.txq_cidx_processed: 515 dev.ixl.0.iflib.txq2.txq_cidx: 507 dev.ixl.0.iflib.txq2.txq_pidx: 515 dev.ixl.0.iflib.txq2.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq2.txd_encap_efbig: 0 dev.ixl.0.iflib.txq2.tx_map_failed: 0 dev.ixl.0.iflib.txq2.no_desc_avail: 0 dev.ixl.0.iflib.txq2.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq2.m_pullups: 550 dev.ixl.0.iflib.txq2.mbuf_defrag: 0 dev.ixl.0.iflib.txq2.cpu: 4 dev.ixl.0.iflib.txq1.r_abdications: 0 dev.ixl.0.iflib.txq1.r_restarts: 0 dev.ixl.0.iflib.txq1.r_stalls: 0 dev.ixl.0.iflib.txq1.r_starts: 101436836 
dev.ixl.0.iflib.txq1.r_drops: 0 dev.ixl.0.iflib.txq1.r_enqueues: 101537592 dev.ixl.0.iflib.txq1.ring_state: pidx_head: 1848 pidx_tail: 1848 cidx: 1848 state: IDLE dev.ixl.0.iflib.txq1.txq_cleaned: 101552637 dev.ixl.0.iflib.txq1.txq_processed: 101552645 dev.ixl.0.iflib.txq1.txq_in_use: 8 dev.ixl.0.iflib.txq1.txq_cidx_processed: 517 dev.ixl.0.iflib.txq1.txq_cidx: 509 dev.ixl.0.iflib.txq1.txq_pidx: 517 dev.ixl.0.iflib.txq1.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq1.txd_encap_efbig: 0 dev.ixl.0.iflib.txq1.tx_map_failed: 0 dev.ixl.0.iflib.txq1.no_desc_avail: 0 dev.ixl.0.iflib.txq1.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq1.m_pullups: 1089 dev.ixl.0.iflib.txq1.mbuf_defrag: 0 dev.ixl.0.iflib.txq1.cpu: 2 dev.ixl.0.iflib.txq0.r_abdications: 1 dev.ixl.0.iflib.txq0.r_restarts: 0 dev.ixl.0.iflib.txq0.r_stalls: 0 dev.ixl.0.iflib.txq0.r_starts: 78227423 dev.ixl.0.iflib.txq0.r_drops: 0 dev.ixl.0.iflib.txq0.r_enqueues: 78277869 dev.ixl.0.iflib.txq0.ring_state: pidx_head: 1261 pidx_tail: 1261 cidx: 1261 state: IDLE dev.ixl.0.iflib.txq0.txq_cleaned: 78508028 dev.ixl.0.iflib.txq0.txq_processed: 78508036 dev.ixl.0.iflib.txq0.txq_in_use: 8 dev.ixl.0.iflib.txq0.txq_cidx_processed: 4 dev.ixl.0.iflib.txq0.txq_cidx: 2044 dev.ixl.0.iflib.txq0.txq_pidx: 4 dev.ixl.0.iflib.txq0.no_tx_dma_setup: 0 dev.ixl.0.iflib.txq0.txd_encap_efbig: 0 dev.ixl.0.iflib.txq0.tx_map_failed: 0 dev.ixl.0.iflib.txq0.no_desc_avail: 0 dev.ixl.0.iflib.txq0.mbuf_defrag_failed: 0 dev.ixl.0.iflib.txq0.m_pullups: 484 dev.ixl.0.iflib.txq0.mbuf_defrag: 0 dev.ixl.0.iflib.txq0.cpu: 0 dev.ixl.0.iflib.override_nrxds: 2048 dev.ixl.0.iflib.override_ntxds: 2048 dev.ixl.0.iflib.allocated_msix_vectors: 9 dev.ixl.0.iflib.use_extra_msix_vectors: 0 dev.ixl.0.iflib.use_logical_cores: 0 dev.ixl.0.iflib.separate_txrx: 0 dev.ixl.0.iflib.core_offset: 0 dev.ixl.0.iflib.tx_abdicate: 0 dev.ixl.0.iflib.rx_budget: 0 dev.ixl.0.iflib.disable_msix: 0 dev.ixl.0.iflib.override_qs_enable: 0 dev.ixl.0.iflib.override_nrxqs: 8 dev.ixl.0.iflib.override_ntxqs: 
8 dev.ixl.0.iflib.driver_version: 2.3.3-k dev.ixl.0.%domain: 0 dev.ixl.0.%iommu: rid=0x6500 dev.ixl.0.%parent: pci7 dev.ixl.0.%pnpinfo: vendor=0x8086 device=0x158b subvendor=0x15d9 subdevice=0x0978 class=0x020000 dev.ixl.0.%location: slot=0 function=0 dbsf=pci0:101:0:0 dev.ixl.0.%driver: ixl dev.ixl.0.%desc: Intel(R) Ethernet Controller XXV710 for 25GbE SFP28 - 2.3.3-k

Is it possible that there are too many VLANs on the network and too many rules/packets for my CPU to process during high-speed transfer spikes?

Currently 13 vlans + LAN on the same cable with various rules.
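One way to read the sysctl dump in this post is to combine the per-queue receive counters with the queue-to-CPU assignment that iflib reports. The sketch below uses only values copied from that dump. The counters are cumulative since boot, so they describe all traffic, not just the iperf3 run, and the result is a summary of the data rather than a diagnosis.

```python
import re
from collections import defaultdict

# Lines copied from the `sysctl dev.ixl.0` output in this post.
SYSCTL_TEXT = """\
dev.ixl.0.pf.rxq07.packets: 46161672
dev.ixl.0.pf.rxq06.packets: 57147031
dev.ixl.0.pf.rxq05.packets: 72607299
dev.ixl.0.pf.rxq04.packets: 57951469
dev.ixl.0.pf.rxq03.packets: 76718350
dev.ixl.0.pf.rxq02.packets: 60418291
dev.ixl.0.pf.rxq01.packets: 75989766
dev.ixl.0.pf.rxq00.packets: 102756379
dev.ixl.0.iflib.rxq7.cpu: 6
dev.ixl.0.iflib.rxq6.cpu: 4
dev.ixl.0.iflib.rxq5.cpu: 2
dev.ixl.0.iflib.rxq4.cpu: 0
dev.ixl.0.iflib.rxq3.cpu: 6
dev.ixl.0.iflib.rxq2.cpu: 4
dev.ixl.0.iflib.rxq1.cpu: 2
dev.ixl.0.iflib.rxq0.cpu: 0
"""

# Queue number -> received packets, and queue number -> assigned CPU.
packets = {int(q): int(n)
           for q, n in re.findall(r"\.pf\.rxq(\d+)\.packets: (\d+)", SYSCTL_TEXT)}
cpu_of = {int(q): int(c)
          for q, c in re.findall(r"\.iflib\.rxq(\d+)\.cpu: (\d+)", SYSCTL_TEXT)}

# Add up the packets handled by each CPU across the queues bound to it.
per_cpu = defaultdict(int)
for queue, count in packets.items():
    per_cpu[cpu_of[queue]] += count

total = sum(packets.values())
for cpu in sorted(per_cpu):
    print(f"CPU {cpu}: {per_cpu[cpu] / total:.1%} of received packets")
```

With these values the eight receive queues are bound to only four CPUs (0, 2, 4 and 6), two queues per CPU, which may be worth keeping in mind when comparing against the per-core load in top.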