Netgate 6100 Crash On Interface Change - Not Resolved (IPv6 + PPPoE)

-

@stephenw10

There should be 4 back-traces shown above, but I have full captures for all 4 events that I can send to you, if that would help. I hope you remember that it's a Bank Holiday, Steve, so feel free to enjoy it instead.

Rob

-

-

We think we have found the cause here and have a test kernel to confirm it. It is only a test at this point though.

Are you able to test that? So far we have not been able to replicate this locally.

-

@stephenw10 said in Netgate 6100 Crash On Interface Change - Not Resolved (IPv6 + PPPoE):

Are you able to test that?

Yes, of course. If you tell me how to load it, what logging verbosity to use, and which log extracts you would like to receive, I will test it as soon as the network traffic allows.

Do you have an overview of the kernel changes?

-

The changes here are to the interface handling in the kernel, so mostly to sys/net/if.c and sys/netinet6/in6.c.

They are a test only at this point and would need streamlining before being included upstream. But running this should prove whether this is what you're hitting.

Test Kernel:

Removed after finding a new bug.

Move /boot/kernel to a backup:

    mv /boot/kernel /boot/kernel.old2

Upload the tgz to /boot and then extract it there:

    tar -xzf kernel-amd64-inet6-panic.tgz

Then reboot into that. Confirm you're running that after booting:

    [23.05-RELEASE][root@6100-3.stevew.lan]/root: uname -a
    FreeBSD 6100-3.stevew.lan 14.0-CURRENT FreeBSD 14.0-CURRENT inet6_backport-n256104-f5556386d38 pfsense-NODEBUG amd64

I've been running it here with no problem, but I couldn't hit the issue beforehand, so have a recovery solution in place!
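For anyone repeating these steps, here is a minimal sketch of the same swap wrapped in two shell functions, plus one possible way back. The function names, the `kernel.test` directory used on rollback, and the assumption that the tarball unpacks to a top-level `kernel/` directory are all illustrative and not part of the instructions above.

```sh
#!/bin/sh
# Sketch only: function names, "kernel.test" and the tarball layout are assumptions.

# Back up the current kernel directory, then unpack the test kernel tarball.
# $1 = boot directory (defaults to /boot), $2 = path to the .tgz
install_test_kernel() {
    boot_dir=${1:-/boot}
    tarball=$2
    [ -d "$boot_dir/kernel" ] || { echo "no kernel dir in $boot_dir" >&2; return 1; }
    [ -e "$boot_dir/kernel.old2" ] && { echo "backup already exists" >&2; return 1; }
    [ -f "$tarball" ] || { echo "tarball not found: $tarball" >&2; return 1; }
    mv "$boot_dir/kernel" "$boot_dir/kernel.old2" || return 1
    # Assumes the archive contains a top-level kernel/ directory.
    if ! tar -xzf "$tarball" -C "$boot_dir"; then
        # Extraction failed: put the original kernel back straight away.
        rm -rf "$boot_dir/kernel"
        mv "$boot_dir/kernel.old2" "$boot_dir/kernel"
        return 1
    fi
}

# Restore the backed-up kernel, keeping the test kernel aside as kernel.test.
# $1 = boot directory (defaults to /boot)
rollback_kernel() {
    boot_dir=${1:-/boot}
    [ -d "$boot_dir/kernel.old2" ] || { echo "no backup to restore" >&2; return 1; }
    rm -rf "$boot_dir/kernel.test"
    [ -d "$boot_dir/kernel" ] && mv "$boot_dir/kernel" "$boot_dir/kernel.test"
    mv "$boot_dir/kernel.old2" "$boot_dir/kernel"
}
```

Usage would be along the lines of `install_test_kernel /boot /boot/kernel-amd64-inet6-panic.tgz`, followed by a reboot and the `uname -a` check; `rollback_kernel /boot` followed by another reboot returns to the original kernel.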

If you can test that and it no longer hits the issue, we can get a long-term solution upstreamed.

Steve

-

We found a bug in that test code and are working on something new.

I don't recommend running that yet.

-

@stephenw10 said in Netgate 6100 Crash On Interface Change - Not Resolved (IPv6 + PPPoE):

I don't recommend running that yet.

Ok, understood.

-

Hi Steve,

Any progress with this issue? I am close to removing the Netgate 6100 from production use. The recent changes do not appear to have changed anything of note, although the more random reboots no longer produce a crash log. There has not been much encouraging news on the associated Redmine tracker either.

I'm not looking to dispose of or return the 6100 when I change to a different vendor, so it will be available for testing. I'm hoping to remain with Netgate, but I am sure you appreciate that as a UK user the issue with PPPoE / IPv6 cannot be sustained.

-

Were you able to test a 23.09 snapshot?

Indeed, I agree that here in the UK that's a combo many, many people are running. Including me.

-

@stephenw10 said in Netgate 6100 Crash On Interface Change - Not Resolved (IPv6 + PPPoE):

Were you able to test a 23.09 snapshot?

No, I didn't, for a couple of reasons. The first is a general reluctance to run snapshots on a live production network; the second is that no changes listed in v23.09 suggest a possible fix.

A month ago Kristof Provost posted that he was unable to reproduce the fault, which was a concern.

That said, if you think a particular snapshot might help or at least add useful data please drop me a link and I will give it a go.

-

Mainly because if you can replicate it in 23.09 then we can test with that to try and replicate it here. Otherwise we need to keep trying in 23.05.1, and that makes it more difficult because all the development is in 23.09 at the moment. And there's a chance it's already fixed in 23.09, though I agree nothing specific has gone in.

-

Ok, that all makes sense.

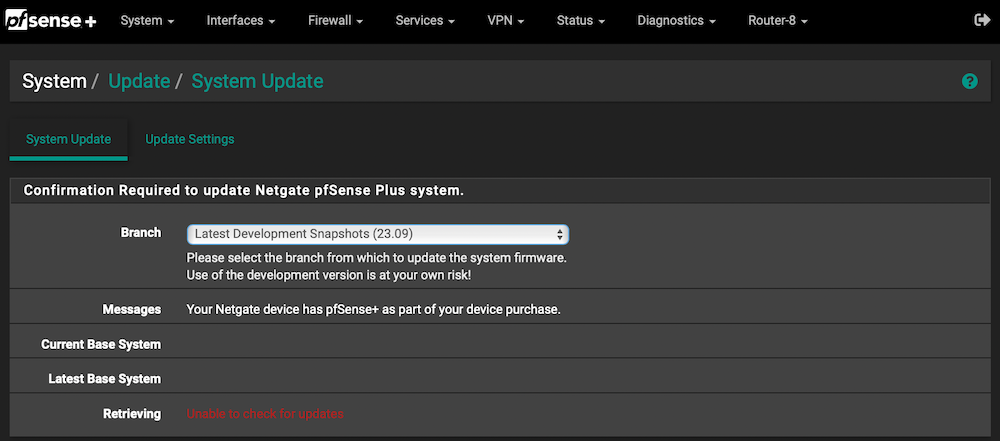

A bit of a pain to achieve though. It took countless refresh attempts to get beyond this stage, until pfSense 'found' the things it needed:

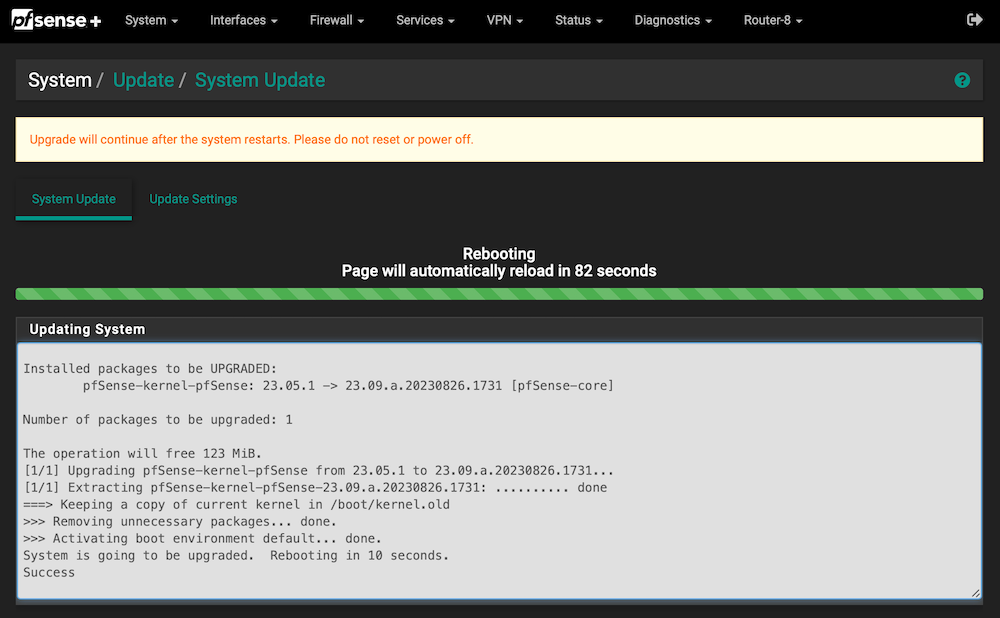

Still, it eventually 'just worked' and all loaded just fine:

It didn't crash on first interface change, so at least there is that.

-

Ok, cool. There's a routing issue with v6 at the pkg server our guys are working on. And we know you're using v6! Should be resolved shortly.

Let us know if you hit it in 23.09. Thanks

-

@stephenw10 said in Netgate 6100 Crash On Interface Change - Not Resolved (IPv6 + PPPoE):

Let us know if you hit it in 23.09. Thanks

Will do.

It survived 5 interface changes with no issue, before I ran out of time / got actively complained at.

-

The issue persists with the latest dev snapshot. This crash was triggered by taking the WAN interface down & up again:

    db:1:pfs> bt
    Tracing pid 2 tid 100041 td 0xfffffe0085272560
    kdb_enter() at kdb_enter+0x32/frame 0xfffffe00850c5840
    vpanic() at vpanic+0x163/frame 0xfffffe00850c5970
    panic() at panic+0x43/frame 0xfffffe00850c59d0
    trap_fatal() at trap_fatal+0x40c/frame 0xfffffe00850c5a30
    trap_pfault() at trap_pfault+0x4f/frame 0xfffffe00850c5a90
    calltrap() at calltrap+0x8/frame 0xfffffe00850c5a90
    --- trap 0xc, rip = 0xffffffff80f4d9e6, rsp = 0xfffffe00850c5b60, rbp = 0xfffffe00850c5b90 ---
    in6_selecthlim() at in6_selecthlim+0x96/frame 0xfffffe00850c5b90
    tcp_default_output() at tcp_default_output+0x1d97/frame 0xfffffe00850c5d70
    tcp_timer_rexmt() at tcp_timer_rexmt+0x52f/frame 0xfffffe00850c5dd0
    tcp_timer_enter() at tcp_timer_enter+0x101/frame 0xfffffe00850c5e10
    softclock_call_cc() at softclock_call_cc+0x134/frame 0xfffffe00850c5ec0
    softclock_thread() at softclock_thread+0xe9/frame 0xfffffe00850c5ef0
    fork_exit() at fork_exit+0x7f/frame 0xfffffe00850c5f30
    fork_trampoline() at fork_trampoline+0xe/frame 0xfffffe00850c5f30
    --- trap 0, rip = 0, rsp = 0, rbp = 0 ---

All hopes of an accidental fix were suddenly dashed...

I have full logs, should you need them.

-

Urgh. Ok, thanks. Let me see if our guys would like to review....

-

Today, on 23.09.a.20230921.1219:

    db:1:pfs> bt
    Tracing pid 2 tid 100041 td 0xfffffe0085274560
    kdb_enter() at kdb_enter+0x32/frame 0xfffffe00850f9840
    vpanic() at vpanic+0x163/frame 0xfffffe00850f9970
    panic() at panic+0x43/frame 0xfffffe00850f99d0
    trap_fatal() at trap_fatal+0x40c/frame 0xfffffe00850f9a30
    trap_pfault() at trap_pfault+0x4f/frame 0xfffffe00850f9a90
    calltrap() at calltrap+0x8/frame 0xfffffe00850f9a90
    --- trap 0xc, rip = 0xffffffff80f4e066, rsp = 0xfffffe00850f9b60, rbp = 0xfffffe00850f9b90 ---
    in6_selecthlim() at in6_selecthlim+0x96/frame 0xfffffe00850f9b90
    tcp_default_output() at tcp_default_output+0x1d97/frame 0xfffffe00850f9d70
    tcp_timer_rexmt() at tcp_timer_rexmt+0x52f/frame 0xfffffe00850f9dd0
    tcp_timer_enter() at tcp_timer_enter+0x101/frame 0xfffffe00850f9e10
    softclock_call_cc() at softclock_call_cc+0x134/frame 0xfffffe00850f9ec0
    softclock_thread() at softclock_thread+0xe9/frame 0xfffffe00850f9ef0
    fork_exit() at fork_exit+0x7f/frame 0xfffffe00850f9f30
    fork_trampoline() at fork_trampoline+0xe/frame 0xfffffe00850f9f30
    --- trap 0, rip = 0, rsp = 0, rbp = 0 ---

Humbug. On to the next one...
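Both traces in this thread take the page fault in the same frame, `in6_selecthlim+0x96`, reached from the TCP retransmit timer. When comparing several crash captures, a small helper along these lines can pull that frame out of each one. It is only a sketch: the function name is made up, and it assumes the capture has one frame per line, as DDB prints it.

```sh
#!/bin/sh
# Print symbol+offset of the frame that took the fault in a DDB backtrace.
# Reads the trace on stdin; "faulting_frame" is an illustrative name.
faulting_frame() {
    awk '
        # The faulting frame is the line right after the "--- trap 0x..." marker.
        $1 == "---" && $2 == "trap" && $3 ~ /^0x/ { want = 1; next }
        want {
            # "in6_selecthlim+0x96/frame" -> "in6_selecthlim+0x96"
            split($3, part, "/")
            print part[1]
            exit
        }
    '
}
```

For example, `faulting_frame < crash1.txt` (file name hypothetical) prints a single line that can be compared across captures.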

-

This seems a little odd too - the QDrops on ipv6:

    netstat -Q
    Configuration:
    Setting                  Current     Limit
    Thread count                   4         4
    Default queue limit          256     10240
    Dispatch policy         deferred       n/a
    Threads bound to CPUs   disabled       n/a

    Protocols:
    Name       Proto QLimit Policy Dispatch Flags
    ip             1   1000 cpu    hybrid   C--
    igmp           2    256 source default  ---
    rtsock         3   1024 source default  ---
    arp            4    256 source default  ---
    ether          5    256 cpu    direct   C--
    ip6            6    256 cpu    hybrid   C--
    ip_direct      9    256 cpu    hybrid   C--
    ip6_direct    10    256 cpu    hybrid   C--

    Workstreams:
    WSID CPU Name     Len WMark   Disp'd  HDisp'd   QDrops   Queued  Handled
       0   0 ip         0    81        0   351082        0    66515   417597
       0   0 igmp       0     0        0        0        0        0        0
       0   0 rtsock     0     0        0        0        0        0        0
       0   0 arp        0     0        0        0        0        0        0
       0   0 ether      0     0  8010840        0        0        0  8010840
       0   0 ip6        0   256        0  1923527     1245   320631  2244158
       1   1 ip         0    22        0    49489        0   245280   294769
       1   1 igmp       0     1        0        0        0        4        4
       1   1 rtsock     0     0        0        0        0        0        0
       1   1 arp        0     1        0        0        0     1431     1431
       1   1 ether      0     0   245520        0        0        0   245520
       1   1 ip6        0    36        0   126867      214   617858   744725
       2   2 ip         0    52        0    60905        0   246025   306930
       2   2 igmp       0     1        0        0        0        4        4
       2   2 rtsock     0     5        0        0        0      467      467
       2   2 arp        0     1        0        0        0     3968     3968
       2   2 ether      0     0   281007        0        0        0   281007
       2   2 ip6        0   256        0   140458    65916  5273321  5413779
       3   3 ip         0    17        0    52687        0   110755   163442
       3   3 igmp       0     0        0        0        0        0        0
       3   3 rtsock     0     0        0        0        0        0        0
       3   3 arp        0     0        0        0        0        0        0
       3   3 ether      0     0   480023        0        0        0   480023
       3   3 ip6        0   256        0   389341      428  1224093  1613434

The system has only been up a little while and nowhere near saturation - just background traffic really. I would not expect to see any QDrops; IPv4 is fine but IPv6 is ???

..and from the previous log; all fine but for IPv6 QDrops:

    WSID CPU Name     Len WMark   Disp'd  HDisp'd   QDrops   Queued  Handled
       0   0 ip6        0   256        0  6582753     1758   413699  6996452
       1   1 ip6        0   256        0   916478    83941 10325643 11242121
       2   2 ip6        0   256        0  1177425   140455 19670180 20847605
       3   3 ip6        0   256        0  1441443    13413  5797973  7239416
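To track this over time without reading the whole table, the Workstreams section can be reduced to one line per protocol that has dropped anything. The following is a rough sketch rather than a polished tool: the function name is made up, and it assumes the ten-column Workstreams layout shown in the output above, with QDrops in column 8 and Handled in column 10.

```sh
#!/bin/sh
# Sum QDrops and Handled per protocol from the Workstreams table of `netstat -Q`.
# Reads the netstat output on stdin; "qdrops_by_proto" is an illustrative name.
qdrops_by_proto() {
    awk '
        $1 == "WSID" { in_ws = 1; next }   # the header row marks the start of the table
        in_ws && NF == 10 {
            drops[$3]   += $8              # QDrops column
            handled[$3] += $10             # Handled column
        }
        END {
            # Only report protocols that have actually dropped packets.
            for (p in drops)
                if (drops[p] > 0)
                    printf "%s qdrops=%d handled=%d\n", p, drops[p], handled[p]
        }
    ' | sort
}
```

Run as `netstat -Q | qdrops_by_proto`. Sampling it a few minutes apart shows whether the ip6 drop count is still climbing under light load.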

-

With the news that the fix for this will probably have to wait until 24.03 (i.e. 6 months away), does anyone have suggestions for a non-pfSense router OS?

I currently run a Netgate 6100, so I will have to run a new OS on different hardware too, but I do have a potential mini-server arriving sometime in October, which will give me something to host on. I also intend to keep my toes in the pfSense world whilst I wait for this IPv6 / interface issue to be resolved, but this is not something I can do when I'm away.

So what is my least-worst option for a router OS that is reliable for high-bandwidth PPPoE WAN and IPv6?

-

Are you able to test a debug image if we generate one to get more info on this?

Despite absolutely hammering the connection I have here, I have yet to make it fail.