Cannot Achieve 10g pfsense bottleneck

-

@Averlon said in Cannot Achieve 10g pfsense bottleneck:

From your first post I can see that your CPU is running at the 2.2GHz base frequency. This is good for constant latency and throughput. Allowing the CPU to scale down the frequency introduces more variance in latency, but it lets the CPU run longer at boost clock (3GHz), which can process more interrupts and deliver more throughput.

The max value shown there of 2201 means that Turbo is enabled. It never actually shows the turbo speed on a speed-step enabled CPU. But it could be hitting some thermal/power limit perhaps I agree. Though I'd expect to see some spikes above the 5Gbps if that was the case. At least some variation between repeated tests.

-

@stephenw10 It's not about whether Turbo is enabled or not, it's about power management in general, since it is one of many factors affecting the throughput of the platform and it hasn't been considered yet. Overly aggressive power saving settings can also lead to performance degradation.

-

Thank you all for suggestions

@pwood999 said in Cannot Achieve 10g pfsense bottleneck:

What's the traffic like on the other VLAN's, etc. If it's low it shouldn't have much impact ?

there is not much file transfer traffic on L3. Generally chatter.

@pwood999 said in Cannot Achieve 10g pfsense bottleneck:

What PfSense Rules are on the VLAN's that have the iperf3 client & server ?

For testing purposes, I allowed all traffic.

@pwood999 said in Cannot Achieve 10g pfsense bottleneck:

Also, what managed switch are you using on the PfSense LAN & VLAN side ?

All unifi switches, but I have no problem getting 10G on L2 so I have ruled out the switches as the problem.

@w0w said in Cannot Achieve 10g pfsense bottleneck:

I’d test the firewall hardware by installing Proxmox on it and running pfSense with the NICs as virtual devices. Even virtualized, I tested my Intel X710-T4L on the pfSense LAN with an N100 CPU and the card in an x4 PCIe slot. Even in that setup, iperf reaches 6–7 Gbps.

I cannot afford testing this hardware in a virtual environment. This is currently in production and there is no backup for it.

@pwood999 said in Cannot Achieve 10g pfsense bottleneck:

Definitely try UDP but you might need to specify UDP on the server as well as client side.

Good point. Testing with UDP I got very interesting results.

    root@PROXMOX1:~# iperf3 -c 192.168.40.40 -u -b 9.5G -t 10 -l 1472 --get-server-output -P 4
    warning: UDP block size 1472 exceeds TCP MSS 1448, may result in fragmentation / drops
    Connecting to host 192.168.40.40, port 5201
    [  5] local 192.168.55.21 port 53975 connected to 192.168.40.40 port 5201
    [  7] local 192.168.55.21 port 36922 connected to 192.168.40.40 port 5201
    [  9] local 192.168.55.21 port 57106 connected to 192.168.40.40 port 5201
    [ 11] local 192.168.55.21 port 43050 connected to 192.168.40.40 port 5201
    [ ID] Interval       Transfer     Bitrate         Total Datagrams
    [  5] 0.00-1.00 sec  285 MBytes  2.39 Gbits/sec  203373
    [  7] 0.00-1.00 sec  286 MBytes  2.39 Gbits/sec  203512
    [  9] 0.00-1.00 sec  286 MBytes  2.39 Gbits/sec  203515
    [ 11] 0.00-1.00 sec  285 MBytes  2.39 Gbits/sec  203332
    [SUM] 0.00-1.00 sec  1.12 GBytes  9.57 Gbits/sec  813732
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 1.00-2.00 sec  285 MBytes  2.39 Gbits/sec  203224
    [  7] 1.00-2.00 sec  285 MBytes  2.39 Gbits/sec  203205
    [  9] 1.00-2.00 sec  285 MBytes  2.39 Gbits/sec  203122
    [ 11] 1.00-2.00 sec  285 MBytes  2.39 Gbits/sec  203127
    [SUM] 1.00-2.00 sec  1.11 GBytes  9.57 Gbits/sec  812678
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 2.00-3.00 sec  285 MBytes  2.39 Gbits/sec  203131
    [  7] 2.00-3.00 sec  285 MBytes  2.39 Gbits/sec  203188
    [  9] 2.00-3.00 sec  285 MBytes  2.39 Gbits/sec  203161
    [ 11] 2.00-3.00 sec  285 MBytes  2.39 Gbits/sec  203165
    [SUM] 2.00-3.00 sec  1.11 GBytes  9.57 Gbits/sec  812645
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 3.00-4.00 sec  285 MBytes  2.39 Gbits/sec  203180
    [  7] 3.00-4.00 sec  285 MBytes  2.39 Gbits/sec  203170
    [  9] 3.00-4.00 sec  285 MBytes  2.39 Gbits/sec  203211
    [ 11] 3.00-4.00 sec  285 MBytes  2.39 Gbits/sec  203119
    [SUM] 3.00-4.00 sec  1.11 GBytes  9.57 Gbits/sec  812680
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 4.00-5.00 sec  285 MBytes  2.39 Gbits/sec  203136
    [  7] 4.00-5.00 sec  285 MBytes  2.39 Gbits/sec  203126
    [  9] 4.00-5.00 sec  285 MBytes  2.39 Gbits/sec  203134
    [ 11] 4.00-5.00 sec  285 MBytes  2.39 Gbits/sec  203145
    [SUM] 4.00-5.00 sec  1.11 GBytes  9.57 Gbits/sec  812541
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 5.00-6.00 sec  285 MBytes  2.39 Gbits/sec  203125
    [  7] 5.00-6.00 sec  285 MBytes  2.39 Gbits/sec  203171
    [  9] 5.00-6.00 sec  285 MBytes  2.39 Gbits/sec  203162
    [ 11] 5.00-6.00 sec  285 MBytes  2.39 Gbits/sec  203157
    [SUM] 5.00-6.00 sec  1.11 GBytes  9.57 Gbits/sec  812615
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 6.00-7.00 sec  285 MBytes  2.39 Gbits/sec  203178
    [  7] 6.00-7.00 sec  285 MBytes  2.39 Gbits/sec  203207
    [  9] 6.00-7.00 sec  285 MBytes  2.39 Gbits/sec  203174
    [ 11] 6.00-7.00 sec  285 MBytes  2.39 Gbits/sec  203166
    [SUM] 6.00-7.00 sec  1.11 GBytes  9.57 Gbits/sec  812725
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 7.00-8.00 sec  285 MBytes  2.39 Gbits/sec  203199
    [  7] 7.00-8.00 sec  285 MBytes  2.39 Gbits/sec  203171
    [  9] 7.00-8.00 sec  285 MBytes  2.39 Gbits/sec  203138
    [ 11] 7.00-8.00 sec  285 MBytes  2.39 Gbits/sec  203196
    [SUM] 7.00-8.00 sec  1.11 GBytes  9.57 Gbits/sec  812704
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 8.00-9.00 sec  285 MBytes  2.39 Gbits/sec  203085
    [  7] 8.00-9.00 sec  285 MBytes  2.39 Gbits/sec  203155
    [  9] 8.00-9.00 sec  285 MBytes  2.39 Gbits/sec  203137
    [ 11] 8.00-9.00 sec  285 MBytes  2.39 Gbits/sec  203124
    [SUM] 8.00-9.00 sec  1.11 GBytes  9.57 Gbits/sec  812501
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 9.00-10.00 sec  285 MBytes  2.39 Gbits/sec  203169
    [  7] 9.00-10.00 sec  285 MBytes  2.39 Gbits/sec  203196
    [  9] 9.00-10.00 sec  285 MBytes  2.39 Gbits/sec  203177
    [ 11] 9.00-10.00 sec  285 MBytes  2.39 Gbits/sec  203195
    [SUM] 9.00-10.00 sec  1.11 GBytes  9.56 Gbits/sec  812737
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval        Transfer     Bitrate         Jitter    Lost/Total Datagrams
    [  5] 0.00-10.00 sec  2.79 GBytes  2.39 Gbits/sec  0.000 ms  0/2031800 (0%)  sender
    [  5] 0.00-10.00 sec  1.18 GBytes  1.01 Gbits/sec  0.011 ms  1170176/2030971 (58%)  receiver
    [  7] 0.00-10.00 sec  2.79 GBytes  2.39 Gbits/sec  0.000 ms  0/2032101 (0%)  sender
    [  7] 0.00-10.00 sec  1.19 GBytes  1.02 Gbits/sec  0.018 ms  1163621/2031264 (57%)  receiver
    [  9] 0.00-10.00 sec  2.79 GBytes  2.39 Gbits/sec  0.000 ms  0/2031931 (0%)  sender
    [  9] 0.00-10.00 sec  1.17 GBytes  1.01 Gbits/sec  0.010 ms  1175570/2031116 (58%)  receiver
    [ 11] 0.00-10.00 sec  2.79 GBytes  2.39 Gbits/sec  0.000 ms  0/2031726 (0%)  sender
    [ 11] 0.00-10.00 sec  1.15 GBytes  991 Mbits/sec  0.011 ms  1189040/2030907 (59%)  receiver
    [SUM] 0.00-10.00 sec  11.1 GBytes  9.57 Gbits/sec  0.000 ms  0/8127558 (0%)  sender
    [SUM] 0.00-10.00 sec  4.70 GBytes  4.03 Gbits/sec  0.012 ms  4698407/8124258 (58%)  receiver

    Server output:
    -----------------------------------------------------------
    Server listening on 5201 (test #3)
    -----------------------------------------------------------
    Accepted connection from 192.168.55.21, port 33208
    [  5] local 192.168.40.40 port 5201 connected to 192.168.55.21 port 53975
    [  6] local 192.168.40.40 port 5201 connected to 192.168.55.21 port 36922
    [  9] local 192.168.40.40 port 5201 connected to 192.168.55.21 port 57106
    [ 11] local 192.168.40.40 port 5201 connected to 192.168.55.21 port 43050
    [ ID] Interval       Transfer     Bitrate         Jitter    Lost/Total Datagrams
    [  5] 0.00-1.00 sec  120 MBytes  1.01 Gbits/sec  0.008 ms  116792/202427 (58%)
    [  6] 0.00-1.00 sec  122 MBytes  1.02 Gbits/sec  0.011 ms  115872/202564 (57%)
    [  9] 0.00-1.00 sec  118 MBytes  985 Mbits/sec  0.009 ms  118800/202535 (59%)
    [ 11] 0.00-1.00 sec  115 MBytes  963 Mbits/sec  0.020 ms  120523/202367 (60%)
    [SUM] 0.00-1.00 sec  474 MBytes  3.98 Gbits/sec  0.012 ms  471987/809893 (58%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 1.00-2.00 sec  122 MBytes  1.02 Gbits/sec  0.010 ms  116048/203048 (57%)
    [  6] 1.00-2.00 sec  120 MBytes  1.01 Gbits/sec  0.010 ms  117649/203048 (58%)
    [  9] 1.00-2.00 sec  115 MBytes  967 Mbits/sec  0.013 ms  120927/203008 (60%)
    [ 11] 1.00-2.00 sec  114 MBytes  959 Mbits/sec  0.019 ms  121594/202996 (60%)
    [SUM] 1.00-2.00 sec  472 MBytes  3.96 Gbits/sec  0.013 ms  476218/812100 (59%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 2.00-3.00 sec  122 MBytes  1.02 Gbits/sec  0.014 ms  116649/203371 (57%)
    [  6] 2.00-3.00 sec  123 MBytes  1.03 Gbits/sec  0.012 ms  115793/203379 (57%)
    [  9] 2.00-3.00 sec  121 MBytes  1.01 Gbits/sec  0.010 ms  117380/203379 (58%)
    [ 11] 2.00-3.00 sec  112 MBytes  939 Mbits/sec  0.011 ms  123560/203353 (61%)
    [SUM] 2.00-3.00 sec  477 MBytes  4.00 Gbits/sec  0.012 ms  473382/813482 (58%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 3.00-4.00 sec  123 MBytes  1.03 Gbits/sec  0.013 ms  115535/203161 (57%)
    [  6] 3.00-4.00 sec  123 MBytes  1.03 Gbits/sec  0.011 ms  115716/203181 (57%)
    [  9] 3.00-4.00 sec  119 MBytes  997 Mbits/sec  0.012 ms  118496/203183 (58%)
    [ 11] 3.00-4.00 sec  117 MBytes  982 Mbits/sec  0.013 ms  119764/203121 (59%)
    [SUM] 3.00-4.00 sec  482 MBytes  4.04 Gbits/sec  0.012 ms  469511/812646 (58%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 4.00-5.00 sec  122 MBytes  1.02 Gbits/sec  0.014 ms  116366/203129 (57%)
    [  6] 4.00-5.00 sec  123 MBytes  1.03 Gbits/sec  0.022 ms  115463/203140 (57%)
    [  9] 4.00-5.00 sec  123 MBytes  1.03 Gbits/sec  0.017 ms  115425/203123 (57%)
    [ 11] 4.00-5.00 sec  122 MBytes  1.02 Gbits/sec  0.013 ms  116144/203140 (57%)
    [SUM] 4.00-5.00 sec  490 MBytes  4.11 Gbits/sec  0.016 ms  463398/812532 (57%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 5.00-6.00 sec  120 MBytes  1.01 Gbits/sec  0.022 ms  117455/203241 (58%)
    [  6] 5.00-6.00 sec  123 MBytes  1.03 Gbits/sec  0.022 ms  115785/203257 (57%)
    [  9] 5.00-6.00 sec  123 MBytes  1.03 Gbits/sec  0.023 ms  115539/203263 (57%)
    [ 11] 5.00-6.00 sec  122 MBytes  1.02 Gbits/sec  0.023 ms  116512/203255 (57%)
    [SUM] 5.00-6.00 sec  488 MBytes  4.09 Gbits/sec  0.023 ms  465291/813016 (57%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 6.00-7.00 sec  116 MBytes  971 Mbits/sec  0.015 ms  120389/202814 (59%)
    [  6] 6.00-7.00 sec  119 MBytes  1.00 Gbits/sec  0.010 ms  117896/202957 (58%)
    [  9] 6.00-7.00 sec  121 MBytes  1.02 Gbits/sec  0.023 ms  116693/202940 (58%)
    [ 11] 6.00-7.00 sec  118 MBytes  988 Mbits/sec  0.011 ms  119068/202957 (59%)
    [SUM] 6.00-7.00 sec  474 MBytes  3.98 Gbits/sec  0.015 ms  474046/811668 (58%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 7.00-8.00 sec  119 MBytes  998 Mbits/sec  0.009 ms  118459/203188 (58%)
    [  6] 7.00-8.00 sec  121 MBytes  1.01 Gbits/sec  0.013 ms  117195/203087 (58%)
    [  9] 7.00-8.00 sec  117 MBytes  981 Mbits/sec  0.011 ms  119700/203046 (59%)
    [ 11] 7.00-8.00 sec  119 MBytes  996 Mbits/sec  0.012 ms  118481/203087 (58%)
    [SUM] 7.00-8.00 sec  475 MBytes  3.99 Gbits/sec  0.011 ms  473835/812408 (58%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 8.00-9.00 sec  122 MBytes  1.02 Gbits/sec  0.020 ms  116771/203418 (57%)
    [  6] 8.00-9.00 sec  122 MBytes  1.02 Gbits/sec  0.014 ms  116689/203445 (57%)
    [  9] 8.00-9.00 sec  122 MBytes  1.02 Gbits/sec  0.013 ms  116864/203438 (57%)
    [ 11] 8.00-9.00 sec  120 MBytes  1.01 Gbits/sec  0.023 ms  117691/203420 (58%)
    [SUM] 8.00-9.00 sec  485 MBytes  4.07 Gbits/sec  0.017 ms  468015/813721 (58%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 9.00-10.00 sec  123 MBytes  1.03 Gbits/sec  0.016 ms  115712/203031 (57%)
    [  6] 9.00-10.00 sec  123 MBytes  1.03 Gbits/sec  0.018 ms  115563/203054 (57%)
    [  9] 9.00-10.00 sec  123 MBytes  1.03 Gbits/sec  0.011 ms  115746/203054 (57%)
    [ 11] 9.00-10.00 sec  123 MBytes  1.03 Gbits/sec  0.018 ms  115703/203066 (57%)
    [SUM] 9.00-10.00 sec  491 MBytes  4.12 Gbits/sec  0.016 ms  462724/812205 (57%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [  5] 10.00-10.00 sec  206 KBytes  1.02 Gbits/sec  0.011 ms  0/143 (0%)
    [  6] 10.00-10.00 sec  218 KBytes  1.08 Gbits/sec  0.018 ms  0/152 (0%)
    [  9] 10.00-10.00 sec  211 KBytes  1.05 Gbits/sec  0.010 ms  0/147 (0%)
    [ 11] 10.00-10.00 sec  208 KBytes  1.03 Gbits/sec  0.011 ms  0/145 (0%)
    [SUM] 10.00-10.00 sec  844 KBytes  4.19 Gbits/sec  0.012 ms  0/587 (0%)
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval        Transfer     Bitrate         Jitter    Lost/Total Datagrams
    [  5] 0.00-10.00 sec  1.18 GBytes  1.01 Gbits/sec  0.011 ms  1170176/2030971 (58%)  receiver
    [  6] 0.00-10.00 sec  1.19 GBytes  1.02 Gbits/sec  0.018 ms  1163621/2031264 (57%)  receiver
    [  9] 0.00-10.00 sec  1.17 GBytes  1.01 Gbits/sec  0.010 ms  1175570/2031116 (58%)  receiver
    [ 11] 0.00-10.00 sec  1.15 GBytes  991 Mbits/sec  0.011 ms  1189040/2030907 (59%)  receiver
    [SUM] 0.00-10.00 sec  4.70 GBytes  4.03 Gbits/sec  0.012 ms  4698407/8124258 (58%)  receiver

    iperf Done.

So the client can blast 10G with UDP, but the server only receives about 4G. There is a massive loss.
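To put the UDP loss in perspective, the receiver totals can be turned into a delivered packet rate. This is only a rough sketch: the function name `udp_summary` is made up for illustration, and it assumes every datagram carries the 1472-byte payload set with `-l 1472` and that the test ran for exactly the 10 seconds set with `-t 10`.

```python
def udp_summary(lost, total, payload_bytes, seconds):
    """Return (loss_fraction, delivered_pps, goodput_gbps) for one UDP test.

    Hypothetical helper: the inputs are read off the iperf3 receiver summary.
    """
    delivered = total - lost                      # datagrams that reached the server
    loss_fraction = lost / total
    delivered_pps = delivered / seconds           # packets per second that got through
    goodput_gbps = delivered_pps * payload_bytes * 8 / 1e9
    return loss_fraction, delivered_pps, goodput_gbps


# Receiver [SUM] line of the run in this post: 4698407/8124258 datagrams lost.
loss, pps, gbps = udp_summary(4698407, 8124258, 1472, 10.0)
print(f"loss {loss:.1%}, delivered {pps:,.0f} pkt/s, goodput {gbps:.2f} Gbit/s")
```

The result should line up with the 58% loss and 4.03 Gbits/sec that iperf3 reports, and it shows that only about 340 thousand packets per second arrive at the server out of the roughly 812 thousand per second being sent.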

I looked more closely at my CPU, thinking maybe it was not going into Turbo, and I was right.

pfSense is reporting Current: 2200 MHz, Max: 2201 MHz. I still suspect this is the reason, although a CPU fixed at 2.2GHz should be capable of 10G anyway. In any case, this CPU should be able to go up to 3.00 GHz.

Checking in with sysctl

    sysctl dev.cpu | grep freq
    dev.cpu.7.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.7.freq: 2200
    dev.cpu.6.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.6.freq: 2200
    dev.cpu.5.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.5.freq: 2200
    dev.cpu.4.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.4.freq: 2200
    dev.cpu.3.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.3.freq: 2200
    dev.cpu.2.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.2.freq: 2200
    dev.cpu.1.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.1.freq: 2200
    dev.cpu.0.freq_levels: 2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 1100/26367 1000/23442
    dev.cpu.0.freq: 2200

After putting some heavy load on the CPU to test, it stayed stuck at 2200.

sysctl dev.cpu | grep freq was also reporting the same during the test.

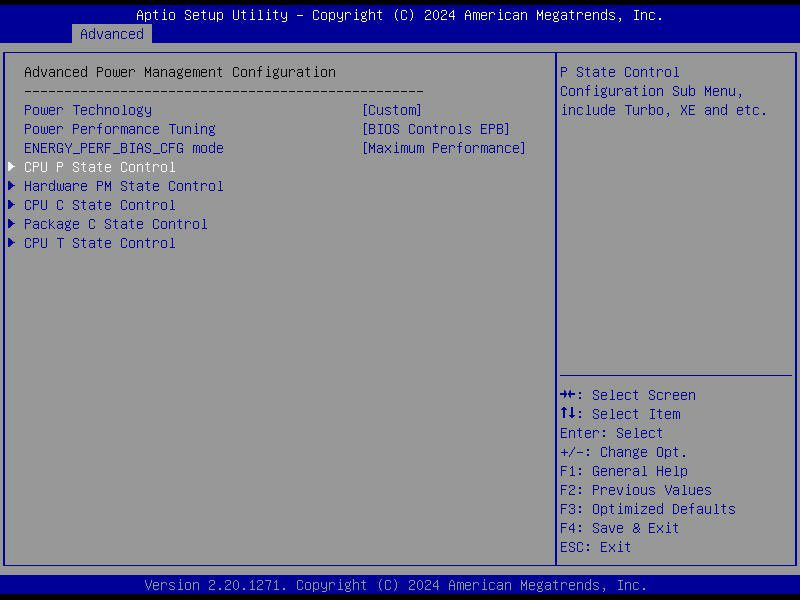

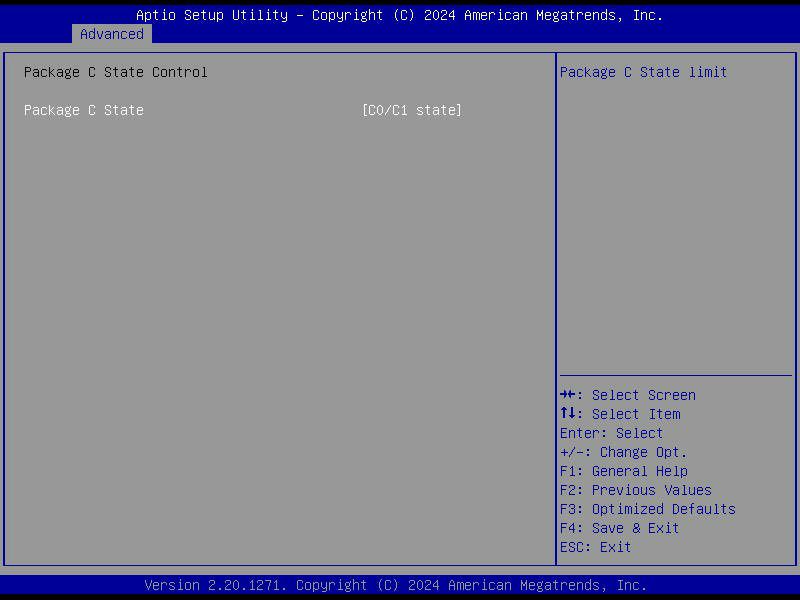

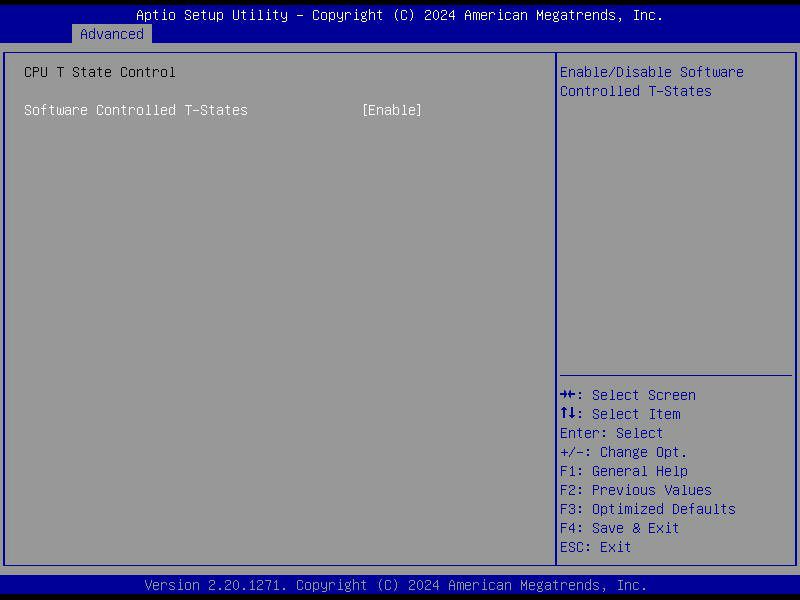

Then I went into BIOS to play with power settings which is a foreign territory to me.

But the power technology was set to "Energy Efficient" which did not let me modify anything at all. I changed this to Custom

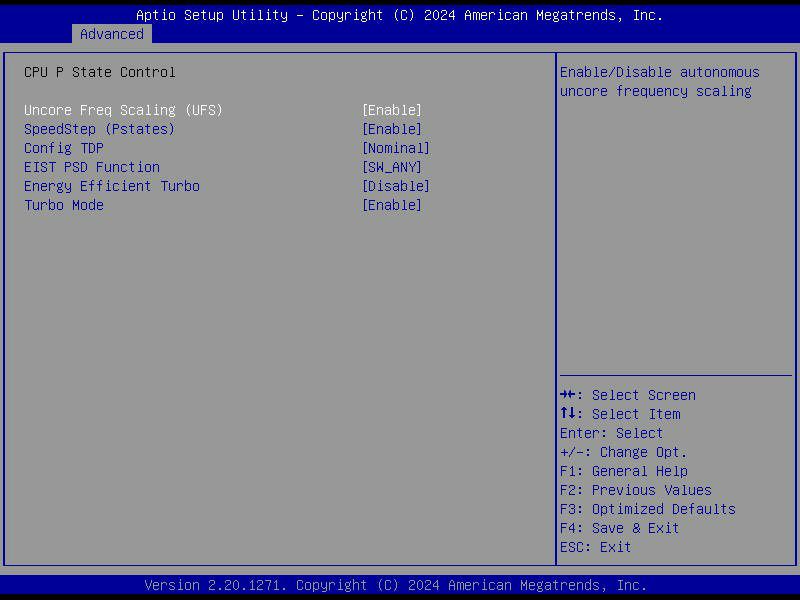

Then for tuning I chose "OS controls EPB", but pfSense was still reporting the CPU at 2200. (PowerD enabled with HiAdaptive)

I am not sure what I did wrong here, I assumed pfSense can manage P states of CPU. But it did not work and I had to enable BIOS controls EPB with Maximum Performance

Anyway, after a lot of rebooting and tinkering I got to see 2693 on my CPU. (This was the highest I could get)

PowerD disabled, Speed Shift enabled at 10 with core level control.

As a final test, I ran iperf3 again. I saw marginal improvements, up to 6G with -P 4, but that is it. Nowhere near where it should be.

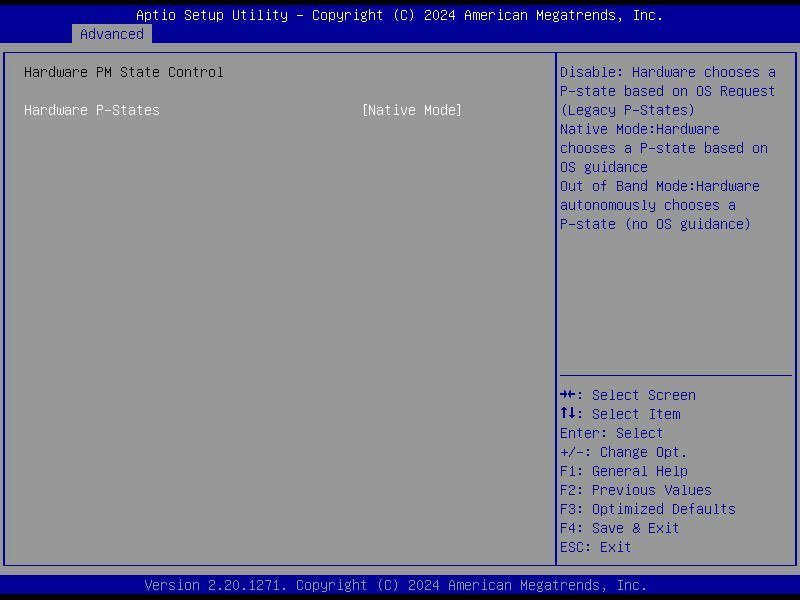

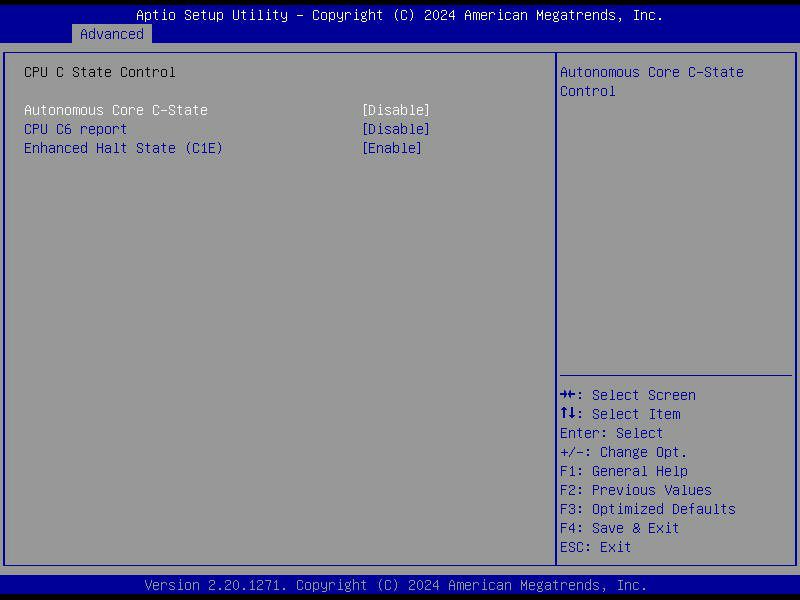

My current BIOS settings are:

@stephenw10 said in Cannot Achieve 10g pfsense bottleneck:

The max value shown there of 2201 means that Turbo is enabled. It never actually shows the turbo speed on a speed-step enabled CPU. But it could be hitting some thermal/power limit perhaps I agree. Though I'd expect to see some spikes above the 5Gbps if that was the case. At least some variation between repeated tests.

CPU is not thermal throttling, it is generally at 50C even with all the testing.

I am also not sure what the suitable BIOS settings for pfSense are, and I am having a hard time with them. Is there anyone with the same CPU?

-

You will never see the reported CPU frequency show the 3.0GHz turbo value. pfSense can only ever see 2201 which means the full speed with turbo.
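To illustrate how that 2201 entry shows up in the `sysctl` output, here is a small sketch that parses a `freq_levels` value into frequency/power pairs. The function names are hypothetical, and the "top level is exactly 1 MHz above the next one" check simply encodes the convention described in this thread rather than anything queried from the CPU.

```python
def parse_freq_levels(value):
    """Parse a dev.cpu.N.freq_levels string into (MHz, mW) tuples."""
    levels = []
    for entry in value.split():
        mhz, milliwatts = entry.split("/")
        levels.append((int(mhz), int(milliwatts)))
    return levels


def turbo_advertised(levels):
    """Assumed convention: a top level 1 MHz above the next one stands for turbo."""
    freqs = sorted((mhz for mhz, _ in levels), reverse=True)
    return len(freqs) >= 2 and freqs[0] - freqs[1] == 1


# Value copied from the sysctl output posted earlier in the thread.
sample = ("2201/60000 2200/60000 2100/56352 2000/52778 1900/49855 1800/46415 "
          "1700/43611 1600/40303 1500/37618 1400/34440 1300/31871 1200/28822 "
          "1100/26367 1000/23442")

levels = parse_freq_levels(sample)
print(levels[0], turbo_advertised(levels))
```

With the posted values the top entry is 2201 MHz sitting right above 2200 MHz, which matches the explanation that the real turbo clock is never reported through this interface.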

-

You should probably separate the problem into stages rather than just assume it is a CPU issue.

Ideally you need to build a Lab setup rather than use a production system. That way you have control over any background activities.

Test with UDP between each server & PfSense iperf directly. Try running it with PfSense as the server, and then the other way round. That should prove you can get full speed to & from each server to PF in all modes & prove your Microtik VLAN's plus PF Firewall rules.

If the above works, then it must be the PF Routing engine where the packets are being dropped.

-

@pwood999 said in Cannot Achieve 10g pfsense bottleneck:

You should probably separate the problem into stages rather than just assume it is a CPU issue.

Can you name the stages you have in mind to divide the problem into?

CPU performance is crucial on a platform where packet forwarding is performed in software. Testing with UDP is nonsense, because it has no flow control and you have to calculate the possible throughput by accounting for the packets lost. Running pfSense as the iPerf server also costs CPU time to process the received packets and does not reflect the possible throughput of the platform. Testing between two endpoints connected to pfSense is the right way to do it. That's why it is called throughput ...

Devices in between may affect possible throughput, especially when packet loss occurs there for whatever reason and the TCP congestion algorithms kick in. So it's good to check the interface counters along the path and ensure there are no drops on any interface.

My suggestion is to disable HT / SMT and scale the queues down to 4; there might be another improvement. Intel SpeedShift may work better at package level rather than core level.

-

@Averlon what would you suggest for other BIOS power settings? Can pfSense manage power well enough for me to disable BIOS control? From my testing, when I let pfSense manage the power, it did not go over the 2200 limit (or I put some wrong settings in the BIOS and got stuck at 2200 when I let pfsense handle the power management)

-

@Laxarus

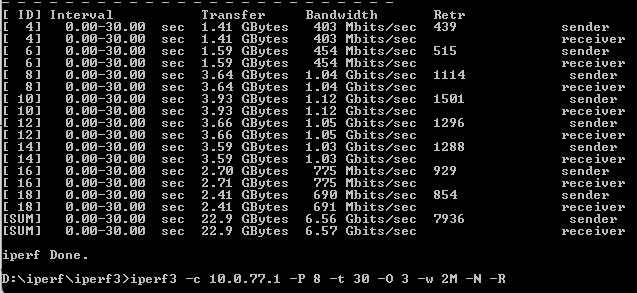

And never getting closer with your settings.

This is what I am using on a Windows machine:

    iperf3 -c 192.168.40.40 -P 8 -t 30 -O 3 -w 2M -N -R

As @stephenw10 already said, this is not a CPU frequency issue, this is just the maximum reported by pfSense.
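The iperf3 invocation in this post packs several options together. As a sketch, the same command line can be assembled with each flag spelled out. The helper name `iperf3_client_cmd` and its parameter names are made up for illustration; the flags themselves are standard iperf3 client options.

```python
def iperf3_client_cmd(server, streams=8, seconds=30, omit=3, window="2M",
                      no_delay=True, reverse=True):
    """Build an iperf3 client argument list (hypothetical helper)."""
    cmd = [
        "iperf3",
        "-c", server,          # run as client against this server
        "-P", str(streams),    # number of parallel streams
        "-t", str(seconds),    # test duration in seconds
        "-O", str(omit),       # omit the first seconds (skips TCP slow start)
        "-w", window,          # socket buffer / window size
    ]
    if no_delay:
        cmd.append("-N")       # set TCP no-delay (disable Nagle's algorithm)
    if reverse:
        cmd.append("-R")       # reverse mode: server sends, client receives
    return cmd


print(" ".join(iperf3_client_cmd("192.168.40.40")))
```

Because of `-R`, this measures the direction from the server towards the client, so it is worth running once with and once without that flag when comparing results.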

@Laxarus said in Cannot Achieve 10g pfsense bottleneck:

I cannot afford testing this hardware in a virtual environment. This is currently in production and there is no backup for it.

Are you using UEFI mode and SATA drive or this is NVME installation?

-

@w0w I was using legacy but switched to UEFI later, thinking this would improve things, but it did not change anything. The installation is on an M.2 NVMe.

-

Mmm, drive speed and boot type really shouldn't make any difference to throughput.

You could be hitting some bus limit perhaps. Some hardware off-loading not playing nicely?

-

@stephenw10 said in Cannot Achieve 10g pfsense bottleneck:

Mmm, drive speed and boot type really shouldn't make any difference to throughput.

This makes a difference if we want to migrate to a Proxmox VM. When a SATA drive is used, you can prepare a new drive with Proxmox and a pfSense VM on another system, then just move it over and reassign the interfaces in Proxmox. Just use a USB Ethernet adapter as the management interface on both PCs. With NVMe it can be more complicated and may require more downtime.

@Laxarus try the iperf command provided in my previous message and post back the results.

-

Right, but no difference to the throughput of the resulting install.

-

@stephenw10 said in Cannot Achieve 10g pfsense bottleneck:

Right, but no difference to the throughput of the resulting install.

Definitely yes.

-

@Averlon The reason I suggest testing each server to & from PfSense was just to verify that part of the E2E path - especially as the 25G link is used for all VLAN's to the Microtik.

Server1 --> Microtik --> PfSense (DS & US)

Server2 --> Microtik --> PfSense (DS & US)

It would at least verify that the firewall rules on the VLANs and the VLANs through the Microtik can pass the full bandwidth.

-

@w0w said in Cannot Achieve 10g pfsense bottleneck:

@Laxarus try the iperf command provided in my previous message and post back the results.

still 5G and occasional 6G

@Averlon said in Cannot Achieve 10g pfsense bottleneck:

My suggestion is to disable HT / SMT and scale the queues down to 4; there might be another improvement. Intel SpeedShift may work better at package level rather than core level.

disabled HT but this did not make any difference

-

@Laxarus said in Cannot Achieve 10g pfsense bottleneck:

still 5G and occasional 6G

OK, so how exactly is the Intel XXV710 dual 25G connected to the Ubiquiti switch, and what is the exact switch model, ports, cables, and transceivers you’re using if any?

-

@w0w

Switch: USW-EnterpriseXG-24

Connection: Unifi SFP28 DAC cable (UC-DAC-SFP28)

I disabled the LAGG so there is only a single cable now.

Do you think these cables don't play nice with pfSense?

But I also tested the 10g rj-45 built-in port but still no difference so I've ruled this out.

At this point, I am entertaining the idea of putting all 10G devices in same vlan/switch and stick with L2.

-

@Laxarus said in Cannot Achieve 10g pfsense bottleneck:

Do you think these cables don't play nice with pfSense?

I don’t think so. The more I look at it, the more I think it’s some software glitch — but where exactly is the bottleneck? It looks just like some queues/limiters. This CPU should do 30-40 Gbit with fw filtering and 60 Gbit just for routing. I don’t know — something is broken.

-

Maybe share your PfSense config, with any public IP's, Certs, etc. obfuscated ?

Or just screenshots of the VLAN firewall rules & any Limiter/Shaper queue settings ?

Check this post or an XML Redactor that might be helpful.

-

@Laxarus said in Cannot Achieve 10g pfsense bottleneck:

disabled HT but this did not make any difference

Did you configure the NIC queues down to 4 as well and test SpeedShift at package level? The hwpstate_intel driver works quite well with Broadwell CPUs and does show improvements (according to your post) towards 6Gbps on your Skylake CPU. Compared to your previously posted results, this is an improvement of almost 1Gbps.

How is the throughput if you disable the firewall (pfctl -d) and use pfSense as a router only? NAT won't be available once you disable the firewall. You can re-enable it by running pfctl -e and it will load your last ruleset. If you don't see any significant difference with the firewall disabled, you can at least be sure it's not the firewall ruleset slowing things down.
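Since this box is in production, it may help to make sure the firewall always gets switched back on after the test. Below is a minimal sketch of that idea, assuming it runs as root on the pfSense host. The `pf_disabled` helper and its `run` parameter are hypothetical names; only `pfctl -d` and `pfctl -e` come from the suggestion above.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def pf_disabled(run=subprocess.run):
    """Disable pf for the duration of a with-block, then re-enable it.

    Hypothetical helper: `run` is injectable so the logic can be dry-run
    without touching a real firewall.
    """
    run(["pfctl", "-d"], check=True)       # disable packet filtering (and NAT)
    try:
        yield
    finally:
        # Runs even if the test inside the block raises or is interrupted.
        run(["pfctl", "-e"], check=True)   # re-enable pf


# Example use (not executed here):
#
#     with pf_disabled():
#         input("Firewall disabled, run iperf3 between the hosts, then press Enter")
```

The `finally` clause is the point of the design: an aborted test should not leave the router forwarding unfiltered traffic.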

What about the interface counters on that Ubiquiti switch, especially the ones for the 25Gbps uplinks: are there any error counters or drops shown?