Disappointing sub Gb throughput using server hardware.

-

@grimson said in Disappointing sub Gb throughput using server hardware.:

Do you use traffic shaping/limiters on your LAN interface?

No, it is disabled.

@stephenw10 said in Disappointing sub Gb throughput using server hardware.:

I would start out with a very basic config and test that. Then add stuff like Squid etc.

I disabled Squid and openVPN, which are the only services I have running, and got the same result.

I have VLANs enabled but I doubt those are having any effect since I was getting the same results before enabling those.

The hardware recommendations you see being echoed around the forums are coming directly from the vendor (PFsense/NetGate), which is why you keep seeing "Server Class Hardware" for anything over 500 Mbit. It's not a "stupid" response. You're hearing it because that's what the vendor recommends to reliably push data thru their product at 1 Gbit throughput.

This is fair. I only mentioned it because the default response whenever someone said that their speed was low was "you need server hardware", which is not helpful, or frankly logical imo. You're not gonna get gbps on a potato, but if you tape several potatoes together then you should be fine.

The fact that your hardware has the specs needed to push 1 Gbit is only half the equation. It's difficult to offer any targeted troubleshooting when no network details are provided.

Provide a network map so we know what's connected where. What kind of switches are you using? Are you virtualized? Are you using VLAN's? If you're using VLANs, are they terminated on PFsense or your switch? etc, etc.

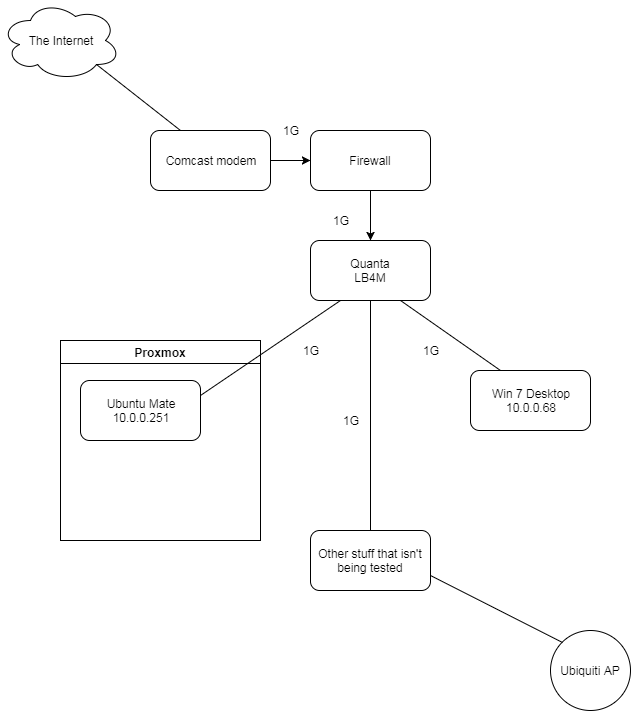

Network map is super simple.

Then there's some of the obvious stuff... anything notable in the syslogs?

Nothing of note

Any in/out errors on any of the PFsense interfaces?

LAN IN : 37,127 of 235,349,599
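For scale, that input error counter is a very small fraction of the total. A quick sketch of the arithmetic, using only the two numbers quoted above (the function name is made up for illustration):

```python
def error_rate_percent(errors: int, packets: int) -> float:
    """Return errors as a percentage of total packets seen."""
    if packets <= 0:
        raise ValueError("packets must be positive")
    return 100.0 * errors / packets


# Counters quoted above for LAN IN: 37,127 errors of 235,349,599 packets.
rate = error_rate_percent(37_127, 235_349_599)
print(f"{rate:.4f}% of inbound packets had errors")
```

A rate this low would not by itself explain throughput far below line speed, though it is worth checking whether the counter keeps climbing during a test.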

I think I got those when I was messing with my equipment. It hasn't changed recently.

Are all interfaces connected at full duplex?

Yes.

Have you tested your cabling?

Unfortunately I do not have equipment to do a proper test but I don't have reason to believe that it is the issue.

Are your NIC's on the same BUS (possible bottleneck)?

Honestly not sure. Could you advise how I can find out?

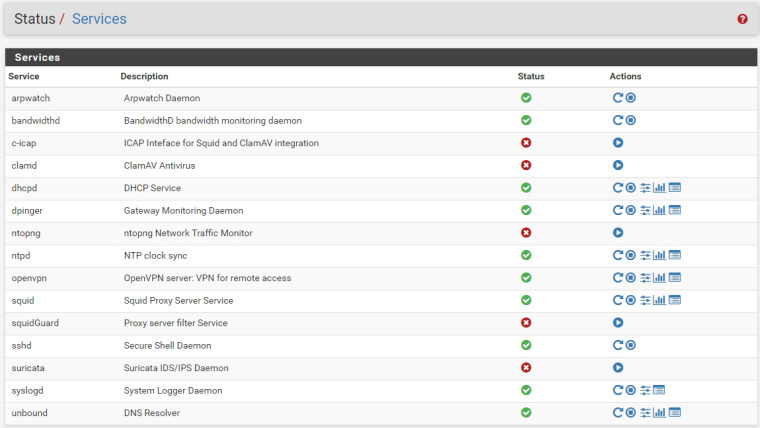

What other packages are you running if any?

What version of PFsense are you running?

My bad. Should have included that.

2.4.4-RELEASE (amd64)

built on Thu Sep 20 09:03:12 EDT 2018

FreeBSD 11.2-RELEASE-p3 -

arpwatch and bandwidthd are packages - what do you mean you're not sure? Just look at the installed packages tab..

-

Where are you testing between on that diagram?

Is that traffic going over VLANs at all?

Really you should start with something very basic and work up if you can. Default config, just WAN and LAN, test between an iperf server on the WAN and a client on the LAN. If you still see less than line speed there then you have a deeper problem to investigate.
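If it helps to record results while working up from the basic config, a minimal sketch like the following could run the LAN-side client and pull out the receiver rate. It assumes iperf3 is installed on the client and relies on its `-J` (JSON) output; the function names and the sample report value are hypothetical, not taken from this thread.

```python
import json
import subprocess


def receiver_mbps(report: dict) -> float:
    """Receiver-side throughput in Mbit/s from an iperf3 JSON report (TCP test)."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def run_iperf3(server: str, seconds: int = 10) -> dict:
    """Run an iperf3 TCP client test against `server` and return the parsed report."""
    result = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-J"],
        capture_output=True, text=True, check=True,
    )
    return json.loads(result.stdout)


# Made-up report fragment, only to show the shape the parser expects.
sample_report = {"end": {"sum_received": {"bits_per_second": 941_000_000.0}}}
print(f"{receiver_mbps(sample_report):.0f} Mbit/s")

# Real use, with the address of the iperf3 server on the WAN side:
# print(receiver_mbps(run_iperf3("<server address>")))
```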

Steve

-

@johnpoz Comments refer to the quote above. The photo was my reply. I didn't think they would be causing an issue so I didn't bother disabling them. If you think they might be an issue I'll just turn them off. I do know they are there. Thankfully I'm not a total idiot.

@stephenw10 said in Disappointing sub Gb throughput using server hardware.:

Where are you testing between on that diagram?

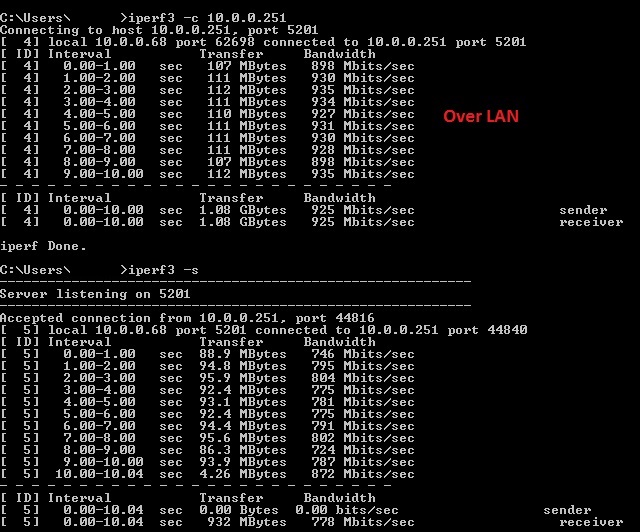

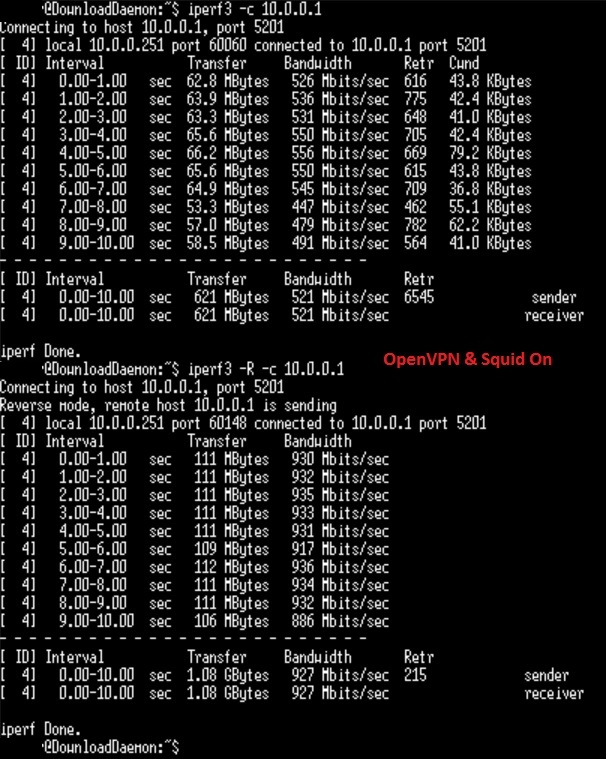

I am testing between a few things there. The LAN tests were between the desktop and Ubuntu VM to sanity check and make sure my hardware wasn't borked before I even got to the firewall. The other tests were conducted between one of those devices and the firewall.

In the case of the through firewall tests I put the Comcast modem router into its normal configuration (LAN) and connected a toughbook to the other side to allow me to test through.

Is that traffic going over VLANs at all?

None that I'm playing with. I have security cameras segmented on a VLAN and they are sending data to the NVR but it is marginal, < 5 mbps. I had this issue long before I set those up however so this isn't a result of that.

Really you should start with something very basic and work up if you can. Default config, just WAN and LAN, test between an iperf server on the WAN and a client on the LAN. If you still see less than line speed there then you have a deeper problem to investigate.

I will completely unplug everything if you want and test it again but I've had this issue since I installed the firewall through rewiring and switch changes.

-

Hmm, OK.

It's less the wiring and additional devices that I would want to remove and more about the firewall config.

That said your LAN to LAN result doesn't look that great. If I run a test between local clients I see:

    steve@steve-MMLP7AP-00 ~ $ iperf3 -c 172.21.16.88
    Connecting to host 172.21.16.88, port 5201
    [  4] local 172.21.16.5 port 33030 connected to 172.21.16.88 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec   112 MBytes   943 Mbits/sec    0   86.3 KBytes
    [  4]   1.00-2.00   sec   112 MBytes   942 Mbits/sec    0    106 KBytes
    [  4]   2.00-3.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   3.00-4.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   4.00-5.00   sec   112 MBytes   942 Mbits/sec    0    106 KBytes
    [  4]   5.00-6.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   6.00-7.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   7.00-8.00   sec   112 MBytes   941 Mbits/sec    0    164 KBytes
    [  4]   8.00-9.00   sec   112 MBytes   942 Mbits/sec    0    164 KBytes
    [  4]   9.00-10.00  sec   112 MBytes   942 Mbits/sec    0    430 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  1.10 GBytes   942 Mbits/sec    0             sender
    [  4]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver

    iperf Done.

Steve

-

Yeah if you're not seeing at least low 900's between clients on the same lan you got something not quite up to snuff.

    C:\tools\iperf3.6_64bit>iperf3.exe -c 192.168.9.9
    warning: Ignoring nonsense TCP MSS 0
    Connecting to host 192.168.9.9, port 5201
    [  5] local 192.168.9.100 port 63702 connected to 192.168.9.9 port 5201
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-1.00   sec   114 MBytes   956 Mbits/sec
    [  5]   1.00-2.00   sec   113 MBytes   947 Mbits/sec
    [  5]   2.00-3.00   sec   112 MBytes   936 Mbits/sec
    [  5]   3.00-4.00   sec   113 MBytes   948 Mbits/sec
    [  5]   4.00-5.00   sec   113 MBytes   948 Mbits/sec
    [  5]   5.00-6.00   sec   113 MBytes   949 Mbits/sec
    [  5]   6.00-7.00   sec   113 MBytes   950 Mbits/sec
    [  5]   7.00-8.00   sec   113 MBytes   949 Mbits/sec
    [  5]   8.00-9.00   sec   112 MBytes   942 Mbits/sec
    [  5]   9.00-10.00  sec   113 MBytes   950 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-10.00  sec  1.10 GBytes   948 Mbits/sec                  sender
    [  5]   0.00-10.00  sec  1.10 GBytes   947 Mbits/sec                  receiver

    iperf Done.

Test from to OLD pos N40L box...
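For reference, the "low 900's" figure is roughly the ceiling for TCP payload over gigabit Ethernet once per-frame overhead is counted. A rough sketch of that arithmetic, assuming IPv4, a standard 1500-byte MTU, no VLAN tag, and minimum-size IP and TCP headers (the function name is only for illustration):

```python
# Bytes on the wire per frame beyond the IP packet itself:
# Ethernet header (14) + FCS (4) + preamble/SFD (8) + inter-frame gap (12).
ETH_OVERHEAD = 14 + 4 + 8 + 12


def max_tcp_goodput_mbps(link_mbps: float = 1000.0, mtu: int = 1500,
                         tcp_option_bytes: int = 0) -> float:
    """Upper bound on TCP payload rate for full-size frames on an Ethernet link."""
    payload = mtu - 20 - 20 - tcp_option_bytes  # minus IPv4 and TCP headers
    wire_bytes = mtu + ETH_OVERHEAD
    return link_mbps * payload / wire_bytes


plain = max_tcp_goodput_mbps()                          # no TCP options
with_ts = max_tcp_goodput_mbps(tcp_option_bytes=12)     # TCP timestamps enabled
print(f"no options: {plain:.1f} Mbit/s, with timestamps: {with_ts:.1f} Mbit/s")
```

Under those assumptions the two bounds come out close to the 942 and 948 Mbit/s averages in the two runs posted above, which may simply reflect whether the TCP timestamp option was in use.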

-

A rule of thumb is dual socket is sub-optimal for firewalls. Inter-socket communications can dramatically increase latency if the CPU processing the interrupt is not the same CPU that the NIC is attached to.

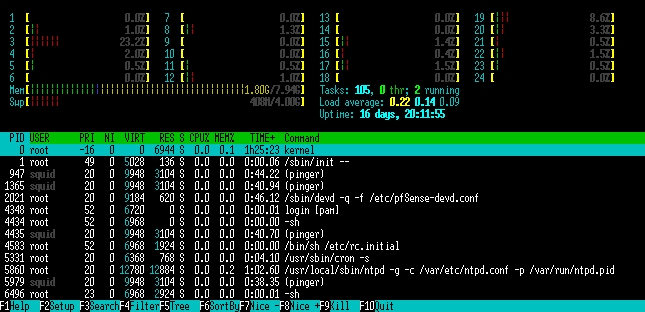

You say 2% cpu usage, but network traffic cannot be perfectly load balanced across all cores. 2% cpu with 24 threads is roughly the same as 50% of a single core, which could cause poor performance. Like saying you have 24 people building a house and wondering why it's going so slowly when you have 23 people sitting around and an over-worked 24th.
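The arithmetic behind that, as a small sketch: it assumes the worst case where all of the reported load lands on one hardware thread, and the function name is made up for illustration.

```python
def worst_case_single_thread_load(total_percent: float, threads: int) -> float:
    """Load on one thread if the whole averaged load were concentrated on it.

    total_percent is the utilisation averaged over all threads (0-100).
    The result is capped at 100 because one thread cannot exceed full use.
    """
    if threads < 1:
        raise ValueError("threads must be at least 1")
    return min(100.0, total_percent * threads)


# 2% average across 24 threads, as in the post above.
load = worst_case_single_thread_load(2.0, 24)
print(f"Up to {load:.0f}% of one thread")
```

Per-thread views such as htop or `top -P` show whether the load is actually concentrated like this, which is a better check than the averaged figure.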

If you can, disable hyper-threading, see if that helps, and preferably pin the interrupts for your NICs to the socket "closest" to them.

-

The LAN to LAN results I'm not worried about. My desktop seems to be getting lower results than I would like and the Ubuntu VM is a VM with a paravirtualized NIC. I don't expect it to hit full speed because the 1gbps NIC is being shared by the hypervisor, and two active VMs. That being said, it's getting a better speed than my desktop when testing the firewall.

I could fire up some other servers as well but it didn't make sense to me to test the network switch because I have been getting the same poor speed to the firewall since I installed it, originally with an AT9924-T as my main switch and now a Quanta LB4M.

@Harvy66 I know how single vs multi thread works. And I get what you're saying. I'll straight up remove the second CPU if you think it will help but it's been this way since I had a single X5550. I upgraded them to L5640s to get more threads and lower wattage. If you look at my original comment you'll see my htop reading. No single thread was having any real load. I'll disable HT and test it again.

-

I'd be amazed if that was causing the sort of throttling you're seeing. But a two interface bare bones config test would prove it.

Steve

-

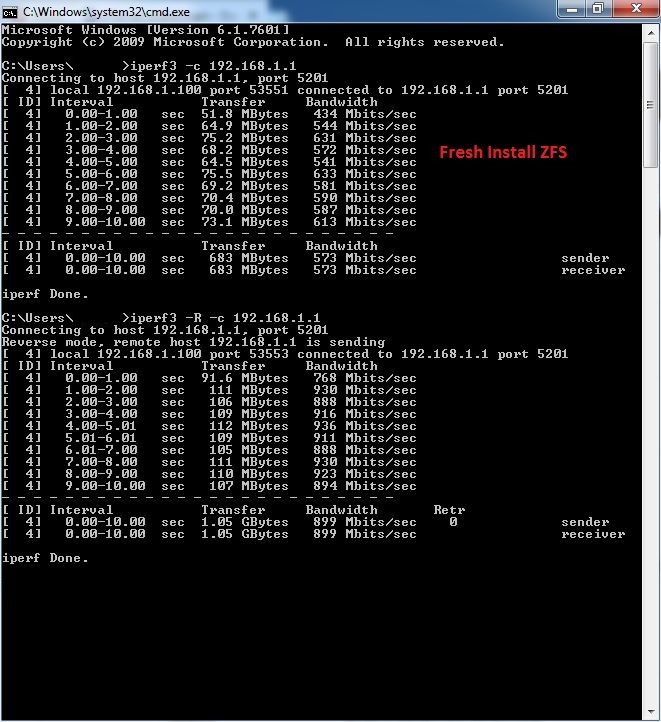

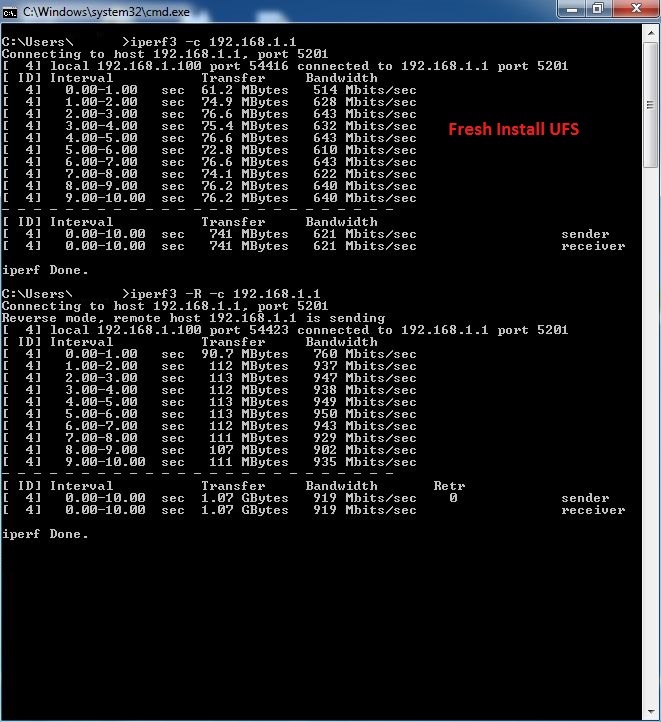

Tell ya what. I've got a fresh SSD. I'll load up a totally bone stock default install tonight and I'll run some tests.

-

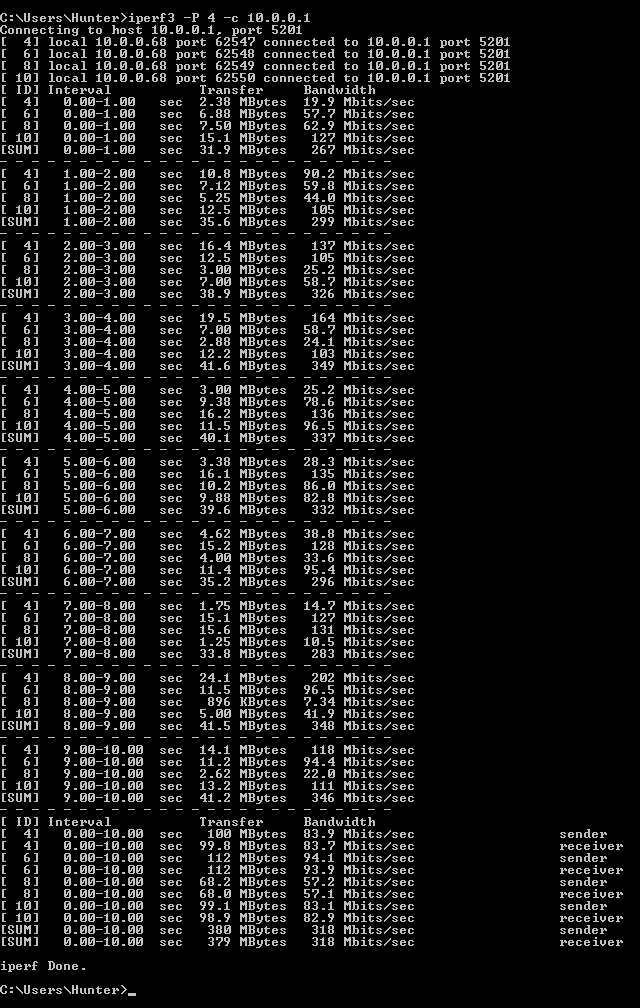

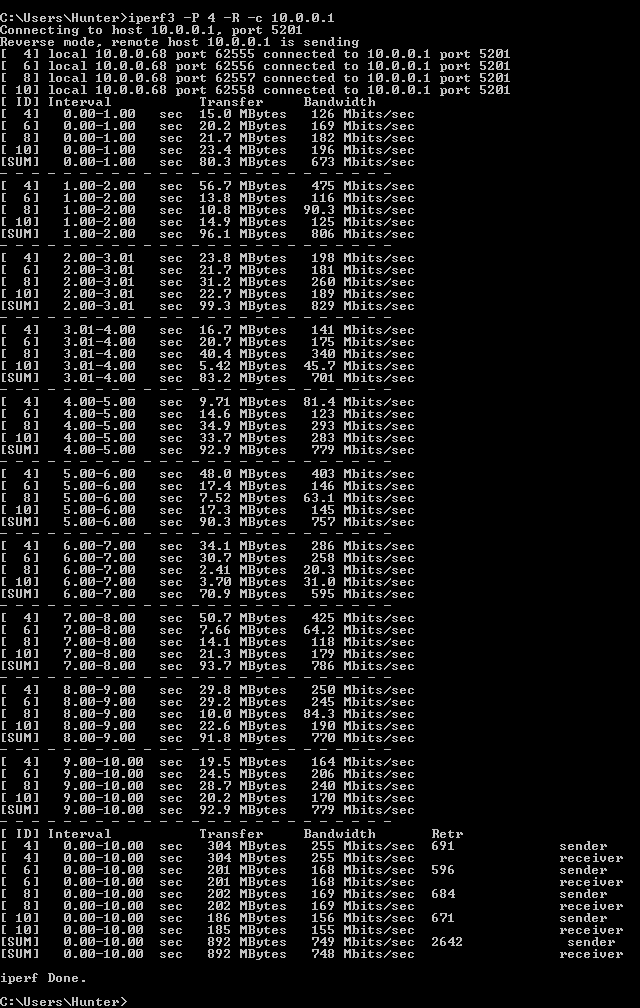

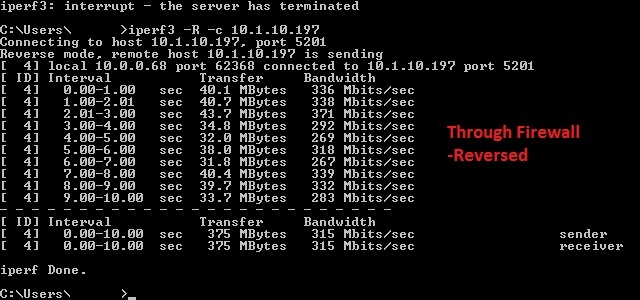

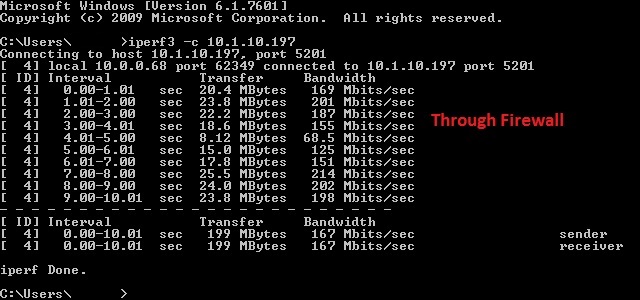

Completely fresh install, updated to 2.4.4 (whatever is current at this moment), then ran pkg install iperf3 and iperf3 -s.

Connected directly from LAN to my Toughbook's NIC.

-

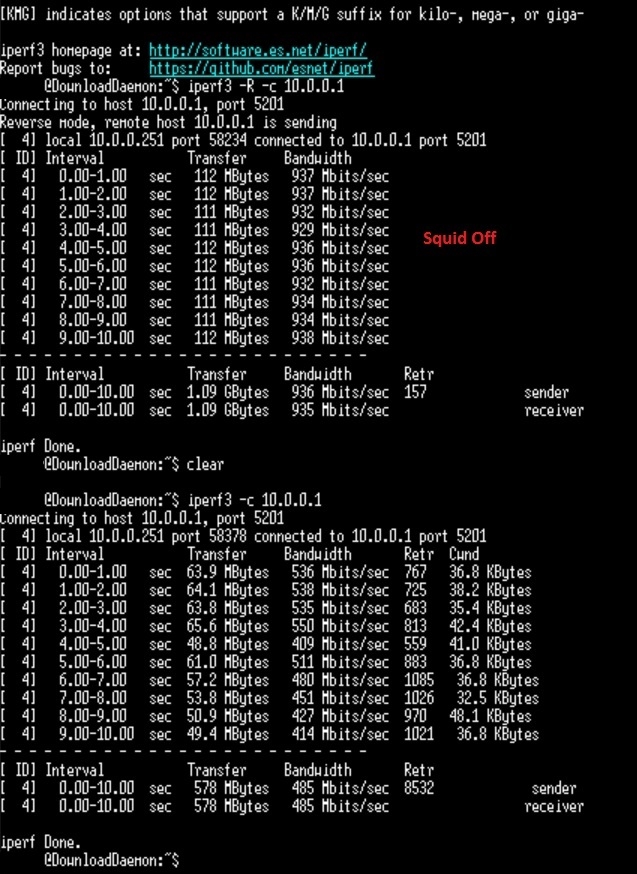

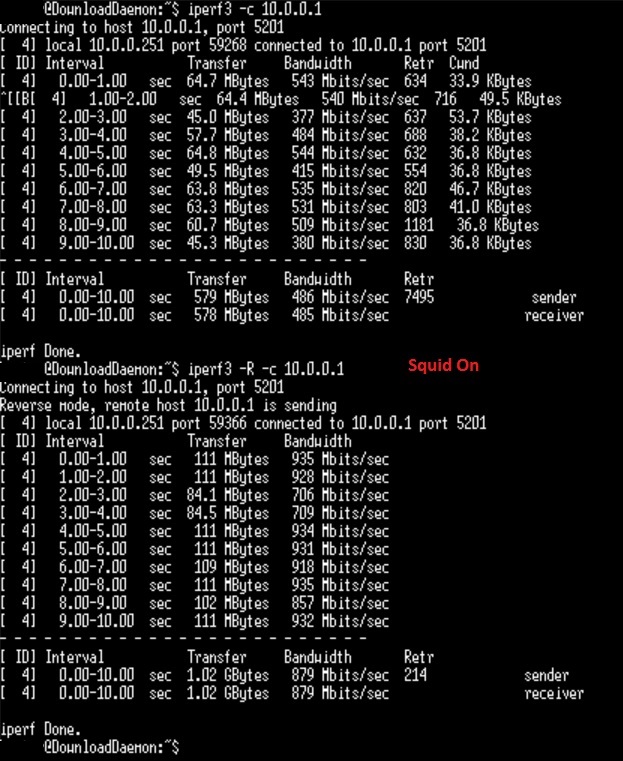

So after some more testing I think the issue may lie with the Intel NIC. At the recommendation of a friend I installed IPFire to test its performance and fought with it for several hours. The iperf tests I was getting were similar but seemed reversed speed wise. Don't remember exactly. It's nearly 6 AM now.

Since the results looked way too similar I tried using a dual Broadcom NIC which oddly refused to get a public IP but handled LAN fine. So I used the onboard NIC as WAN and the Broadcom NIC as LAN. This time the iperf test hit the high 800s to 900s. I haven't yet duplicated this test with Pfsense to confirm it has the same behavior, but I have a feeling this is the answer. Looks like I'll be finding a newer NIC to test. I'll update again when I conduct more tests.

-

That does seem suspect.

The filesystem type should make no difference at all in that test. iperf doesn't read or write from disk. But good to try I guess.

Steve

-

@stephenw10 Yeah I figured. Just thought since it's not exactly the standard I may as well test it. ZFS also has higher CPU and RAM overhead unless I'm mistaken.