Disappointing sub Gb throughput using server hardware.

-

Hmm, OK.

It's less the wiring and additional devices that I would want to remove and more about the firewall config.

That said, your LAN to LAN result doesn't look that great. If I run a test between local clients I see:

    steve@steve-MMLP7AP-00 ~ $ iperf3 -c 172.21.16.88
    Connecting to host 172.21.16.88, port 5201
    [  4] local 172.21.16.5 port 33030 connected to 172.21.16.88 port 5201
    [ ID] Interval           Transfer     Bandwidth       Retr  Cwnd
    [  4]   0.00-1.00   sec   112 MBytes   943 Mbits/sec    0   86.3 KBytes
    [  4]   1.00-2.00   sec   112 MBytes   942 Mbits/sec    0    106 KBytes
    [  4]   2.00-3.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   3.00-4.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   4.00-5.00   sec   112 MBytes   942 Mbits/sec    0    106 KBytes
    [  4]   5.00-6.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   6.00-7.00   sec   112 MBytes   941 Mbits/sec    0    106 KBytes
    [  4]   7.00-8.00   sec   112 MBytes   941 Mbits/sec    0    164 KBytes
    [  4]   8.00-9.00   sec   112 MBytes   942 Mbits/sec    0    164 KBytes
    [  4]   9.00-10.00  sec   112 MBytes   942 Mbits/sec    0    430 KBytes
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bandwidth       Retr
    [  4]   0.00-10.00  sec  1.10 GBytes   942 Mbits/sec    0             sender
    [  4]   0.00-10.00  sec  1.10 GBytes   941 Mbits/sec                  receiver

    iperf Done.

Steve

-

Yeah, if you're not seeing at least low 900s between clients on the same LAN, you've got something not quite up to snuff.

    C:\tools\iperf3.6_64bit>iperf3.exe -c 192.168.9.9
    warning: Ignoring nonsense TCP MSS 0
    Connecting to host 192.168.9.9, port 5201
    [  5] local 192.168.9.100 port 63702 connected to 192.168.9.9 port 5201
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-1.00   sec   114 MBytes   956 Mbits/sec
    [  5]   1.00-2.00   sec   113 MBytes   947 Mbits/sec
    [  5]   2.00-3.00   sec   112 MBytes   936 Mbits/sec
    [  5]   3.00-4.00   sec   113 MBytes   948 Mbits/sec
    [  5]   4.00-5.00   sec   113 MBytes   948 Mbits/sec
    [  5]   5.00-6.00   sec   113 MBytes   949 Mbits/sec
    [  5]   6.00-7.00   sec   113 MBytes   950 Mbits/sec
    [  5]   7.00-8.00   sec   113 MBytes   949 Mbits/sec
    [  5]   8.00-9.00   sec   112 MBytes   942 Mbits/sec
    [  5]   9.00-10.00  sec   113 MBytes   950 Mbits/sec
    - - - - - - - - - - - - - - - - - - - - - - - - -
    [ ID] Interval           Transfer     Bitrate
    [  5]   0.00-10.00  sec  1.10 GBytes   948 Mbits/sec                  sender
    [  5]   0.00-10.00  sec  1.10 GBytes   947 Mbits/sec                  receiver

    iperf Done.

Test from to OLD pos N40L box...
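For anyone wondering why a healthy gigabit link tops out in the 940s rather than at 1000, here is a rough sketch of the arithmetic. It assumes a standard 1500-byte MTU, IPv4, no VLAN tag, and either no TCP options or the 12-byte timestamp option; the function name and defaults are made up for illustration, and whether timestamps were actually enabled in either test above is not known from the output.

```python
# Per-frame Ethernet overhead on the wire, in bytes (assumes no VLAN tag):
# 14 header + 4 FCS + 8 preamble/SFD + 12 inter-frame gap
ETH_OVERHEAD = 14 + 4 + 8 + 12


def tcp_goodput_mbps(link_mbps=1000.0, mtu=1500, tcp_options=0):
    """Estimate the best-case TCP payload rate over Ethernet.

    tcp_options is the number of TCP option bytes per segment
    (12 when TCP timestamps are in use, 0 when they are not).
    """
    payload = mtu - 20 - 20 - tcp_options  # minus IPv4 and TCP headers
    wire_bytes = mtu + ETH_OVERHEAD        # what each frame costs on the wire
    return link_mbps * payload / wire_bytes


print(round(tcp_goodput_mbps(tcp_options=12)))  # with timestamps
print(round(tcp_goodput_mbps(tcp_options=0)))   # without timestamps
```

Under those assumptions the ceiling works out to roughly 941 Mbits/sec with timestamps and 949 without, which is in the same range as the two runs above. A LAN to LAN result well below that points at a host, NIC, or switch problem rather than protocol overhead.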

-

A rule of thumb is dual socket is sub-optimal for firewalls. Inter-socket communications can dramatically increase latency if the CPU processing the interrupt is not the same CPU that the NIC is attached to.

You say 2% CPU usage, but network traffic cannot be perfectly load balanced across all cores. 2% CPU across 24 threads can be the same as roughly 50% of a single core, which could cause poor performance. It's like saying you have 24 people building a house and wondering why it's going so slowly when you have 23 people sitting around and an overworked 24th.
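To put a number on that, here is a quick sketch of the worst case, where the whole aggregate load lands on one logical CPU. The function name is just for illustration, and this is only an upper bound: the per-core view in top or htop is what tells you how the load is really spread.

```python
def busiest_core_upper_bound(total_pct, logical_cpus):
    """Worst-case load on one logical CPU, given the system-wide average.

    Assumes all of the reported load sits on a single logical CPU,
    capped at 100% because one CPU cannot be more than fully busy.
    """
    return min(100.0, total_pct * logical_cpus)


# 2% average across 24 threads
print(busiest_core_upper_bound(2.0, 24))
```

For 2% across 24 threads that comes to 48% of one core, which is where the "roughly 50%" figure comes from.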

If you can, disable hyper-threading, see if that helps, and preferably pin the interrupts for your NICs to the socket "closest" to them.

-

I'm not worried about the LAN to LAN results. My desktop seems to be getting lower results than I would like, and the Ubuntu VM is a VM with a paravirtualized NIC. I don't expect it to hit full speed because the 1 Gbps NIC is being shared by the hypervisor and two active VMs. That being said, it's getting a better speed than my desktop when testing the firewall.

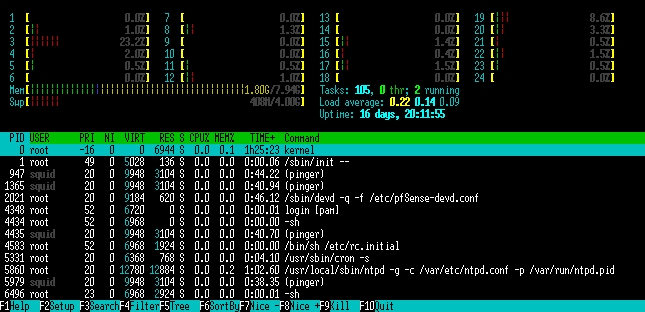

I could fire up some other servers as well, but it didn't make sense to me to test the network switch because I have been getting the same poor speed to the firewall since I installed it, originally with an AT9924-T as my main switch and now a Quanta LB4M.

@Harvy66 I know how single vs multi thread works, and I get what you're saying. I'll straight up remove the second CPU if you think it will help, but it's been this way since I had a single X5550. I upgraded to L5640s to get more threads and lower wattage. If you look at my original comment you'll see my htop reading. No single thread was having any real load. I'll disable HT and test it again.

-

I'd be amazed if that was causing the sort of throttling you're seeing. But a two interface bare bones config test would prove it.

Steve

-

Tell ya what. I've got a fresh SSD. I'll load up a totally bone stock default install tonight and I'll run some tests.

-

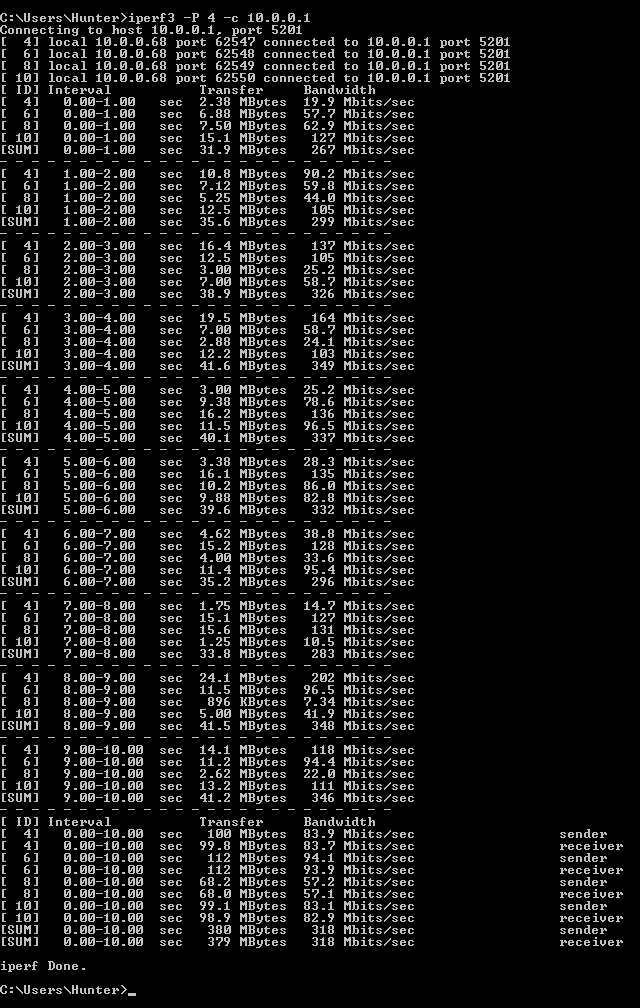

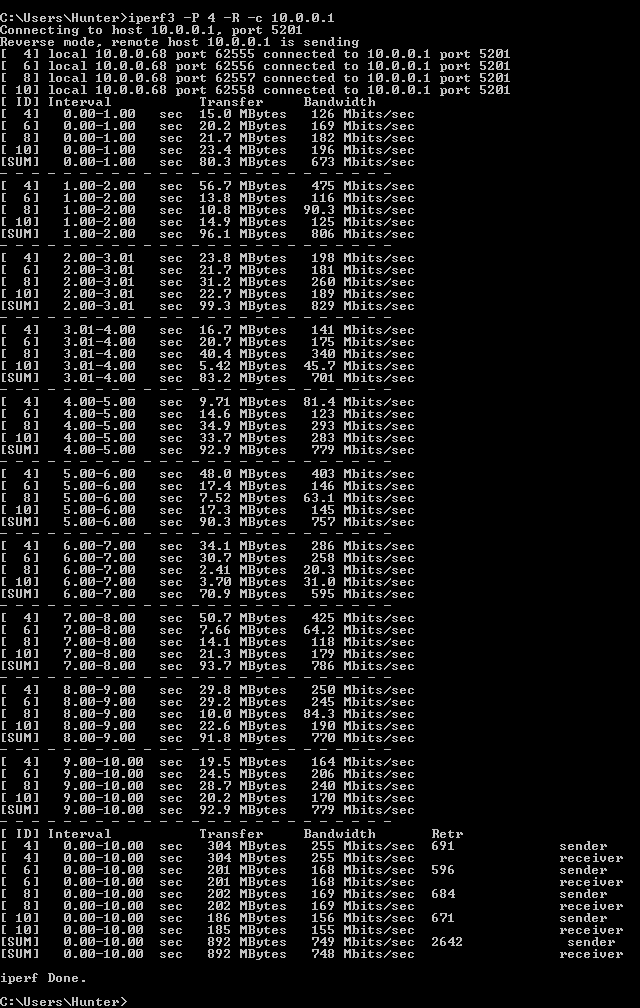

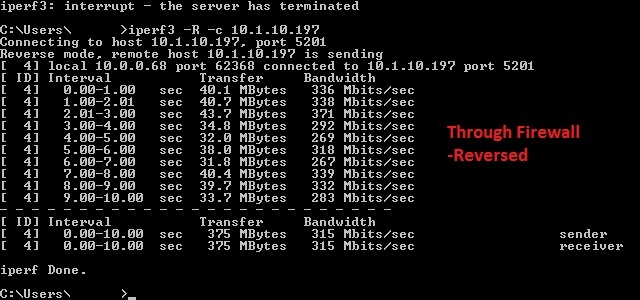

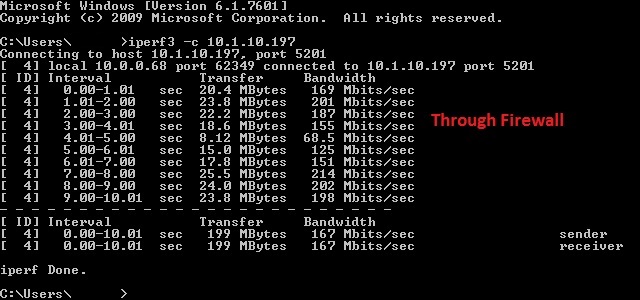

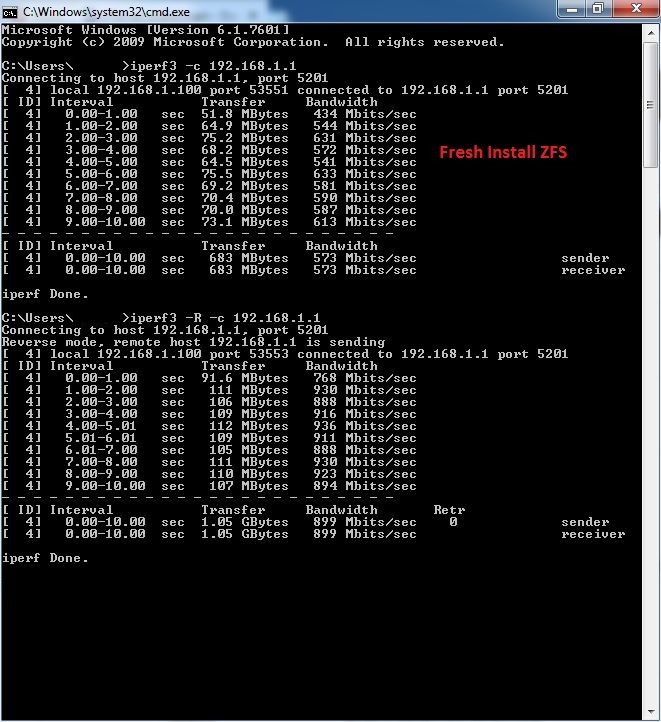

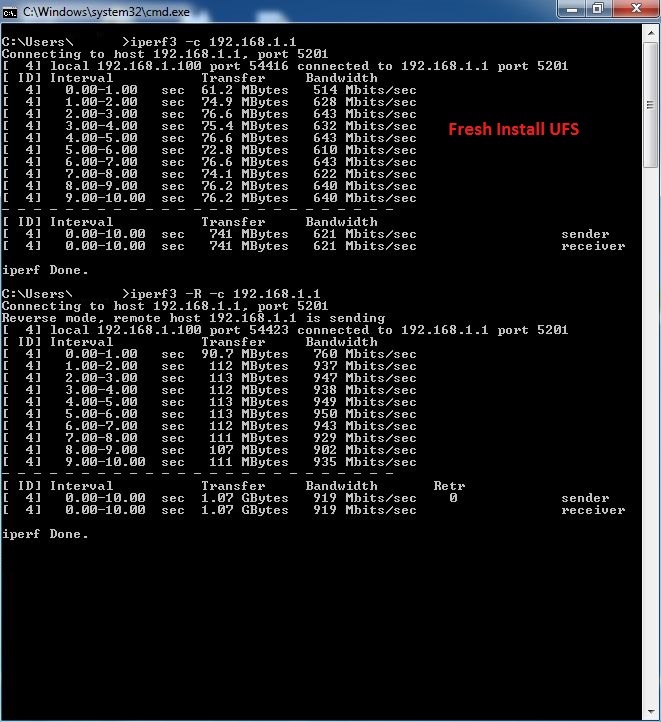

Completely fresh install, updated to 2.4.4 (whatever is current at this moment), then pkg install iperf3 and iperf3 -s.

Connected directly from LAN to my Toughbook's NIC.

-

So after some more testing I think the issue may lie with the Intel NIC. At the recommendation of a friend I installed IPFire to test its performance and fought with it for several hours. The iperf results I was getting were similar but seemed reversed speed-wise. I don't remember exactly. It's nearly 6AM now.

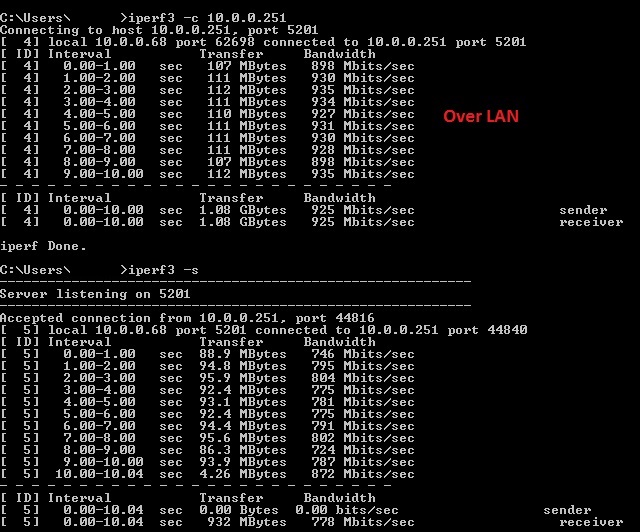

Since the results looked way too similar, I tried using a dual Broadcom NIC, which oddly refused to get a public IP but handled LAN fine. So I used the onboard NIC as WAN and the Broadcom NIC as LAN. This time the iperf test hit the high 800s to 900s. I haven't yet duplicated this test with pfSense to confirm it has the same behavior, but I have a feeling this is the answer. Looks like I'll be finding a newer NIC to test. I'll update again when I conduct more tests.

-

That does seem suspect.

The filesystem type should make no difference at all in that test. iperf doesn't read or write from disk. But good to try I guess.

Steve

-

@stephenw10 Yeah, I figured. Just thought that since it's not exactly the standard I may as well test it. ZFS also has higher CPU and RAM overhead unless I'm mistaken.