PC Engines apu2 experiences

-


@logan5247 said in PC Engines apu2 experiences:

@qinn @Vollans - I also would be interested in hearing about this. I was under the impression ZFS wasn't really useful on a single-disk setup.

I have all my pfSense boxes on ZFS with a single disk.

One day my UPS was going crazy (before she died) and switched the power on and off at short intervals. Once I got a spare UPS in place, pfSense restarted without any complaints. Is this good enough to show the advantages of ZFS?

Regards,

fireodo

-

@logan5247 I’ll get it typed up in a couple of hours. It’s not tricky.

ZFS is a lot more robust than UFS and is definitely worth the effort to use. As said above, a power failure is far less likely to cause data loss, and a hard reboot after a lockup is likewise highly unlikely to lose data. And if you take snapshots before and after major upgrades, you’re in a good place to revert if things go nuts.

-

@fireodo said in PC Engines apu2 experiences:

@logan5247 said in PC Engines apu2 experiences:

@qinn @Vollans - I also would be interested in hearing about this. I was under the impression ZFS wasn't really useful on a single-disk setup.

I have all my pfSense boxes on ZFS with a single disk.

One day my UPS was going crazy (before she died) and switched the power on and off at short intervals. Once I got a spare UPS in place, pfSense restarted without any complaints. Is this good enough to show the advantages of ZFS?

Regards,

fireodo

...of course RAID gives more redundancy; best is more disks using RAID. The problem with a single disk and "copies" is the same as creating an mdadm RAID-1 from two partitions of the same disk: you have data redundancy but not disk redundancy, as a disk failure will cause the loss of both data sets.

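Then there's the fact that ZFS, like btrfs, is copy-on-write, so a sudden power loss leaves the file system consistent (at worst the last few seconds of writes are lost). ZFS should ideally run on a system with ECC memory (the APU2 has this); otherwise you're still not 100% safeguarded against bit errors.

-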

@vollans said in PC Engines apu2 experiences:

@logan5247 I’ll get it typed up in a couple of hours. It’s not tricky.

Sorry, ended up being a couple of days due to events.

Log in via SSH

Check that you're using ZFS and what the pool is called (usually zroot):

```
zfs list
NAME                 USED  AVAIL  REFER  MOUNTPOINT
zroot               2.43G  9.68G    88K  /zroot
zroot/ROOT          1.69G  9.68G    88K  none
zroot/ROOT/default  1.69G  9.68G  1.40G  /
zroot/tmp            496K  9.68G   496K  /tmp
zroot/var            751M  9.68G   401M  /var
```

Turn on listing of snapshots just to make life easier:

```
zpool set listsnapshots=on zroot
```

Do your first snapshot. The bit after the @ sign is your name for the snapshot. I usually do a base, then name later ones by date or installed version:

```
zfs snapshot zroot@21-03-05
zfs snapshot zroot/ROOT@21-03-05
zfs snapshot zroot/ROOT/default@21-03-05
zfs snapshot zroot/var@21-03-05
```

There is no point in snapshotting the tmp directory. It is normal to get no feedback from those commands.
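The four commands above can be wrapped in a small helper so no dataset gets forgotten. This is a minimal sketch, assuming the pool is called zroot and that every filesystem except zroot/tmp should be snapshotted. The function names, the `ZFS`/`POOL` variables and the date-based default name are hypothetical conveniences, not anything pfSense ships. It discovers datasets with `zfs list -H -o name -t filesystem -r`, so it would also pick up datasets not shown above.

```sh
#!/bin/sh
# Sketch: snapshot every filesystem in the pool except zroot/tmp.
# Assumptions (hypothetical): pool "zroot"; ZFS can be overridden, e.g. ZFS=echo for a dry run.
ZFS="${ZFS:-zfs}"
POOL="${POOL:-zroot}"

# Print filesystem dataset names in the pool, one per line, minus tmp.
list_datasets() {
    $ZFS list -H -o name -t filesystem -r "$POOL" | grep -v "^$POOL/tmp\$"
}

# Snapshot each dataset under the same name; default name is today's date (e.g. 20210308).
snapshot_all() {
    name="${1:-$(date +%Y%m%d)}"
    # grep exits non-zero when it selects nothing, so an empty/failed listing is caught here.
    datasets=$(list_datasets) || { echo "no datasets found in $POOL" >&2; return 1; }
    for ds in $datasets; do
        $ZFS snapshot "$ds@$name" || return 1
    done
}
```

Usage, from a POSIX `sh` session (run `sh` first if your login shell is tcsh): `. ./zfs-helpers.sh; snapshot_all 21-03-05`, where the file name is just an example.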

Check that you have a snapshot:

```
zfs list
NAME                          USED  AVAIL  REFER  MOUNTPOINT
zroot                        2.43G  9.68G    88K  /zroot
zroot@21-03-05                   0      -    88K  -
zroot/ROOT                   1.69G  9.68G    88K  none
zroot/ROOT@21-03-05              0      -    88K  -
zroot/ROOT/default           1.69G  9.68G  1.40G  /
zroot/ROOT/default@21-03-05      0      -  1.40G  -
zroot/tmp                     496K  9.68G   496K  /tmp
zroot/var                     752M  9.68G   401M  /var
zroot/var@21-03-05           1.48M      -   401M  -
```

Do a snapshot whenever you make major changes, such as going from 2.4.5 to 2.5. For normal config changes it would be overkill, as the config backup is enough.
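Instead of eyeballing the `zfs list` output, the check can be scripted. A minimal sketch, assuming the four datasets used in this thread; `check_snapshots` and the `DATASETS` variable are hypothetical. It relies on `zfs list` exiting non-zero when the named snapshot does not exist.

```sh
#!/bin/sh
# Sketch: confirm a snapshot with the given name exists on every dataset.
# Assumptions (hypothetical): datasets as listed above; ZFS overridable for testing.
ZFS="${ZFS:-zfs}"
DATASETS="${DATASETS:-zroot zroot/ROOT zroot/ROOT/default zroot/var}"

check_snapshots() {
    name="$1"
    missing=0
    for ds in $DATASETS; do
        # zfs list fails ("dataset does not exist") if the snapshot is missing
        if ! $ZFS list -H -o name -t snapshot "$ds@$name" >/dev/null 2>&1; then
            echo "missing: $ds@$name"
            missing=1
        fi
    done
    return "$missing"
}
```

Running `check_snapshots 21-03-05` prints nothing and returns 0 when the full set is present.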

You can remove your most recent snapshot with these commands, where the bit after the @ is the snapshot name:

```
zfs destroy zroot/var@21-03-05
zfs destroy zroot/ROOT/default@21-03-05
zfs destroy zroot/ROOT@21-03-05
zfs destroy zroot@21-03-05
```

If disaster strikes (otherwise known as 2.5), you can roll back to the working 2.4.5-p1 state by restoring the snapshot:

```
zfs rollback zroot/var@21-03-05
zfs rollback zroot/ROOT/default@21-03-05
zfs rollback zroot/ROOT@21-03-05
zfs rollback zroot@21-03-05
shutdown -r now
```

The final line is vital! You MUST reboot immediately after rolling back the whole OS, otherwise Bad Things Happen (TM).

There is also method in my madness in doing the rollback with /var first: if the var rollback fails, it's not the end of the world, and you can work out what you did wrong without putting the whole system at risk.
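The same rollback, /var first and reboot last, can be sketched as a function that stops before touching the OS datasets if the /var step fails. This is a sketch under assumptions: the dataset order is the one used in this thread, and `rollback_all`, `ZFS` and `REBOOT` are hypothetical names. One detail worth knowing: plain `zfs rollback` only goes back to the most recent snapshot; rolling back to an older one needs `-r`, which destroys the newer snapshots, so the sketch deliberately does not pass it.

```sh
#!/bin/sh
# Sketch: roll back one snapshot set, zroot/var first, then reboot.
# Assumptions (hypothetical): dataset order from this thread; ZFS and REBOOT overridable.
ZFS="${ZFS:-zfs}"
REBOOT="${REBOOT:-shutdown -r now}"
ROLLBACK_ORDER="zroot/var zroot/ROOT/default zroot/ROOT zroot"

rollback_all() {
    name="$1"
    [ -n "$name" ] || { echo "usage: rollback_all SNAPNAME" >&2; return 2; }
    for ds in $ROLLBACK_ORDER; do
        # Without -r this refuses if newer snapshots exist (only the latest can be restored).
        # Stops at the first failure; if that happens after zroot/ROOT/default, reboot by hand.
        $ZFS rollback "$ds@$name" || { echo "rollback of $ds failed, not rebooting" >&2; return 1; }
    done
    $REBOOT   # mandatory after rolling back the running OS
}
```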

-

@vollans Thank you for the writeup. It's good to know this works.

Now please try pool checkpointing and let us know how it goes.

You'll need to boot from the installer and use the rescue image to recover.

From the zpool manual page:

Pool checkpoint

Before starting critical procedures that include destructive actions (e.g. zfs destroy), an administrator can checkpoint the pool's state and in the case of a mistake or failure, rewind the entire pool back to the checkpoint. Otherwise, the checkpoint can be discarded when the procedure has completed successfully.

A pool checkpoint can be thought of as a pool-wide snapshot and should be used with care as it contains every part of the pool's state, from properties to vdev configuration. Thus, while a pool has a checkpoint certain operations are not allowed. Specifically, vdev removal/attach/detach, mirror splitting, and changing the pool's guid. Adding a new vdev is supported but in the case of a rewind it will have to be added again. Finally, users of this feature should keep in mind that scrubs in a pool that has a checkpoint do not repair checkpointed data.

To create a checkpoint for a pool:

# zpool checkpoint pool

To later rewind to its checkpointed state, you need to first export it and then rewind it during import:

# zpool export pool

# zpool import --rewind-to-checkpoint pool

To discard the checkpoint from a pool:

# zpool checkpoint -d pool
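Applied to pfSense's root pool, the manual-page workflow might look like the sketch below. It only wraps the documented commands; the function names and the `ZPOOL`/`POOL` variables are hypothetical. Because zroot is the pool the system runs from, it cannot be exported while in use, so the rewind has to run from a rescue environment. The extra import flags there are an assumption for that case: `-f` forces import of a pool last used by another system, `-N` imports it without mounting anything.

```sh
#!/bin/sh
# Sketch of the pool-checkpoint workflow for the root pool.
# Assumptions (hypothetical): pool "zroot"; ZPOOL overridable for testing.
ZPOOL="${ZPOOL:-zpool}"
POOL="${POOL:-zroot}"

# 1. On the running system, before the upgrade (a pool holds at most one checkpoint).
checkpoint_create() {
    $ZPOOL checkpoint "$POOL"
}

# 2a. Upgrade went fine: discard the checkpoint so it stops pinning space.
checkpoint_discard() {
    $ZPOOL checkpoint -d "$POOL"
}

# 2b. Upgrade went wrong: run from a rescue shell, not the running system.
checkpoint_rewind() {
    $ZPOOL import -f -N --rewind-to-checkpoint "$POOL" || return 1
    $ZPOOL export "$POOL"
}
```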

-

@dem I'll give that a go when I've got some spare time next week or when an updated 2.5 comes out.

-

@vollans I did a quick test in a virtual machine to figure out what the commands would be. This appears to work:

On a running 2.4.5-p1 system:

```
zpool checkpoint zroot
```

Booted from the 2.5.0 installer and in the Rescue Shell:

```
zpool import -f -N --rewind-to-checkpoint zroot
zpool export zroot
poweroff
```

-

@vollans Thanks for this write up! I am installed on UFS but may go back and switch to ZFS now. I'm a Linux guy, so ZFS has always been out of my wheelhouse.

When I do the initial setup and pfSense is working, do I:

- Perform a snapshot then and leave it around for years and years? Is this safe? I'm thinking like a VM snapshot where you don't want to have a snapshot hang around for long periods of time.

- Only perform snapshots before an upgrade, do the upgrade, then remove the snapshot after it's working?

Thanks again!

-

@logan5247 said in PC Engines apu2 experiences:

@vollans Thanks for this write up! I am installed on UFS but may go back and switch to ZFS now. I'm a Linux guy, so ZFS has always been out of my wheelhouse.

This is an issue mainly because UFS in pfSense handles recovery so incredibly badly. I don't fully understand why something as heavy as ZFS seems to be the only solution.

-

@logan5247 I don’t see any inherent danger in leaving the snapshot hanging around, unless you are really tight on space. Snapshots only record changed blocks, so it’s not a huge thing. Personally, I take snapshots at these points:

- Base OS fully installed and patched, before any fiddling - that way if you screw up you can roll back and undo your “magic” that was more Weasley than Granger.
- Once fully tweaked and working, so you’ve got a known working system to roll back to.
- Just before a major upgrade.

Here’s my snapshot catalogue:

```
NAME                              USED  AVAIL  REFER  MOUNTPOINT
zroot                            2.90G  9.21G    88K  /zroot
zroot@210219                         0      -    88K  -
zroot@2-4-5p1-base                   0      -    88K  -
zroot@2-4-5-p1                       0      -    88K  -
zroot/ROOT                       2.14G  9.21G    88K  none
zroot/ROOT@210219                    0      -    88K  -
zroot/ROOT@2-4-5p1-base              0      -    88K  -
zroot/ROOT@2-4-5-p1                  0      -    88K  -
zroot/ROOT/default               2.14G  9.21G  1.84G  /
zroot/ROOT/default@210219         146M      -  1.14G  -
zroot/ROOT/default@2-4-5p1-base  36.3M      -  1.43G  -
zroot/ROOT/default@2-4-5-p1      36.5M      -  1.43G  -
zroot/tmp                         512K  9.21G   512K  /tmp
zroot/var                         776M  9.21G   396M  /var
zroot/var@210219                  183M      -   527M  -
zroot/var@2-4-5p1-base           52.1M      -   409M  -
zroot/var@2-4-5-p1               61.5M      -   434M  -
```

The space used as it goes along is tiny. The upgrade to 2.5 that I ended up rolling back from only used about 900MB IIRC.
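To see how much space your own snapshots pin, and to prune a set you no longer need, something like this sketch could help. `zfs list -o space` adds a USEDSNAP column (space that destroying all of a dataset's snapshots would free), and `zfs destroy -nv` is a dry run that only reports what would be destroyed. The function names, `DRY_RUN` switch and dataset list are hypothetical.

```sh
#!/bin/sh
# Sketch: report snapshot space usage and prune one snapshot set.
# Assumptions (hypothetical): pool "zroot", datasets from this thread; ZFS overridable.
ZFS="${ZFS:-zfs}"
POOL="${POOL:-zroot}"
DATASETS="${DATASETS:-zroot/var zroot/ROOT/default zroot/ROOT zroot}"

# USEDSNAP column = space held only by snapshots of each dataset.
snapshot_usage() {
    $ZFS list -o space -r "$POOL"
}

# Destroy one snapshot set; with DRY_RUN=1, -nv only reports what would go.
prune_snapshots() {
    name="$1"
    flags=""
    [ "${DRY_RUN:-0}" = 1 ] && flags="-nv"
    for ds in $DATASETS; do
        $ZFS destroy $flags "$ds@$name" || return 1   # $flags unquoted: empty adds no argument
    done
}
```

Running it with `DRY_RUN=1` first is a cheap way to confirm the names are right before anything is deleted.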

-


Without doing manual snapshots, is there an advantage of using ZFS over the old UFS? I am on ZFS on a single SSD and I forgot what its advantage is when I posted here a few years ago.

-

@kevindd992002 Better resilience if you have a crash. UFS has a horrid habit of collapsing in an unrecoverable heap, ZFS is far more likely to recover gracefully.

-

@kevindd992002 said in PC Engines apu2 experiences:

Without doing manual snapshots, is there an advantage of using ZFS over the old UFS? I am on ZFS on a single SSD and I forgot what its advantage is when I posted here a few years ago.

...of course RAID with ZFS gives more redundancy; best is more disks using RAID. The problem with a single disk and "copies" is the same as creating an mdadm RAID-1 from two partitions of the same disk: you have data redundancy but not disk redundancy, as a disk failure will cause the loss of both data sets.

Comparing UFS with ZFS: ZFS, like btrfs, is copy-on-write, so a sudden power loss leaves the file system consistent (at worst the last few seconds of writes are lost). ZFS should ideally run on a system with ECC memory (the APU2 has this); otherwise you're still not 100% safeguarded against bit errors.
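The "copies" mentioned above is a per-dataset ZFS property. A minimal sketch, assuming you want two copies of the OS dataset on a single disk; `set_copies` and the `DATASET` variable are hypothetical. Keep in mind that the setting only applies to data written after the change and multiplies the space that data takes; it helps a scrub repair bad blocks, not survive a dead disk.

```sh
#!/bin/sh
# Sketch: keep N copies of every newly written block of one dataset on the same disk.
# Protects against bad sectors / bit rot, NOT against the disk dying.
# Assumptions (hypothetical): dataset zroot/ROOT/default; ZFS overridable for testing.
ZFS="${ZFS:-zfs}"
DATASET="${DATASET:-zroot/ROOT/default}"

set_copies() {
    n="${1:-2}"
    case "$n" in
        1|2|3) ;;                                   # ZFS accepts 1, 2 or 3
        *) echo "copies must be 1, 2 or 3" >&2; return 2 ;;
    esac
    # Existing blocks keep their old copy count; only new writes are affected.
    $ZFS set copies="$n" "$DATASET" && $ZFS get -H -o value copies "$DATASET"
}
```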

-

@vollans Sorry to keep asking questions.

- If I snapshot zroot, do I need to snapshot zroot/ROOT and zroot/ROOT/default as well? Does zroot not include everything else?

- Let's say I did a snapshot, made a change, and successfully rolled back:

```
zfs rollback zroot/var@20210308
zfs rollback zroot/ROOT/default@20210308
zfs rollback zroot/ROOT@20210308
zfs rollback zroot@20210308
shutdown -r now
```

And now my zfs list looks like this (after the rollback):

```
NAME                          USED  AVAIL  REFER  MOUNTPOINT
zroot                         674M  12.4G    96K  /zroot
zroot@20210308                   0      -    96K  -
zroot/ROOT                    665M  12.4G    96K  none
zroot/ROOT@20210308              0      -    96K  -
zroot/ROOT/default            665M  12.4G   665M  /
zroot/ROOT/default@20210308   388K      -   665M  -
zroot/tmp                     144K  12.4G   144K  /tmp
zroot/var                    7.02M  12.4G  6.62M  /var
zroot/var@20210308            400K      -  6.62M  -
```

How do I know which set of file systems I'm running on? Is there something like an "active" marker in zfs list?

-

@logan5247 this really should get its own ZFS thread; it has nothing to do with the APU2.

-

@vamike I agree, but quickly in summary: you're always running the one without the @ sign; that's the current live version. You can see that the size of the "backup" (snapshot) of zroot is nothing, because nothing in zroot itself has changed since it was taken, whereas zroot/ROOT/default's snapshot is bigger. zroot doesn't include the other datasets (effectively "partitions"), so each one needs its own snapshot.
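Both questions can also be answered from the command line. A minimal sketch, assuming pool zroot; the function names and variables are hypothetical. `zfs list -t filesystem` hides snapshots, so only the live datasets show, and the pool's `bootfs` property names the dataset the loader boots as / (typically zroot/ROOT/default). `zfs snapshot -r` snapshots a dataset and all its descendants atomically under one name; note it would also include zroot/tmp.

```sh
#!/bin/sh
# Sketch: show the live datasets, and snapshot the whole tree in one command.
# Assumptions (hypothetical): pool "zroot"; ZFS/ZPOOL overridable for testing.
ZFS="${ZFS:-zfs}"
ZPOOL="${ZPOOL:-zpool}"
POOL="${POOL:-zroot}"

# Live datasets are the ones without an "@"; -t filesystem leaves snapshots out.
show_live() {
    $ZFS list -t filesystem -o name,mounted,mountpoint -r "$POOL"
    $ZPOOL get -H -o value bootfs "$POOL"   # dataset booted as /
}

# -r: snapshot POOL and every descendant atomically (zroot/tmp included).
snapshot_tree() {
    $ZFS snapshot -r "$POOL@$1"
}
```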

-

@dem said in PC Engines apu2 experiences:

@vollans I did a quick test in a virtual machine to figure out what the commands would be. This appears to work:

On a running 2.4.5-p1 system:

```
zpool checkpoint zroot
```

Booted from the 2.5.0 installer and in the Rescue Shell:

```
zpool import -f -N --rewind-to-checkpoint zroot
zpool export zroot
poweroff
```

How did you boot from the 2.5.0 installer on a virtual pfSense machine and get to the Rescue Shell?

-

@qinn In VirtualBox I put the file

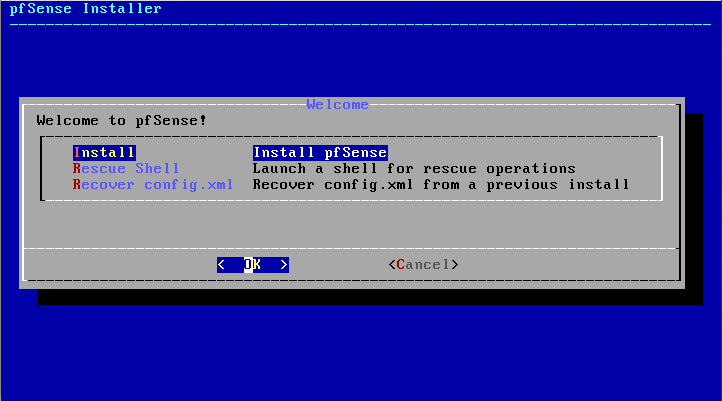

pfSense-CE-2.5.0-RELEASE-amd64.isoin the virtual optical drive and booted to this screen, where I selected Rescue Shell:

-

@dem Thanks for the quick reply, I understand that.

What I would like to know is how you get from here to the virtual pfSense install, since that machine is not running, and access the checkpoint you made. Btw, I am using VM Workstation!