Average writes to Disk

-

@andyrh said in Average writes to Disk:

Extra space will give you a longer life and more room for TRIM to work with, and at today's prices it is worth the investment IMHO.

I understand all that very well - it's the researcher in me that wants to find out what the real cause of all that is! Eliminating the symptoms doesn't eliminate the illness (if it is one) ;-)

-

@fireodo said in Average writes to Disk:

it's the researcher in me that wants to find out what the real cause of all that is!

Agreed.

Not everyone does that. I like to know how it works too.

-

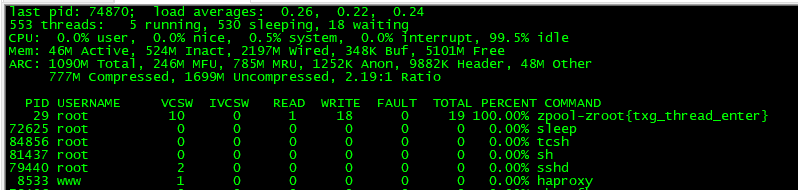

@johnpoz Does this process tell you (or anyone else) anything?

zpool-zroot{txg_thread_enter}

This process does a lot of writing when I use

top -SH -o write (and then press "m" to switch to the I/O display)

-

I see that pop up every few seconds on mine as well.

But I have no idea what it is or does, to be honest..

Maybe a ZFS guru will chime in - that sure isn't me ;)

-

@johnpoz said in Average writes to Disk:

But I have no idea what it is or does to be honest..

I googled it but did not find anything conclusive - that's why I was asking.

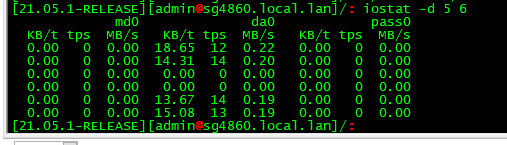

Would you show me your output of iostat -d 5 6?

-

Here you go - but I don't have an SSD, this is on a Netgate SG-4860.

-

@johnpoz said in Average writes to Disk:

Here you go - but I don't have an SSD, this is on a Netgate SG-4860.

Thanks a lot - your values are similar to mine! Are you also using ZFS?

-

Yeah, ZFS.. Like I said, I agree that 20GB a day seems like a lot - if you're doing your math right, etc. But even at that rate, a typical SSD should still have a long life..

My desktop model SG-4860 has eMMC, not an SSD.. which also has a limited write life..
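To sanity-check a figure like 20GB a day, an average write rate can be turned into a daily volume. A minimal sh/awk sketch, assuming the rate is given in KiB/s as zpool iostat prints it (ZFS's human-readable output counts K as 1024 bytes) and reporting decimal GB; the helper name is made up for illustration:

```sh
# Hypothetical helper: convert an average write rate in KiB/s into
# decimal gigabytes (10^9 bytes) written per day.
kib_per_s_to_gb_per_day() {
    # rate (KiB/s) * 1024 bytes/KiB * 86400 s/day / 10^9 bytes/GB
    awk -v rate="$1" 'BEGIN { printf "%.1f\n", rate * 1024 * 86400 / 1e9 }'
}

kib_per_s_to_gb_per_day 227
```

With 227 (the since-boot write bandwidth in the SG-4860's zpool iostat output in this thread) it prints 20.1, so a steady ~227K/s does add up to roughly 20 GB per day.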

-

@johnpoz said in Average writes to Disk:

My desktop model SG-4860 has eMMC, not an SSD.. which also has a limited write life..

Is that eMMC replaceable?

-

I don't think so - not easily... But I think something is missing here about what is actually being written.

So from my understanding, zpool iostat shows the total since boot..

[21.05.1-RELEASE][admin@sg4860.local.lan]/: zpool iostat

capacity operations bandwidth

pool alloc free read write read write

zroot 969M 25.1G 0 10 645 227K

My box has been up for days.. Since I updated it to 21.05.1

Uptime 6 Days 02 Hours 12 Minutes 47 Seconds

Edit: Well, that can't be since boot... Not exactly sure what that shows, to be honest.. But here is what you get if you have it report every 2 seconds:

[21.05.1-RELEASE][admin@sg4860.local.lan]/: zpool iostat zroot 2

capacity operations bandwidth

pool alloc free read write read write

zroot 970M 25.1G 0 10 645 227K

zroot 970M 25.1G 0 0 0 0

zroot 968M 25.1G 0 34 0 552K

zroot 968M 25.1G 0 0 0 0

zroot 967M 25.1G 0 35 0 624K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 38 0 532K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 33 0 602K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 34 0 520K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 33 0 618K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 33 0 531K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 33 0 621K

zroot 967M 25.1G 0 0 0 0

zroot 967M 25.1G 0 7 0 114K

zroot 967M 25.1G 0 24 0 415K

zroot 967M 25.1G 0 0 0 0

-

@johnpoz Here is my output - I'm also no ZFS expert!

As far as I can see, not much different ...
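One way to make "not much different" concrete is to average the per-interval rows of zpool iostat <pool> <interval>. The first row of that output is the average since boot (the OpenZFS zpool-iostat(8) manual says the first report is always since boot), so a fair comparison skips it. A minimal awk sketch, assuming the default human-readable units (K/M/G as powers of 1024, bare numbers as bytes); avg_interval_write_kib is a made-up name:

```sh
# Hypothetical helper: read `zpool iostat <pool> <interval>` output on stdin
# and print the mean write bandwidth (KiB/s) over the interval rows,
# skipping the first row, which is the average since boot.
avg_interval_write_kib() {
    awk -v pool="${1:-zroot}" '
        # Convert a ZFS human-readable value (e.g. 552K, 1.2M) to KiB.
        function to_kib(v,    num, unit) {
            num = v + 0                      # leading numeric part
            unit = substr(v, length(v), 1)
            if (unit == "K") return num
            if (unit == "M") return num * 1024
            if (unit == "G") return num * 1024 * 1024
            return num / 1024                # no suffix: plain bytes
        }
        # Data rows start with the pool name; column 7 is write bandwidth.
        $1 == pool {
            if (++rows == 1) next            # skip the since-boot row
            sum += to_kib($7)
            n++
        }
        END {
            if (n > 0) printf "%.1f\n", sum / n
            else print "no interval samples"
        }
    '
}

# Example: 30 two-second samples of zroot
# zpool iostat zroot 2 30 | avg_interval_write_kib zroot
```

Fed the SG-4860 output above (the 23 two-second rows after the 227K since-boot row), it prints 223.0, which is close to the since-boot average.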

zpool iostat

capacity operations bandwidth

pool alloc free read write read write

zroot 808M 11.7G 0 12 319 214K

zpool iostat zroot 2

capacity operations bandwidth

pool alloc free read write read write

zroot 807M 11.7G 0 12 319 214K

zroot 808M 11.7G 0 35 0 623K

zroot 808M 11.7G 0 30 0 432K

zroot 808M 11.7G 0 1 0 8.00K

zroot 808M 11.7G 0 0 0 0

zroot 807M 11.7G 0 36 0 535K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 32 0 442K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 2 0 28.0K

zroot 807M 11.7G 0 35 0 618K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 32 0 420K

zroot 807M 11.7G 0 3 0 22.0K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 35 0 486K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 32 0 442K

zroot 807M 11.7G 0 0 0 7.99K

zroot 807M 11.7G 0 0 0 5.98K

zroot 807M 11.7G 0 32 0 442K

zroot 807M 11.7G 0 0 0 0

zroot 806M 11.7G 0 1 0 14.0K

zroot 806M 11.7G 0 2 0 28.0K

zroot 806M 11.7G 0 1 0 14.0K

zroot 807M 11.7G 0 33 0 442K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 31 0 428K

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 0 0 0

zroot 807M 11.7G 0 10 0 623K

zroot 808M 11.7G 0 33 0 547K

zroot 808M 11.7G 0 35 0 498K

zroot 808M 11.7G 0 1 0 14.0K

-

SG-3100 - 21.05.1 - pfBlockerNG DNSBL Python mode enabled

iostat -d 5 6

-

@mcury I guess FS is UFS and not ZFS ...

-

@fireodo said in Average writes to Disk:

@mcury I guess FS is UFS and not ZFS ...

Indeed, UFS here, noatime in fstab

-

@mcury said in Average writes to Disk:

@fireodo said in Average writes to Disk:

@mcury I guess FS is UFS and not ZFS ...

Indeed, UFS here, noatime in fstab

So it seems to be crystallizing that ZFS is the problem ...

-

@mcury said in Average writes to Disk:

SG-3100 - 21.05.1 - pfblockerng dnsbl python mode enabled

iostat -d 5 6

This is really interesting because your box does not suffer from sustained writes by Unbound (caused by pfBlockerNG in Python mode).

That would suggest it's a setting or configuration detail that causes the issue. I wonder what that might be. I think my setup is fairly default, but I have pfBlockerNG creating alias lists instead of its own rules.

Maybe we should start a thread with pfBlockerNG configs to see if we can find the configuration root cause of pfBlockerNG's sustained disk writes?

-

@keyser Just as a follow-up: I think I have now tried EVERY possible setting in my pfBlockerNG setup to make it "quiet" on disk writes.

Nothing helps - no lists, no logging enabled anywhere, nothing - and I still have an Unbound process that writes about 380 Kb/s to disk on average if Python mode is enabled.

If I disable Python mode or disable pfBlockerNG in general, Unbound no longer does its sustained disk writing. So what could cause that behavior?

-

@keyser said in Average writes to Disk:

Just as a follow up I think I have now tried EVERY possible setting in my pfBlockerNG setup

Good Morning (here it is),

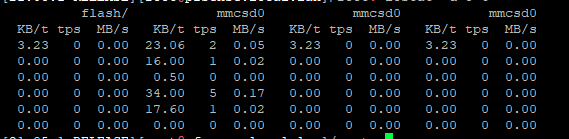

I do not think pfBlockerNG is the culprit (in my case) - I just set up an APU2 machine that ran ZFS yesterday with UFS instead, and ... voilà: the writes are GONE!

iostat -d 5 6

md0 ada0 pass0

KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s

0.00 0 0.00 22.55 13 0.30 0.38 0 0.00

0.00 0 0.00 0.00 0 0.00 0.00 0 0.00

0.00 0 0.00 0.00 0 0.00 0.00 0 0.00

0.00 0 0.00 4.00 0 0.00 0.00 0 0.00

0.00 0 0.00 4.00 0 0.00 0.00 0 0.00

0.00 0 0.00 0.00 0 0.00 0.00 0 0.00

This seems to prove my suspicion from yesterday that ZFS is causing the heavy writes to disk even when no service is enabled on the firewall.

PS: Here is the same machine with the same config, but on ZFS:

iostat -d 5 6

md0 ada0 pass0

KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s

0.00 1 0.00 16.67 41 0.67 0.38 0 0.00

0.00 0 0.00 11.29 26 0.28 0.00 0 0.00

0.00 0 0.00 14.13 19 0.26 0.00 0 0.00

0.00 0 0.00 12.38 21 0.25 0.00 0 0.00

0.00 0 0.00 14.70 19 0.27 0.00 0 0.00

0.00 0 0.00 14.06 21 0.29 0.00 0 0.00

Have a good start to the weekend ...
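The ZFS/UFS difference can be put into numbers the same way: average the ada0 MB/s column of iostat -d, skipping the first report (FreeBSD's iostat(8) averages the first report over the system uptime), and scale it to a day. A minimal awk sketch, assuming the iostat -d layout shown above (a line of device names, then KB/t, tps and MB/s per device); avg_disk_mbps is a hypothetical name:

```sh
# Hypothetical helper: read `iostat -d <wait> <count>` output on stdin and
# print the mean MB/s for one device plus the implied MB per day,
# ignoring the first report (averaged since boot).
avg_disk_mbps() {
    awk -v dev="$1" '
        # The first line names the devices; each device has three
        # columns (KB/t, tps, MB/s), so device i has MB/s in field 3*i.
        !header_seen {
            header_seen = 1
            for (i = 1; i <= NF; i++) if ($i == dev) col = 3 * i
            next
        }
        # Data rows start with a number; header repeats are skipped.
        col && $1 ~ /^[0-9.]+$/ {
            if (++rows == 1) next
            sum += $col
            n++
        }
        END {
            if (n > 0) printf "%.2f MB/s, about %.0f MB/day\n", sum / n, sum / n * 86400
            else print "no samples for " dev
        }
    '
}

# Example: iostat -d 5 6 | avg_disk_mbps ada0
```

For the two APU2 runs in this post, the ZFS samples give 0.27 MB/s (about 23328 MB/day), while the UFS samples give 0.00 MB/s.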

fireodo

-

If I remember correctly, ZFS by default writes every 5 seconds if there is anything to write. It also depends on the type of write operation (synchronous or asynchronous). Because it is easy to restore a copy of the pfSense configuration, I changed the ZFS configuration on my device a little (at the cost of a slightly higher chance of problems after an unclean shutdown).
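The 5-second default and the 180-second value below translate into very different numbers of periodic commits. A trivial sketch of that arithmetic (the helper name is made up); it only counts timer-driven commits, since, as described below, ZFS can also write earlier when there is a lot to write:

```sh
# Hypothetical helper: maximum number of timeout-driven txg commits per day
# for a given vfs.zfs.txg.timeout value in seconds.
txg_timer_commits_per_day() {
    echo $((86400 / $1))
}

txg_timer_commits_per_day 5     # default timeout
txg_timer_commits_per_day 180   # value used below
```

It prints 17280 and 480, i.e. at most 17,280 versus 480 timer-driven commits per day.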

So for my device I changed the following:

- make all writes asynchronous (probably won't help much here, but software doesn't have to wait for the OS to finish writing):

zfs set sync=disabled zroot

- System -> Advanced -> System Tunables: set vfs.zfs.txg.timeout to 180. This increases the time between writes from 5 s to 180 s; during that time all operations happen in RAM, but writes can happen earlier if there is too much to write (the OS can also force an earlier write - I'm not an expert, so I don't know all the conditions). For me a write happens once or twice during those 3 minutes.

- because there is a bigger chance of failure with 180 s, I made ZFS write everything twice (I have a 100GB SSD, so that is not a problem); this won't guarantee data safety, but it still helps (it only applies to newly written data - existing data won't be touched):

zfs set copies=2 zroot

That works for me. Please read up on it before changing anything, or at least have a configuration backup before you try.
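Following that advice, a sketch for noting the current values before changing anything and for returning to the defaults later (sync=standard, copies=1 and vfs.zfs.txg.timeout=5). It assumes the pool is called zroot as in this thread; the sysctl call only changes the running value, so an entry added under System Tunables would also have to be removed:

```sh
# Record the current settings first
zfs get sync,copies zroot
sysctl vfs.zfs.txg.timeout

# Revert to the defaults later if needed
zfs set sync=standard zroot
zfs set copies=1 zroot
sysctl vfs.zfs.txg.timeout=5
```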

-

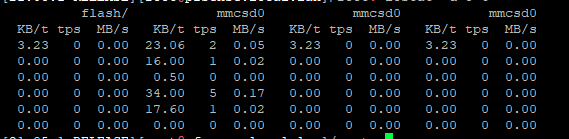

I also use ZFS, but with RAM disks. This should massively reduce the writes to the SSD, right?

KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s KB/t tps MB/s

0.00 1 0.00 0.00 1 0.00 13.97 1 0.01 0.00 0 0.00

0.00 0 0.00 0.00 0 0.00 0.00 0 0.00 0.00 0 0.00

0.00 0 0.00 0.00 0 0.00 0.00 0 0.00 0.00 0 0.00

0.00 0 0.00 0.00 12 0.00 7.86 23 0.18 0.00 0 0.00

0.00 2 0.00 0.00 2 0.00 0.00 0 0.00 0.00 0 0.00

0.00 3 0.00 0.00 0 0.00 0.00 0 0.00 0.00 0 0.00