High memory usage on Hyper-V compared to vSphere

-

Hi,

I transferred part of my infrastructure from vSphere to Hyper-V on Windows Server 2019.

The same VM (pfSense 2.4.4-p3, 512 MB RAM) shows about 30% memory usage on vSphere and about 85% on Hyper-V (according to the dashboard).

I thought there was a problem with the imported configuration, so I tried a fresh install. Even on a fresh install (without any packages or configuration), memory usage on Hyper-V is still about 55%, and it rises to 85% with RADIUS and ssltunnel.

vSphere VM top:

54 processes: 1 running, 53 sleeping

CPU: 0.7% user, 0.0% nice, 2.4% system, 0.8% interrupt, 96.1% idle

Mem: 18M Active, 192M Inact, 126M Wired, 39M Buf, 114M Free

Swap: 512M Total, 512M Free

Hyper-V VM top:

54 processes: 1 running, 53 sleeping

CPU: 0.0% user, 0.0% nice, 0.0% system, 0.0% interrupt, 100% idle

Mem: 49M Active, 4224K Inact, 48M Laundry, 296M Wired, 25M Buf, 53M Free

Swap: 824M Total, 7540K Used, 816M Free

vSphere VM state table size: 4% (2045/46000)

vSphere VM MBUF usage: 17% (4560/26584)

Hyper-V VM state table size: 0% (25/46000)

Hyper-V VM MBUF usage: 0% (508/1000000)

The vSphere VM is a 2.x install that has been upgraded over time, while the Hyper-V VM is a fresh 2.4.4-p3 install.

It seems the MBUF default is no longer 26584 but 1000000. I thought the MBUF limit was the cause, so I tried setting it back to 26584. I set kern.ipc.nmbclusters in System Tunables and rebooted, but the MBUF limit stayed the same, so I had to set it in /boot/loader.conf.local for it to take effect.

Unfortunately, this changed nothing: the amount of memory used is exactly the same. The main point is that on vSphere memory usage was fine, stable around 30% with packages and connections, with little wired memory and no swap at all; now it is at 85% RAM with swap in use.
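To see where the two dashboard percentages come from, the sketch below recomputes them from the two `top` outputs above. It assumes the dashboard counts memory as used = total - (inactive + cache + free), which is my understanding of how pfSense 2.4 calculates it, and it uses the `top` columns instead of the exact `vm.stats.vm.*` page counters; treat both as assumptions. The dictionary names and the `dashboard_usage` helper are hypothetical.

```python
# Figures in MB, copied from the two `top` outputs above (4224K Inact = 4.125 MB).
# Buf is left out because it overlaps the other columns rather than adding to them.
VSPHERE = {"active": 18, "inact": 192, "wired": 126, "free": 114}
HYPERV = {"active": 49, "inact": 4.125, "laundry": 48, "wired": 296, "free": 53}


def dashboard_usage(mem: dict) -> float:
    """Percent 'used' under the ASSUMED formula: total - (inactive + cache + free)."""
    total = sum(mem.values())
    reclaimable = mem.get("inact", 0) + mem.get("cache", 0) + mem.get("free", 0)
    return 100 * (total - reclaimable) / total


for name, mem in (("vSphere", VSPHERE), ("Hyper-V", HYPERV)):
    print(f"{name}: total {sum(mem.values()):.0f} MB, used {dashboard_usage(mem):.0f}%")
```

It prints 32% for vSphere and 87% for Hyper-V, close to the roughly 30% and 85% on the two dashboards. Both VMs show about 450 MB in total, so under this formula the gap comes from the Hyper-V VM holding far more Wired memory (296M vs 126M) and almost no Inact memory.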

The jumbo cluster limits also seem to have been raised to higher values:

[2.4.4-RELEASE][root@HYPERV]/root: netstat -m

2/28/30 mbufs in use (current/cache/total)

0/508/508/26584 mbuf clusters in use (current/cache/total/max)

0/0 mbuf+clusters out of packet secondary zone in use (current/cache)

0/0/0/524288 4k (page size) jumbo clusters in use (current/cache/total/max)

0/0/0/524288 9k jumbo clusters in use (current/cache/total/max)

0/0/0/2397 16k jumbo clusters in use (current/cache/total/max)

0K/1023K/1023K bytes allocated to network (current/cache/total)

0/0/0 requests for mbufs denied (mbufs/clusters/mbuf+clusters)

0/0/0 requests for mbufs delayed (mbufs/clusters/mbuf+clusters)

0/0/0 requests for jumbo clusters delayed (4k/9k/16k)

0/0/0 requests for jumbo clusters denied (4k/9k/16k)

0 sendfile syscalls

0 sendfile syscalls completed without I/O request

0 requests for I/O initiated by sendfile

0 pages read by sendfile as part of a request

0 pages were valid at time of a sendfile request

0 pages were requested for read ahead by applications

0 pages were read ahead by sendfile

0 times sendfile encountered an already busy page

0 requests for sfbufs denied

0 requests for sfbufs delayed

[2.4.4-RELEASE][root@VSPHERE]/root: netstat -m

3126/3459/6585 mbufs in use (current/cache/total)

2714/1846/4560/26584 mbuf clusters in use (current/cache/total/max)

2714/1840 mbuf+clusters out of packet secondary zone in use (current/cache)

0/223/223/13291 4k (page size) jumbo clusters in use (current/cache/total/max)

0/0/0/3938 9k jumbo clusters in use (current/cache/total/max)

0/0/0/2215 16k jumbo clusters in use (current/cache/total/max)

6209K/5448K/11658K bytes allocated to network (current/cache/total)

0/0/0 requests for mbufs denied (mbufs/clusters/mbuf+clusters)

0/0/0 requests for mbufs delayed (mbufs/clusters/mbuf+clusters)

0/0/0 requests for jumbo clusters delayed (4k/9k/16k)

0/0/0 requests for jumbo clusters denied (4k/9k/16k)

0 sendfile syscalls

0 sendfile syscalls completed without I/O request

0 requests for I/O initiated by sendfile

0 pages read by sendfile as part of a request

0 pages were valid at time of a sendfile request

0 pages were requested for read ahead by applications

0 pages were read ahead by sendfile

0 times sendfile encountered an already busy page

0 requests for sfbufs denied

0 requests for sfbufs delayed

I tuned them to match the vSphere VM:

kern.ipc.nmbclusters="26584"

kern.ipc.nmbjumbop="13291"

kern.ipc.nmbjumbo9="3938"

kern.ipc.nmbjumbo16="2215"

but memory usage did not change. This is very strange: since nmbclusters allocates memory, I would expect the memory in use to change when I change the nmbclusters value, not to stay exactly the same.
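One way to check how much memory the mbuf zones actually hold is to recompute the "bytes allocated to network" line from the `netstat -m` counters above. This sketch assumes the usual FreeBSD amd64 sizes of 256 bytes per mbuf, 2 KB per standard cluster and 4 KB per page-size jumbo cluster; the 9k and 16k jumbo counts are zero in both outputs, so they are left out. Only the *total* column is used; the *max* column (the `kern.ipc.nmb*` limit) plays no part. The names `COUNTS`, `network_kbytes` and `LIMIT_CEILING_MB` are hypothetical.

```python
# FreeBSD amd64 object sizes (assumed defaults for MSIZE, MCLBYTES, MJUMPAGESIZE).
MSIZE = 256          # bytes per mbuf
MCLBYTES = 2048      # bytes per standard mbuf cluster
MJUMPAGESIZE = 4096  # bytes per 4k (page size) jumbo cluster

# "total" column of each `netstat -m` output above; the "max" limits are not used.
COUNTS = {
    "Hyper-V": {"mbufs": 30, "clusters": 508, "jumbo4k": 0},
    "vSphere": {"mbufs": 6585, "clusters": 4560, "jumbo4k": 223},
}


def network_kbytes(c: dict) -> int:
    """KB held by the mbuf zones, rounded down (this matches the netstat figures)."""
    total = c["mbufs"] * MSIZE + c["clusters"] * MCLBYTES + c["jumbo4k"] * MJUMPAGESIZE
    return total // 1024


# What a 1000000-cluster limit would cost if it were reserved up front.
LIMIT_CEILING_MB = 1_000_000 * MCLBYTES // (1024 * 1024)

for name, c in COUNTS.items():
    print(f"{name}: {network_kbytes(c)}K allocated to network")
print(f"1000000 clusters x 2 KB would be {LIMIT_CEILING_MB} MB")
```

It prints 1023K for Hyper-V and 11658K for vSphere, matching the two `netstat -m` outputs, and 1953 MB for the 1000000-cluster limit, several times the VM's RAM. That suggests the limits are only ceilings and the Hyper-V VM holds about 1 MB in mbufs, which would explain why lowering `kern.ipc.nmbclusters` did not move memory usage.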

The same problem occurs with a clean OPNsense installation (226 MB of RAM in use on vSphere vs 340 MB on Hyper-V), and the load average on Hyper-V is also more than double that on VMware.

Any help?

Thanks

-

Can you really not allocate even 1 GB of RAM to pfSense? I have 1.5 GB on mine running on 2012 R2 (with no tuning).

-

@provels, hello.

For me, the question is not whether pfSense can run with less than 1 GB of RAM, because the answer is already yes: in the original VM, pfSense runs at 30% with 512 MB of RAM. Most of my pfSense instances have 512 MB unless more is required.

I would like to stay focused on the specific problem:

- Full installation on vSphere, with RADIUS and ssltunnel: uses about 32% of 512 MB and no swap.

- Full installation on Hyper-V, with RADIUS and ssltunnel: uses about 85% of 512 MB and some swap.

- Modifying the MBUF limits did not change RAM usage by a single MB.

I tried with both a copied machine and a new installation; nothing changed.

-

@m4rv1n I meant not less: 1 GB at least, or more. Be a trailblazer, then. Figure it out. ;) Good luck!

-

@provels, I will certainly keep trying to find out what the problem is, especially for environments where you have to think about system sizing and RAM is not free, and keeping in mind that hundreds of systems run perfectly with the same size and configuration at under 35%.

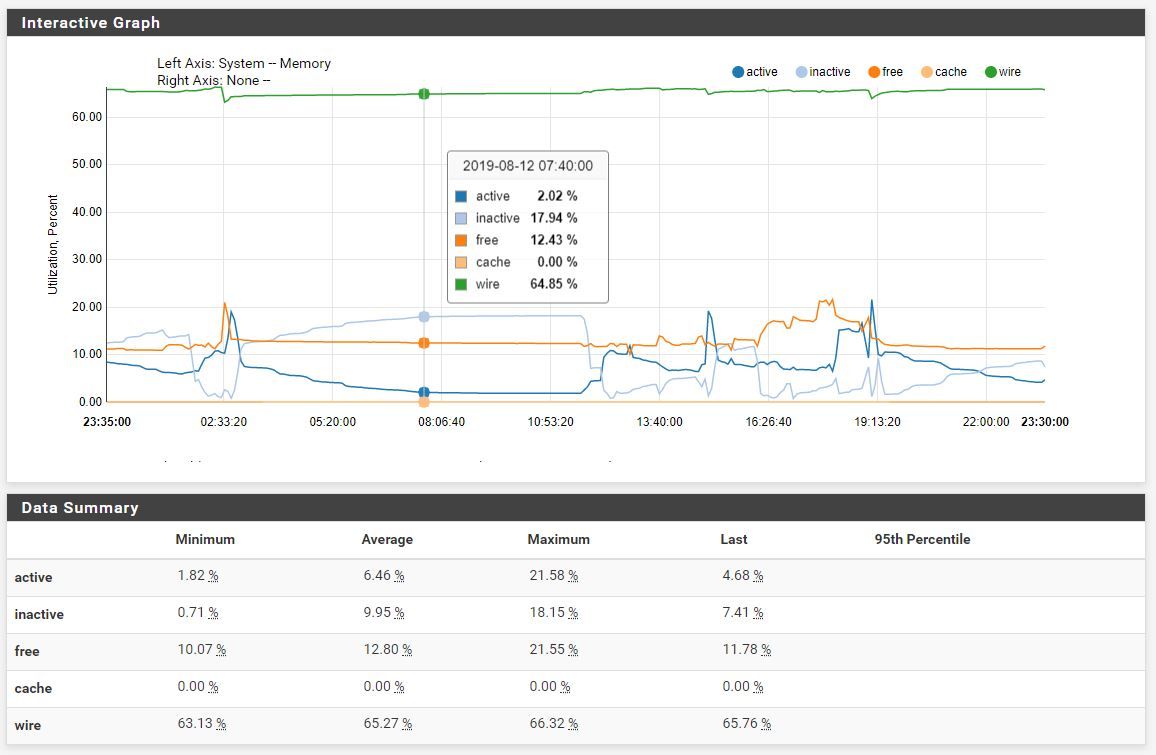

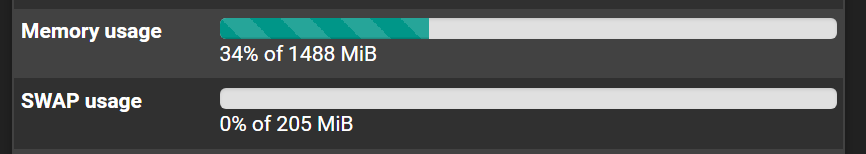

One more thing: if one day you move to vSphere and your pfSense sits at 85% with the same configuration that currently runs at 34%, I will try to help you too.

If you have any news or ideas, whether from knowledge of pfSense/FreeBSD or just from some tests (for example, a clean installation of pfSense with 512 MB in your 2012 R2 environment), you are and will be welcome, if only to share knowledge. Thanks for the good luck; I will combine it with heavy testing, and if I have news I will update this thread. The final aim is to help as many people as we can.

-

Same NIC type in both?

-

@stephenw10, hello.

They are different NIC types, since one VM is on the vSphere hypervisor and the other is on Hyper-V.

vSphere (1 Gb):

em0: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2000-0x203f mem 0xfd560000-0xfd57ffff,0xfdff0000-0xfdffffff irq 18 at device 0.0 on pci2

Hyper-V (10 Gb):

hn0: <Hyper-V Network Interface> on vmbus0

I also tried a generation 1 VM on Hyper-V, and nothing changed.

Right now the Hyper-V VM is doing nothing: no traffic, no states, no VPN.

@stephenw10 Note also that changing the mbuf cluster limits did not change RAM usage at all:

- mbuf clusters: 1000000 -> 26584

- 4k (page size) jumbo clusters: 524288 -> 13291

- 9k jumbo clusters: 524288 -> 3938

- 16k jumbo clusters: 2397 -> 2215

-

Same RAM usage using legacy NICs in Hyper-V?

-

With a generation 1 VM and the legacy adapter, it has connection problems most of the time.

When I do manage to connect with the legacy adapter, memory usage is about 43% on a clean install, compared to 55-58% with the standard network adapter.

-

Hmm, did you try a clean install in VMware to compare directly? It could be some other legacy setting carried over from an earlier version. I would certainly expect it to run fine in 512 MB if you don't add packages etc.

Steve

-

Yes, a clean install without additional packages stays under 30% memory usage in the VMware environment. I also tried disabling all integration services in the Hyper-V configuration of the VM, but nothing changed.

-

Hmm, then it must somehow come down to what it's emulating. This is the first time I've seen anyone ask about this, though.

Try comparing boot logs, what's different?

Steve
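A minimal sketch of one way to compare the two boot logs: it strips the date, time and hostname from each syslog line and lists the messages that appear in only one log. It assumes each log has been saved as plain text with one entry per line, in the `Mon D HH:MM:SS host process: message` format used in this thread; the script name, file names and the `normalize`/`diff_boot_logs` helpers are hypothetical. Messages that differ only in addresses or counters will still show up as differences.

```python
import re
import sys

# Matches the syslog prefix used in this thread, e.g. "Sep 8 22:23:53 FWAZ01 ".
PREFIX = re.compile(r"^[A-Z][a-z]{2}\s+\d{1,2} \d{2}:\d{2}:\d{2} \S+ ")


def normalize(line: str) -> str:
    """Drop the date/time/hostname prefix so logs from different hosts compare."""
    return PREFIX.sub("", line.strip())


def diff_boot_logs(a_lines, b_lines):
    """Return (only_in_a, only_in_b) as sorted lists of normalized messages."""
    a = {normalize(line) for line in a_lines if line.strip()}
    b = {normalize(line) for line in b_lines if line.strip()}
    return sorted(a - b), sorted(b - a)


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage (hypothetical file names): python diff_boot.py hyperv.log vmware.log
    with open(sys.argv[1]) as fa, open(sys.argv[2]) as fb:
        only_a, only_b = diff_boot_logs(fa, fb)
    for msg in only_a:
        print("<", msg)
    for msg in only_b:
        print(">", msg)
```

A set difference ignores ordering and duplicates, which keeps the output short; if boot order matters, `difflib.unified_diff` over the normalized lines would be the alternative.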

-

This is the system.log at boot for the VM in Hyper-V:

Sep 8 22:23:53 FWAZ01 syslogd: kernel boot file is /boot/kernel/kernel

Sep 8 22:23:53 FWAZ01 kernel: Copyright (c) 1992-2018 The FreeBSD Project.

Sep 8 22:23:53 FWAZ01 kernel: Copyright (c) 1979, 1980, 1983, 1986, 1988, 1989, 1991, 1992, 1993, 1994

Sep 8 22:23:53 FWAZ01 kernel: The Regents of the University of California. All rights reserved.

Sep 8 22:23:53 FWAZ01 kernel: FreeBSD is a registered trademark of The FreeBSD Foundation.

Sep 8 22:23:53 FWAZ01 kernel: FreeBSD 11.2-RELEASE-p10 #9 4a2bfdce133(RELENG_2_4_4): Wed May 15 18:54:42 EDT 2019

Sep 8 22:23:53 FWAZ01 kernel: root@buildbot1-nyi.netgate.com:/build/ce-crossbuild-244/obj/amd64/ZfGpH5cd/build/ce-crossbuild-244/pfSense/tmp/FreeBSD-src/sys/pfSense amd64

Sep 8 22:23:53 FWAZ01 kernel: FreeBSD clang version 6.0.0 (tags/RELEASE_600/final 326565) (based on LLVM 6.0.0)

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x24000000

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x100000000

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x1000000000

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x10000000000

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x20000000000

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x40000000000

Sep 8 22:23:53 FWAZ01 kernel: SRAT: Ignoring memory at addr 0x80000000000

Sep 8 22:23:53 FWAZ01 kernel: VT(efifb): resolution 1024x768

Sep 8 22:23:53 FWAZ01 kernel: Hyper-V Version: 10.0.17763 [SP0]

Sep 8 22:23:53 FWAZ01 kernel: Features=0x2e7f<VPRUNTIME,TMREFCNT,SYNIC,SYNTM,APIC,HYPERCALL,VPINDEX,REFTSC,IDLE,TMFREQ>

Sep 8 22:23:53 FWAZ01 kernel: PM Features=0x0 [C2]

Sep 8 22:23:53 FWAZ01 kernel: Features3=0xbed7b2<DEBUG,XMMHC,IDLE,NUMA,TMFREQ,SYNCMC,CRASH,NPIEP>

Sep 8 22:23:53 FWAZ01 kernel: Timecounter "Hyper-V" frequency 10000000 Hz quality 2000

Sep 8 22:23:53 FWAZ01 kernel: CPU: Intel(R) Xeon(R) CPU E5-2673 v4 @ 2.30GHz (2305.50-MHz K8-class CPU)

Sep 8 22:23:53 FWAZ01 kernel: Origin="GenuineIntel" Id=0x406f1 Family=0x6 Model=0x4f Stepping=1

Sep 8 22:23:53 FWAZ01 kernel: Features=0x1f83fbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE,MCA,CMOV,PAT,PSE36,MMX,FXSR,SSE,SSE2,SS,HTT>

Sep 8 22:23:53 FWAZ01 kernel: Features2=0xfeda3203<SSE3,PCLMULQDQ,SSSE3,FMA,CX16,PCID,SSE4.1,SSE4.2,MOVBE,POPCNT,AESNI,XSAVE,OSXSAVE,AVX,F16C,RDRAND,HV>

Sep 8 22:23:53 FWAZ01 kernel: AMD Features=0x2c100800<SYSCALL,NX,Page1GB,RDTSCP,LM>

Sep 8 22:23:53 FWAZ01 kernel: AMD Features2=0x121<LAHF,ABM,Prefetch>

Sep 8 22:23:53 FWAZ01 kernel: Structured Extended Features=0x1c2fb9<FSGSBASE,BMI1,HLE,AVX2,SMEP,BMI2,ERMS,INVPCID,RTM,NFPUSG,RDSEED,ADX,SMAP>

Sep 8 22:23:53 FWAZ01 kernel: Structured Extended Features3=0x20000000<ARCH_CAP>

Sep 8 22:23:53 FWAZ01 kernel: XSAVE Features=0x1<XSAVEOPT>

Sep 8 22:23:53 FWAZ01 kernel: IA32_ARCH_CAPS=0x4

Sep 8 22:23:53 FWAZ01 kernel: Hypervisor: Origin = "Microsoft Hv"

Sep 8 22:23:53 FWAZ01 kernel: real memory = 603979776 (576 MB)

Sep 8 22:23:53 FWAZ01 kernel: avail memory = 522960896 (498 MB)

Sep 8 22:23:53 FWAZ01 kernel: Event timer "LAPIC" quality 100

Sep 8 22:23:53 FWAZ01 kernel: ACPI APIC Table: <VRTUAL MICROSFT>

Sep 8 22:23:53 FWAZ01 kernel: FreeBSD/SMP: Multiprocessor System Detected: 2 CPUs

Sep 8 22:23:53 FWAZ01 kernel: FreeBSD/SMP: 1 package(s) x 2 hardware threads

Sep 8 22:23:53 FWAZ01 kernel: ioapic0 <Version 1.1> irqs 0-23 on motherboard

Sep 8 22:23:53 FWAZ01 kernel: SMP: AP CPU #1 Launched!

Sep 8 22:23:53 FWAZ01 kernel: Timecounter "Hyper-V-TSC" frequency 10000000 Hz quality 3000

Sep 8 22:23:53 FWAZ01 kernel: ipw_bss: You need to read the LICENSE file in /usr/share/doc/legal/intel_ipw.LICENSE.

Sep 8 22:23:53 FWAZ01 kernel: ipw_bss: If you agree with the license, set legal.intel_ipw.license_ack=1 in /boot/loader.conf.

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (ipw_bss_fw, 0xffffffff80681430, 0) error 1

Sep 8 22:23:53 FWAZ01 kernel: random: entropy device external interface

Sep 8 22:23:53 FWAZ01 kernel: ipw_ibss: You need to read the LICENSE file in /usr/share/doc/legal/intel_ipw.LICENSE.

Sep 8 22:23:53 FWAZ01 kernel: ipw_ibss: If you agree with the license, set legal.intel_ipw.license_ack=1 in /boot/loader.conf.

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (ipw_ibss_fw, 0xffffffff806814e0, 0) error 1

Sep 8 22:23:53 FWAZ01 kernel: ipw_monitor: You need to read the LICENSE file in /usr/share/doc/legal/intel_ipw.LICENSE.

Sep 8 22:23:53 FWAZ01 kernel: ipw_monitor: If you agree with the license, set legal.intel_ipw.license_ack=1 in /boot/loader.conf.

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (ipw_monitor_fw, 0xffffffff80681590, 0) error 1

Sep 8 22:23:53 FWAZ01 kernel: iwi_bss: You need to read the LICENSE file in /usr/share/doc/legal/intel_iwi.LICENSE.

Sep 8 22:23:53 FWAZ01 kernel: iwi_bss: If you agree with the license, set legal.intel_iwi.license_ack=1 in /boot/loader.conf.

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (iwi_bss_fw, 0xffffffff806a8460, 0) error 1

Sep 8 22:23:53 FWAZ01 kernel: iwi_ibss: You need to read the LICENSE file in /usr/share/doc/legal/intel_iwi.LICENSE.

Sep 8 22:23:53 FWAZ01 kernel: iwi_ibss: If you agree with the license, set legal.intel_iwi.license_ack=1 in /boot/loader.conf.

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (iwi_ibss_fw, 0xffffffff806a8510, 0) error 1

Sep 8 22:23:53 FWAZ01 kernel: iwi_monitor: You need to read the LICENSE file in /usr/share/doc/legal/intel_iwi.LICENSE.

Sep 8 22:23:53 FWAZ01 kernel: iwi_monitor: If you agree with the license, set legal.intel_iwi.license_ack=1 in /boot/loader.conf.

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (iwi_monitor_fw, 0xffffffff806a85c0, 0) error 1

Sep 8 22:23:53 FWAZ01 kernel: wlan: mac acl policy registered

Sep 8 22:23:53 FWAZ01 kernel: kbd0 at kbdmux0

Sep 8 22:23:53 FWAZ01 kernel: netmap: loaded module

Sep 8 22:23:53 FWAZ01 kernel: module_register_init: MOD_LOAD (vesa, 0xffffffff8120aaa0, 0) error 19

Sep 8 22:23:53 FWAZ01 kernel: random: registering fast source Intel Secure Key RNG

Sep 8 22:23:53 FWAZ01 kernel: random: fast provider: "Intel Secure Key RNG"

Sep 8 22:23:53 FWAZ01 kernel: nexus0

Sep 8 22:23:53 FWAZ01 kernel: cryptosoft0: <software crypto> on motherboard

Sep 8 22:23:53 FWAZ01 kernel: padlock0: No ACE support.

Sep 8 22:23:53 FWAZ01 kernel: acpi0: <VRTUAL MICROSFT> on motherboard

Sep 8 22:23:53 FWAZ01 kernel: atrtc0: <AT realtime clock> port 0x70-0x71 irq 8 on acpi0

Sep 8 22:23:53 FWAZ01 kernel: atrtc0: registered as a time-of-day clock, resolution 1.000000s

Sep 8 22:23:53 FWAZ01 kernel: Event timer "RTC" frequency 32768 Hz quality 0

Sep 8 22:23:53 FWAZ01 kernel: Timecounter "ACPI-fast" frequency 3579545 Hz quality 900

Sep 8 22:23:53 FWAZ01 kernel: acpi_timer0: <32-bit timer at 3.579545MHz> port 0x408-0x40b on acpi0

Sep 8 22:23:53 FWAZ01 kernel: acpi_syscontainer0: <System Container> on acpi0

Sep 8 22:23:53 FWAZ01 kernel: vmbus0: <Hyper-V Vmbus> on acpi_syscontainer0

Sep 8 22:23:53 FWAZ01 kernel: vmbus_res0: <Hyper-V Vmbus Resource> irq 5 on acpi0

Sep 8 22:23:53 FWAZ01 kernel: Timecounters tick every 10.000 msec

Sep 8 22:23:53 FWAZ01 kernel: vmbus0: version 3.0

Sep 8 22:23:53 FWAZ01 kernel: hvet0: <Hyper-V event timer> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: Event timer "Hyper-V" frequency 10000000 Hz quality 1000

Sep 8 22:23:53 FWAZ01 kernel: hvkbd0: <Hyper-V KBD> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hvheartbeat0: <Hyper-V Heartbeat> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hvkvp0: <Hyper-V KVP> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hvshutdown0: <Hyper-V Shutdown> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hvtimesync0: <Hyper-V Timesync> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hvtimesync0: RTT

Sep 8 22:23:53 FWAZ01 kernel: hvvss0: <Hyper-V VSS> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hn0: <Hyper-V Network Interface> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hn0: Ethernet address: 00:0d:3a:79:09:3b

Sep 8 22:23:53 FWAZ01 kernel: hn0: link state changed to UP

Sep 8 22:23:53 FWAZ01 kernel: storvsc0: <Hyper-V SCSI> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hn1: <Hyper-V Network Interface> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hn1: Ethernet address: 00:15:5d:00:04:01

Sep 8 22:23:53 FWAZ01 kernel: hn2: <Hyper-V Network Interface> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hn1: link state changed to UP

Sep 8 22:23:53 FWAZ01 kernel: hn2: Ethernet address: 00:15:5d:00:04:02

Sep 8 22:23:53 FWAZ01 kernel: hn2: link state changed to UP

Sep 8 22:23:53 FWAZ01 kernel: hn3: <Hyper-V Network Interface> on vmbus0

Sep 8 22:23:53 FWAZ01 kernel: hn3: Ethernet address: 00:15:5d:00:04:18

Sep 8 22:23:53 FWAZ01 kernel: hn3: link state changed to UP

Sep 8 22:23:53 FWAZ01 kernel: Trying to mount root from ufs:/dev/gptid/2bd76852-a445-11e9-b481-00155d000400 [rw]...

Sep 8 22:23:53 FWAZ01 kernel: cd0 at storvsc0 bus 0 scbus0 target 0 lun 1

Sep 8 22:23:53 FWAZ01 kernel: cd0: <Msft Virtual DVD-ROM 1.0> Removable CD-ROM SPC-3 SCSI device

Sep 8 22:23:53 FWAZ01 kernel: cd0: 300.000MB/s transfers

Sep 8 22:23:53 FWAZ01 kernel: cd0: Attempt to query device size failed: NOT READY, Medium not present - tray closed

Sep 8 22:23:53 FWAZ01 kernel: da0 at storvsc0 bus 0 scbus0 target 0 lun 0

Sep 8 22:23:53 FWAZ01 kernel: da0: <Msft Virtual Disk 1.0> Fixed Direct Access SPC-3 SCSI device

Sep 8 22:23:53 FWAZ01 kernel: da0: 300.000MB/s transfers

Sep 8 22:23:53 FWAZ01 kernel: da0: Command Queueing enabled

Sep 8 22:23:53 FWAZ01 kernel: da0: 3072MB (6291456 512 byte sectors)

Sep 8 22:23:53 FWAZ01 kernel: WARNING: / was not properly dismounted

Sep 8 22:23:53 FWAZ01 kernel: WARNING: /: mount pending error: blocks 16 files 0

Sep 8 22:23:53 FWAZ01 kernel: random: unblocking device.

Sep 8 22:23:53 FWAZ01 kernel: CPU: Intel(R) Xeon(R) CPU E5-2673 v4 @ 2.30GHz (2305.50-MHz K8-class CPU)

Sep 8 22:23:53 FWAZ01 kernel: Origin="GenuineIntel" Id=0x406f1 Family=0x6 Model=0x4f Stepping=1

Sep 8 22:23:53 FWAZ01 kernel: Features=0x1f8bfbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE,MCA,CMOV,PAT,PSE36,CLFLUSH,MMX,FXSR,SSE,SSE2,SS,HTT>

Sep 8 22:23:53 FWAZ01 kernel: Features2=0xfeda3203<SSE3,PCLMULQDQ,SSSE3,FMA,CX16,PCID,SSE4.1,SSE4.2,MOVBE,POPCNT,AESNI,XSAVE,OSXSAVE,AVX,F16C,RDRAND,HV>

Sep 8 22:23:53 FWAZ01 kernel: AMD Features=0x2c100800<SYSCALL,NX,Page1GB,RDTSCP,LM>

Sep 8 22:23:53 FWAZ01 kernel: AMD Features2=0x121<LAHF,ABM,Prefetch>

Sep 8 22:23:53 FWAZ01 kernel: Structured Extended Features=0x1c2fb9<FSGSBASE,BMI1,HLE,AVX2,SMEP,BMI2,ERMS,INVPCID,RTM,NFPUSG,RDSEED,ADX,SMAP>

Sep 8 22:23:53 FWAZ01 kernel: Structured Extended Features3=0x20000000<ARCH_CAP>

Sep 8 22:23:53 FWAZ01 kernel: XSAVE Features=0x1<XSAVEOPT>

Sep 8 22:23:53 FWAZ01 kernel: IA32_ARCH_CAPS=0x4

Sep 8 22:23:53 FWAZ01 kernel: Hypervisor: Origin = "Microsoft Hv"

Sep 8 22:23:53 FWAZ01 kernel: padlock0: No ACE support.

Sep 8 22:23:53 FWAZ01 kernel: aesni0: <AES-CBC,AES-XTS,AES-GCM,AES-ICM> on motherboard

Sep 8 22:23:53 FWAZ01 kernel: tun1: changing name to 'ovpns1'

Sep 8 22:23:52 FWAZ01 php-cgi: rc.bootup: Resyncing OpenVPN instances.

Sep 8 22:23:53 FWAZ01 kernel: tun2: changing name to 'ovpns2'

Sep 8 22:23:53 FWAZ01 kernel: ovpns1: link state changed to UP

Sep 8 22:23:53 FWAZ01 check_reload_status: rc.newwanip starting ovpns1

Sep 8 22:23:53 FWAZ01 kernel: tun3: changing name to 'ovpns3'

Sep 8 22:23:53 FWAZ01 kernel: ovpns2: link state changed to UP

Sep 8 22:23:53 FWAZ01 check_reload_status: rc.newwanip starting ovpns2

Sep 8 22:23:53 FWAZ01 kernel: ovpns3: link state changed to UP

Sep 8 22:23:53 FWAZ01 kernel: tun5: changing name to 'ovpns5'

Sep 8 22:23:53 FWAZ01 check_reload_status: rc.newwanip starting ovpns3

Sep 8 22:23:54 FWAZ01 kernel: ovpns5: link state changed to UP

Sep 8 22:23:54 FWAZ01 check_reload_status: rc.newwanip starting ovpns5

Sep 8 22:23:54 FWAZ01 kernel: pflog0: promiscuous mode enabled

Sep 8 22:23:54 FWAZ01 sshd[8755]: Server listening on :: port 22.

Sep 8 22:23:54 FWAZ01 kernel:

Sep 8 22:23:54 FWAZ01 kernel: DUMMYNET 0 with IPv6 initialized (100409)

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched FIFO loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched QFQ loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched RR loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched WF2Q+ loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched PRIO loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched FQ_CODEL loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_sched dn_sched FQ_PIE loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_aqm dn_aqm CODEL loaded

Sep 8 22:23:54 FWAZ01 kernel: load_dn_aqm dn_aqm PIE loaded

Sep 8 22:23:54 FWAZ01 sshd[8755]: Server listening on 0.0.0.0 port 22.

Sep 8 22:23:54 FWAZ01 kernel: .done.

Sep 8 22:23:54 FWAZ01 php-cgi: rc.bootup: Default gateway setting as default.

Sep 8 22:23:54 FWAZ01 kernel: done.

Sep 8 22:23:54 FWAZ01 php-cgi: rc.bootup: Gateway, none 'available' for inet6, use the first one configured. ''

Sep 8 22:23:54 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: Info: starting on ovpns1.

Sep 8 22:23:54 FWAZ01 kernel: done.

Sep 8 22:23:54 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns1).

Sep 8 22:23:54 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: Info: starting on ovpns2.

Sep 8 22:23:54 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns2).

Sep 8 22:23:54 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: Info: starting on ovpns3.

Sep 8 22:23:54 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns3).

Sep 8 22:23:55 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: Info: starting on ovpns5.

Sep 8 22:23:55 FWAZ01 php-fpm[338]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns5).

Sep 8 22:23:56 FWAZ01 kernel: done.

Sep 8 22:23:58 FWAZ01 kernel: done.

Sep 8 22:23:58 FWAZ01 kernel: done.

Sep 8 22:23:58 FWAZ01 php-cgi: rc.bootup: NTPD is starting up.

Sep 8 22:23:58 FWAZ01 kernel: done.

Sep 8 22:23:58 FWAZ01 check_reload_status: Updating all dyndns

Sep 8 22:23:59 FWAZ01 kernel: .

Sep 8 22:23:59 FWAZ01 kernel: ....done.

Sep 8 22:24:05 FWAZ01 php-cgi: rc.bootup: Creating rrd update script

Sep 8 22:24:05 FWAZ01 kernel: done.

Sep 8 22:24:05 FWAZ01 syslogd: exiting on signal 15

Sep 8 22:24:06 FWAZ01 syslogd: kernel boot file is /boot/kernel/kernel

Sep 8 22:24:06 FWAZ01 kernel: done.

Sep 8 22:24:06 FWAZ01 php-fpm[339]: /rc.start_packages: Restarting/Starting all packages.

Sep 8 22:24:07 FWAZ01 radiusd[29114]: Debugger not attached

Sep 8 22:24:08 FWAZ01 radiusd[63061]: [/usr/local/etc/raddb/mods-config/attr_filter/access_reject]:11 Check item "FreeRADIUS-Response-Delay" found in filter list for realm "DEFAULT".

Sep 8 22:24:08 FWAZ01 radiusd[63061]: [/usr/local/etc/raddb/mods-config/attr_filter/access_reject]:11 Check item "FreeRADIUS-Response-Delay-USec" found in filter list for realm "DEFAULT".

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Loaded virtual server <default>

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Loaded virtual server default

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Ignoring "sql" (see raddb/mods-available/README.rst)

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Ignoring "ldap" (see raddb/mods-available/README.rst)

Sep 8 22:24:08 FWAZ01 radiusd[63061]: # Skipping contents of 'if' as it is always 'false' -- /usr/local/etc/raddb/sites-enabled/inner-tunnel-ttls:63

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Loaded virtual server inner-tunnel-ttls

Sep 8 22:24:08 FWAZ01 radiusd[63061]: # Skipping contents of 'if' as it is always 'false' -- /usr/local/etc/raddb/sites-enabled/inner-tunnel-peap:63

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Loaded virtual server inner-tunnel-peap

Sep 8 22:24:08 FWAZ01 radiusd[63061]: Ready to process requests

-

So are you seeing this only in Azure, or in locally hosted Hyper-V VMs as well?

Steve

-

This is the log from the VMware VM:

Sep 10 03:14:21 fwlan syslogd: kernel boot file is /boot/kernel/kernel

Sep 10 03:14:21 fwlan kernel: pflog0: promiscuous mode disabled

Sep 10 03:14:21 fwlan kernel: ovpns1: link state changed to DOWN

Sep 10 03:14:21 fwlan kernel: ovpns3: link state changed to DOWN

Sep 10 03:14:21 fwlan kernel: ovpns2: link state changed to DOWN

Sep 10 03:14:21 fwlan kernel: ovpns5: link state changed to DOWN

Sep 10 03:14:21 fwlan kernel: Waiting (max 60 seconds) for system process `vnlru' to stop... done

Sep 10 03:14:21 fwlan kernel: Waiting (max 60 seconds) for system process `bufdaemon' to stop... done

Sep 10 03:14:21 fwlan kernel: Waiting (max 60 seconds) for system process `syncer' to stop...

Sep 10 03:14:21 fwlan kernel: Syncing disks, vnodes remaining... 0 0 done

Sep 10 03:14:21 fwlan kernel: All buffers synced.

Sep 10 03:14:21 fwlan kernel: Uptime: 62d2h23m45s

Sep 10 03:14:21 fwlan kernel: Copyright (c) 1992-2018 The FreeBSD Project.

Sep 10 03:14:21 fwlan kernel: Copyright (c) 1979, 1980, 1983, 1986, 1988, 1989, 1991, 1992, 1993, 1994

Sep 10 03:14:21 fwlan kernel: The Regents of the University of California. All rights reserved.

Sep 10 03:14:21 fwlan kernel: FreeBSD is a registered trademark of The FreeBSD Foundation.

Sep 10 03:14:21 fwlan kernel: FreeBSD 11.2-RELEASE-p10 #9 4a2bfdce133(RELENG_2_4_4): Wed May 15 18:54:42 EDT 2019

Sep 10 03:14:21 fwlan kernel: root@buildbot1-nyi.netgate.com:/build/ce-crossbuild-244/obj/amd64/ZfGpH5cd/build/ce-crossbuild-244/pfSense/tmp/FreeBSD-src/sys/pfSense amd64

Sep 10 03:14:21 fwlan kernel: FreeBSD clang version 6.0.0 (tags/RELEASE_600/final 326565) (based on LLVM 6.0.0)

Sep 10 03:14:21 fwlan kernel: VT(vga): text 80x25

Sep 10 03:14:21 fwlan kernel: CPU: Intel(R) Xeon(R) CPU X5660 @ 2.80GHz (2799.20-MHz K8-class CPU)

Sep 10 03:14:21 fwlan kernel: Origin="GenuineIntel" Id=0x206c2 Family=0x6 Model=0x2c Stepping=2

Sep 10 03:14:21 fwlan kernel: Features=0x1fa3fbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE,MCA,CMOV,PAT,PSE36,DTS,MMX,FXSR,SSE,SSE2,SS,HTT>

Sep 10 03:14:21 fwlan kernel: Features2=0x82982203<SSE3,PCLMULQDQ,SSSE3,CX16,SSE4.1,SSE4.2,POPCNT,AESNI,HV>

Sep 10 03:14:21 fwlan kernel: AMD Features=0x28100800<SYSCALL,NX,RDTSCP,LM>

Sep 10 03:14:21 fwlan kernel: AMD Features2=0x1<LAHF>

Sep 10 03:14:21 fwlan kernel: TSC: P-state invariant

Sep 10 03:14:21 fwlan kernel: Hypervisor: Origin = "VMwareVMware"

Sep 10 03:14:21 fwlan kernel: real memory = 536870912 (512 MB)

Sep 10 03:14:21 fwlan kernel: avail memory = 458670080 (437 MB)

Sep 10 03:14:21 fwlan kernel: Event timer "LAPIC" quality 600

Sep 10 03:14:21 fwlan kernel: ACPI APIC Table: <PTLTD APIC >

Sep 10 03:14:21 fwlan kernel: FreeBSD/SMP: Multiprocessor System Detected: 4 CPUs

Sep 10 03:14:21 fwlan kernel: FreeBSD/SMP: 2 package(s) x 2 core(s)

Sep 10 03:14:21 fwlan kernel: MADT: Forcing active-low polarity and level trigger for SCI

Sep 10 03:14:21 fwlan kernel: ioapic0 <Version 1.1> irqs 0-23 on motherboard

Sep 10 03:14:21 fwlan kernel: SMP: AP CPU #3 Launched!

Sep 10 03:14:21 fwlan kernel: SMP: AP CPU #2 Launched!

Sep 10 03:14:21 fwlan kernel: SMP: AP CPU #1 Launched!

Sep 10 03:14:21 fwlan kernel: ipw_bss: You need to read the LICENSE file in /usr/share/doc/legal/intel_ipw.LICENSE.

Sep 10 03:14:21 fwlan kernel: ipw_bss: If you agree with the license, set legal.intel_ipw.license_ack=1 in /boot/loader.conf.

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (ipw_bss_fw, 0xffffffff80681430, 0) error 1

Sep 10 03:14:21 fwlan kernel: random: entropy device external interface

Sep 10 03:14:21 fwlan kernel: ipw_ibss: You need to read the LICENSE file in /usr/share/doc/legal/intel_ipw.LICENSE.

Sep 10 03:14:21 fwlan kernel: ipw_ibss: If you agree with the license, set legal.intel_ipw.license_ack=1 in /boot/loader.conf.

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (ipw_ibss_fw, 0xffffffff806814e0, 0) error 1

Sep 10 03:14:21 fwlan kernel: ipw_monitor: You need to read the LICENSE file in /usr/share/doc/legal/intel_ipw.LICENSE.

Sep 10 03:14:21 fwlan kernel: ipw_monitor: If you agree with the license, set legal.intel_ipw.license_ack=1 in /boot/loader.conf.

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (ipw_monitor_fw, 0xffffffff80681590, 0) error 1

Sep 10 03:14:21 fwlan kernel: iwi_bss: You need to read the LICENSE file in /usr/share/doc/legal/intel_iwi.LICENSE.

Sep 10 03:14:21 fwlan kernel: iwi_bss: If you agree with the license, set legal.intel_iwi.license_ack=1 in /boot/loader.conf.

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (iwi_bss_fw, 0xffffffff806a8460, 0) error 1

Sep 10 03:14:21 fwlan kernel: iwi_ibss: You need to read the LICENSE file in /usr/share/doc/legal/intel_iwi.LICENSE.

Sep 10 03:14:21 fwlan kernel: iwi_ibss: If you agree with the license, set legal.intel_iwi.license_ack=1 in /boot/loader.conf.

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (iwi_ibss_fw, 0xffffffff806a8510, 0) error 1

Sep 10 03:14:21 fwlan kernel: iwi_monitor: You need to read the LICENSE file in /usr/share/doc/legal/intel_iwi.LICENSE.

Sep 10 03:14:21 fwlan kernel: iwi_monitor: If you agree with the license, set legal.intel_iwi.license_ack=1 in /boot/loader.conf.

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (iwi_monitor_fw, 0xffffffff806a85c0, 0) error 1

Sep 10 03:14:21 fwlan kernel: wlan: mac acl policy registered

Sep 10 03:14:21 fwlan kernel: kbd1 at kbdmux0

Sep 10 03:14:21 fwlan kernel: netmap: loaded module

Sep 10 03:14:21 fwlan kernel: module_register_init: MOD_LOAD (vesa, 0xffffffff8120aaa0, 0) error 19

Sep 10 03:14:21 fwlan kernel: nexus0

Sep 10 03:14:21 fwlan kernel: vtvga0: <VT VGA driver> on motherboard

Sep 10 03:14:21 fwlan kernel: cryptosoft0: <software crypto> on motherboard

Sep 10 03:14:21 fwlan kernel: padlock0: No ACE support.

Sep 10 03:14:21 fwlan kernel: acpi0: <INTEL 440BX> on motherboard

Sep 10 03:14:21 fwlan kernel: acpi0: Power Button (fixed)

Sep 10 03:14:21 fwlan kernel: hpet0: <High Precision Event Timer> iomem 0xfed00000-0xfed003ff on acpi0

Sep 10 03:14:21 fwlan kernel: Timecounter "HPET" frequency 14318180 Hz quality 950

Sep 10 03:14:21 fwlan kernel: cpu0: <ACPI CPU> numa-domain 0 on acpi0

Sep 10 03:14:21 fwlan kernel: cpu1: <ACPI CPU> numa-domain 0 on acpi0

Sep 10 03:14:21 fwlan kernel: cpu2: <ACPI CPU> numa-domain 0 on acpi0

Sep 10 03:14:21 fwlan kernel: cpu3: <ACPI CPU> numa-domain 0 on acpi0

Sep 10 03:14:21 fwlan kernel: attimer0: <AT timer> port 0x40-0x43 irq 0 on acpi0

Sep 10 03:14:21 fwlan kernel: Timecounter "i8254" frequency 1193182 Hz quality 0

Sep 10 03:14:21 fwlan kernel: Event timer "i8254" frequency 1193182 Hz quality 100

Sep 10 03:14:21 fwlan kernel: atrtc0: <AT realtime clock> port 0x70-0x71 irq 8 on acpi0

Sep 10 03:14:21 fwlan kernel: atrtc0: registered as a time-of-day clock, resolution 1.000000s

Sep 10 03:14:21 fwlan kernel: Event timer "RTC" frequency 32768 Hz quality 0

Sep 10 03:14:21 fwlan kernel: Timecounter "ACPI-fast" frequency 3579545 Hz quality 900

Sep 10 03:14:21 fwlan kernel: acpi_timer0: <24-bit timer at 3.579545MHz> port 0x1008-0x100b on acpi0

Sep 10 03:14:21 fwlan kernel: pcib0: <ACPI Host-PCI bridge> port 0xcf8-0xcff on acpi0

Sep 10 03:14:21 fwlan kernel: pci0: <ACPI PCI bus> on pcib0

Sep 10 03:14:21 fwlan kernel: pcib1: <ACPI PCI-PCI bridge> at device 1.0 on pci0

Sep 10 03:14:21 fwlan kernel: pci1: <ACPI PCI bus> on pcib1

Sep 10 03:14:21 fwlan kernel: isab0: <PCI-ISA bridge> at device 7.0 on pci0

Sep 10 03:14:21 fwlan kernel: isa0: <ISA bus> on isab0

Sep 10 03:14:21 fwlan kernel: atapci0: <Intel PIIX4 UDMA33 controller> port 0x1f0-0x1f7,0x3f6,0x170-0x177,0x376,0x1060-0x106f at device 7.1 on pci0

Sep 10 03:14:21 fwlan kernel: ata0: <ATA channel> at channel 0 on atapci0

Sep 10 03:14:21 fwlan kernel: ata1: <ATA channel> at channel 1 on atapci0

Sep 10 03:14:21 fwlan kernel: pci0: <bridge> at device 7.3 (no driver attached)

Sep 10 03:14:21 fwlan kernel: vgapci0: <VGA-compatible display> port 0x1070-0x107f mem 0xec000000-0xefffffff,0xfe000000-0xfe7fffff irq 16 at device 15.0 on pci0

Sep 10 03:14:21 fwlan kernel: vgapci0: Boot video device

Sep 10 03:14:21 fwlan kernel: pcib2: <ACPI PCI-PCI bridge> at device 17.0 on pci0

Sep 10 03:14:21 fwlan kernel: pci2: <ACPI PCI bus> on pcib2

Sep 10 03:14:21 fwlan kernel: em0: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2000-0x203f mem 0xfd560000-0xfd57ffff,0xfdff0000-0xfdffffff irq 18 at device 0.0 on pci2

Sep 10 03:14:21 fwlan kernel: em0: Ethernet address: 00:50:56:a6:06:40

Sep 10 03:14:21 fwlan kernel: em0: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em0: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em1: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2040-0x207f mem 0xfd540000-0xfd55ffff,0xfdfe0000-0xfdfeffff irq 19 at device 1.0 on pci2

Sep 10 03:14:21 fwlan kernel: em1: Ethernet address: 00:50:56:0c:53:c2

Sep 10 03:14:21 fwlan kernel: em1: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em1: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em2: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2080-0x20bf mem 0xfd520000-0xfd53ffff,0xfdfd0000-0xfdfdffff irq 17 at device 3.0 on pci2

Sep 10 03:14:21 fwlan kernel: em2: Ethernet address: 00:50:56:a6:dd:d7

Sep 10 03:14:21 fwlan kernel: em2: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em2: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em3: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x20c0-0x20ff mem 0xfd500000-0xfd51ffff,0xfdfc0000-0xfdfcffff irq 18 at device 4.0 on pci2

Sep 10 03:14:21 fwlan kernel: em3: Ethernet address: 00:50:56:a6:da:1b

Sep 10 03:14:21 fwlan kernel: em3: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em3: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em4: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2400-0x243f mem 0xfd4e0000-0xfd4fffff,0xfdfb0000-0xfdfbffff irq 19 at device 5.0 on pci2

Sep 10 03:14:21 fwlan kernel: em4: Ethernet address: 00:50:56:a6:89:a3

Sep 10 03:14:21 fwlan kernel: em4: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em4: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em5: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2440-0x247f mem 0xfd4c0000-0xfd4dffff,0xfdfa0000-0xfdfaffff irq 16 at device 6.0 on pci2

Sep 10 03:14:21 fwlan kernel: em5: Ethernet address: 00:50:56:a6:7f:7c

Sep 10 03:14:21 fwlan kernel: em5: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em5: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em6: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x2480-0x24bf mem 0xfd4a0000-0xfd4bffff,0xfdf90000-0xfdf9ffff irq 17 at device 7.0 on pci2

Sep 10 03:14:21 fwlan kernel: em6: Ethernet address: 00:50:56:a6:3c:9c

Sep 10 03:14:21 fwlan kernel: em6: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em6: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: em7: <Intel(R) PRO/1000 Legacy Network Connection 1.1.0> port 0x24c0-0x24ff mem 0xfd480000-0xfd49ffff,0xfdf80000-0xfdf8ffff irq 18 at device 8.0 on pci2

Sep 10 03:14:21 fwlan kernel: em7: Ethernet address: 00:50:56:a6:28:eb

Sep 10 03:14:21 fwlan kernel: em7: link state changed to UP

Sep 10 03:14:21 fwlan kernel: em7: netmap queues/slots: TX 1/256, RX 1/256

Sep 10 03:14:21 fwlan kernel: pcib3: <ACPI PCI-PCI bridge> at device 21.0 on pci0

Sep 10 03:14:21 fwlan kernel: pcib3: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pci3: <ACPI PCI bus> on pcib3

Sep 10 03:14:21 fwlan kernel: mpt0: <LSILogic SAS/SATA Adapter> port 0x4000-0x40ff mem 0xfd3ec000-0xfd3effff,0xfd3f0000-0xfd3fffff irq 18 at device 0.0 on pci3

Sep 10 03:14:21 fwlan kernel: mpt0: MPI Version=1.5.0.0

Sep 10 03:14:21 fwlan kernel: pcib4: <ACPI PCI-PCI bridge> at device 21.1 on pci0

Sep 10 03:14:21 fwlan kernel: pcib4: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib5: <ACPI PCI-PCI bridge> at device 21.2 on pci0

Sep 10 03:14:21 fwlan kernel: pcib5: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib6: <ACPI PCI-PCI bridge> at device 21.3 on pci0

Sep 10 03:14:21 fwlan kernel: pcib6: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib7: <ACPI PCI-PCI bridge> at device 21.4 on pci0

Sep 10 03:14:21 fwlan kernel: pcib7: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib8: <ACPI PCI-PCI bridge> at device 21.5 on pci0

Sep 10 03:14:21 fwlan kernel: pcib8: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib9: <ACPI PCI-PCI bridge> at device 21.6 on pci0

Sep 10 03:14:21 fwlan kernel: pcib9: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib10: <ACPI PCI-PCI bridge> at device 21.7 on pci0

Sep 10 03:14:21 fwlan kernel: pcib10: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pcib11: <ACPI PCI-PCI bridge> at device 22.0 on pci0

Sep 10 03:14:21 fwlan kernel: pcib11: [GIANT-LOCKED]

Sep 10 03:14:21 fwlan kernel: pci4: <ACPI PCI bus> on pcib11

Sep 10 03:14:21 fwlan kernel: vmx0: <VMware VMXNET3 Ethernet Adapter> port 0x5000-0x500f mem 0xfd2fc000-0xfd2fcfff,0xfd2fd000-0xfd2fdfff,0xfd2fe000-0xfd2fffff irq 19 at device 0.0 on pci4

Sep 10 03:14:21 fwlan kernel: vmx0: Ethernet address: 00:0c:29:cd:30:a3

Sep 10 03:14:21 fwlan kernel: 
pcib12: <ACPI PCI-PCI bridge> at device 22.1 on pci0 Sep 10 03:14:21 fwlan kernel: pcib12: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib13: <ACPI PCI-PCI bridge> at device 22.2 on pci0 Sep 10 03:14:21 fwlan kernel: pcib13: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib14: <ACPI PCI-PCI bridge> at device 22.3 on pci0 Sep 10 03:14:21 fwlan kernel: pcib14: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib15: <ACPI PCI-PCI bridge> at device 22.4 on pci0 Sep 10 03:14:21 fwlan kernel: pcib15: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib16: <ACPI PCI-PCI bridge> at device 22.5 on pci0 Sep 10 03:14:21 fwlan kernel: pcib16: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib17: <ACPI PCI-PCI bridge> at device 22.6 on pci0 Sep 10 03:14:21 fwlan kernel: pcib17: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib18: <ACPI PCI-PCI bridge> at device 22.7 on pci0 Sep 10 03:14:21 fwlan kernel: pcib18: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib19: <ACPI PCI-PCI bridge> at device 23.0 on pci0 Sep 10 03:14:21 fwlan kernel: pcib19: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib20: <ACPI PCI-PCI bridge> at device 23.1 on pci0 Sep 10 03:14:21 fwlan kernel: pcib20: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib21: <ACPI PCI-PCI bridge> at device 23.2 on pci0 Sep 10 03:14:21 fwlan kernel: pcib21: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib22: <ACPI PCI-PCI bridge> at device 23.3 on pci0 Sep 10 03:14:21 fwlan kernel: pcib22: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib23: <ACPI PCI-PCI bridge> at device 23.4 on pci0 Sep 10 03:14:21 fwlan kernel: pcib23: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib24: <ACPI PCI-PCI bridge> at device 23.5 on pci0 Sep 10 03:14:21 fwlan kernel: pcib24: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib25: <ACPI PCI-PCI bridge> at device 23.6 on pci0 Sep 10 03:14:21 fwlan kernel: pcib25: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib26: <ACPI PCI-PCI bridge> at device 23.7 on pci0 Sep 10 03:14:21 fwlan kernel: pcib26: 
[GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib27: <ACPI PCI-PCI bridge> at device 24.0 on pci0 Sep 10 03:14:21 fwlan kernel: pcib27: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib28: <ACPI PCI-PCI bridge> at device 24.1 on pci0 Sep 10 03:14:21 fwlan kernel: pcib28: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib29: <ACPI PCI-PCI bridge> at device 24.2 on pci0 Sep 10 03:14:21 fwlan kernel: pcib29: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib30: <ACPI PCI-PCI bridge> at device 24.3 on pci0 Sep 10 03:14:21 fwlan kernel: pcib30: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib31: <ACPI PCI-PCI bridge> at device 24.4 on pci0 Sep 10 03:14:21 fwlan kernel: pcib31: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib32: <ACPI PCI-PCI bridge> at device 24.5 on pci0 Sep 10 03:14:21 fwlan kernel: pcib32: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib33: <ACPI PCI-PCI bridge> at device 24.6 on pci0 Sep 10 03:14:21 fwlan kernel: pcib33: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: pcib34: <ACPI PCI-PCI bridge> at device 24.7 on pci0 Sep 10 03:14:21 fwlan kernel: pcib34: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: acpi_acad0: <AC Adapter> on acpi0 Sep 10 03:14:21 fwlan kernel: atkbdc0: <Keyboard controller (i8042)> port 0x60,0x64 irq 1 on acpi0 Sep 10 03:14:21 fwlan kernel: atkbd0: <AT Keyboard> irq 1 on atkbdc0 Sep 10 03:14:21 fwlan kernel: kbd0 at atkbd0 Sep 10 03:14:21 fwlan kernel: atkbd0: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: psm0: <PS/2 Mouse> irq 12 on atkbdc0 Sep 10 03:14:21 fwlan kernel: psm0: [GIANT-LOCKED] Sep 10 03:14:21 fwlan kernel: psm0: model IntelliMouse, device ID 3 Sep 10 03:14:21 fwlan kernel: acpi_syscontainer0: <System Container> on acpi0 Sep 10 03:14:21 fwlan kernel: ppc0: <Parallel port> port 0x378-0x37b irq 7 on acpi0 Sep 10 03:14:21 fwlan kernel: ppc0: Generic chipset (NIBBLE-only) in COMPATIBLE mode Sep 10 03:14:21 fwlan kernel: ppbus0: <Parallel port bus> on ppc0 Sep 10 03:14:21 fwlan kernel: lpt0: <Printer> on ppbus0 Sep 10 
03:14:21 fwlan kernel: lpt0: Interrupt-driven port Sep 10 03:14:21 fwlan kernel: ppi0: <Parallel I/O> on ppbus0 Sep 10 03:14:21 fwlan kernel: uart0: <16550 or compatible> port 0x3f8-0x3ff irq 4 flags 0x10 on acpi0 Sep 10 03:14:21 fwlan kernel: uart1: <16550 or compatible> port 0x2f8-0x2ff irq 3 on acpi0 Sep 10 03:14:21 fwlan kernel: fdc0: <floppy drive controller> port 0x3f0-0x3f5,0x3f7 irq 6 drq 2 on acpi0 Sep 10 03:14:21 fwlan kernel: fd0: <1440-KB 3.5" drive> on fdc0 drive 0 Sep 10 03:14:21 fwlan kernel: orm0: <ISA Option ROMs> at iomem 0xc0000-0xc7fff,0xc8000-0xc8fff,0xc9000-0xc9fff,0xca000-0xcafff,0xcb000-0xcbfff,0xcc000-0xccfff,0xcd000-0xcdfff,0xce000-0xcefff,0xcf000-0xcffff,0xd0000-0xd1fff,0xd2000-0xd2fff,0xdc000-0xdffff,0xe0000-0xe7fff on isa0 Sep 10 03:14:21 fwlan kernel: vga0: <Generic ISA VGA> at port 0x3c0-0x3df iomem 0xa0000-0xbffff on isa0 Sep 10 03:14:21 fwlan kernel: Timecounters tick every 10.000 msec Sep 10 03:14:21 fwlan kernel: da0 at mpt0 bus 0 scbus2 target 0 lun 0 Sep 10 03:14:21 fwlan kernel: da0: <VMware Virtual disk 1.0> Fixed Direct Access SCSI-2 device Sep 10 03:14:21 fwlan kernel: da0: 300.000MB/s transfers Sep 10 03:14:21 fwlan kernel: da0: Command Queueing enabled Sep 10 03:14:21 fwlan kernel: da0: 2048MB (4194304 512 byte sectors) Sep 10 03:14:21 fwlan kernel: da0: quirks=0x140<RETRY_BUSY,STRICT_UNMAP> Sep 10 03:14:21 fwlan kernel: cd0 at ata1 bus 0 scbus1 target 0 lun 0 Sep 10 03:14:21 fwlan kernel: cd0: <NECVMWar VMware IDE CDR10 1.00> Removable CD-ROM SCSI device Sep 10 03:14:21 fwlan kernel: cd0: Serial Number 10000000000000000001 Sep 10 03:14:21 fwlan kernel: cd0: 33.300MB/s transfers (UDMA2, ATAPI 12bytes, PIO 65534bytes) Sep 10 03:14:21 fwlan kernel: cd0: Attempt to query device size failed: NOT READY, Medium not present Sep 10 03:14:21 fwlan kernel: cd0: quirks=0x40<RETRY_BUSY> Sep 10 03:14:21 fwlan kernel: Trying to mount root from ufs:/dev/ufsid/5556008062c6837b [rw]... 
Sep 10 03:14:21 fwlan kernel: random: unblocking device. Sep 10 03:14:21 fwlan kernel: CPU: Intel(R) Xeon(R) CPU X5660 @ 2.80GHz (2799.20-MHz K8-class CPU) Sep 10 03:14:21 fwlan kernel: Origin="GenuineIntel" Id=0x206c2 Family=0x6 Model=0x2c Stepping=2 Sep 10 03:14:21 fwlan kernel: Features=0x1fabfbff<FPU,VME,DE,PSE,TSC,MSR,PAE,MCE,CX8,APIC,SEP,MTRR,PGE,MCA,CMOV,PAT,PSE36,CLFLUSH,DTS,MMX,FXSR,SSE,SSE2,SS,HTT> Sep 10 03:14:21 fwlan kernel: Features2=0x82982203<SSE3,PCLMULQDQ,SSSE3,CX16,SSE4.1,SSE4.2,POPCNT,AESNI,HV> Sep 10 03:14:21 fwlan kernel: AMD Features=0x28100800<SYSCALL,NX,RDTSCP,LM> Sep 10 03:14:21 fwlan kernel: AMD Features2=0x1<LAHF> Sep 10 03:14:21 fwlan kernel: TSC: P-state invariant Sep 10 03:14:21 fwlan kernel: Hypervisor: Origin = "VMwareVMware" Sep 10 03:14:21 fwlan kernel: padlock0: No ACE support. Sep 10 03:14:21 fwlan kernel: aesni0: <AES-CBC,AES-XTS,AES-GCM,AES-ICM> on motherboard Sep 10 03:14:22 fwlan kernel: done. Sep 10 03:14:22 fwlan kernel: done. Sep 10 03:14:22 fwlan check_reload_status: Linkup starting vmx0 Sep 10 03:14:22 fwlan kernel: done. Sep 10 03:14:22 fwlan kernel: vmx0: link state changed to UP Sep 10 03:14:22 fwlan php-cgi: rc.bootup: Resyncing OpenVPN instances. Sep 10 03:14:22 fwlan kernel: Sep 10 03:14:22 fwlan kernel: tun1: changing name to 'ovpns1' Sep 10 03:14:22 fwlan kernel: ovpns1: link state changed to UP Sep 10 03:14:22 fwlan kernel: tun2: changing name to 'ovpns2' Sep 10 03:14:22 fwlan check_reload_status: rc.newwanip starting ovpns1 Sep 10 03:14:22 fwlan kernel: ovpns2: link state changed to UP Sep 10 03:14:22 fwlan kernel: tun3: changing name to 'ovpns3' Sep 10 03:14:22 fwlan check_reload_status: rc.newwanip starting ovpns2 Sep 10 03:14:23 fwlan sshd[68557]: Server listening on :: port 22. Sep 10 03:14:23 fwlan sshd[68557]: Server listening on 0.0.0.0 port 22. Sep 10 03:14:23 fwlan syslogd: Logging subprocess 69035 (exec /usr/local/sbin/sshguard) exited due to signal 15. 
Sep 10 03:14:23 fwlan kernel: ovpns3: link state changed to UP Sep 10 03:14:23 fwlan check_reload_status: rc.newwanip starting ovpns3 Sep 10 03:14:23 fwlan kernel: tun5: changing name to 'ovpns5' Sep 10 03:14:23 fwlan kernel: ovpns5: link state changed to UP Sep 10 03:14:23 fwlan check_reload_status: rc.newwanip starting ovpns5 Sep 10 03:14:23 fwlan kernel: pflog0: promiscuous mode enabled Sep 10 03:14:23 fwlan kernel: . Sep 10 03:14:23 fwlan kernel: DUMMYNET 0 with IPv6 initialized (100409) Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched FIFO loaded Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched QFQ loaded Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched RR loaded Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched WF2Q+ loaded Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched PRIO loaded Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched FQ_CODEL loaded Sep 10 03:14:23 fwlan kernel: load_dn_sched dn_sched FQ_PIE loaded Sep 10 03:14:23 fwlan kernel: load_dn_aqm dn_aqm CODEL loaded Sep 10 03:14:23 fwlan kernel: load_dn_aqm dn_aqm PIE loaded Sep 10 03:14:23 fwlan kernel: ...done. Sep 10 03:14:23 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: Info: starting on ovpns1. Sep 10 03:14:23 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns1). Sep 10 03:14:23 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: Info: starting on ovpns2. Sep 10 03:14:23 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns2). Sep 10 03:14:24 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: Info: starting on ovpns3. Sep 10 03:14:24 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns3). Sep 10 03:14:24 fwlan php-cgi: rc.bootup: Default gateway setting as default. Sep 10 03:14:24 fwlan php-cgi: rc.bootup: Gateway, none 'available' for inet6, use the first one configured. 
'' Sep 10 03:14:24 fwlan kernel: done. Sep 10 03:14:24 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: Info: starting on ovpns5. Sep 10 03:14:24 fwlan php-fpm[342]: /rc.newwanip: rc.newwanip: on (IP address: ***.***.***.***) (interface: []) (real interface: ovpns5). Sep 10 03:14:24 fwlan kernel: done. Sep 10 03:14:25 fwlan kernel: done. Sep 10 03:14:25 fwlan php-cgi: rc.bootup: NTPD is starting up. Sep 10 03:14:26 fwlan kernel: done. Sep 10 03:14:26 fwlan check_reload_status: Updating all dyndns Sep 10 03:14:26 fwlan kernel: ... Sep 10 03:14:26 fwlan kernel: .done. Sep 10 03:14:30 fwlan php-cgi: rc.bootup: Creating rrd update script Sep 10 03:14:31 fwlan kernel: done. Sep 10 03:14:31 fwlan syslogd: exiting on signal 15 Sep 10 03:14:31 fwlan syslogd: kernel boot file is /boot/kernel/kernel Sep 10 03:14:31 fwlan kernel: done. Sep 10 03:14:31 fwlan php-fpm[343]: /rc.start_packages: Restarting/Starting all packages. Sep 10 03:14:31 fwlan stunnel: LOG5[ui]: stunnel 5.47 on amd64-portbld-freebsd11.2 platform Sep 10 03:14:31 fwlan stunnel: LOG5[ui]: Compiled/running with OpenSSL 1.0.2o-freebsd 27 Mar 2018 Sep 10 03:14:31 fwlan stunnel: LOG5[ui]: Threading:PTHREAD Sockets:POLL,IPv6 TLS:ENGINE,OCSP,PSK,SNI Sep 10 03:14:31 fwlan stunnel: LOG5[ui]: Reading configuration from file /usr/local/etc/stunnel/stunnel.conf Sep 10 03:14:31 fwlan stunnel: LOG5[ui]: UTF-8 byte order mark not detected Sep 10 03:14:31 fwlan stunnel: LOG5[ui]: Configuration successful Sep 10 03:14:32 fwlan kernel: VMware memory control driver initialized Sep 10 03:14:32 fwlan radiusd[54174]: Debugger not attached Sep 10 03:14:32 fwlan login: login on ttyv0 as root Sep 10 03:14:32 fwlan radiusd[65619]: [/usr/local/etc/raddb/mods-config/attr_filter/access_reject]:11 Check item "FreeRADIUS-Response-Delay" found in filter list for realm "DEFAULT". 
Sep 10 03:14:32 fwlan radiusd[65619]: [/usr/local/etc/raddb/mods-config/attr_filter/access_reject]:11 Check item "FreeRADIUS-Response-Delay-USec" found in filter list for realm "DEFAULT".
Sep 10 03:14:32 fwlan radiusd[65619]: Loaded virtual server <default>
Sep 10 03:14:32 fwlan radiusd[65619]: Loaded virtual server default
Sep 10 03:14:32 fwlan radiusd[65619]: Ignoring "sql" (see raddb/mods-available/README.rst)
Sep 10 03:14:32 fwlan radiusd[65619]: Ignoring "ldap" (see raddb/mods-available/README.rst)
Sep 10 03:14:32 fwlan radiusd[65619]: # Skipping contents of 'if' as it is always 'false' -- /usr/local/etc/raddb/sites-enabled/inner-tunnel-ttls:63
Sep 10 03:14:32 fwlan radiusd[65619]: Loaded virtual server inner-tunnel-ttls
Sep 10 03:14:32 fwlan radiusd[65619]: # Skipping contents of 'if' as it is always 'false' -- /usr/local/etc/raddb/sites-enabled/inner-tunnel-peap:63
Sep 10 03:14:32 fwlan radiusd[65619]: Loaded virtual server inner-tunnel-peap
Sep 10 03:14:32 fwlan radiusd[65619]: Ready to process requests

It seems that the line "kernel: SRAT: Ignoring memory at addr" is not present in the VMware log.
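To check systematically which boot messages (the SRAT line or anything else) appear in the Hyper-V log but not in the vSphere one, a small comparison script can help. The sketch below is only that: a sketch under stated assumptions. It assumes both logs use the BSD syslog prefix seen above (`Sep 10 03:14:21 hostname`), works whether the entries are one per line or run together as pasted into a post, and masks PIDs and hex addresses so that harmless differences between the two VMs don't drown out the interesting lines. The script name `bootdiff.py` and its functions are hypothetical, not part of pfSense or FreeBSD.

```python
import re
import sys

# Assumption: BSD-syslog prefix such as "Sep 10 03:14:21 fwlan ", with a
# hostname that is a single token without spaces.
PREFIX = re.compile(r"[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2} \S+ ")


def split_entries(text):
    """Split a syslog dump (one entry per line or run together) into
    message bodies with the timestamp/hostname prefix removed."""
    matches = list(PREFIX.finditer(text))
    bodies = []
    for i, m in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        bodies.append(text[m.end():end].strip())
    return bodies


def normalize(body):
    """Mask values that differ between otherwise identical VMs."""
    body = re.sub(r"\[\d+\]", "[]", body)          # process IDs, e.g. radiusd[65619]
    body = re.sub(r"0x[0-9a-fA-F]+", "0xN", body)  # addresses, I/O ports, IDs
    return body


def only_in(left_text, right_text, keyword=""):
    """Normalized messages present in the left log but not in the right
    one, optionally limited to raw messages containing `keyword`."""
    right = {normalize(b) for b in split_entries(right_text)}
    return sorted(
        {normalize(b) for b in split_entries(left_text)
         if keyword in b and normalize(b) not in right}
    )


def main(argv):
    # Hypothetical CLI: bootdiff.py LEFT_LOG RIGHT_LOG [KEYWORD]
    if len(argv) not in (2, 3):
        print("usage: bootdiff.py HYPERV_LOG VSPHERE_LOG [KEYWORD]")
        return
    with open(argv[0], encoding="utf-8", errors="replace") as f:
        left = f.read()
    with open(argv[1], encoding="utf-8", errors="replace") as f:
        right = f.read()
    keyword = argv[2] if len(argv) == 3 else ""
    for line in only_in(left, right, keyword):
        print(line)


if __name__ == "__main__":
    main(sys.argv[1:])
```

For example, with saved copies of the two boot logs (the file names are placeholders), `python3 bootdiff.py hyperv.log vsphere.log SRAT` would print only the SRAT messages that the vSphere log lacks. Swapping the two arguments lists what is unique to vSphere, such as the VMware-specific driver lines.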

-

So that's not in Azure directly?

-

Exactly. VMware vSphere and Hyper-V on Windows Server 2019.

-

So the Windows server is running in Azure? Not that that should make any difference, but I'm not sure I've seen anyone running nested hypervisors like that.

-

I don't have any pfSense instance directly in Azure at the moment, just pfSense running on Hyper-V.

Actually, the problem is present in every configuration I tried, nested or bare-metal Hyper-V, in all possible combinations. This makes sense because, from a hardware point of view, the VM doesn't know what hardware lies beyond the first layer; it just sees Hyper-V hardware or VMware hardware.

I am running Hyper-V on Windows Server 2019 (just as you can run it on a Windows Pro or Enterprise PC).

Do you think the line "kernel: SRAT: Ignoring memory at addr", or something else in the log, is worth investigating further? Do you have a chance to try it in a Hyper-V environment?

-

Lazy me would just throw another whole 512MB of RAM (or even 256MB) at the Hyper-V install and chalk it up to differences in the emulation/implementation of the vNICs. Or just go back to VMware.