pfSense high CPU usage on KVM (Unraid)

-

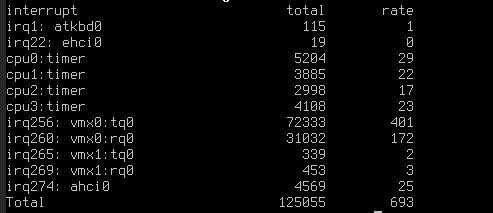

I actually don't know how to read the vmstat -i output, but I hope you might know more, @stephenw10.

-

one queue

vmx0: tq0 (transmission queue 0)

vmx0: rq0 (receive queue 0)

With multiple queues you should see tq0 / tq1, etc.

-

Yeah, that. Though I don't have anything vmx to test again right now.

I think it probably is working as you are seeing the high numbered IRQs which MSI uses.

Try removing that line or commenting it out and rebooting. Do you see any change?

On other NICs you might see something like:

    [2.4.4-RELEASE][root@5100.stevew.lan]/root: vmstat -i
    interrupt                    total  rate
    irq7: uart0                    432     0
    irq16: sdhci_pci0              536     0
    cpu0:timer                68688188  1001
    cpu3:timer                 1069435    16
    cpu2:timer                 1060293    15
    cpu1:timer                 1086989    16
    irq264: igb0:que 0           68630     1
    irq265: igb0:que 1           68630     1
    irq266: igb0:que 2           68630     1
    irq267: igb0:que 3           68630     1
    irq268: igb0:link                3     0
    irq269: igb1:que 0           68630     1
    irq270: igb1:que 1           68630     1
    irq271: igb1:que 2           68630     1
    irq272: igb1:que 3           68630     1
    irq273: igb1:link                1     0
    irq274: ahci0:ch0             4473     0
    irq290: xhci0                   85     0
    irq291: ix0:q0              216643     3
    irq292: ix0:q1               47933     1
    irq293: ix0:q2              325480     5
    irq294: ix0:q3              514752     7
    irq295: ix0:link                 2     0
    irq301: ix2:q0               74629     1
    irq302: ix2:q1                 507     0
    irq303: ix2:q2                1703     0
    irq304: ix2:q3               89446     1
    irq305: ix2:link                 1     0
    irq306: ix3:q0               70295     1
    irq307: ix3:q1                4985     0
    irq308: ix3:q2              186433     3
    irq309: ix3:q3              413486     6
    irq310: ix3:link                 1     0
    Total                     74405771  1084

https://www.freebsd.org/cgi/man.cgi?query=vmx#MULTIPLE_QUEUES

Steve
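
For anyone unsure how to read vmstat -i: each NIC queue gets its own interrupt line, so counting those lines per interface shows how many queues are actually in use. Below is a minimal Python sketch of that count. The regular expression is an assumption based only on the igb/ix naming in the sample output above (`igb0:que 0`, `ix0:q0`); other drivers such as vmx label their queues differently and would need their own pattern. The function name `queues_per_nic` is made up for illustration.

```python
import re
from collections import defaultdict

# Assumed line shapes, taken from the sample output in this thread:
#   irq264: igb0:que 0 68630 1
#   irq291: ix0:q0 216643 3
# Link interrupts ("igb0:link") and timer lines are deliberately not matched.
QUEUE_RE = re.compile(r"irq(\d+): (\w+?\d+):(?:que |q)(\d+)\s+(\d+)\s+(\d+)")


def queues_per_nic(vmstat_output):
    """Return {nic: {queue_index: total_interrupts}} from `vmstat -i` text."""
    result = defaultdict(dict)
    for _irq, nic, queue, total, _rate in QUEUE_RE.findall(vmstat_output):
        result[nic][int(queue)] = int(total)
    return dict(result)


if __name__ == "__main__":
    sample = """\
irq264: igb0:que 0 68630 1
irq265: igb0:que 1 68630 1
irq268: igb0:link 3 0
irq291: ix0:q0 216643 3
irq292: ix0:q1 47933 1
"""
    for nic, queues in queues_per_nic(sample).items():
        print(nic, len(queues), "queue(s)", queues)
```

A NIC that reports only one queue here is handling all of its traffic through a single interrupt, which is the situation described for vmx0 earlier in the thread.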

-

Try adding this to your loader.conf.local:

hw.vmx.txnqueue="4"

hw.vmx.rxnqueue="4"

-

I added the lines

hw.vmx.txnqueue="4"

hw.vmx.rxnqueue="4"

I did not see any change whatsoever in vmstat -i:

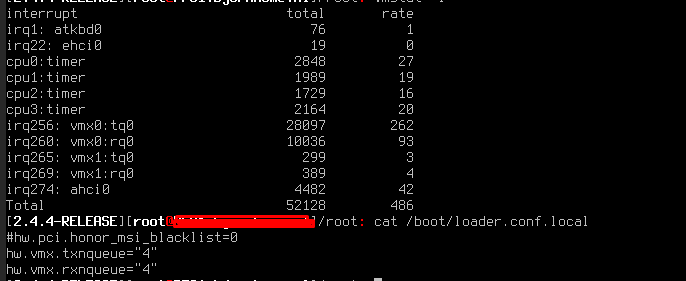

Commenting out the first line also did not change anything:

Edit:

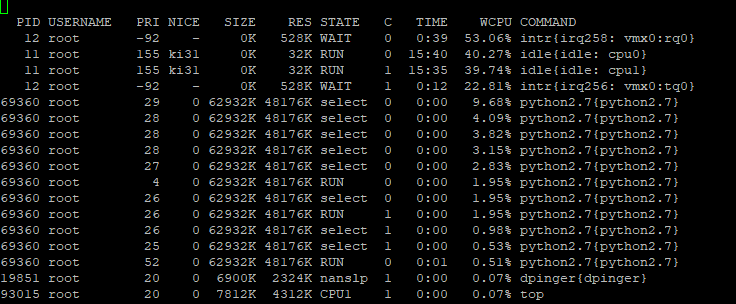

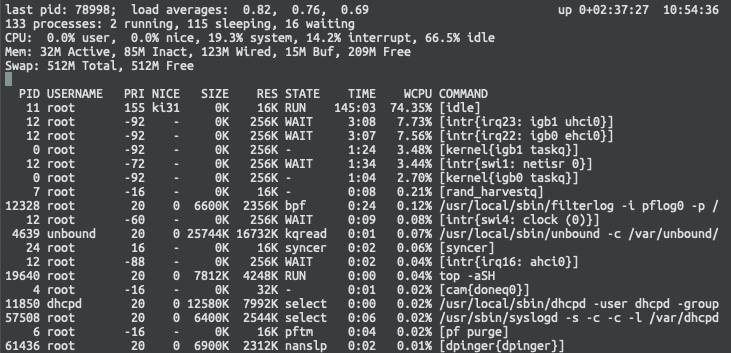

Even when running a download on a server in the LAN and checking top -S -H, I get this result:

-

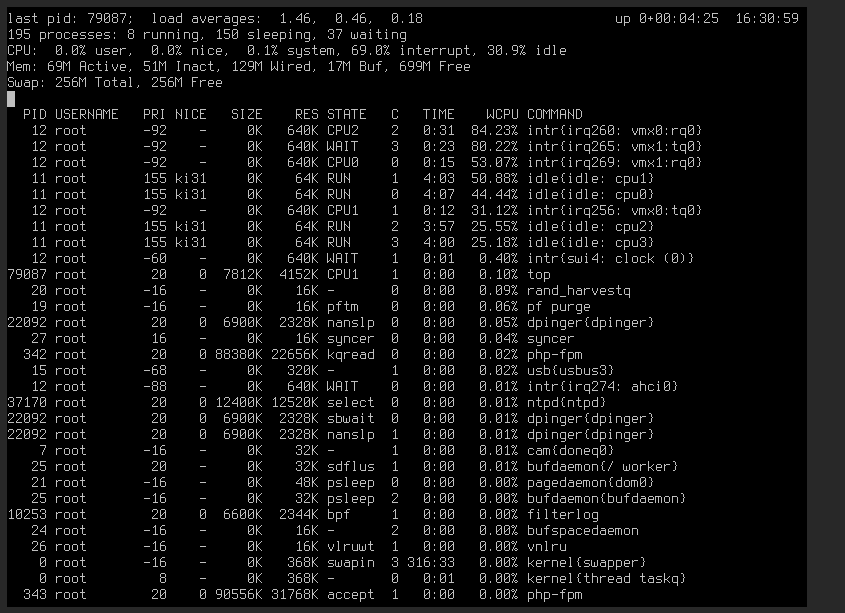

You are seeing load on all CPUs there and none is at 100% so it's not CPU limited at that point.

-

@stephenw10 I had already increased it to 4 cores running at 4 GHz before. Right now I don't know what to do at all :( I really like how easy pfSense is to work with, but I don't know what else I can investigate, because the CPU usage skyrockets at 250 Mbit/s.

-

Yes, there is something significantly wrong with your virtualisation setup there. You can pass 250Mbps with something ancient and slow like a 1st gen APU at 1GHz.

Steve

-

@stephenw10 Poor me then. I will see whether I can try some other things with this setup.

-

To me, the problem should be investigated on the VM side rather than from inside pfSense. From what I see on Google, people on Unraid tend to bridge the interface instead of using passthrough.

Personally, for example, I was never able to make pfSense work reliably under VirtualBox and had to move the VM to QEMU/KVM.

-

Here is a little update: I changed from pfSense to OPNsense. It is kind of the same thing, but OPNsense seems to handle the throughput much better with far lower CPU usage. Right now I am able to run in power save mode (all 8 cores at 1.4 GHz), with 4 cores assigned to the firewall, and get 250 Mbit/s without a problem. I am now using this firewall for all the network traffic in my house. So far no issues.

-

Same thing here. I'm using an Intel CPU and still see very high CPU usage.

I have a 4 port NIC, and I pass 2 ports through to pfSense: 1 port for WAN and 1 port for LAN.

I saw a comment on reddit that says:

"it sounds like you've got your WAN to one port of your Intel NIC and the LAN to the other port of your Intel NIC... I don't think that's it's intended use. Each physical NIC should be for one purpose, LAN or WAN but not both. Maybe I'm wrong on that but I've always seen Dual or Quad NICs used as all LAN ports." (reddit)

I'm wondering whether this is really a bad thing. I had OpenWrt installed before and never had this issue. Or maybe you guys have a workaround for this?

-

No that doesn't make any difference. pfSense just sees those as individual NICs.

Steve

-

OK, I found out my network card uses the igb driver, and some threads point out that igb cards sometimes need tweaking. So this is not really Unraid's fault.

-

@tinysnake Have you tried completely disconnecting the NIC from Unraid and binding the PCI(e) card to your VM?

See this video for configuration: https://www.youtube.com/watch?v=58tNUx7A3lM

-

@BjornStevens Yes, I followed his tutorial to pass the NICs through to pfSense.

I also tried using just 1 port for both WAN and LAN, with no performance issue, but I don't really like that setup. I will try tweaking the igb settings after work.

-

Nope, I tried every tweak I could find, with no luck whatsoever.

I found a weird thing: the intr processes for igb0 and igb1 are listed with ehci and uhci? As far as I know, those are USB things, not PCIe things?

-

They are sharing the irq with those USB controllers, which is unusual but probably not an issue.

They don't appear to be using MSI/X, did you disable that? They would normally be on their own, much higher, IRQs.

Steve
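
The "much higher IRQs" hint can be turned into a quick check. The sketch below classifies a vmstat -i line by its IRQ number. It rests on an assumption: that on the FreeBSD releases discussed here, MSI/MSI-X vectors are numbered from 256 upward while legacy interrupts use lower numbers, which matches the irq264+ entries for igb and ix in the sample output earlier in the thread. The threshold and the name `irq_kind` are illustrative, not taken from any pfSense tool.

```python
import re

# Assumption: MSI/MSI-X vectors start at IRQ 256 on the FreeBSD versions in
# this thread; lower numbers are legacy (possibly shared) interrupts.
FIRST_MSI_IRQ = 256


def irq_kind(line):
    """Classify one `vmstat -i` line as 'msi', 'legacy', or None."""
    match = re.match(r"\s*irq(\d+):", line)
    if match is None:
        return None  # e.g. "cpu0:timer" lines carry no IRQ number
    return "msi" if int(match.group(1)) >= FIRST_MSI_IRQ else "legacy"


if __name__ == "__main__":
    for line in (
        "irq264: igb0:que 0 68630 1",
        "irq16: sdhci_pci0 536 0",
        "cpu0:timer 68688188 1001",
    ):
        print(irq_kind(line), "<-", line)
```

If the igb lines come back as legacy, the NIC is most likely not using MSI-X, which fits the shared-IRQ listing described above.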

-

@stephenw10 Yes, I disabled MSI/X. Like I said, I tried every possible combination of fine tuning and the problem is still there. I even bought another card, and more problems popped up. I think it's time for me to give up on pfSense :(

-

You shouldn't need any tweaks to igb really. I would remove all that and recheck.

Just how high a CPU usage are you seeing? Under what traffic conditions?

Steve