How pfSense utilize multicore processors and multi-CPU systems ?

-

pfSense is not single threaded. pf is no longer single threaded, so there are certainly advantages to using multiple CPU cores.

Some things are still single threaded. OpenVPN and PPPoE are two we most commonly see. Some NIC drivers cannot use more than one queue but most now do.

There's no significant difference between multiple CPUs and multiple cores in a single CPU as far as I know.

Steve

-

@stephenw10 said in How pfSense utilize multicore processors and multi-CPU systems ?:

Some NIC drivers cannot use more than one queue but most now do.

Where can I find a list of NICs that are able to use multiple threads on FreeBSD?

-

Well ....

Stay away from Realtek

Prefer Intel

and you're good.

-

@Gertjan said in How pfSense utilize multicore processors and multi-CPU systems ?:

Well ....

Stay away from Realtek

Prefer Intel

and you're good.

Thank you for the advice!

Are you sure about Intel? Even in the official pfSense documentation, the NIC troubleshooting pages list at least 2 issues linked to Broadcom and 2 issues linked to Intel, and none for other NICs.

From a statistical point of view, that may not be a good result.

A search on this forum also suggests that many issues are linked to Intel. Of course, it may be that a lot of users prefer Intel NICs, and some of them have issues...

-

@stephenw10 said in How pfSense utilize multicore processors and multi-CPU systems ?:

pfSense is not single threaded. pf is no longer single threaded, so there are certainly advantages to using multiple CPU cores.

Some things are still single threaded. OpenVPN and PPPoE are two we most commonly see. Some NIC drivers cannot use more than one queue but most now do.

There's no significant difference between multiple CPUs and multiple cores in a single CPU as far as I know.

Do you mean "no significant difference" as far as the FreeBSD CPU-related kernel drivers that handle application threads are concerned?

-

@Sergei_Shablovsky said in How pfSense utilize multicore processors and multi-CPU systems ?:

@stephenw10 said in How pfSense utilize multicore processors and multi-CPU systems ?:

Some NIC drivers cannot use more than one queue but most now do.

Where can I find a list of NICs that are able to use multiple threads on FreeBSD?

The FreeBSD web pages would be a good place to start.

A lot of the drivers are provided by the chip manufacturers; igb, for example, is written by Intel.

https://www.freebsd.org/cgi/man.cgi?query=igb&sektion=4&manpath=freebsd-release-ports

https://www.freebsd.org/releases/11.2R/hardware.html#ethernet

-

@Sergei_Shablovsky said in How pfSense utilize multicore processors and multi-CPU systems ?:

Are You sure about intel ?

Very sure. Use Intel based NICs if you want the least likelihood of seeing issues.

Steve

-

@stephenw10 said in How pfSense utilize multicore processors and multi-CPU systems ?:

Steve

Appreciate Your help, Steve! :)

-

Now that FreeBSD and pfSense have had several major updates, it's time to return to this question.

In FreeBSD 9-11 a separate process was created for each card (for example, for Intel cards with 2 Ethernet ports):

intr{irq273: igb1:que}

intr{irq292: igb3:que}

...and so on...

And because FreeBSD has (for a long time, by the way!) not been able to parallelize PPPoE traffic across several threads, on FreeBSD 9-11 these processes were spread across several cores using cpuset. This worked reasonably well until FreeBSD 12 came along.

Now on FreeBSD 12 all the processes are grouped together:

kernel{if_io_tqg_0}

kernel{if_io_tqg_1}

kernel{if_io_tqg_2}

kernel{if_io_tqg_3}

...and so on...

And it looks like there is no way to assign each card to a separate core.

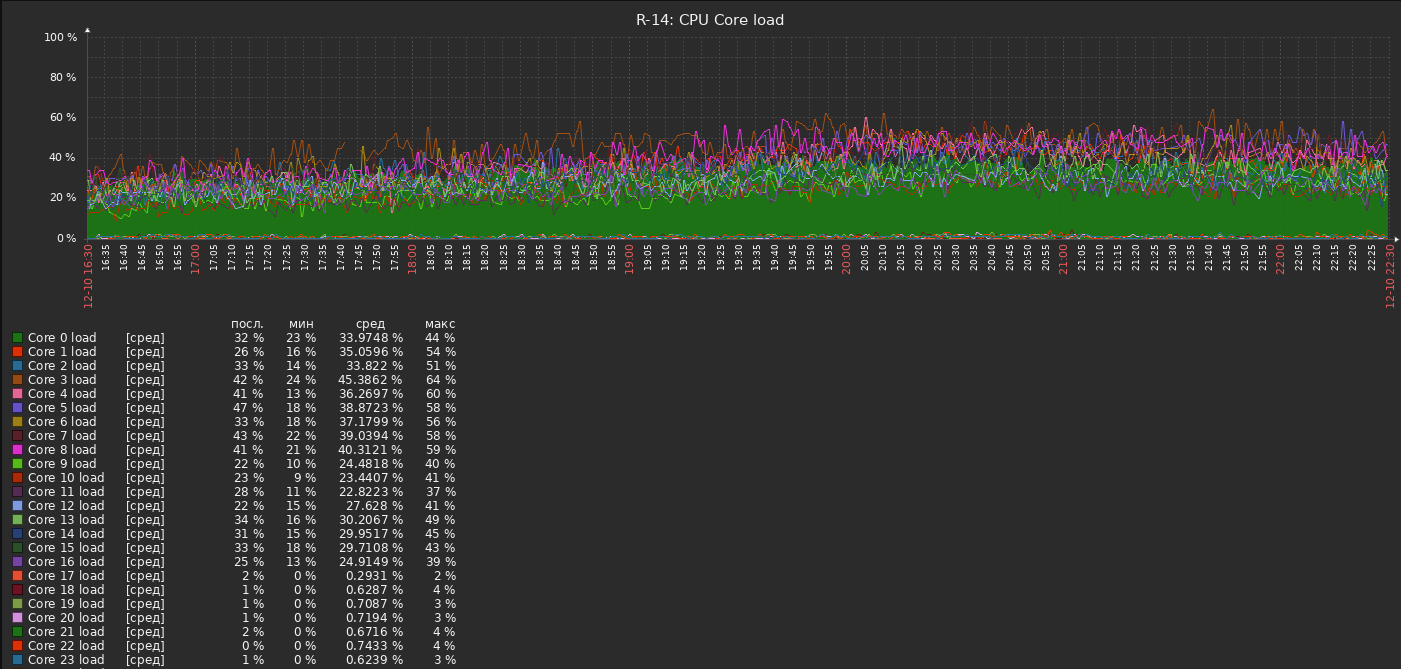

As a result, the first core is 75-80% loaded on average, and up to 100% at peak traffic.
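One way to check whether the load really lands on a single queue is to compare per-queue interrupt counts. Below is a minimal sketch, assuming `vmstat -i`-style lines on stdin where the interrupt name follows the irq label and the total count is the second-to-last field. The function name `queue_share` is made up for illustration, and the exact queue naming varies by driver and FreeBSD version.

```shell
#!/bin/sh
# Print each queue of one NIC with its share of the total interrupts of that NIC.
# Reads `vmstat -i`-style lines on stdin; $1 is the interface name (e.g. igb0).
# Hypothetical helper, not part of FreeBSD or pfSense.
queue_share() {
    awk -v nic="$1" '
        $2 ~ ("^" nic ":") {
            # The total count is the second-to-last field; the queue name is
            # everything between the irq label and that count.
            name = $2
            for (i = 3; i < NF - 1; i++) name = name " " $i
            count[name] = $(NF - 1)
            sum += $(NF - 1)
        }
        END {
            # Rounded integer percentage per queue.
            for (n in count)
                printf "%s %d%%\n", n, (sum ? 100 * count[n] / sum + 0.5 : 0)
        }
    ' | sort
}
```

On a live system this would be used as `vmstat -i | queue_share igb0`. If one queue holds nearly all of the interrupts, the traffic is effectively being handled by one core.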

Some people suggest tuning the iflib settings (sometimes in conjunction with switching hyper-threading OFF).

In loader:

net.isr.maxthreads="1024" # Use at most this many CPUs for netisr processing

net.isr.bindthreads="1" # Bind netisr threads to CPUs.

In sysctl:

net.isr.dispatch=deferred # direct / hybrid / deferred // Interrupt handling via multiple CPUs, but with context switch

Or

dev.igb.0.iflib.tx_abdicate=1

dev.igb.0.iflib.separate_txrx=1

So the question is still: how to effectively manage the load on multi-core, multi-CPU systems?

Especially since the problems with the powerd and est drivers still exist for ALL Intel CPUs (see this thread about SpeedStep and TurboBoost working together in FreeBSD: https://forum.netgate.com/topic/112201/issue-with-intel-speedstep-settings).
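For the netisr settings quoted above, a misspelled policy name is easy to introduce when copying tunables around. Below is a minimal sketch of a check that only prints the `net.isr.dispatch` line when the policy is one of the three names listed in the comment above (direct, hybrid, deferred). The function name `netisr_dispatch_line` is hypothetical.

```shell
#!/bin/sh
# Print a sysctl assignment for net.isr.dispatch after checking the policy name.
# Hypothetical helper; it only formats text and changes nothing on the system.
netisr_dispatch_line() {
    case "$1" in
        direct|hybrid|deferred)
            printf 'net.isr.dispatch=%s\n' "$1" ;;
        *)
            printf 'unknown dispatch policy: %s\n' "$1" >&2
            return 1 ;;
    esac
}
```

For example, `netisr_dispatch_line deferred` prints the line to add as a system tunable. After applying it, `sysctl net.isr` and `netstat -Q` on FreeBSD should show which netisr configuration is actually active.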

-

Also, for your attention, this post about FreeBSD network optimization and tuning: https://calomel.org/freebsd_network_tuning.html

-

And one more example of how to manually bind processes to specific CPU cores:

# basedir must point at the directory that holds the mapping file
# each mapping entry has the form iface:queue:cpu
for l in $(cat ${basedir}/ix_cpu_16core_2nic); do
    if [ -n "$l" ]; then
        iface=$(echo "$l" | cut -f 1 -d ":")
        queue=$(echo "$l" | cut -f 2 -d ":")
        cpu=$(echo "$l" | cut -f 3 -d ":")
        # find the IRQ number of this queue in the interrupt statistics
        irq=$(vmstat -i | grep "${iface}:q${queue}" | cut -f 1 -d ":" | sed "s/irq//g")
        echo "Binding ${iface} queue #${queue} (irq ${irq}) -> CPU${cpu}"
        cpuset -l $cpu -x $irq
    fi
done

The ix_cpu_16core_2nic mapping file:

ix0:0:1 ix0:1:2 ix0:2:3 ix0:3:4 ix0:4:5 ix0:5:6 ix0:6:7 ix0:7:8
ix1:0:9 ix1:1:10 ix1:2:11 ix1:3:12 ix1:4:13 ix1:5:14 ix1:6:15 ix1:7:16

In total 8 interrupts per NIC: 1 interrupt per CPU core, with CPU 0 left for dummynet.

From here (you will need Google Translate): https://local.com.ua/forum/topic/117570-freebsd-gateway-10g/
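Before running a binding script like the one above on a production box, it may help to see what it would do. Below is a dry-run sketch under a few assumptions: the helper names `irq_for_queue` and `binding_command` are made up, the interrupt statistics are read from a saved `vmstat -i` output file instead of the live system, and the queue naming (`ix0:q0`) is taken from the script above and may differ on other driver versions. It uses `grep -w` so that queue 1 does not also match queue 10.

```shell
#!/bin/sh
# Look up the IRQ number for one NIC queue in `vmstat -i`-style text on stdin.
# $1: interface (e.g. ix0), $2: queue number (e.g. 0). Hypothetical helper.
irq_for_queue() {
    grep -w "$1:q$2" | head -n 1 | cut -f 1 -d ":" | sed 's/irq//'
}

# Print (not run) the cpuset command for one "iface:queue:cpu" mapping entry.
# $1: mapping entry, $2: file holding saved `vmstat -i` output.
binding_command() {
    iface=${1%%:*}          # text before the first colon
    rest=${1#*:}            # text after the first colon
    queue=${rest%%:*}
    cpu=${rest#*:}
    irq=$(irq_for_queue "$iface" "$queue" < "$2")
    if [ -z "$irq" ]; then
        echo "no IRQ found for ${iface} queue ${queue}" >&2
        return 1
    fi
    echo "cpuset -l ${cpu} -x ${irq}"
}
```

A possible use is `vmstat -i > /tmp/irqs.txt` followed by `binding_command ix0:0:1 /tmp/irqs.txt` for each entry, reviewing the printed commands before executing them.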

-

And finally, one more interesting thread, about binding igb(4) IRQs and dummynet to CPUs: https://dadv.livejournal.com/139366.html (use translate.google.com to read it).

In short: because of the way the igb(4) driver binds its queues (the first queue created is bound to the first core, CPU0, and the same happens for each card), PPPoE/GRE traffic in the first queue of each Intel card is tied to CPU0. Because this traffic is a heavy load, CPU0 quickly becomes overloaded, packets wait longer in the NIC buffers, and latency increases dramatically.

Another interesting point is the behavior of the FreeBSD system thread that services dummynet: manually binding dummynet to CPU0 decreased the core load from 80% to 0.1%.

The article is worth reading.
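To avoid every card putting its first queue on CPU0, the queue-to-core plan can be generated instead of typed by hand. Below is a minimal sketch that prints entries in the same `iface:queue:cpu` form as the mapping file from the earlier binding script. The function name `plan_bindings` is hypothetical, and it assumes there are enough cores for all queues (it does not wrap around).

```shell
#!/bin/sh
# Print "iface:queue:cpu" lines, giving every queue of every NIC its own core.
# $1: queues per NIC, $2: first CPU to use, remaining args: interface names.
# Hypothetical helper; starting at CPU 1 leaves CPU 0 free (e.g. for dummynet).
plan_bindings() {
    queues=$1
    cpu=$2
    shift 2
    for iface in "$@"; do
        q=0
        while [ "$q" -lt "$queues" ]; do
            echo "${iface}:${q}:${cpu}"
            q=$((q + 1))
            cpu=$((cpu + 1))
        done
    done
}
```

For example, `plan_bindings 8 1 ix0 ix1` produces 16 entries running from `ix0:0:1` to `ix1:7:16`, which is the layout used for the two 8-queue cards on the 16-core system mentioned earlier.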

-

Note that almost all of these links are about high-load PPPoE/PPTP/GRE traffic where 90% of the packets are ~600 bytes in size.

It would be interesting to read detailed comments from the pfSense developers, even if we are speaking about Netgate-branded hardware (SuperMicro motherboard and case, yes?), because the Intel CPUs, FreeBSD, and all the drivers are the same on your own bare metal and on Netgate hardware.

And in the near future we will only see frequencies and core counts increase and energy consumption decrease. So the proper use of multi-core CPUs in a specialized solution like pfSense, a “network packet grinder”, is still relevant.

-

What sort of increase in throughput do you see by applying that?

Were you seeing very uneven CPU core loading before applying it?

PPPoE is a special case in FreeBSD/pfSense. Only one Rx queue on a NIC will ever be used so only one core.

Steve

-

Note that I understand that dummynet was written by Luigi Rizzo as a system shaper for simulating low-quality links (with high latency, packet drops, etc.), which were more common in 2008-2010.

Nowadays ALTQ and NetGraph work better on fast 1-10-100G links.

I am not talking specifically about dummynet or PPPoE/GRE, but more about how to load multi-CPU systems effectively. For firewalls, a system with 2-4 Intel CPUs (E or X server series) and independent RAM banks on each CPU IS MORE EFFECTIVE THAN a system based on 1 high-frequency CPU.

Effective here means the ability to fine-tune pfSense (FreeBSD) for professional use cases, for example:

- in a small ISP with a heavy load of PPPoE/GRE traffic;

- in mid-sized company networks with a lot of small-packet traffic (~500-800 bytes);

- in broadcasting services/platforms oriented to mobile clients (with a lot of reconnections and small packet sizes);

- ...

The initial question in this thread means:

1. How do the processes and FreeBSD services in the pfSense bundle utilize the cores and memory in multi-CPU systems? How do they behave?

2. Once I understand the behavior of each process / system service, I can easily tune pfSense for each use case to achieve MORE BANDWIDTH and LESS LATENCY without spending another $2-3k on a new server + NICs.

From my point of view this is reasonable nowadays, when every company is trying to cut costs on a tight budget due to the economic situation on one side, while the demand for online services is rapidly increasing (due to COVID-19) on the other.

-

Hm. Looks like it is hard to find the right answer...

I need to explain the opening question of the topic a little:

Which system is better for network-related operations (i.e. firewall, load balancing, gateway, proxy, media streaming, ...):

a) 1 CPU with 4-10 cores, high frequency

b) 2-4 CPUs with 4-6 cores, mid frequency

And how do the CPU's L2 (2-56 MB) and L3 (2-57 MB) caches affect network-related operations (in cooperation with the NIC)?

-

Has anything changed here since the FreeBSD 13-based pfSense was rolled out? Better CPU utilization? Are more cores better than a higher CPU frequency? Etc...

-

@sergei_shablovsky Your question from 2/2021 is slightly flawed. The CPU package count is not relevant. Cores (threads) and frequency are relevant.

For tasks that are single threaded, frequency is what you want; for tasks that are multi threaded, you want enough threads to allow the concurrency you need. The result is a balance based on your goals. If single threaded tasks are your number one concern, you will lean to frequency at the expense of cores. However, if you have several packages and many NICs, you will lean to core count at the expense of frequency, because you will have many threads needing to execute at the same time and it is more efficient for the computer to have many threads vs having to share.

I hope that helps.

-

First of all, let me say a BIG THANKS for the brief but “all that you need” answer!

And for your patience, because I think this is one of the most frequently asked questions on this forum (and on FreeBSD.org too). :) You have my respect!

@andyrh said in How pfSense utilize multicore processors and multi-CPU systems ?:

@sergei_shablovsky Your question from 2/2021 is slightly flawed. The CPU package count is not relevant. Cores (threads) and frequency are relevant.

Because most rack servers have 2 CPU packages, this is something of a default for users who prefer their own rack-mounted hardware.

But for original Netgate-brand firewalls this may not be the default, because most of their motherboards (I remember Netgate using LANNER motherboards in early models and later switching to SuperMicro; is it still SuperMicro?) have 1 CPU package. In that case there is only one way to increase performance as your bandwidth grows: a faster processor with a larger L3 cache.

For tasks that are single threaded frequency is what you want, for tasks that are multi threaded you want enough threads to allow the concurrency you need.

So, maybe it would be a great idea for Netgate to create a reference table for users who prefer their own hardware, in which key characteristics (single/multithreaded, CPU frequency and memory requirements) are linked to the main services and to additional packages (like Snort, Suricata, etc.) that require a lot of system resources?

It would help in choosing the right NICs and server platform.

Reasonable?

The result is a balance based on your goals. If single threaded tasks are your number one concern, you will lean to frequency at the expense of cores. However if you have several packages and many NICs, you will lean to core count at the expense of frequency because you will have many threads needing to execute at the same time and it is more efficient for the computer to have many threads vs having to share.

The initial question in this topic combines two questions:

- how the FreeBSD kernel manages threads across CPU packages in multi-package systems (2, 4), given that the bus between CPU packages is involved, along with the NUMA/non-NUMA/chipkill memory configuration in the BIOS, etc.;

- how the different packages/services in pfSense depend on NIC configuration and tuning, and on the number and frequency of CPU packages;

I hope that helps.

Really, thank You.

-

What system is better for network-related operation (i.e. firewall, load balancing, gate, proxy, media stream,...):

a) 1 CPU with 4-10 cores, hi-frequency

b) 2-4 CPU with 4-6 cores, mid-frequency

A single CPU with more cores is usually better than multiple sockets with the same or fewer cores for networking gear. This is especially true when running on a hypervisor platform.

If the hypervisor hardware has multiple physical CPU sockets, see if the VM can be pinned to cores on CPU0 (or whichever CPU the bus for the physical NIC is attached to, usually CPU0).

If the physical hardware NICs are attached to the bus on CPU0 but the VM is running on CPU1 or higher, there can be issues in high-traffic situations. This is usually seen as: everything works perfectly until a certain threshold of network traffic is hit, and when that happens you may start to see packet drops/loss/retransmits.

Non-network gear is usually not affected; this mainly applies to virtualized switches/proxies/scalers/routers/etc.
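To find out which socket a physical NIC hangs off before pinning a VM, the NUMA node of the NIC can be read on the hypervisor host. Below is a minimal sketch, assuming a Linux-based hypervisor host that exposes `numa_node` under sysfs for PCI network devices. The function name `nic_numa_node` and the optional sysfs-root argument are made up for illustration.

```shell
#!/bin/sh
# Print the NUMA node a NIC is attached to, read from a Linux-style sysfs tree.
# $1: interface name, $2: sysfs root (defaults to /sys). Hypothetical helper.
nic_numa_node() {
    node_file="${2:-/sys}/class/net/$1/device/numa_node"
    if [ -r "$node_file" ]; then
        cat "$node_file"
    else
        echo "unknown"
    fi
}
```

For example, `nic_numa_node eth0` prints the node number (or -1 on hosts that report no NUMA affinity). The VM's vCPUs can then be pinned to cores that `lscpu` lists for that same NUMA node.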