VMware Workstation VMs Web Traffic Being Blocked

-

Thank you for your opinion. I feel essentially the same way; I can't nail down what is happening. Please let me know if you have any other ideas. I am going to continue troubleshooting here and there, aiming for a resolution to this weird problem, but ultimately I'll have to make a decision in the next few weeks, as that is what is left in my return policy for the appliance.

I think this goes without saying but I want to keep it if I can figure out how to get it all to play ball :). I'll keep fighting, I got time left.

If you think of anything that I can supply you for a random troubleshooting thought, please don't hesitate to ask.

I do have one other sub option which would be to purchase the direct support for $400 and see if they can pull off something we didn't. Any reason from your opinion to think that they may be able to get further than we did?

-

@johnpoz said in VMware Workstation VMs Web Traffic Being Blocked:

@bpsdtzpw said in VMware Workstation VMs Web Traffic Being Blocked:

I meant smaller actual frames being used because there just isn't enough of a required resource

What? Look up frame and mtu ;)

If I were writing a VMM, it would be important to maintain reasonable performance within spec, including I/O bandwidth, latency, compute availability, etc.

To do this, the VMM would need to apportion baremetal resources to the VMs, both statically and dynamically, depending on available resources and how VMs (and other things running on the baremetal) are using them. So, to maintain both acceptable bandwidth and acceptable latency, the VMM might send a message to a VM's (virtual) ethernet driver, asking it to send smaller-than-permissible frames. It would still work to send frames as large as the MTU, but the VMM might punish violations of its request by delaying such frames, reducing resource quantums to the offending VM, etc.

This kind of resource management might explain the preponderance of smaller-than-MTU frames that puzzled you above.

BTW, I don't know VMware internals, but I do have experience designing and implementing VMMs.

-

I could believe this is a combination of things. Changing any one of them removes it but you happen to have hit the right combo!

VMWare not playing nicely with the NIC. USB/WIFI NICs never sit well with me but I've never tried that with VMWare&Windows.

Have you actually run any MTU tests yet? Like pinging with larger packets until it fails?

We see the replies coming back from external sites much larger when pfSense is routing but they are not over 1500B. Why is VMWare dropping them?

It seems like something is breaking PMTUD so one thing to do here would be to check for the presence of the ICMP packet-too-large messages when both Cisco and pfSense are routing.
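As a rough illustration of what to look for in the captures: the PMTUD message is an ICMPv4 Destination Unreachable (type 3) with code 4, "fragmentation needed and DF set", and it carries the next-hop MTU in its header. In Wireshark the display filter `icmp.type == 3 && icmp.code == 4` should isolate these. The sketch below only decodes the 8-byte ICMP header; the function name and the sample bytes (including the MTU of 1400) are made up for illustration and are not taken from this thread's captures.

```python
import struct

ICMP_DEST_UNREACHABLE = 3
ICMP_FRAG_NEEDED = 4  # "fragmentation needed and DF set" (RFC 1191)


def parse_frag_needed(icmp: bytes):
    """Return the next-hop MTU if this ICMPv4 message is type 3 / code 4, else None."""
    if len(icmp) < 8:
        return None  # too short to hold an ICMP header
    icmp_type, code, _checksum, _unused, next_hop_mtu = struct.unpack("!BBHHH", icmp[:8])
    if icmp_type == ICMP_DEST_UNREACHABLE and code == ICMP_FRAG_NEEDED:
        return next_hop_mtu
    return None


# Hand-built sample header: type 3, code 4, checksum left at 0 (not validated here),
# next-hop MTU 1400 (hypothetical value).
sample = struct.pack("!BBHHH", 3, 4, 0, 0, 1400)
print(parse_frag_needed(sample))
```

If no such messages show up on either side while large replies are being dropped, that would be consistent with PMTUD being broken somewhere on the path.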

Steve

-

@stephenw10 : Checking VMware logs might help. Presumably it can be configured to shout if it's dropping packets....

Aha!

Troubleshoot lost connectivity by capturing dropped packets through the pktcap-uw utility. A packet might be dropped at a point in the network stream for many reasons, for example, a firewall rule, filtering in an IOChain and DVfilter, VLAN mismatch, physical adapter malfunction, checksum failure, and so on. You can use the pktcap-uw utility to examine where packets are dropped and the reason for the drop.

https://docs.vmware.com/en/VMware-vSphere/7.0/com.vmware.vsphere.networking.doc/GUID-84627D49-F449-4F77-B931-3C55E4A8ECA1.html

-

I'll happily perform another test. I don't actually know how to do the MTU tests such as the pinging with larger packets. I googled it earlier and messed with it for a few minutes but then stopped just because my attention got changed.

Do you have an example large ping test that you would like for me to run? If so, let me know what command and where (and if any captures) and I'll get that done ASAP.

I can do some basic watching of both scenarios, looking for the ICMP packet-too-large messages you're talking about.

@bpsdtzpw said in VMware Workstation VMs Web Traffic Being Blocked:

pktcap-uw utility

I looked at this quick and this appears to be tied to vsphere esxi hosts vs my lowly VMware Workstation :)

-

@bpsdtzpw said in VMware Workstation VMs Web Traffic Being Blocked:

would be important to maintain reasonable performance within spec, including I/O bandwidth, latency

That wouldn't make for smaller or larger frames - or change the mtu of the network you're connected to.. You understand that creating smaller packets would cause more work.. Trying to use a larger frame than your network was set to allow would again cause more work - And how would the VM software change the mtu of the switch port it's connected to, etc..

-

@dfinjr said in VMware Workstation VMs Web Traffic Being Blocked:

I don't actually know how to do the MTU tests such as the pinging with larger packets.

not good to hear that the "calculation" with the USB NIC did not work..., but it is also a step forward and now there is a test USB NIC as well :)

although this doesn't mean anything, because the USB NIC also is a strange animal, especially on hypervisors.

(what's for sure is that I've never seen this before on VMware products, but surely this brings the problem, because the VB works)

Steve is right about this:

- so the wifi has modified the MTU, which is a typical behavior, because of its own high MTU..., just think what happens when you want to get on the wire from wifi...

For the PING test, don't overthink it, just use the command with switches -f (don't fragment) and -l (lowercase L, payload size)

like:

ping google.com -f -l 1492

(do not test with 1492)
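The usual way to run this test is to step the payload size up or down until the ping stops getting through with Don't Fragment set; the largest payload that passes, plus 28 bytes (20-byte IPv4 header and 8-byte ICMP header), is the usable IP MTU of the path. A minimal sketch of that search follows. It assumes Windows `ping` (`-f`, `-l` as a lowercase L, `-n`); the helper names are hypothetical, and the probe is a stand-in that simulates a path MTU of 1400 instead of sending real pings.

```python
from typing import Callable, List

IP_ICMP_OVERHEAD = 28  # 20-byte IPv4 header + 8-byte ICMP header


def windows_ping_command(host: str, payload: int) -> List[str]:
    """Build a Windows ping call: -f sets Don't Fragment, -l sets payload size, -n 1 sends one echo."""
    return ["ping", host, "-f", "-l", str(payload), "-n", "1"]


def largest_passing_payload(probe: Callable[[int], bool], low: int = 0, high: int = 1472) -> int:
    """Binary-search the largest payload for which probe(payload) is True.

    Assumes every size up to some limit passes and every size above it fails.
    Returns -1 if even the smallest size fails.
    """
    if not probe(low):
        return -1
    while low < high:
        mid = (low + high + 1) // 2  # round up so the loop always makes progress
        if probe(mid):
            low = mid
        else:
            high = mid - 1
    return low


def fake_probe(payload: int) -> bool:
    """Stand-in for a real ping: pretends the path MTU is 1400 (hypothetical value)."""
    return payload + IP_ICMP_OVERHEAD <= 1400


best = largest_passing_payload(fake_probe)
print("largest payload:", best, "-> path MTU:", best + IP_ICMP_OVERHEAD)
print(" ".join(windows_ping_command("google.com", best)))
```

On a clean 1500-byte path the largest payload that passes should be 1472, which is why 1472 is the default upper bound here. A real probe would run the generated command and check whether a reply came back; that part is left out because it depends on the local environment.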

+++edit:

16.2.3 has been out for two days

one more thing, can you possibly run WS16 on Linux?

-

@bpsdtzpw said in VMware Workstation VMs Web Traffic Being Blocked:

If I were writing a VMM

Hi,

rather than writing a hypervisor, I suggest you read the thread carefully from the very beginning, it's not a case of I/O and performance...

BTW: and it would cause chaos in the world of networking if the MTU was adapted to the load of a CPU

@bPsdTZpW "This kind of resource management might explain the preponderance of smaller-than-MTU frames that puzzled you above."

you see that you misunderstand, because the problem is rather this MTU 1767 and/or 1753,

in case of ASA 1434 - let's face it none of them are good -

-

Good morning everyone,

I have some testing to get caught up on (obviously :)) and will get to it. In the meantime, I found these articles while stumbling around googling the problem generically.

Good MTU article (might be elementary for some of you on the forum already but I found this explanation helpful):

https://www.imperva.com/blog/mtu-mss-explained/

Then found this article from the Netgate forum that feels possibly similar in issue:

https://forum.netgate.com/topic/50886/mtu-and-mss

Is it possible that the Cisco appliance is simply doing mss clamping and we need to set it?

I’ll still do that testing of course but found these articles and it has me thinking.

Thanks!

-

@dfinjr said in VMware Workstation VMs Web Traffic Being Blocked:

Is it possible that the Cisco appliance is simply doing mss clamping and we need to set it?

MSS clamping would be used on an external connection, like PPPoE or something that has a lowered mtu..

There is little reason that you should ever have to do mss clamping internally.. Since everything should be using the standard of 1500.

Even if your external internet connection could only use a specific lower than 1500 mtu... It wouldn't explain why you were seeing a 1753 mtu being used internally..

Your other devices are working fine when they use a correct mtu of 1500.. Why you continue to look to something to handle your VM using the wrong mtu makes no sense.

Why don't you open up a case with vmware on why you're seeing the wrong mtu..

-

Yeah I wouldn't expect to need MSS clamping but if the Cisco is doing it that would explain why traffic can pass. And why it comes back with the unexpected size (1434B)

-

Since you say it works with virtual box, and none of your devices are having issues - and when you just test from the host it is using the correct mtu.

Have you thought of just uninstalling workstation and starting from scratch? Use a new box or wipe the host box as well..

I can not reproduce this problem on a clean install - everything works fine normal mtu.

You would think if this is some vmware generic sort of issue - that everyone would be screaming and there would be info all over the internet. Something specific to your setup it seems.

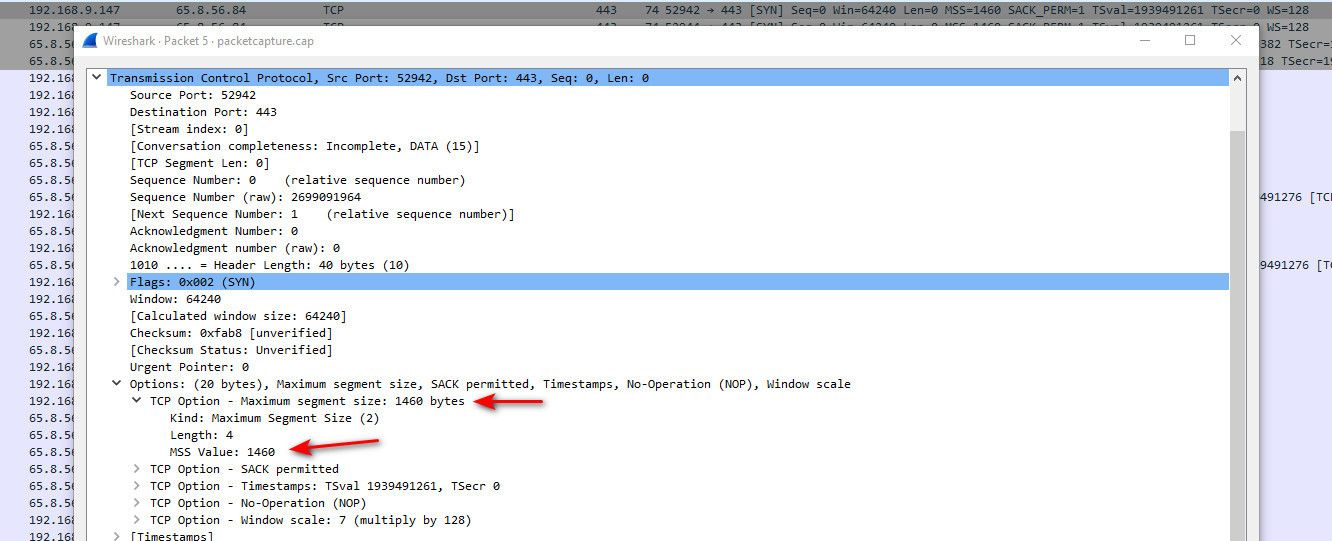

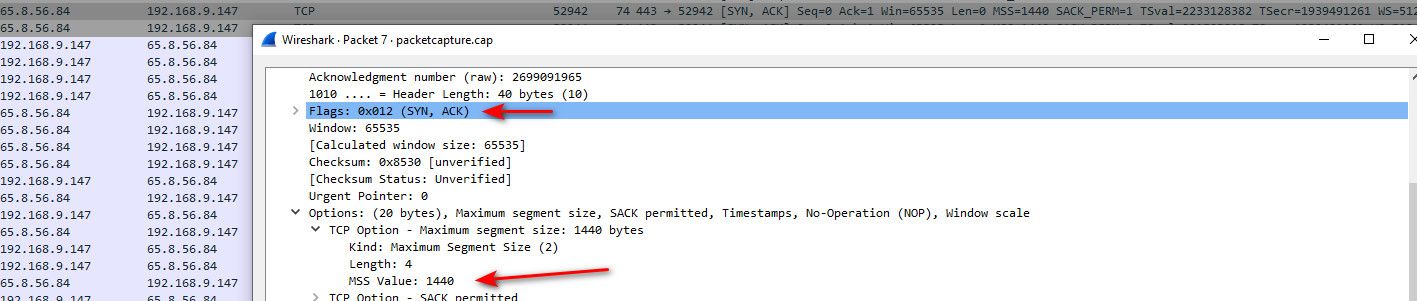

I just updated to 16.2.3 and still working as it should.. Out of curiosity, when you start your conversation with some website.. Look at your sniff on the SYN packet... What does it show you for the mss?

Then in the syn ack what do you see?
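For anyone following along, the MSS lives in the TCP options of the SYN and SYN-ACK (a display filter such as `tcp.flags.syn == 1` narrows a capture down to those packets). As a sketch of how that field is laid out, the snippet below walks a raw options block and pulls out the MSS, window scale and SACK-permitted options. The function name is hypothetical and the sample bytes are hand-built for illustration, not copied from a capture.

```python
def parse_tcp_options(options: bytes) -> dict:
    """Walk the TCP option list and return the MSS, window scale and SACK-permitted options."""
    found = {}
    i = 0
    while i < len(options):
        kind = options[i]
        if kind == 0:  # End of Option List
            break
        if kind == 1:  # NOP: single padding byte with no length field
            i += 1
            continue
        if i + 1 >= len(options):
            break  # truncated option
        length = options[i + 1]
        if length < 2 or i + length > len(options):
            break  # malformed option
        value = options[i + 2 : i + length]
        if kind == 2 and length == 4:  # Maximum Segment Size
            found["mss"] = int.from_bytes(value, "big")
        elif kind == 3 and length == 3:  # Window Scale (shift count)
            found["window_scale"] = value[0]
        elif kind == 4 and length == 2:  # SACK Permitted
            found["sack_permitted"] = True
        i += length
    return found


# Hand-built 12-byte option block: MSS 1380, NOP, NOP, SACK permitted, NOP, window scale 9.
sample = bytes.fromhex("020405640101040201030309")
print(parse_tcp_options(sample))
```

Comparing the MSS in the SYN the VM sends with the MSS in the SYN-ACK that comes back, on both routers, shows whether something in the path is rewriting it.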

-

Mmm, the remote host replies with 1380 when the Cisco is routing:

Frame 6833: 66 bytes on wire (528 bits), 66 bytes captured (528 bits) on interface \Device\NPF_{3B5E8E58-9711-4569-8E92-7272D1125BC5}, id 0
Ethernet II, Src: Cisco_55:3d:55 (00:78:88:55:3d:55), Dst: VMware_f9:11:bd (00:0c:29:f9:11:bd)
Internet Protocol Version 4, Src: 151.101.2.219, Dst: 172.16.0.202
Transmission Control Protocol, Src Port: 443, Dst Port: 49062, Seq: 0, Ack: 1, Len: 0
    Source Port: 443
    Destination Port: 49062
    [Stream index: 17]
    [TCP Segment Len: 0]
    Sequence number: 0 (relative sequence number)
    Sequence number (raw): 2928660168
    [Next sequence number: 1 (relative sequence number)]
    Acknowledgment number: 1 (relative ack number)
    Acknowledgment number (raw): 2848488699
    1000 .... = Header Length: 32 bytes (8)
    Flags: 0x012 (SYN, ACK)
    Window size value: 65535
    [Calculated window size: 65535]
    Checksum: 0xc2b6 [unverified]
    [Checksum Status: Unverified]
    Urgent pointer: 0
    Options: (12 bytes), Maximum segment size, No-Operation (NOP), No-Operation (NOP), SACK permitted, No-Operation (NOP), Window scale
        TCP Option - Maximum segment size: 1380 bytes
        TCP Option - No-Operation (NOP)
        TCP Option - No-Operation (NOP)
        TCP Option - SACK permitted
        TCP Option - No-Operation (NOP)
        TCP Option - Window scale: 9 (multiply by 512)
    [SEQ/ACK analysis]
    [Timestamps]

Hence the 1434B packets.

Yeah double check there's no MSS clamping in the Cisco config.

-

A shot in the dark: check these VMware Workstation settings:

- You can use advanced virtual network adapter settings to limit the bandwidth, specify the acceptable packet loss percentage, and create network latency for incoming and outgoing data transfers for a virtual machine.

https://docs.vmware.com/en/VMware-Workstation-Pro/16.0/com.vmware.ws.using.doc/GUID-7BFFA8B3-C134-4801-A0AD-3DA53BBAC5CA.html

-

Hello everyone, my day finally freed up a bit for me to do some testing, which I am going to conduct now to see what it looks like. I'll have results shortly.

-

Ok so a few quick details. The system I am running WS16 on isn't one I am allowed to do 100% what I want with. That would be nice and all, but it is a corporate asset and I don't have a ton of options like wiping it out and putting Linux on it; I have to leave it on Windows. Additionally, I could wipe WS16 and start again, possibly just accepting the added time to bring the VMs back in? Or did you mean to start fresh across the board?

Either case, I'll keep that as a last resort at the moment. I'll do the ping test first, see what that yields both from Cisco and from pfsense. I'll do some base packet tests as well to see if I can narrow down some more specifics around what I am seeing around the MSS values.

I did check the Cisco config from a full backup and I see no mention of MSS anywhere in it, which makes me think that it isn't there unless it is a part of something else in the config.

-

@dfinjr said in VMware Workstation VMs Web Traffic Being Blocked:

https://www.imperva.com/blog/mtu-mss-explained/

Just because I wanted to see what would happen. I turned on MSS clamping for the WAN and traffic started passing just fine to the VMs.

Cisco had to have been doing this. For giggles I just started with 1434 for the MSS setting on the WAN and the traffic is passing. Doing some other tests to see what that did...
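To spell out why clamping on the router changes the size of the replies: each side limits the segments it sends to the MSS it received from the other side, so a router that lowers the MSS in passing SYN and SYN-ACK packets shrinks traffic in both directions without either endpoint changing its own settings. The sketch below models only that rule. The names are hypothetical, the values are illustrative, and it ignores the sender's own MTU limit and any TCP options that take up further space.

```python
from typing import NamedTuple, Optional


class SegmentLimits(NamedTuple):
    client_to_server: int  # largest TCP payload the client will send
    server_to_client: int  # largest TCP payload the server will send


def clamp_mss(advertised: int, clamp_to: Optional[int]) -> int:
    """What a clamping router does to the MSS option: lower it if it exceeds the clamp."""
    return advertised if clamp_to is None else min(advertised, clamp_to)


def segment_limits(client_mss: int, server_mss: int, clamp_to: Optional[int] = None) -> SegmentLimits:
    """Each sender caps its segments at the MSS it received from the peer, after any clamping."""
    seen_by_server = clamp_mss(client_mss, clamp_to)  # MSS in the SYN as the server receives it
    seen_by_client = clamp_mss(server_mss, clamp_to)  # MSS in the SYN-ACK as the client receives it
    return SegmentLimits(client_to_server=seen_by_client, server_to_client=seen_by_server)


print(segment_limits(1460, 1460))                 # no clamping on the path
print(segment_limits(1460, 1460, clamp_to=1380))  # router clamps both directions to 1380
```

This is only a model of the negotiation, not of how any particular firewall implements the setting, so the number typed into a given product's MSS field may not be the value that ends up in the packet.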

-

Get this! Remember that firewall fail I was seeing out of my DMZ over to my VMs (port 52311)? I was still seeing failures there so I figured "why not" and applied 1434 everywhere else and what was looking like a failing firewall rule disappeared. The DMZ is able to register to the hosting server now no problems.

I mean I am happy with the result and all but I can't say I have a solid handle entirely around why Cisco was doing this without a single mention of it... kind of blows my mind really.

Would you guys like to see anything from any of the systems to perhaps see if things are good now?

Does 1434 make sense to use? I sort of pulled it out of the air from the Cisco packet captures...

-

An mss like 1380, giving a packet size of 1434, is commonly seen for VPN connections. Azure recommends 1350, for example.
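The arithmetic behind those numbers, as a small sketch: the MSS counts only TCP payload, so the IP packet is the MSS plus 20 bytes of IPv4 header and 20 bytes of TCP header, and the size a capture shows on the wire adds the 14-byte Ethernet header. This assumes no IP options, no TCP options on data segments (for example no timestamps), no VLAN tag, and a capture that does not include the 4-byte Ethernet FCS.

```python
ETHERNET_HEADER = 14  # dst MAC + src MAC + EtherType, no VLAN tag, FCS not counted
IPV4_HEADER = 20      # no IP options
TCP_HEADER = 20       # no TCP options on data segments


def ip_packet_size(mss: int) -> int:
    """Size of a full-sized IPv4 packet for a given MSS; this is what the MTU limits."""
    return mss + IPV4_HEADER + TCP_HEADER


def frame_size_on_wire(mss: int) -> int:
    """Size of the same packet as an Ethernet frame, as a packet capture typically reports it."""
    return ip_packet_size(mss) + ETHERNET_HEADER


for mss in (1460, 1380, 1350):
    print(f"MSS {mss}: IP packet {ip_packet_size(mss)} bytes, frame {frame_size_on_wire(mss)} bytes")
```

So an MSS of 1380 gives 1420-byte IP packets, seen as 1434 bytes in the capture, while the standard 1500-byte MTU corresponds to an MSS of 1460 and 1514-byte frames. Note that 1434 is a frame size, not an MSS.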

I would run some test pings to determine what the actual packet size is you can pass on each route. I would not expect to need that between the internal interfaces at all but certainly not as low as that.

Steve

-

@dfinjr are you running through some odd ball internet connection or a vpn? Some sort of tunnel? If not you should really be using the standard 1500, and you shouldn't have to do anything. If there is something odd out there on the internet where it or its path to it has some lower than 1500 mtu, then PMTUD (Path MTU Discovery) should figure this out and use a lower mtu.

https://en.wikipedia.org/wiki/Path_MTU_Discovery

This is also why in a syn, the size is sent.. Hey I can talk at this mss, can you? And the server you're talking to answers, etc.