Major DNS Bug 23.01 with Quad9 on SSL

-

Running the SG-1100, I have been fighting this problem for a while. I did notice the problem resolved itself once I applied all the recommended patches on 23.01 and rebooted. I have not gone in and attempted to figure out which one or combination fixes it; on an 1100 that is just too slow.

Curious to know if this solution helps anyone else.

-

@defunct78 I'm running 23.01-Release on SG-5100. I'm still plagued with this issue and today I threw up my hands and reverted to port 53 and disabled python mode in both the resolver & pfBlocker.

I am now watching to see how my system responds.

-

The reverse on my side :

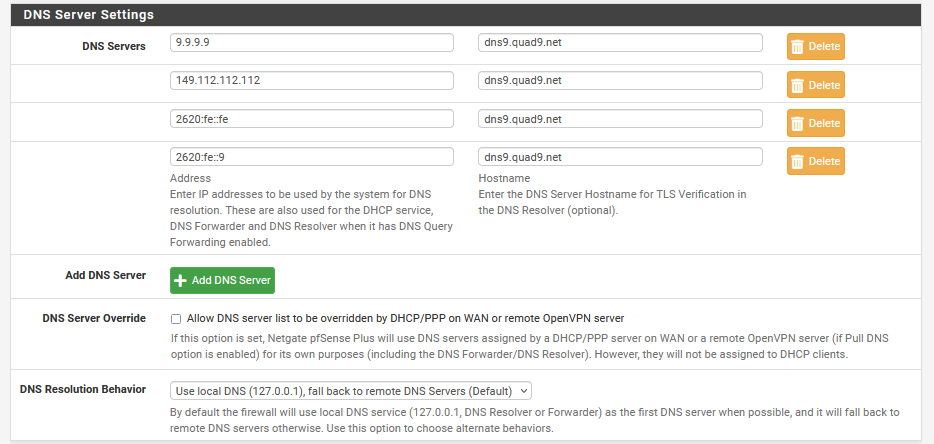

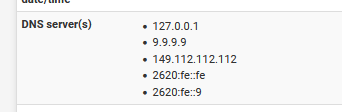

I'm using 23.01-Release on an SG-4100. I changed from default resolving to forwarding to quad9 :

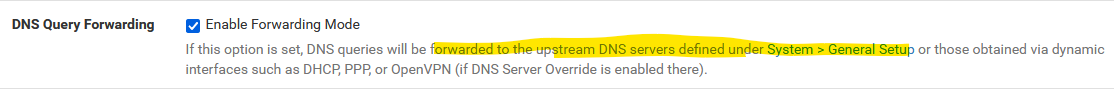

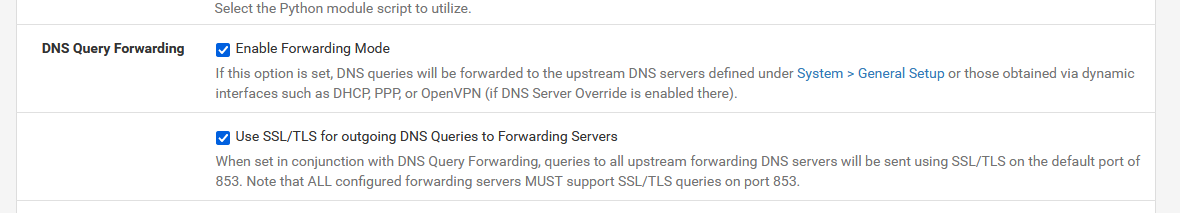

As per instructions :

and then :

So :

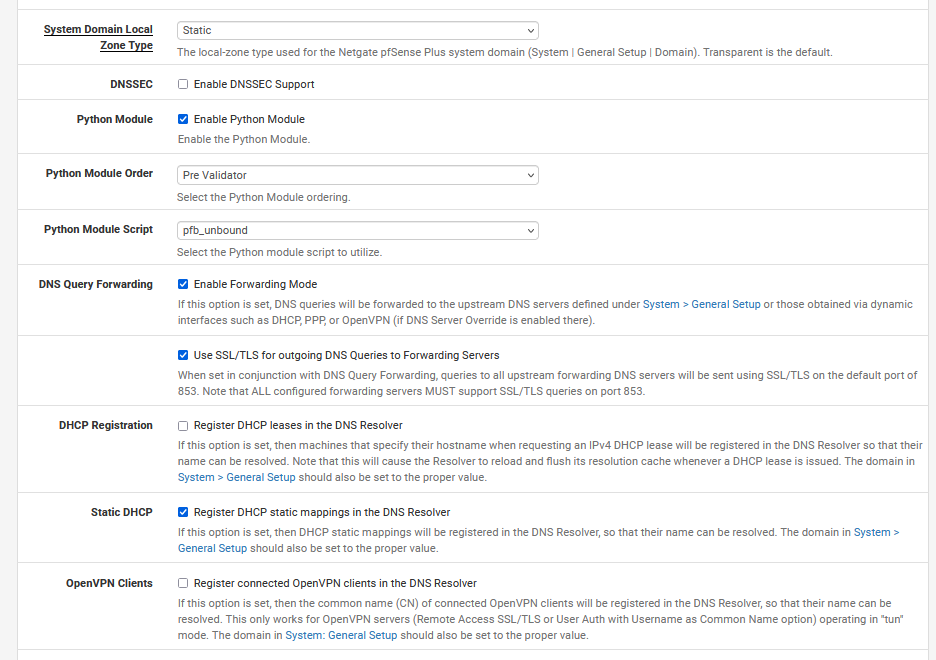

Python mode (of course)

DNSSEC : of course not

Forwarding : that's what this all about : Yes

TLS : over TLS using port 853.

[suspicious mode : ON]

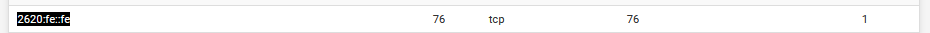

Look at the number of open TCP connections .... every one of them has an opened TLS state.

Nice .... but is it ?

These TLS connections are established by pfSense, not some LAN device.

To fire off a classic DNS request using UDP, thousands of lines of code are needed to handle the request, the answers, etc.

For TLS : that will be hundreds of thousands per connection - and I'm probably still not even close. Because now it's TCP, far more complex than UDP, and then ... TLS.

To (re)build TLS connections, entropy goes down the drain fast.

I never really found a way to measure available entropy, but what I do know : if it goes to zero, my multi-homed web server becomes slow. Note that my web (mail, bind, etc) server uses a quad-core Intel(R) Xeon(R) CPU at 3 GHz, way more power than my pfSense (a 4100).

Instead of using the word 'bug' or 'problem' I use this, which lets me see more clearly what's going on.

Note that I switched from resolving mode to forwarding mode over TLS on May 4, 2023 @08h00.

On the other hand : I was forwarding to 1.1.1.1 and friends for a week or two.

Using TLS of course. I've found no (unbound or DNS) issues whatsoever.

Probably important :

My IPv4 and IPv6 are, afaik, without issues.

[suspicious mode : OFF]

Now, let's wait for the thread subject "Major DNS Bug 23.01 with Quad9 on SSL" to happen.

-

I would argue this is a bug because the same load in 22.05 did not cause any problems even in much larger environments.

However has anyone verified that this still happens in 23.05?

We have one report saying that after upgrading the problem was solved.

Steve

-

I have been using pfSense w/ Unbound configured for DNS over TLS forwarding for years. Recently, it appears that there is a serious issue with the resolver. I get intermittent errors with the following:

2023-05-04 10:24:53.905770-05:00 unbound 3136 [3136:2] debug: comm point listen_for_rw 46 0

2023-05-04 10:24:53.905752-05:00 unbound 3136 [3136:3] debug: comm point listen_for_rw 47 0

2023-05-04 10:24:53.905719-05:00 unbound 3136 [3136:1] debug: comm point listen_for_rw 48 0

2023-05-04 10:24:53.882272-05:00 unbound 3136 [3136:1] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 10:24:53.882209-05:00 unbound 3136 [3136:3] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 10:24:53.882148-05:00 unbound 3136 [3136:1] debug: serviced send timer

Eventually, DNS queries from devices with IPv6 addresses fail, causing mobile apps to crash on the connected device. The errors happen with or without pfBlocker DNSBL active.

I can restart the Unbound service, it will work for a while then the errors return.

Also get this now with DNSBL:

servicewatchdog_cron.php: The command '/usr/local/sbin/unbound -c /var/unbound/unbound.conf' returned exit code '1', the output was '[1683215282] unbound[54733:0] debug: creating udp4 socket 192.168.246.1 53 [1683215282] unbound[54733:0] error: bind: address already in use [1683215282] unbound[54733:0] fatal error: could not open ports'

-

Are you using Quad9? Are you running 23.01? Can you test 23.05?

-

@n0m0fud Have you disabled DNSSEC and/or DNS over TLS as discussed above?

re: watchdog and DNSBL...pfBlocker updates and DHCP registrations will restart unbound so watchdog should not try to also start it. It can end up starting twice which is presumably why it is saying the address/port is already in use. Same thing with Suricata/Snort or other services that restart as part of normal behavior.
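The double-start situation described above can be guarded against by checking whether unbound is already alive before launching it. A minimal sketch, assuming the pidfile path shown in the posted unbound.conf (/var/run/unbound.pid); the function name is made up for illustration, and this is not how Service Watchdog itself works:

```shell
#!/bin/sh
# Assumption: pidfile location taken from the unbound.conf posted in this thread.
PIDFILE="${PIDFILE:-/var/run/unbound.pid}"

# Return 0 if the pidfile names a live process, 1 otherwise.
unbound_is_running() {
    [ -r "$1" ] || return 1
    pid=$(cat "$1")
    # Reject empty or non-numeric pidfile content.
    case "$pid" in
        ''|*[!0-9]*) return 1 ;;
    esac
    # Signal 0 only tests whether the process exists.
    kill -0 "$pid" 2>/dev/null
}

if unbound_is_running "$PIDFILE"; then
    echo "unbound already running; not starting a second copy"
else
    echo "unbound not running; safe to start it"
fi
```

A second copy started while the first still holds port 53 would fail with the same "address already in use" error as in the watchdog message.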

-

@stephenw10 Running 23.05 forwarding to Cloudflare. It's definitely an IPv6 issue. DNS does not resolve via DNS over TLS on IPv6 interfaces. I rebooted the router, which kills IPv6 for a time after the reboot. Everything worked fine until the IPv6 addresses activated and RADV started. Logs:

2023-05-04 11:01:43.286399-05:00 kernel - cannot forward src fe80:2::1ab4:30ff:fe03:3db, dst 2604:2d80:b208:f300:201:2eff:fe5a:6a7c, nxt 17, rcvif re1, outif re0

2023-05-04 11:01:07.981963-05:00 kernel - cannot forward src fe80:2::557:1ce2:51f6:763c, dst 2600:1402:b800:1a::6847:8fc5, nxt 6, rcvif re1, outif re0

2023-05-04 11:01:07.981902-05:00 kernel - cannot forward src fe80:2::557:1ce2:51f6:763c, dst 2600:1402:b800:1a::6847:8fd0, nxt 6, rcvif re1, outif re0

AND

2023-05-04 11:00:29.311174-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 89 0

2023-05-04 11:00:29.311008-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 92 0

2023-05-04 11:00:29.310857-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 91 0

2023-05-04 11:00:29.310710-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 85 0

2023-05-04 11:00:29.310546-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 86 0

2023-05-04 11:00:29.310400-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 87 0

2023-05-04 11:00:29.310242-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 90 0

2023-05-04 11:00:29.310094-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 84 0

2023-05-04 11:00:29.309943-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 88 0

2023-05-04 11:00:29.309776-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 81 0

2023-05-04 11:00:29.309627-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 82 0

2023-05-04 11:00:29.309463-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 83 0

2023-05-04 11:00:29.309314-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 77 0

2023-05-04 11:00:29.309160-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 80 0

2023-05-04 11:00:29.308995-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 79 0

2023-05-04 11:00:29.308839-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 78 0

2023-05-04 11:00:29.308673-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 76 0

2023-05-04 11:00:29.308519-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 73 0

2023-05-04 11:00:29.308369-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 75 0

2023-05-04 11:00:29.308191-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 67 0

2023-05-04 11:00:29.307997-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 70 0

2023-05-04 11:00:29.307829-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 61 0

2023-05-04 11:00:29.307696-05:00 unbound 52706 [52706:3] debug: comm point listen_for_rw 74 0

2023-05-04 11:00:29.307645-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 63 0

2023-05-04 11:00:29.307402-05:00 unbound 52706 [52706:1] debug: comm point listen_for_rw 69 0

2023-05-04 11:00:29.307354-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 62 0

2023-05-04 11:00:29.307164-05:00 unbound 52706 [52706:1] debug: comm point listen_for_rw 72 0

2023-05-04 11:00:29.307124-05:00 unbound 52706 [52706:2] debug: comm point listen_for_rw 66 0

2023-05-04 11:00:29.307052-05:00 unbound 52706 [52706:3] debug: comm point listen_for_rw 64 0

2023-05-04 11:00:29.306942-05:00 unbound 52706 [52706:1] debug: comm point listen_for_rw 65 0

2023-05-04 11:00:29.306894-05:00 unbound 52706 [52706:2] debug: comm point listen_for_rw 68 0

2023-05-04 11:00:29.306849-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 57 0

2023-05-04 11:00:29.306696-05:00 unbound 52706 [52706:1] debug: comm point listen_for_rw 71 0

2023-05-04 11:00:29.306664-05:00 unbound 52706 [52706:2] debug: comm point listen_for_rw 59 0

2023-05-04 11:00:29.306647-05:00 unbound 52706 [52706:3] debug: comm point listen_for_rw 60 0

2023-05-04 11:00:29.306475-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 54 0

2023-05-04 11:00:29.306241-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 53 0

2023-05-04 11:00:29.306079-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 55 0

2023-05-04 11:00:29.305913-05:00 unbound 52706 [52706:2] debug: comm point listen_for_rw 58 0

2023-05-04 11:00:29.305895-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 51 0

2023-05-04 11:00:29.305794-05:00 unbound 52706 [52706:3] debug: comm point listen_for_rw 56 0

2023-05-04 11:00:29.305412-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 52 0

2023-05-04 11:00:29.305247-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 50 0

2023-05-04 11:00:29.305075-05:00 unbound 52706 [52706:0] debug: comm point listen_for_rw 49 0

2023-05-04 11:00:29.294142-05:00 unbound 52706 [52706:0] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:00:29.294112-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip4 173.21.178.244 port 0 (len 16)

2023-05-04 11:00:29.294090-05:00 unbound 52706 [52706:0] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:00:29.294056-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip6 2604:2d80:8419:0:35e5:332b:4cf:e696 port 0 (len 28)

2023-05-04 11:00:29.294034-05:00 unbound 52706 [52706:0] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:00:29.293995-05:00 unbound 52706 [52706:0] debug: comm point start listening 89 (-1 msec)

2023-05-04 11:00:29.293989-05:00 unbound 52706 [52706:0] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:00:29.293941-05:00 unbound 52706 [52706:0] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:00:29.293899-05:00 unbound 52706 [52706:0] debug: comm point start listening 87 (-1 msec)

2023-05-04 11:00:29.293871-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip4 173.21.178.244 port 0 (len 16)

2023-05-04 11:00:29.293850-05:00 unbound 52706 [52706:0] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:00:29.293810-05:00 unbound 52706 [52706:0] debug: comm point start listening 85 (-1 msec)

2023-05-04 11:00:29.293777-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip6 2604:2d80:8419:0:35e5:332b:4cf:e696 port 0 (len 28)

Custom Unbound Options:

server:

forward-zone:

name: "."

forward-ssl-upstream: yes

forward-addr: 1.1.1.1@853

forward-addr: 1.0.0.1@853

forward-addr: 2606:4700:4700::64@853

forward-addr: 2606:4700:4700::6400@853

All IPs are pingable. The IPs are Cloudflare.

Not sure what's going on here.
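One way to see whether unbound is opening outbound connections over both address families is to count the "tcp bound to src" lines in debug output like the above. A minimal sketch, assuming the log text is fed in on stdin; the function name is made up for illustration:

```shell
#!/bin/sh
# Count outgoing TCP binds per address family in unbound debug output (stdin).
count_outgoing_by_family() {
    awk '
        /tcp bound to src ip4 / { v4++ }
        /tcp bound to src ip6 / { v6++ }
        END { printf "ip4=%d ip6=%d\n", v4 + 0, v6 + 0 }
    '
}

# Sample lines copied from the log posted above.
count_outgoing_by_family <<'EOF'
2023-05-04 11:00:29.294112-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip4 173.21.178.244 port 0 (len 16)
2023-05-04 11:00:29.294056-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip6 2604:2d80:8419:0:35e5:332b:4cf:e696 port 0 (len 28)
2023-05-04 11:00:29.293871-05:00 unbound 52706 [52706:0] debug: tcp bound to src ip4 173.21.178.244 port 0 (len 16)
EOF
```

The counts only show which family unbound tried to use, not whether the upstream answered.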

-

If you set Unbound to use v4 interfaces for Outbound traffic only does that resolve it?

-

@stephenw10 Only way to do that is manually edit and save the config file. The GUI only allows selection of physical interfaces. Not IP addresses.

-

2023-05-04 11:01:07.981902-05:00 kernel - cannot forward src fe80:2::557:1ce2:51f6:763c, dst 2600:1402:b800:1a::6847:8fd0, nxt 6, rcvif re1, outif re0

What has fe80:2::557:1ce2:51f6:763c? It shouldn't be trying to send traffic to a GUA address from LL, it's invalid.

What shows in the unbound config for interface binding(s)?
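To pick such entries out of a longer log, the "cannot forward" lines can be filtered for a link-local (fe80::/10) source paired with a destination outside that range. A rough sketch, assuming the kernel message format shown in this thread; both function names are made up for illustration, and the check only tests the fe80::/10 prefix rather than fully classifying addresses:

```shell
#!/bin/sh
# Return 0 if the address lies in fe80::/10 (first hextet fe80..febf).
is_link_local() {
    case "$(printf '%s' "$1" | tr 'A-F' 'a-f')" in
        fe[89ab][0-9a-f]:*) return 0 ;;
        *) return 1 ;;
    esac
}

# Read kernel log lines on stdin and report link-local sources
# that were headed to a non-link-local destination.
flag_link_local_forwards() {
    sed -n 's/.*cannot forward src \([^,]*\), dst \([^,]*\),.*/\1 \2/p' |
    while read -r src dst; do
        if is_link_local "$src" && ! is_link_local "$dst"; then
            echo "link-local src $src -> non-link-local dst $dst"
        fi
    done
}

# Sample line copied from the log posted in this thread.
flag_link_local_forwards <<'EOF'
2023-05-04 11:01:07.981902-05:00 kernel - cannot forward src fe80:2::557:1ce2:51f6:763c, dst 2600:1402:b800:1a::6847:8fd0, nxt 6, rcvif re1, outif re0
EOF
```

The reported source address can then be matched against the LAN devices to find out which one is sending from its link-local address.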

-

@stephenw10 And restarting the service overrides my manual changes. This used to work fine until about 2 months ago. I know it's local DNS as I can manually set the DNS on mobile devices that are showing the issue and the app failure problem goes away.

-

@jimp Here is the Unbound.conf:

##########################

Unbound Configuration

##########################

Server configuration

server:

chroot: /var/unbound

username: "unbound"

directory: "/var/unbound"

pidfile: "/var/run/unbound.pid"

use-syslog: yes

port: 53

verbosity: 4

hide-identity: yes

hide-version: yes

harden-glue: yes

do-ip4: yes

do-ip6: yes

do-udp: yes

do-tcp: yes

do-daemonize: yes

module-config: "python validator iterator"

unwanted-reply-threshold: 10000000

num-queries-per-thread: 2048

jostle-timeout: 200

infra-keep-probing: yes

infra-host-ttl: 900

infra-cache-numhosts: 20000

outgoing-num-tcp: 50

incoming-num-tcp: 50

edns-buffer-size: 4096

cache-max-ttl: 86400

cache-min-ttl: 0

harden-dnssec-stripped: yes

msg-cache-size: 512m

rrset-cache-size: 1024m

qname-minimisation: yes

num-threads: 4

msg-cache-slabs: 4

rrset-cache-slabs: 4

infra-cache-slabs: 4

key-cache-slabs: 4

outgoing-range: 4096

#so-rcvbuf: 4m

auto-trust-anchor-file: /var/unbound/root.key

prefetch: yes

prefetch-key: yes

use-caps-for-id: no

serve-expired: yes

aggressive-nsec: yes

Statistics

Unbound Statistics

statistics-interval: 0

extended-statistics: yes

statistics-cumulative: yes

TLS Configuration

tls-cert-bundle: "/etc/ssl/cert.pem"

tls-port: 853

tls-service-pem: "/var/unbound/sslcert.crt"

tls-service-key: "/var/unbound/sslcert.key"

Interface IP addresses to bind to

interface: 192.168.246.1

interface: 192.168.246.1@853

interface: 2604:2d80:b204:b00:201:2eff:fe5a:6a7c

interface: 2604:2d80:b204:b00:201:2eff:fe5a:6a7c@853

interface: 127.0.0.1

interface: 127.0.0.1@853

interface: ::1

interface: ::1@853

Outgoing interfaces to be used

outgoing-interface: 173.21.178.244

outgoing-interface: 2604:2d80:8419:0:c4a9:d993:4305:fa3e

DNS Rebinding

For DNS Rebinding prevention

private-address: 127.0.0.0/8

private-address: 10.0.0.0/8

private-address: ::ffff:a00:0/104

private-address: 172.16.0.0/12

private-address: ::ffff:ac10:0/108

private-address: 169.254.0.0/16

private-address: ::ffff:a9fe:0/112

private-address: 192.168.0.0/16

private-address: ::ffff:c0a8:0/112

private-address: fd00::/8

private-address: fe80::/10

Access lists

include: /var/unbound/access_lists.conf

Static host entries

include: /var/unbound/host_entries.conf

dhcp lease entries

include: /var/unbound/dhcpleases_entries.conf

Domain overrides

include: /var/unbound/domainoverrides.conf

Unbound custom options

server:

tls-upstream: yes

forward-zone:

name: "."

forward-ssl-upstream: yes

forward-addr: 1.1.1.1@853

forward-addr: 1.0.0.1@853

#forward-addr: 2606:4700:4700::64@853

#forward-addr: 2606:4700:4700::6400@853

#forward-addr: 149.112.112.11@853

#forward-addr: 9.9.9.11@853

#forward-addr: 2620:fe::11@853

#forward-addr: 2620:fe::fe:11@853

#forward-addr: 52.205.50.148@853

Remote Control Config

include: /var/unbound/remotecontrol.conf

Python Module

python:

python-script: pfb_unbound.py

-

@n0m0fud said in Major DNS Bug 23.01 with Quad9 on SSL:

The GUI only allows selection of physical interfaces. Not IP addresses

Unless it changed in 23.05:

Custom unbound option text is not required for forwarding.

re: cannot forward, is IPv6 working otherwise, from both pfSense and LAN devices? You can

ping -6 google.com?

-

@n0m0fud Ping works fine both from LAN device and from pfSense. Enabled the forwarding and let it run for a bit. I have to get on to other things.

Now logs show:

2023-05-04 11:44:02.155327-05:00 unbound 45552 [45552:3] debug: comm point listen_for_rw 36 0

2023-05-04 11:44:02.135144-05:00 unbound 45552 [45552:3] debug: comm point start listening 36 (-1 msec)

2023-05-04 11:44:02.135137-05:00 unbound 45552 [45552:3] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:44:02.134995-05:00 unbound 45552 [45552:3] debug: tcp bound to src ip6 2604:2d80:8419:0:c4a9:d993:4305:fa3e port 0 (len 28)

2023-05-04 11:44:02.134947-05:00 unbound 45552 [45552:3] debug: serviced send timer

2023-05-04 11:44:02.134938-05:00 unbound 45552 [45552:3] debug: cache memory msg=66072 rrset=66072 infra=7808 val=66288

2023-05-04 11:44:02.134929-05:00 unbound 45552 [45552:3] info: 0RDd mod2 rep 144.228.109.190.in-addr.arpa. PTR IN

2023-05-04 11:44:02.134924-05:00 unbound 45552 [45552:3] info: mesh_run: end 1 recursion states (1 with reply, 0 detached), 1 waiting replies, 0 recursion replies sent, 0 replies dropped, 0 states jostled out

2023-05-04 11:44:02.134918-05:00 unbound 45552 [45552:3] debug: mesh_run: iterator module exit state is module_wait_reply

2023-05-04 11:44:02.134908-05:00 unbound 45552 [45552:3] debug: dnssec status: not expected

2023-05-04 11:44:02.134904-05:00 unbound 45552 [45552:3] debug: sending to target: <.> 2606:4700:4700::64#853

2023-05-04 11:44:02.134898-05:00 unbound 45552 [45552:3] info: sending query: 144.228.109.190.in-addr.arpa. PTR IN

2023-05-04 11:44:02.134892-05:00 unbound 45552 [45552:3] debug: selrtt 376

2023-05-04 11:44:02.134883-05:00 unbound 45552 [45552:3] debug: rpz: iterator module callback: have_rpz=0

2023-05-04 11:44:02.134876-05:00 unbound 45552 [45552:3] debug: attempt to get extra 3 targets

2023-05-04 11:44:02.134871-05:00 unbound 45552 [45552:3] debug: ip4 1.1.1.1 port 853 (len 16)

2023-05-04 11:44:02.134866-05:00 unbound 45552 [45552:3] debug: ip4 1.0.0.1 port 853 (len 16)

2023-05-04 11:44:02.134861-05:00 unbound 45552 [45552:3] debug: ip6 2606:4700:4700::64 port 853 (len 28)

2023-05-04 11:44:02.134856-05:00 unbound 45552 [45552:3] debug: ip6 2606:4700:4700::6400 port 853 (len 28)

-

@n0m0fud said in Major DNS Bug 23.01 with Quad9 on SSL:

The GUI only allows selection of physical interfaces.

Hmm, interesting. I guess it shows only v6 link local as separate 'interfaces'.

-

@gertjan said in Major DNS Bug 23.01 with Quad9 on SSL:

To (re)build a TLS connections, entropy is going through the drain fast.

On Linux this can for sure be a problem, especially on VMs, etc. But I thought BSD got its entropy in a different way, and that it was unlikely for BSD to run out like Linux can?

-

Strange :

...

dhcp lease entries

include: /var/unbound/dhcpleases_entries.conf

Domain overrides

include: /var/unbound/domainoverrides.conf

Unbound custom options

server:

tls-upstream: yes

forward-zone:

name: "."

forward-ssl-upstream: yes

forward-addr: 1.1.1.1@853

forward-addr: 1.0.0.1@853

#forward-addr: 2606:4700:4700::64@853

#forward-addr: 2606:4700:4700::6400@853

#forward-addr: 149.112.112.11@853

#forward-addr: 9.9.9.11@853

#forward-addr: 2620:fe::11@853

#forward-addr: 2620:fe::fe:11@853

#forward-addr: 52.205.50.148@853

Remote Control Config

.....

When you set :

pfSense will add a "forward-zone" section with all the needed addresses :

.....

# dhcp lease entries

include: /var/unbound/dhcpleases_entries.conf

# Domain overrides

include: /var/unbound/domainoverrides.conf

# Forwarding

forward-zone:

name: "."

forward-tls-upstream: yes

forward-addr: 9.9.9.9@853#dns9.quad9.net

forward-addr: 149.112.112.112@853#dns9.quad9.net

forward-addr: 2620:fe::fe@853#dns9.quad9.net

forward-addr: 2620:fe::9@853#dns9.quad9.net

# Unbound custom options

server:

statistics-cumulative: no

###

# Remote Control Config

###

.....

And no "forward-ssl-upstream" but "forward-tls-upstream", although both are the same.

So you are forwarding without the GUI set to forwarding ?

Why would you use the custom options to achieve forwarding ?

Maybe that needed to be done in the past, but not so anymore.

The usage of

tls-upstream: yes

is also very rare.

Google knows about it - in just one place ( !! ): it's the unbound.conf doc :

tls-upstream: <yes or no> Enabled or disable whether the upstream queries use TLS only for transport. Default is no. Useful in tunneling scenarios. The TLS contains plain DNS in TCP wireformat. The other server must support this (see tls-service-key). If you enable this, also configure a tls-cert-bundle or use tls-win-cert or tls-system-cert to load CA certs, otherwise the connections cannot be authenticated. This option enables TLS for all of them, but if you do not set this you can configure TLS specifically for some forward zones with forward-tls-upstream. And also with stub-tls-upstream.

Reading this makes me think : I would stay away from it.
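For anyone wanting to check their own setup for the points above, a quick scan of the generated config can show how many forward-zone sections exist and whether the global tls-upstream option or the forward-ssl-upstream spelling is present. A minimal sketch, assuming the config lives at /var/unbound/unbound.conf as in the watchdog message earlier in the thread; the function name and the UNBOUND_CONF variable are made up for illustration:

```shell
#!/bin/sh
# Assumption: default config path as seen earlier in this thread.
CONF="${UNBOUND_CONF:-/var/unbound/unbound.conf}"

# Report forwarding-related settings found in the given config file.
# Commented-out lines (starting with #) are ignored by the anchored patterns.
check_forwarding_config() {
    conf="$1"
    zones=$(grep -c '^[[:space:]]*forward-zone:' "$conf")
    echo "forward-zone sections: $zones"
    if grep -q '^[[:space:]]*tls-upstream:[[:space:]]*yes' "$conf"; then
        echo "global tls-upstream: yes is set"
    fi
    if grep -q '^[[:space:]]*forward-ssl-upstream:' "$conf"; then
        echo "uses forward-ssl-upstream (alternate spelling of forward-tls-upstream)"
    fi
}

if [ -r "$CONF" ]; then
    check_forwarding_config "$CONF"
fi
```

More than one forward-zone section for "." would suggest that both the GUI forwarding option and custom options are defining the same zone.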

Btw : I'm forwarding to quad9 (IPv4 and IPv6) for the last week or so.

I didn't detect any issues whatsoever.

If my unbound got restarted, like this morning, that was me doing so.

-

I am working on a build of the version of Unbound we shipped with 22.05 that will run on 23.01 (and one for 23.05). If the problem goes away with this old version of Unbound, I will start bisecting to find a root cause. I just don't want to go off in the weeds chasing ghosts.

It would also be useful to know if this problem also manifests on 23.05.

Stand by

-

This issue is not unique to pfSense.

We do have a workaround:

- Stop the Unbound service

- Run elfctl -e +noaslr /usr/local/sbin/unbound

- Start the Unbound service
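The three steps can be strung together from a shell. A minimal sketch, assuming the service is stopped and started with pfSsh.php playback svc (my assumption; the post does not say how the service is stopped, and the GUI works just as well). It defaults to a dry run that only prints the commands; the run helper, the function name and the DRY_RUN variable are made up for illustration:

```shell
#!/bin/sh
# Path to the unbound binary as given in the workaround.
UNBOUND_BIN="${UNBOUND_BIN:-/usr/local/sbin/unbound}"
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 executes them.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

apply_noaslr_workaround() {
    # Assumption: stopping/starting via pfSsh.php; use the GUI if preferred.
    run pfSsh.php playback svc stop unbound &&
    # Disable ASLR for the unbound binary, as described in the workaround.
    run elfctl -e +noaslr "$UNBOUND_BIN" &&
    run pfSsh.php playback svc start unbound
}

apply_noaslr_workaround
```

Setting DRY_RUN=0 makes it execute for real. Since the flag is set on the binary itself, it would presumably need to be applied again if the unbound package is reinstalled or upgraded.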

Ref: https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=270912