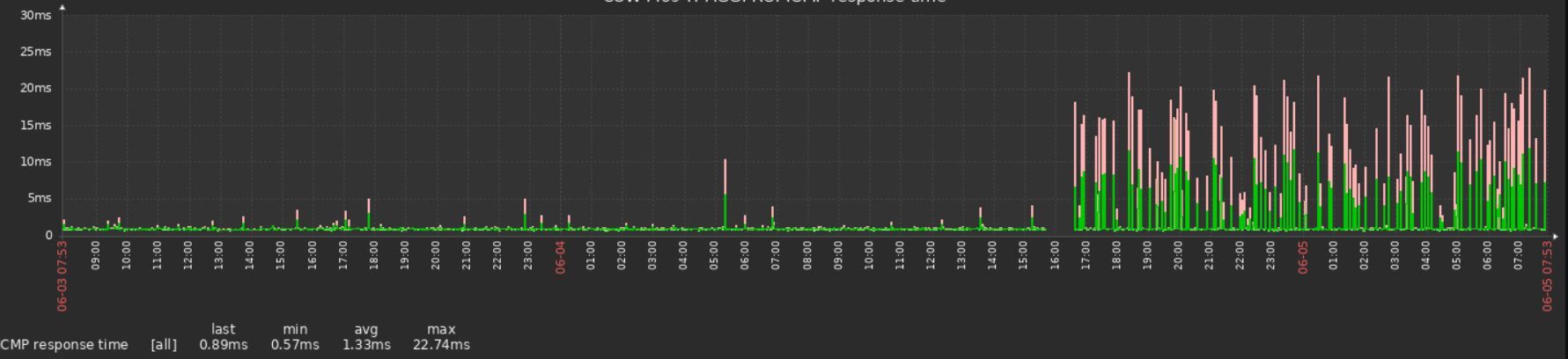

ICMP spikes after 23.05 upgrade

-

The right part looks far better than the left part.

The right part shows a ping reply time of "less than one millisecond", so that will be a "from pfSense to the upstream ISP router" test: you are testing the cable (2 feet or so?) between the pfSense WAN and your ISP router's LAN port.

That's not a good test. You should use some other, more upstream gateway, or any IP 'close to you but on the Internet'.

Some use "8.8.8.8" ..... (this works but can have nasty consequences).

Ten to 20 ms: that's what we all see, except the very lucky ones.

-

@Gertjan maybe there is a misunderstanding. This is not a ping to 8.8.8.8; it is a ping from the Zabbix monitoring tool to, e.g., a network switch on another local VLAN. The left side is before the update and the right side is after the update.

-

It's from pfSense to the local device? What are the pings graphed there actually between?

-

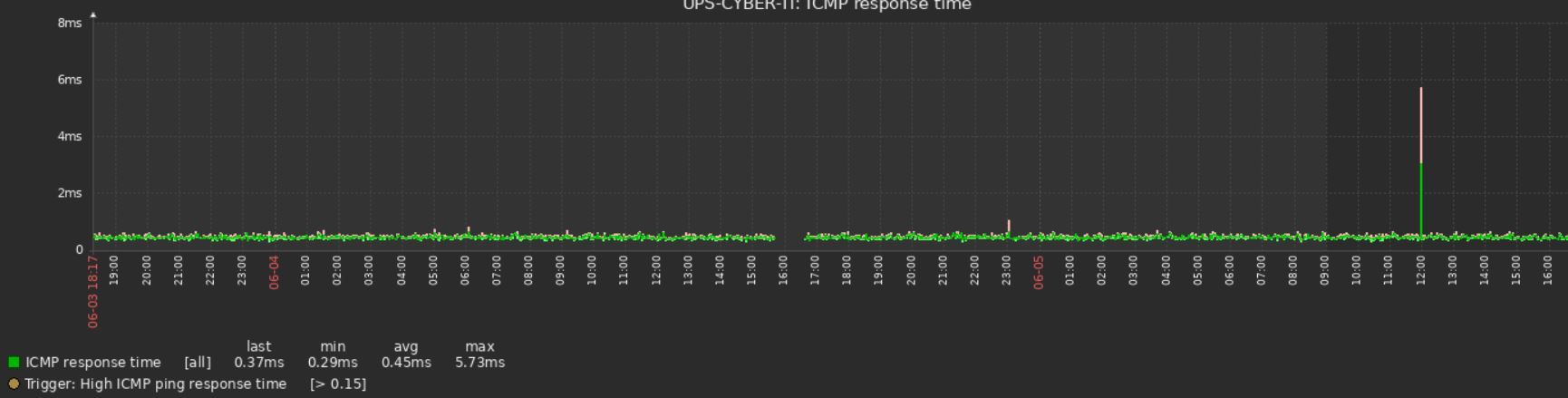

@stephenw10 Pings from Zabbix to a UPS on the same VLAN are OK, like the example in the picture.

-

So what are the higher latency pings between?

-

@stephenw10 I do not understand. Do you not see the difference between before the upgrade and after it? The difference only appears when the ping goes through the Netgate; when it doesn't, it is normal.

-

Yes I see the difference, I'm trying to understand where the pings are between that see that difference so I can consider what might have changed in the path to cause that.

-

@GeorgeCZ58 said in ICMP spikes after 23.05 upgrade:

This is not ping to 8.8.8.8, it is ping from Zabbix monitoring tool to eg network switch on another local VLAN

We all see the image.

Only you know what is pinging from where to what through what.

I (or we) assumed it's a 'from pfSense, or from behind pfSense, to somewhere in front of pfSense' test, but again, your actual setup could be anything.

Pinging some LAN or VLAN device: that's boring:

    [23.05-RELEASE][root@pfSense.local.tld]/root: ping diskstation2
    PING6(56=40+8+8 bytes) 2a01:cb19:beef:a6dc::1 --> 2a01:cb19:beef:a6dc::c2
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=0 hlim=64 time=0.319 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=1 hlim=64 time=0.286 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=2 hlim=64 time=0.240 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=3 hlim=64 time=0.689 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=4 hlim=64 time=0.286 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=5 hlim=64 time=0.231 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=6 hlim=64 time=0.214 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=7 hlim=64 time=0.258 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=8 hlim=64 time=0.219 ms
    16 bytes from 2a01:cb19:beef:a6dc::c2, icmp_seq=9 hlim=64 time=0.299 ms
    ....

This shows a ping over a one Gbit link - head to head, with a 32 port HP switch in between.

When I start to slam the diskstation2 device, a NAS, at full speed, I want it to react fast.

The ICMP packets, by nature, have less priority.

I will see spikes. I want to see spikes.

Btw: if there are other devices like smart switches on the path, they will add a random factor.

Still, I understand your question better now.

Your hardware (a 7100) should deal with everything you throw at it.

-

Yes, and I wouldn't expect to see a difference like that simply from upgrading.

Do you know if it did it in 23.01 also?

-

@stephenw10 unfortunately I didn't test 23.01. I was wary of that release, so I went through 23.01 straight to 23.05. During that time Zabbix was stopped, as it would have sent a lot of notifications because the router was missing during the upgrade.

On Sunday I will try to reboot all the switches, if production in the plant is stopped. I will come back with the result. Otherwise maybe it will be better to do a clean installation.

-

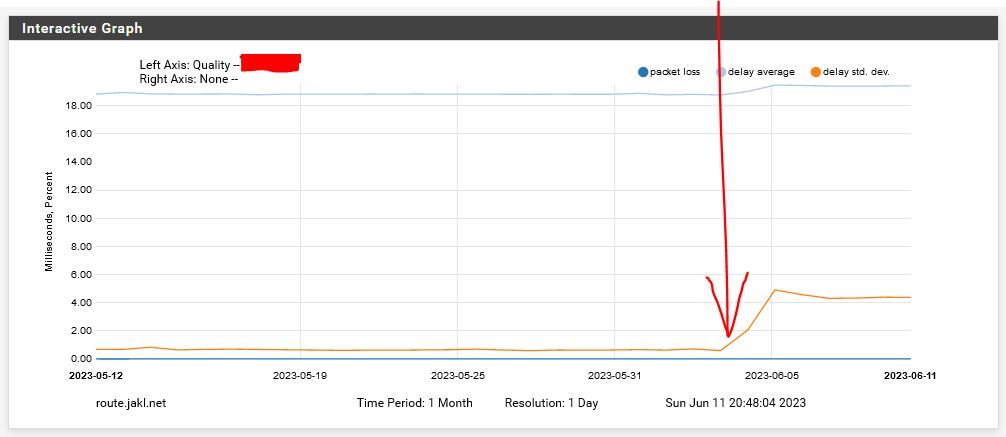

@GeorgeCZ58 so I rebooted the core switches, but nothing changed. So the issue is related to the pfSense upgrade. It is also visible in the pfSense monitoring; here is a graph of pings to 8.8.8.8 before and after the update.

Any idea how to improve the pings to get the same results as on 22.05?

-

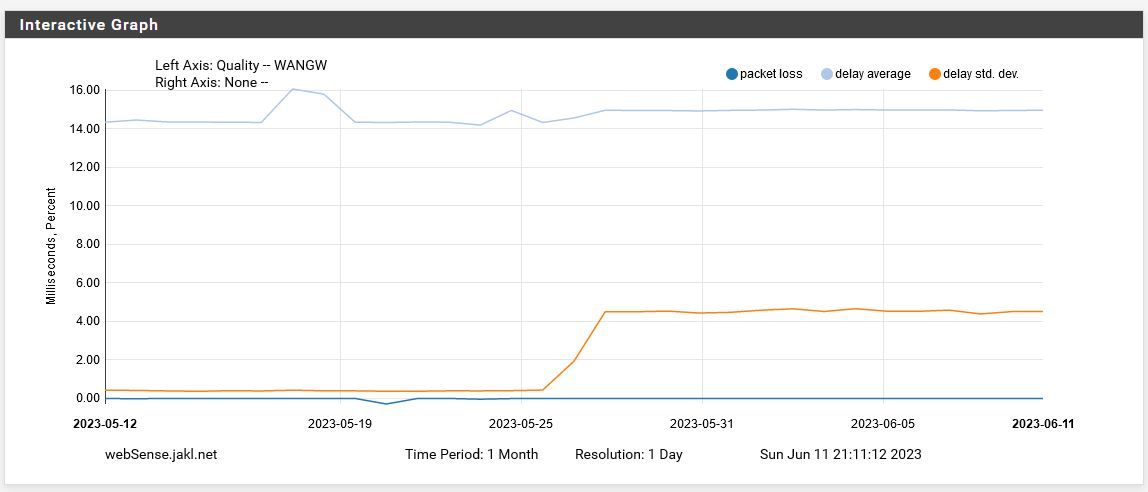

@GeorgeCZ58 I just checked a second XG7100 that I upgraded to 23.05 and it is the same:

-

@GeorgeCZ58 so that is pfsense pinging 8.8.8.8 and you're saying before it was like less than 1 ms (from your graph).. So you're in the same DC as google?

Not sure how something in pfsense could change how long it takes something to respond to a ping that is sent by pfsense - if you were pinging through pfsense - ok maybe..

But if I send a ping from device A to device B.. How would device A have some effect on the response time? The only thing I could think of that could have an effect is the payload.. But pfsense normally sends zero sized pings.. Or it used to - maybe that has changed to be 1 now vs zero

See

https://docs.netgate.com/pfsense/en/latest/routing/gateway-configure.html#advanced-gateway-settings

But not sure how a size of 1 vs zero would cause it to go from like 1ms to 4? So the echo request is padded, so it's not going to be 1.. So maybe going from 0 to 60 might be able to cause that sort of a difference?

Looks like length is 43 in my capture

What is the setting on your monitor, in routing - gateway, advanced?
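For what it's worth, a capture length of 43 is what an untagged Ethernet frame carrying an IPv4 ICMP echo request with a 1-byte payload adds up to (14 + 20 + 8 + 1), and anything under 60 bytes gets padded to the Ethernet minimum on the wire anyway. A minimal sketch of that arithmetic, assuming plain Ethernet, no VLAN tag, no IP options, and a capture that excludes the FCS; the function names are made up for illustration:

```python
ETHERNET_HEADER = 14     # bytes, untagged frame, FCS not counted (assumption)
IPV4_HEADER = 20         # bytes, no IP options (assumption)
ICMP_ECHO_HEADER = 8     # bytes: type, code, checksum, identifier, sequence
ETHERNET_MIN_FRAME = 60  # bytes, minimum frame size excluding the 4-byte FCS


def captured_length(payload_bytes: int) -> int:
    """Frame length as the sender builds it, before any Ethernet padding."""
    return ETHERNET_HEADER + IPV4_HEADER + ICMP_ECHO_HEADER + payload_bytes


def wire_length(payload_bytes: int) -> int:
    """Frame length on the wire once short frames are padded to the minimum."""
    return max(ETHERNET_MIN_FRAME, captured_length(payload_bytes))


for payload in (0, 1, 56):
    print(f"payload={payload:2d}  captured={captured_length(payload):3d}  "
          f"on wire={wire_length(payload):3d}")
```

Under those assumptions a payload of 0 and a payload of 1 both leave the interface as a 60-byte frame, so that change alone would not be expected to move the RTT.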

-

@johnpoz said in ICMP spikes after 23.05 upgrade:

So you're in the same DC as google?

That's the std deviation. The actual ping times went from ~19ms to 20ms. The increase is 1ms at most from what I can see.

-

@stephenw10 so maybe the increase in packet size could maybe account for that? But my point still stands - there is really nothing that the client (pfsense) could do that could increase the time something takes to respond - other than sending a larger packet..

As to an increase in the std dev, that is a measure of the jitter really or how much the numbers fluctuate. Again you're pinging across the public internet, something that takes 19ms RTT.. There is going to be fluctuation, how could the client sending the pings have anything to do with that?

Can see that in just local pings that are normally under 1ms

    From 192.168.9.253: bytes=60 seq=0004 TTL=64 ID=9e18 time=0.495ms
    Packets: sent=4, rcvd=4, error=0, lost=0 (0.0% loss) in 1.504176 sec
    RTTs in ms: min/avg/max/dev: 0.402 / 0.456 / 0.498 / 0.041

Now increase that size.

    From 192.168.9.253: bytes=540 seq=0004 TTL=64 ID=bd0e time=0.491ms
    Packets: sent=4, rcvd=4, error=0, lost=0 (0.0% loss) in 1.508622 sec
    RTTs in ms: min/avg/max/dev: 0.357 / 0.456 / 0.530 / 0.064

Notice the std dev increased.. Even though my avg is the same.. Sending 4 pings did take 4 ms more total time.. etc.
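To make the "same avg, bigger std dev" point concrete, here is a small sketch. The individual replies were not all posted, so the two sample lists below are hypothetical values picked only to be consistent with the min/avg/max shown in the two summaries; the tool's exact deviation formula is also an assumption (population standard deviation is used here).

```python
from statistics import mean, pstdev

# Hypothetical RTTs in ms, chosen to fit the posted min/avg/max (not real data).
rtts_60_bytes = [0.402, 0.429, 0.495, 0.498]
rtts_540_bytes = [0.357, 0.446, 0.491, 0.530]

for label, rtts in (("bytes=60", rtts_60_bytes), ("bytes=540", rtts_540_bytes)):
    # The mean describes the typical latency; pstdev describes the spread (jitter).
    print(f"{label:>9}: min={min(rtts):.3f} avg={mean(rtts):.3f} "
          f"max={max(rtts):.3f} dev={pstdev(rtts):.3f}")
```

Both lists average out to the same value, but the second one is spread wider around that average, which is all a larger std dev says.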

-

@stephenw10 yes, you are right. Small detail: I forgot that I am pinging 1.1.1.1, not the Google one. But I think it doesn't matter. The problem is that "something" has changed. The question is whether it is related only to the XG7100 on 23.05, or whether it is general 23.05 behavior that pings have lower priority than on 22.05.

One XG7100 has the original expansion card and one is without it, the basic version. So far I think all services are working properly; I think (and hope) that only ICMP is affected.

-

A ~1ms change like that shouldn't really affect anything. It's nothing like the sort of spikes you were seeing in Zabbix initially.

-

@stephenw10 what I am not understanding is how could anything in pfsense have to do with it pinging something across the public internet.. Makes no sense - other than the size of the ping, pfsense has no control over how fast something goes across the public internet and how long that something you're pinging takes to respond..

Pfsense knows when it put something on the wire, and when it returned.. It has no control on how long that something might take to respond... or any possible delays across the multiple hops to get from A to B and back again...

Nor does it have any control over what the jitter or std dev in a sample of pings might be.. The only thing I could think of that could affect the std dev that might be in the control of pfsense monitoring some remote IP is the sample size it uses to determine that std dev..
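As a rough illustration of the sample size point: the same series of replies gives a different std dev depending on how many recent samples go into the calculation. This is only a sketch with a made-up RTT series and a hypothetical `window_stddev` helper; it does not claim to reproduce how pfSense's gateway monitoring actually computes its figure.

```python
from statistics import pstdev


def window_stddev(rtts_ms, window):
    """Population standard deviation over the most recent `window` samples."""
    return pstdev(rtts_ms[-window:])


# Hypothetical series: 59 replies at 19.0 ms followed by one 25.0 ms reply.
rtts_ms = [19.0] * 59 + [25.0]

for window in (10, 60):
    print(f"last {window:2d} samples: stddev = "
          f"{window_stddev(rtts_ms, window):.3f} ms")
```

With the short window the single slow reply dominates the figure; with the longer window it is diluted, even though the underlying traffic is identical.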

-

If it's doing any sort of shaping it could.

We saw issues like that when reloading the ruleset was triggering a huge CPU load previously. But not continually.

-

johnpoz (LAYER 8, Global Moderator), Jun 12, 2023, 12:02 PM, last edited Jun 12, 2023, 12:03 PM

@stephenw10 is he doing shaping? Traffic on the link could cause fluctuations, that is for sure..