Another Netgate with storage failure, 6 in total so far

-

@JonathanLee said in Another Netgate with storage failure, 6 in total so far:

You use the zfs set sync=disabled pfSense/var in cron right?

No.

Because this will default every reboot...

Not here - once set it remains that way even after reboot. (Btw., on 23.04.2025 the system was rebooted after a long power outage that exhausted the UPS, caused by the provider's work on the power line.)

-

@JonathanLee said in Another Netgate with storage failure, 6 in total so far:

should they no longer show if you run "zfs list" also?

You should see the status if you run:

zfs get -r sync pfSense

or

zfs get -r sync zroot

depending on which pfSense system you have.
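With many boot environments and snapshots, the recursive output gets long. As a minimal sketch (the helper name `sync_disabled` is hypothetical, and the pool name in the usage comment is an assumption; use pfSense or zroot as appropriate), the scripted output of `zfs get` can be filtered down to just the datasets where sync is disabled:

```shell
#!/bin/sh
# Filter tab-separated "name<TAB>value<TAB>source" rows, as printed by
# `zfs get -H -o name,value,source sync ...`, down to the datasets
# whose sync value is "disabled". Reads from stdin, changes nothing.
sync_disabled() {
    awk -F '\t' '$2 == "disabled" { print $1 " (" $3 ")" }'
}

# Usage on a live system (pool name is an assumption):
#   zfs get -H -r -t filesystem -o name,value,source sync pfSense | sync_disabled
```

Restricting the query to `-t filesystem` also leaves out the snapshot rows, which only ever show "-" for this property.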

-

@fireodo Thank you for the reply,

Shell Output - zfs get -r sync pfSense

NAME  PROPERTY  VALUE  SOURCE
pfSense  sync  standard  default
pfSense/ROOT  sync  standard  default
pfSense/ROOT/23_05_01_clone  sync  standard  default
pfSense/ROOT/23_05_01_clone/cf  sync  standard  default
pfSense/ROOT/23_05_01_clone/var_db_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv4  sync  standard  default
pfSense/ROOT/23_05_01_ipv4@2024-01-12-11:46:05-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4@2024-02-29-08:52:57-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4@2024-04-01-12:32:27-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4@2024-06-27-11:52:26-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4@2024-07-03-09:09:28-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4@2025-01-20-10:11:49-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4/cf  sync  standard  default
pfSense/ROOT/23_05_01_ipv4/cf@2025-01-20-10:11:49-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4/var_cache_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv4/var_cache_pkg@2025-01-20-10:11:49-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4/var_db_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv4/var_db_pkg@2025-01-20-10:11:49-0  sync  -  -
pfSense/ROOT/23_05_01_ipv4_Backup  sync  standard  default
pfSense/ROOT/23_05_01_ipv4_Backup/cf  sync  standard  default
pfSense/ROOT/23_05_01_ipv4_Backup/var_cache_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv4_Backup/var_db_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv6  sync  standard  default
pfSense/ROOT/23_05_01_ipv6@2025-04-30-11:18:04-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6/cf  sync  standard  default
pfSense/ROOT/23_05_01_ipv6/cf@2025-04-30-11:18:04-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6/var_db_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv6/var_db_pkg@2025-04-30-11:18:04-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy  sync  standard  default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf  sync  standard  default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-01-12-11:46:05-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-02-29-08:52:57-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-04-01-12:32:27-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-06-27-11:52:26-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2024-07-25-15:54:45-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/cf@2025-04-30-12:10:00-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg  sync  standard  default
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-01-12-11:46:05-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-02-29-08:52:57-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-04-01-12:32:27-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-06-27-11:52:26-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2024-07-25-15:54:45-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_non_website_test_proxy/var_db_pkg@2025-04-30-12:10:00-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_website_test_proxy_clone  sync  standard  default
pfSense/ROOT/23_05_01_ipv6_website_test_proxy_clone@2024-07-25-15:54:45-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_website_test_proxy_clone@2025-04-30-12:10:00-0  sync  -  -
pfSense/ROOT/23_05_01_ipv6_website_test_proxy_clone/cf  sync  standard  default
pfSense/ROOT/23_05_01_ipv6_website_test_proxy_clone/var_db_pkg  sync  standard  default
pfSense/ROOT/23_09_01_ipv4_20240703094025  sync  standard  default
pfSense/ROOT/23_09_01_ipv4_20240703094025/cf  sync  standard  default
pfSense/ROOT/23_09_01_ipv4_20240703094025/var_cache_pkg  sync  standard  default
pfSense/ROOT/23_09_01_ipv4_20240703094025/var_db_pkg  sync  standard  default
pfSense/ROOT/24_03_01_ipv4  sync  standard  default
pfSense/ROOT/24_03_01_ipv4/cf  sync  standard  default
pfSense/ROOT/24_03_01_ipv4/var_cache_pkg  sync  standard  default
pfSense/ROOT/24_03_01_ipv4/var_db_pkg  sync  standard  default
pfSense/ROOT/24_03_01_ipv6_20250113135850  sync  standard  default
pfSense/ROOT/24_03_01_ipv6_20250113135850@2024-07-03-09:40:36-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850@2024-07-23-10:05:22-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850@2025-01-13-13:59:02-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf  sync  standard  default
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-03-09:09:28-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-03-09:40:36-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2024-07-23-10:05:22-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/cf@2025-01-13-13:59:02-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg  sync  standard  default
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-03-09:09:28-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-03-09:40:36-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2024-07-23-10:05:22-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_cache_pkg@2025-01-13-13:59:02-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg  sync  standard  default
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-03-09:09:28-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-03-09:40:36-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2024-07-23-10:05:22-0  sync  -  -
pfSense/ROOT/24_03_01_ipv6_20250113135850/var_db_pkg@2025-01-13-13:59:02-0  sync  -  -
pfSense/ROOT/auto-default-20240112115753  sync  standard  default
pfSense/ROOT/auto-default-20240112115753@2024-01-12-11:57:53-0  sync  -  -
pfSense/ROOT/auto-default-20240112115753/cf  sync  standard  default
pfSense/ROOT/auto-default-20240112115753/cf@2024-01-12-11:57:53-0  sync  -  -
pfSense/ROOT/auto-default-20240112115753/var_cache_pkg  sync  standard  default
pfSense/ROOT/auto-default-20240112115753/var_cache_pkg@2024-01-12-11:57:53-0  sync  -  -
pfSense/ROOT/auto-default-20240112115753/var_db_pkg  sync  standard  default
pfSense/ROOT/auto-default-20240112115753/var_db_pkg@2024-01-12-11:57:53-0  sync  -  -
pfSense/ROOT/quick-20240401123227  sync  standard  default
pfSense/ROOT/quick-20240401123227/cf  sync  standard  default
pfSense/ROOT/quick-20240401123227/var_db_pkg  sync  standard  default
pfSense/home  sync  standard  default
pfSense/reservation  sync  standard  default
pfSense/tmp  sync  disabled  local
pfSense/var  sync  disabled  local
pfSense/var/cache  sync  disabled  inherited from pfSense/var
pfSense/var/db  sync  disabled  inherited from pfSense/var
pfSense/var/log  sync  disabled  inherited from pfSense/var
pfSense/var/tmp  sync  disabled  inherited from pfSense/var

Logs and temp are now excluded from sync

and I also set

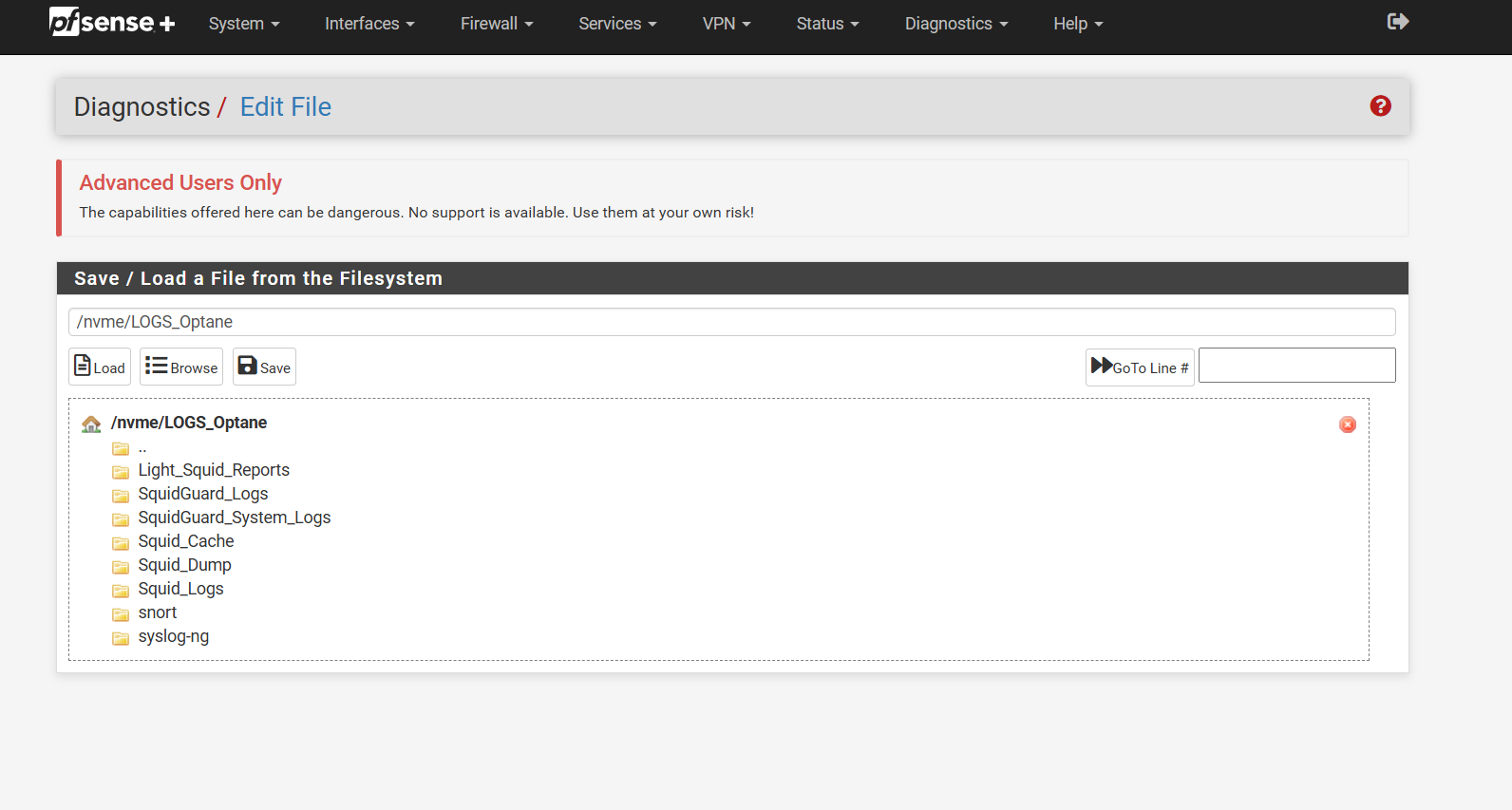

vfs.zfs.txg.timeout=120

I also use a secondary NVMe drive just for package logs. I have a mount point and created linker files that direct everything that is write-intensive over to it. That helps a lot too, or you can use a USB thumb drive for package logs if anyone is really worried.

https://forum.netgate.com/topic/195843/unofficial-guide-have-package-logs-record-to-a-secondary-ssd-drive-snort-syslog-squid-and-or-squid-cache-system/

-

@fireodo said in Another Netgate with storage failure, 6 in total so far:

Not here - once set it remains that way even after reboot.

Yes. This is correct. ZFS properties persist across reboots (unless they are changed by something during startup, say in pfSense-rc, though I don't think we do any of that).

-

One additional issue to be aware of is that up until pfSense+ 24.03 the Netgate SG-1100 (& SG-2100) installation images resulted in the eMMC having non-aligned flash partitions. This can cause file system activity to write blocks suboptimally (because sectors cross erase boundaries), resulting in increased flash wear.

pfSense Redmine reference -> https://redmine.pfsense.org/issues/15126

Easy to check - from shell use the command:

gpart show mmcsd0

and check that the numbers in the first column for the freebsd / freebsd-zfs partitions are divisible by 8 (or a higher power of 2).
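The divisibility check described above can be sketched as a small filter over the `gpart show` output. This is only an illustration: the helper name `check_alignment` is hypothetical, and it assumes the usual column order of `gpart show` (start, size, index, type).

```shell
#!/bin/sh
# Read `gpart show` output on stdin and, for every partition whose type
# starts with "freebsd", report whether its start (first column) is
# divisible by 8. Header lines ("=> ...") and free space are skipped
# because their fourth field does not start with "freebsd".
check_alignment() {
    awk '$4 ~ /^freebsd/ {
        status = ($1 % 8 == 0) ? "aligned" : "NOT aligned"
        print $3, $4, "start=" $1, status
    }'
}

# Usage on the firewall itself:
#   gpart show mmcsd0 | check_alignment
```

Any line reported as "NOT aligned" is one where the start number fails the divisible-by-8 test from the post.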

Generally if your SG-1100 (also SG-2100) was originally commissioned prior to pfSense+ 24.03 then you should consider reinstalling and restoring config.

Relevant Netgate SG-1100 documentation -> https://docs.netgate.com/pfsense/en/latest/solutions/sg-1100/reinstall-pfsense.html

-

Netgate has finally implemented the fix.

https://redmine.pfsense.org/issues/16210#change-76840

Thank you, @marcosm, @fireodo, @andrew_cb.

-

Looks like it changes the vfs.zfs.txg.timeout default from

FreeBSD vfs.zfs.txg.timeout = 5

to

pfSense vfs.zfs.txg.timeout = 30

So not as high as the 120 suggested, but consistent with andrew_cb's recommendation.
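To see which value a given box is actually running with, the tunable can be read with `sysctl -n`. The helper below is a hypothetical sketch (the name `txg_timeout_hint` is not from the patch): it only prints the current value and, if it differs from the value you want, the runtime command that would change it. A runtime change like this does not by itself survive a reboot.

```shell
#!/bin/sh
# Compare the current vfs.zfs.txg.timeout with a wanted value and print a hint.
# $1 = wanted value; $2 = optional current value (otherwise read via sysctl).
# The function itself never changes the setting.
txg_timeout_hint() {
    want=$1
    current=${2:-$(sysctl -n vfs.zfs.txg.timeout)}
    if [ "$current" -eq "$want" ]; then
        echo "vfs.zfs.txg.timeout is already $current"
    else
        echo "vfs.zfs.txg.timeout is $current; to try $want at runtime: sysctl vfs.zfs.txg.timeout=$want"
    fi
}

# Usage on the firewall itself:
#   txg_timeout_hint 30
```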

-

That patch code also has this: "zfs set sync=always pfSense/ROOT/default/cf"

Looking at my own system, I don't have that path, as I have only manually named Boot Environments. I have paths like "pfSense/ROOT/24.11_stable/cf", so that part would fail.

Should the command be run manually on the current default/active BE path, "pfSense/ROOT/24.11_stable/cf" in my case?
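One way to find the right dataset without hard-coding a boot environment name is to ask ZFS which dataset is currently mounted at /cf. The sketch below is an illustration only, not the patch's method: the helper name `active_cf_dataset` is hypothetical, and it assumes the active boot environment's cf dataset is the one mounted at /cf.

```shell
#!/bin/sh
# From tab-separated "name<TAB>mounted<TAB>mountpoint" rows, as printed by
# `zfs list -H -o name,mounted,mountpoint`, print the dataset that is
# currently mounted at /cf. Inactive boot environments carry the same
# mountpoint property but are not mounted, so they are filtered out.
active_cf_dataset() {
    awk -F '\t' '$2 == "yes" && $3 == "/cf" { print $1 }'
}

# Usage on the firewall itself:
#   ds=$(zfs list -H -t filesystem -o name,mounted,mountpoint | active_cf_dataset)
#   echo "$ds"                      # inspect the result first
#   zfs set sync=always "$ds"
```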

-

Yup. Fix incoming.

-

@stephenw10 should the timeout be 120 or 30?

-

zfs set sync=always pfSense/ROOT/default/cf

does not work on my 2100. I have an SSD; should I run a different command for this?

-

A fix will be provided once it's ready on the following redmine:

https://redmine.pfsense.org/issues/16212

-

A patch is now available for testing on the redmine.

-

@stephenw10 is there a way to stop Python pfBlocker logging? I've tried to shut off all logging in pfBlocker, but the Python module keeps on logging.

I'd prefer to keep using the Python module for its benefits, but the logging, which I rarely use, consumes my SSD's lifetime the rest of the time without benefit.

-

@Mission-Ghost What logging are you seeing?

-

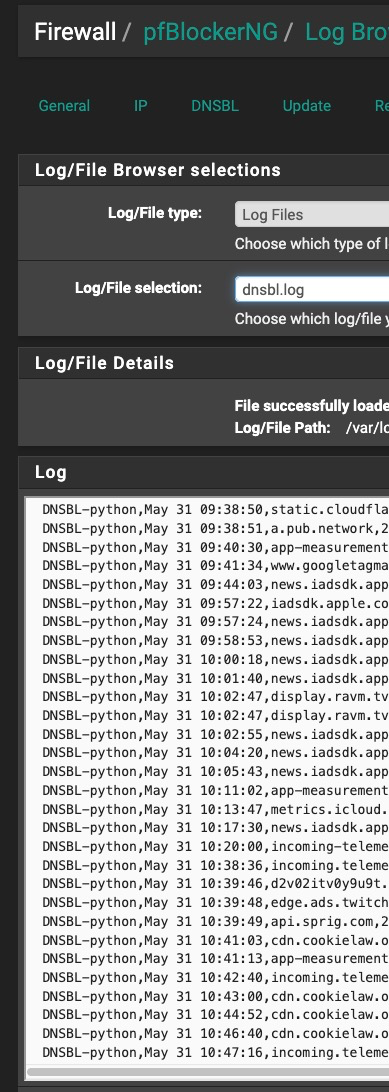

@SteveITS dnsbl.log just keeps going and going:

This is useful on rare occasions when I need to find a site to white-list, but I'd like to turn it on only on such occasions and off the rest of the time.

-

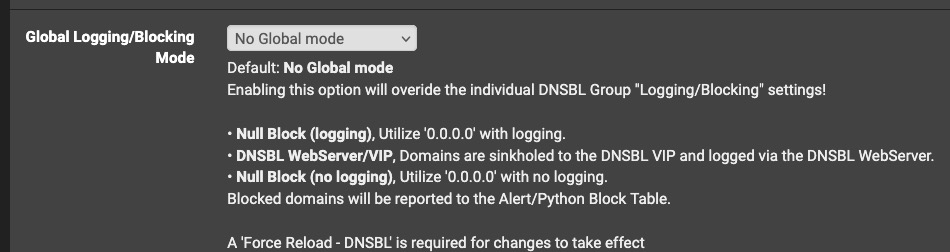

@Mission-Ghost That's set for all lists here:

or else on each list, e.g. on Firewall/pfBlockerNG/IP/IPv4.

With the logging off we have:

File successfully loaded: Total Lines: 0 Log/File Path: /var/log/pfblockerng/dnsbl.log

-

@SteveITS Thank you!

I found and set it on the master configuration:

The master setting seems to be working so far.

Why does "Null Block (no logging)" log?

Why does "No Global mode" not log?

Is it just me, or do the bullet points on the master DNSBL page fail to explain this clearly?

By my way of reading this, "No Global Mode" tells me that the individual settings on each Group will prevail. It doesn't tell me that it is overriding the individual settings on each Group, and sure doesn't tell me that logging is disabled, unlike "no logging" which says it's disabled but it isn't.

I feel like I'm taking crazy pills!

-

@Mission-Ghost No Global should mean it doesn’t override the individual settings. I just set it when creating each list so if the global settings aren’t working I profess ignorance. :)

-

@SteveITS said in Another Netgate with storage failure, 6 in total so far:

@Mission-Ghost No Global should mean it doesn’t override the individual settings. I just set it when creating each list so if the global settings aren’t working I profess ignorance. :)

Well, I guess it should mean that, but in context, to those of us who didn't develop the software, it isn't clear, particularly when the adjacent options include "no logging", which apparently does not mean 'no' logging.

Seems like getting an English major (>gasp!<) intern to help redefine the labels to be more meaningful to customers would be a low cost, easy improvement to the usability of the product.

In any case, thank you for your generous help clarifying this. My problem is solved.