Playing with fq_codel in 2.4

-

@zwck

Idk, I just used the same working config as others here, from this post: https://forum.netgate.com/topic/112527/playing-with-fq_codel-in-2-4/815

-

@andresmorago Check the in/out pipes on your floating firewall rules: are they switched?

-

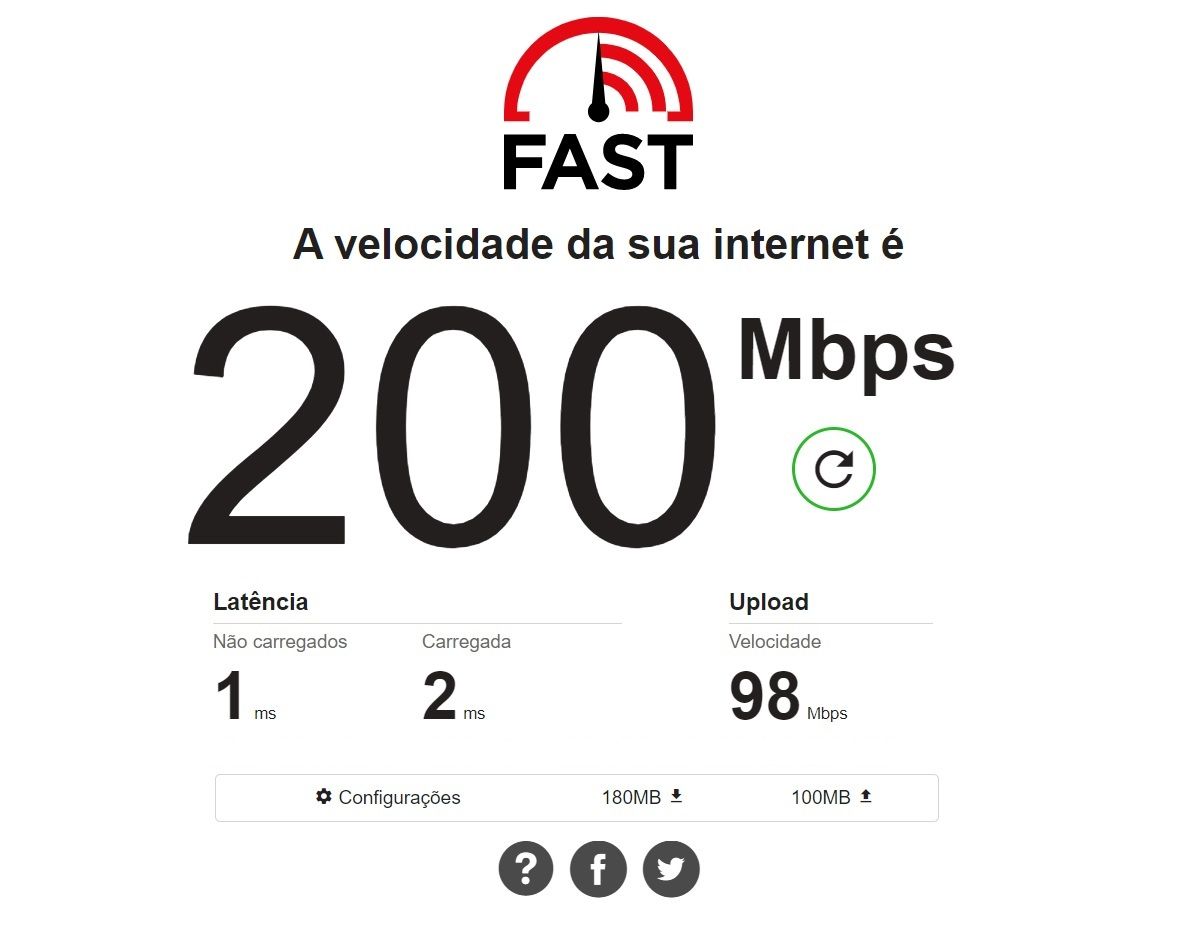

@mind12 On my 200/100 Mb connection I see no loss of speed. x86 PC.

-

@zwck I don't believe so. Change the values and test; these values work well for my network.

-

@ricardox What's your advertised line speed?

-


I don't mean to hijack the thread, but has anyone else seen any catastrophic issues with adjusting fq_codel parameters since upgrading to 2.5.0? I was playing with one of my systems that had limit and flows both set to 1024. The consensus - as much as there is one - seems to be that 10240 and 20480, respectively, may yield better results so long as you're not memory constrained. I have 4GB and it was rarely more than 20 to 30% utilized so I thought I'd try.
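The memory concern can be put in rough numbers. This is a minimal sketch, assuming each queued packet occupies one 2 KB mbuf cluster (FreeBSD's standard cluster size) and ignoring per-packet and per-flow metadata. It only estimates the packet data one completely full limiter could hold, since `limit` caps the total number of packets queued across all flows.

```python
# Rough upper bound on packet-buffer memory held by one fq_codel limiter
# when its queue is completely full.
# Assumption: each queued packet sits in one 2 KB mbuf cluster (FreeBSD MCLBYTES);
# per-packet headers and per-flow state are ignored, so real usage is higher.
MBUF_CLUSTER_BYTES = 2048


def worst_case_queue_mib(limit_packets: int) -> float:
    """Buffer memory, in MiB, needed to hold `limit_packets` queued packets."""
    return limit_packets * MBUF_CLUSTER_BYTES / (1024 * 1024)


for limit in (1024, 10240, 20480):
    print(f"limit={limit:>5}: ~{worst_case_queue_mib(limit):.0f} MiB per limiter")
```

Even at a limit of 20480 this comes to roughly 40 MiB per limiter, small next to 4 GB of RAM. That hints that raw queue size alone is an unlikely culprit, though the sketch does not model kernel-side caps such as `kern.ipc.nmbclusters`.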

Now, for full disclosure, there was some negligence on my part and I was following @andresmorago's post which accidentally had these values flipped (so 20480 for limit and 10240 for flows). When I set those values and applied, the pfSense system became unresponsive (even to pings). I eventually had to resort to hard powering it off, but it didn't come back when I turned it back on either. So I connected a monitor and was able to observe that at some point in the boot process, it began rapidly spamming the period character (.), and did so at such a rate that it was impossible to view the last boot message before this happened. If I were better versed in FreeBSD I might have known what to do to glean more useful information, but I had unhappy users so I just resorted to doing a fresh 2.5.0 installation and restoration of a config backup.

Also of note, after restoring that config backup, I threw caution to the wind and tried to update the parameters again, but this time to limit 10240 and flows 20480. That time, when I clicked Apply, the system spontaneously rebooted. It did come back, and the new values had been applied, but I don't know what happened there.

So this isn't really a support request, more just wondering if anyone else has seen any weirdness along these lines. I'm wary of adjusting these parameters any more now as well lest I need to perform a full reinstallation again. I also can't directly implicate 2.5.0 specifically here, although I believe this was the first time I changed the fq_codel params since upgrading, and I know that prior to the upgrade I had done a lot of experimentation with changing them without any issues.

-

@thenarc Not seen anything like that, but I was aware that the traffic shaping in earlier pfSense instances could play havoc with the connection if it changed for some other reason. I have recently built a v2.5.0 fresh instance and configured it with FQ_CoDel with no issues.

-

@pentangle Thanks for the input. I'd feel better had I not seen the spontaneous reset after adjusting these parameters following a fresh install; although it was a fresh install plus a config restore, so perhaps I pulled in some invalid configuration along with it. Just didn't have the stamina at the time to re-configure everything from scratch ;)

-

I have applied the same settings for my 150/10 Mb connection, but my download speed won't move above 130 Mb. Upload is fine. I also checked CPU usage during the speed test and it's fine, about 30% utilization.

This is my config, similar to @Ricardox's:

pfSense VM with Intel NICs, 2 CPUs, 4 GB RAM (about 60% utilized)

All network hardware offloading is off because of Suricata inline mode.

DownLimiter:

147 Mb, Tail Drop, FQ_CODEL (5,100,300,10240,20480), Queue 10000, ECN off

DownQueue:

Tail Drop, ECN off

Any idea/tweak I could try?
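To read the FQ_CODEL tuple above, here is a small sketch that assumes the values are listed in the pfSense GUI order target, interval, quantum, limit, flows (the same order used in the WANDown/WANUp config posted further down) and compares them with the RFC 8290 defaults of 5 ms, 100 ms, 1514 bytes, 10240 packets and 1024 flows. The class and function names are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass
class FqCodelParams:
    # Defaults as given in RFC 8290.
    target_ms: int = 5
    interval_ms: int = 100
    quantum_bytes: int = 1514
    limit_packets: int = 10240
    flows: int = 1024


def from_tuple(values):
    """Map a (target, interval, quantum, limit, flows) tuple onto named fields.

    Assumption: this is the field order the pfSense limiter page uses.
    """
    return FqCodelParams(*values)


def diff_from_defaults(p):
    """Return {field: (default, configured)} for every non-default field."""
    defaults = FqCodelParams()
    return {
        f.name: (getattr(defaults, f.name), getattr(p, f.name))
        for f in fields(FqCodelParams)
        if getattr(p, f.name) != getattr(defaults, f.name)
    }


configured = from_tuple((5, 100, 300, 10240, 20480))
for name, (default, actual) in diff_from_defaults(configured).items():
    print(f"{name}: default {default}, configured {actual}")
```

Under that assumption only quantum and flows depart from the defaults, so they may be the first two values worth reverting one at a time while testing.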

-

@mind12 Have you installed Open-VM-Tools? On my 200/100 Mb connection I see no loss of speed. x86 PC with a Realtek gigabit network card.

-

@ricardox

Sure, without the limiters I get maximum speed too.

-

I think I still have a bit of tuning to do... Any recommendations?

I have Comcast 400/25 service.

I'm getting ~380/23 Mbit/s with my limiter config and bufferbloat lag of 56 ms/41 ms respectively, but the max download bufferbloat lag spikes up to ~230 ms.

[DSLReports SpeedTest (limiters on)]

WANDown limiter @ 400 Mbit/s

Queue: CoDel, target: 5, interval: 100

Scheduler: FQ_CODEL, target: 5, interval: 100, quantum: 1514, limit: 5120, flows: 1024, QueueLength: 1001, ECN: [checked]

WANUp limiter @ 25 Mbit/s

Queue: CoDel, target: 5, interval: 100

Scheduler: FQ_CODEL, target: 5, interval: 100, quantum: 1514, limit: 10240, flows: 1024, QueueLength: 1001, ECN: [checked]

EDIT: added detail with limiters disabled.

Perhaps I should just turn them off??? Am I really getting any benefit?

448/24 Mbit/s and 51/67 ms bufferbloat with limiters disabled:

[DSLReports SpeedTest (no limiters)]

SW:

pfSense v. 2.4.5-RELEASE-p1

pfBlockerNG-devel (2.2.5_37), ntopng, bandwidthd, telegraf

OpenVPN server active, no connections at time of test.

HW:

Protectli Vault FW6C

Intel(R) Core(TM) i5-7200U CPU @ 2.50GHz

Current: 2400 MHz, Max: 2601 MHz

AES-NI CPU Crypto: Yes (active)

CPU Utilization: ~5%

Memory Usage: ~17% of 8GB

Network HW Offloading: enabled [edit: originally listed as disabled]

-

@fabrizior Hm, if the latency won't go below 50 ms with or without the limiters, I don't see any reason to use them. In my setup, despite the speed decrease with the limiters, the latency is around 10 ms.

Sadly, I don't know and haven't found any info about those advanced scheduling parameters and how to tune them. Have you tried the values/config we posted?

-


@mind12 See the images of my configuration above; I am using fq_codel limiters.

-

@fabrizior Out of curiosity, have you tried setting your download limiter bandwidth higher than 400 Mbps? I only ask because my ISP recently doubled my download speed from 100 Mbps to 200 Mbps and I've observed some inaccuracy when I bumped my download limiter bandwidth accordingly. Specifically, when I tried setting it to just 200, the observed actual limit, over multiple tests using flent, was more like 150 Mbps. Through many iterations of testing and upping the limit, I found that I had to set my download limiter's bandwidth to 240 Mbps in order to achieve an actual limit of ~200 Mbps. I can't explain why, but my test results are consistent.
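The two data points in that post can be turned into delivered-versus-configured ratios. This is a minimal sketch using only the numbers reported above (200 set gave ~150, 240 set gave ~200); the function names are hypothetical, and it assumes a linear scale factor purely to show that one does not fit both points.

```python
def delivered_ratio(configured_mbps: float, measured_mbps: float) -> float:
    """Fraction of the configured limiter bandwidth actually observed."""
    return measured_mbps / configured_mbps


def setting_for(target_mbps: float, ratio: float) -> float:
    """Limiter bandwidth to configure IF the delivered ratio were constant."""
    return target_mbps / ratio


# configured -> measured (Mbps), as reported in the post above
observations = {200: 150, 240: 200}

for configured, measured in observations.items():
    ratio = delivered_ratio(configured, measured)
    print(f"set {configured} -> got {measured}: ratio {ratio:.2f}")

# Extrapolating from the first point alone overshoots the 240 found by testing.
predicted = setting_for(200, delivered_ratio(200, 150))
print(f"predicted setting for 200 Mbps: {predicted:.0f}")
```

The ratios come out at 0.75 and about 0.83, so a single fixed correction factor would not have predicted the 240 Mbps setting; iterative testing, as done here, seems to be the practical approach.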

-

I'm still seeing maximum bufferbloat lag spikes of 400-800 ms during testing, with averages in the 50 ms range. What would cause this to continue to occur?

Also, and separately, that RFC says:

> 5.2.4. Quantum
>
> The "quantum" parameter is the number of bytes each queue gets to dequeue on each round of the scheduling algorithm. The default is set to 1514 bytes, which corresponds to the Ethernet MTU plus the hardware header length of 14 bytes.
>
> In systems employing TCP Segmentation Offload (TSO), where a "packet" consists of an offloaded packet train, it can presently be as large as 64 kilobytes. In systems using Generic Receive Offload (GRO), they can be up to 17 times the TCP max segment size (or 25 kilobytes). These mega-packets severely impact FQ-CoDel's ability to schedule traffic, and they hurt latency needlessly. There is ongoing work in Linux to make smarter use of offload engines.

Is this still a current issue?
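To put the RFC's sizes in perspective, the sketch below compares a normal frame with GRO and TSO trains in terms of default quanta and of how long the link is busy sending each one, which is roughly how long other flows wait behind it. It assumes a TCP MSS of 1460 bytes (IPv4 over a 1500-byte MTU) and uses the 25 and 400 Mbit/s rates from this thread; it only illustrates scale and does not model where offloads actually apply inside a pfSense box.

```python
# Size figures from the RFC 8290 excerpt quoted above.
QUANTUM = 1514          # default quantum: 1500-byte MTU + 14-byte Ethernet header
TSO_TRAIN = 64 * 1024   # a TSO "packet" can be as large as 64 kilobytes
MSS = 1460              # assumption: IPv4 TCP MSS on a 1500-byte MTU path
GRO_TRAIN = 17 * MSS    # GRO: up to 17 times the TCP MSS (~25 KB)


def quanta(packet_bytes: int) -> float:
    """How many default quanta a single (super-)packet is worth."""
    return packet_bytes / QUANTUM


def serialization_ms(packet_bytes: int, link_mbps: float) -> float:
    """Milliseconds the link is busy transmitting one packet."""
    return packet_bytes * 8 / (link_mbps * 1_000_000) * 1000


for label, size in [("full frame", QUANTUM), ("GRO train", GRO_TRAIN), ("TSO train", TSO_TRAIN)]:
    print(
        f"{label:10}: {size:6d} B = {quanta(size):5.1f} quanta, "
        f"{serialization_ms(size, 25):6.2f} ms at 25 Mbit/s, "
        f"{serialization_ms(size, 400):5.2f} ms at 400 Mbit/s"
    )
```

On a 25 Mbit/s uplink a single 64 KB train occupies the link for about 21 ms, versus about 0.5 ms for a normal frame, which gives a feel for why the RFC says these mega-packets hurt latency.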

Will test disabling TSO when I can take the interfaces offline.

Should I also turn off LRO?

-

I get 460-480 Mbps down without the limiters enabled.

I've been playing with setting the limiter anywhere from 350-450 Mbps and am currently testing at 425 Mbps, getting ~390-410 Mbps per test.

Regardless of the bandwidth limit setting, I'm consistently getting an average bufferbloat lag of ~50-70 ms with spikes up to 800+ ms when the limiter is enabled. Lag is also in the ~50-70 ms avg. range with the limiter disabled, but the max lag tops out around ~100 ms (instead of ~800).

Upload speed is 25 Mbps; with the limiter set to 24 Mbps I get 21-22 Mbps effective, with bufferbloat lag of ~35 ms avg and spikes up to ~100 ms.

I'm very curious why the limiter isn't improving the average lag, and why the max lag gets worse.

-

@mind12 Latency is unique to the individual connection, so quoting a specific figure (such as "10ms") isn't helpful. What you should see is latency that doesn't change massively when you start to saturate the connection.
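One way to express that is to measure latency relative to the idle baseline rather than in absolute terms. This is a minimal sketch with hypothetical function names and made-up sample values (not measurements from this thread) for two connections whose idle latencies differ.

```python
from statistics import mean


def latency_increase_ms(idle_ms, loaded_ms):
    """Average latency added by saturating the link, relative to idle."""
    return mean(loaded_ms) - mean(idle_ms)


def worst_spike_ms(idle_ms, loaded_ms):
    """Largest latency excursion under load, relative to the idle average."""
    return max(loaded_ms) - mean(idle_ms)


# Hypothetical ping samples in ms (illustrative only, not real measurements).
short_path = {"idle": [9, 10, 11], "loaded": [14, 15, 16]}
long_path = {"idle": [44, 45, 46], "loaded": [49, 50, 51]}

for name, s in [("short path", short_path), ("long path", long_path)]:
    print(
        f"{name}: +{latency_increase_ms(s['idle'], s['loaded']):.0f} ms avg, "
        f"+{worst_spike_ms(s['idle'], s['loaded']):.0f} ms worst"
    )
```

Both connections add the same 5 ms under load even though their absolute latency differs by 35 ms, so by this measure they are doing equally well at controlling bufferbloat.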