Upgrade to 2.4.5 broke 802.1x RADIUS WiFi over VPN

-

Updating my OpenVPN host from pfSense 2.4.4-p3 to 2.4.5 broke 802.1x WPA2-Enterprise WiFi at the remote sites. The problem seems possibly related to the RADIUS handshake / connectivity. Reverting the OpenVPN host (main site) to 2.4.4-p3 restores functionality. Remote site can remain on 2.4.5 and it works again so long as the main site is 2.4.4-p3 or older.

The setup is as follows:

- OpenVPN is set up as a site-to-site tunnel, routable between sites. I can connect directly between PCs at different sites.

- Firewalls set to allow all traffic over OpenVPN tunnel.

- All sites have UniFi UAP access points, talking to single RADIUS server at main site.

- RADIUS server is a Windows Server 2012R2 domain controller + DNS + NPS (etc.).

- Clients are primarily domain-joined Windows PCs, authenticating with a computer certificate. Phones use username/password and that seems to break too.

I have 2 remote sites, one running 2.4.4-p3 and the other 2.4.5. Both exhibit the same behavior, and only the main site (host) pfSense version seems to matter. I can provide config specifics as needed.

When the host is on 2.4.4-p3, everything works fine. When I update it to 2.4.5 WiFi authentication fails, and laptops try to connect over and over with no logged error (thanks Microsoft). I do see RADIUS connectivity in the states tables of both host and remote pfSense. I also see RADIUS activity start (but never succeed or fail) in the server log. I can SSH in to the AP and ping the RADIUS server, and ping the AP from the RADIUS server regardless of pfSense version. I suspect some packets are being routed differently, dropped, or modified on the latest version that the previous version didn't touch. Or vice versa??

-

@DAVe3283 I'd do packet captures on both main and remote sites for traffic from the APs

-

I was hoping someone had seen this already and it was either a known issue or an easy fix.

I captured the traffic with the main site rolled back to 2.4.4-p3 (working WiFi join). I'll re-update to 2.4.5 this weekend and get a pair of captures with it in the broken state, and go from there. I don't want to break my family's WiFi while they are all trying to work from home... again

If anyone has any insight that might save me from learning the low-level protocol behind 802.1x, please chime in!

-

Nope, that's not anything we are aware of. I doubt it's 802.1x specific, that just happens to be hitting some other issue.

I would normally look at a NAT issue here but you say it's all routed?

Then check for a fragmentation problem; I've seen that in similar situations. I'm not aware of anything relevant that changed going to 2.4.5 though. A packet capture should show it if that is the cause.

Steve

-

Correct, it is all routed. Each site is on a different subnet:

- 10.0.0.0/23 main site LAN

- 10.0.7.0/24 VPN tunnel

- 10.0.6.0/24 site "H" LAN

- 10.0.8.0/24 site "C" LAN

Traceroute from Domain Controller / NPS / RADIUS server:

    PS C:\Users\DAVe3283> tracert UAP-AC-LR.<site C>.<domain>
    Tracing route to UAP-AC-LR.<site C>.<domain> [10.0.8.2]
    over a maximum of 30 hops:
      1    <1 ms    <1 ms    <1 ms  pfsense1.<domain> [10.0.1.100]
      2   103 ms   112 ms   114 ms  pfsense.<site C>.<domain> [10.0.7.8]
      3    82 ms    77 ms    99 ms  uap-ac-lr.<site C>.<domain> [10.0.8.2]
    Trace complete.

Traceroute from UniFi access point:

    BZ.v3.9.15# traceroute DC.<domain>
    traceroute to DC.<domain> (10.0.1.1), 30 hops max, 38 byte packets
     1  pfSense.<site C>.<domain> (10.0.8.1)  0.108 ms  0.134 ms  0.151 ms
     2  pfsense1.<domain> (10.0.7.1)  152.666 ms  135.819 ms  101.842 ms
     3  dc.<domain> (10.0.1.1)  95.220 ms  81.191 ms  69.084 ms

Anyone see anything wrong with that general setup?
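For what it's worth, the addressing can be sanity-checked with a few lines of Python. This is only a sketch: the subnets and hop addresses are the ones listed in this post, while the helper names `overlapping_pairs` and `owner` are made up for illustration.

```python
import ipaddress

# Subnets as listed in this post.
SUBNETS = {
    "main site LAN": ipaddress.ip_network("10.0.0.0/23"),
    "VPN tunnel": ipaddress.ip_network("10.0.7.0/24"),
    'site "H" LAN': ipaddress.ip_network("10.0.6.0/24"),
    'site "C" LAN': ipaddress.ip_network("10.0.8.0/24"),
}


def overlapping_pairs(subnets):
    """Return every pair of subnet names whose address ranges overlap."""
    names = sorted(subnets)
    return [
        (a, b)
        for i, a in enumerate(names)
        for b in names[i + 1:]
        if subnets[a].overlaps(subnets[b])
    ]


def owner(address, subnets=SUBNETS):
    """Return the name of the subnet containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in subnets.items():
        if ip in net:
            return name
    return None


if __name__ == "__main__":
    print("overlaps:", overlapping_pairs(SUBNETS))
    for hop in ("10.0.1.100", "10.0.7.8", "10.0.8.2", "10.0.8.1", "10.0.7.1", "10.0.1.1"):
        print(hop, "->", owner(hop))
```

Nothing overlaps, and every hop in both traceroutes falls in the subnet I would expect, so the routing itself looks sane.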

I did notice on the working capture, some of the RADIUS messages are large enough they fragment, and carry all the way through the tunnel to the RADIUS server still fragmented. I will pay close attention to those fragmented packets when I redo the test on the new version. If those are getting dropped or mis-assembled rather than just passed through, that would do it.
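For anyone following along, the fragmentation arithmetic can be sketched like this. It is a minimal illustration, assuming plain IPv4 with a 20-byte header (no options), an 8-byte UDP header, and a 1500-byte MTU. The function name `ipv4_fragments` is made up for this post, and the 1700-byte payload is only an example size, not a value taken from my capture.

```python
def ipv4_fragments(udp_payload_len, mtu=1500, ip_header_len=20, udp_header_len=8):
    """Split one UDP datagram into IPv4 fragments.

    Returns a list of (fragment_offset_in_8_byte_units, ip_packet_len, more_fragments).
    """
    # The UDP header travels inside the IP payload of the first fragment.
    total = udp_payload_len + udp_header_len
    # Every fragment except the last must carry a multiple of 8 payload bytes.
    per_fragment = (mtu - ip_header_len) // 8 * 8
    if per_fragment <= 0:
        raise ValueError("MTU too small to carry any payload")

    fragments = []
    offset = 0
    while offset < total:
        chunk = min(per_fragment, total - offset)
        more_fragments = offset + chunk < total
        fragments.append((offset // 8, ip_header_len + chunk, more_fragments))
        offset += chunk
    return fragments


if __name__ == "__main__":
    # Example: a RADIUS message with a 1700-byte UDP payload (hypothetical size).
    for frag in ipv4_fragments(1700):
        print(frag)
```

Under those assumptions, anything with more than 1472 bytes of UDP payload needs at least two fragments, which is easy to hit once certificates are being carried in the RADIUS exchange.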

-

If traffic is being fragmented I would bet that is somehow causing this.

Make sure you don't have pfscrub disabled anywhere.

Is it failing from all sites? Are they all sending fragmented packets?

Assigning the OpenVPN interface will change the way fragmented packets are handled. We have seen it work correctly with the interface assigned at the receiving end when it failed without that.

Steve

-

@stephenw10 Good points, thanks!

I can post the working packet capture if needed, but from what I can tell with Wireshark, some of the RADIUS messages are larger than a single packet, so they get fragmented, probably due to the size of the public certificates being sent. With the VPN host on 2.4.4-p3, those packets get passed straight through untouched, and everything works.

All sites were failing when the main site was upgraded to 2.4.5. One remote site is 2.4.5, the other is 2.4.4-p3, so it seems the deciding factor is the host/receiving end of the VPN.

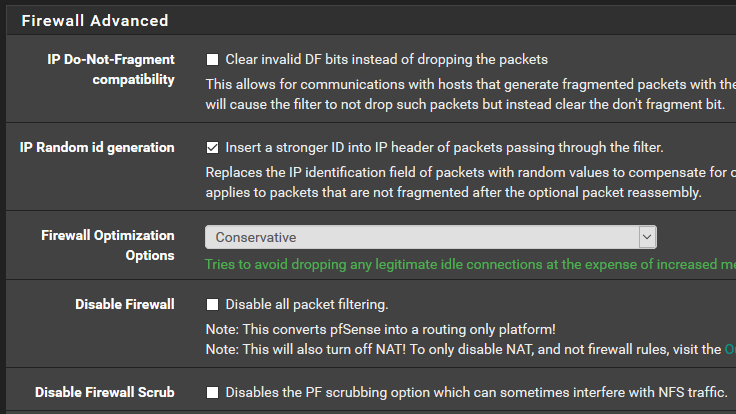

This has been my config for as long as I can remember:

That look OK? The remote sites use the same settings, except one site has the Firewall Optimization Options set to "High-latency" due to their internet connection type.

The OpenVPN interface is not assigned on either end currently. I can try that if it turns out to be a fragmentation issue.

My plan is to try and re-upgrade the main site to 2.4.5 this Saturday and redo the packet captures once things stop working. Hopefully it is as easy as assigning an interface. Is that the recommended configuration? Should I be assigning the OpenVPN interface at all sites, or just the main/host?

-

Yes, that looks fine.

The packet capture should show what's happening. I would guess that the packet fragments are being dropped somewhere. Where we've seen that before, it has been on leaving the internal interface at the receiving end.

Steve

-

@stephenw10 So far I've been using `tcpdump` on the pfSense box to capture what it is passing through the various interfaces. Is that reliable to capture the packets being dropped in this situation? Or do I need to find a way to capture the LAN port with an external tool? I hope not...

-

Yes, that is how I've seen it previously. I could see packets (or fragments) coming in on the OpenVPN interface and not leaving the internal interface as I expected them to.

Still not sure what might have induced that in 2.4.5, if that is what you're seeing.

Steve

-

I updated to pfSense 2.4.5 again, and as soon as a client tried to connect where the RADIUS packets were fragmented, it wouldn't work. So something with fragmented packet handling changed from 2.4.4-p3 to 2.4.5, and that is breaking RADIUS in this case.

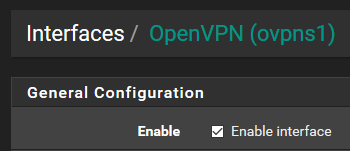

For now, I assigned the OpenVPN interface, and enabled it. It was not enabled after assignment. Should I have left it disabled?

I then had to restart OpenVPN service, but things are working again! Unfortunately, not all connection attempts result in fragments, so that could just be coincidence; I will watch for any future failures.

Hopefully it will just keep working, and I can go back to my regularly scheduled weekend! If not, I guess I will start a deep dive into the packet captures. I grabbed them at every interface along the entire route, so I have plenty to look at.
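If it does come to that deep dive, the comparison between two capture points could be sketched roughly as below. This is only an outline under some assumptions: each capture has already been reduced to tuples of (source, destination, IP ID, fragment offset, more-fragments flag), for example from Wireshark's `ip.id`, `ip.frag_offset` and `ip.flags.mf` fields, and the firewall does not rewrite the IP ID in between. The function names `group` and `compare` are hypothetical.

```python
from collections import defaultdict


def group(records):
    """Group fragment records by datagram: (src, dst, ip_id) -> {(offset, more_fragments)}."""
    by_datagram = defaultdict(set)
    for src, dst, ip_id, frag_offset, more_fragments in records:
        by_datagram[(src, dst, ip_id)].add((frag_offset, more_fragments))
    return by_datagram


def compare(ingress, egress):
    """Classify each datagram seen on the ingress capture by what the egress capture shows."""
    seen_in, seen_out = group(ingress), group(egress)
    result = {}
    for key, fragments in seen_in.items():
        out = seen_out.get(key, set())
        if not out:
            result[key] = "missing"          # nothing left the second interface
        elif out == fragments:
            result[key] = "passed as-is"     # same fragments on both sides
        elif out == {(0, False)} and len(fragments) > 1:
            result[key] = "reassembled"      # one unfragmented packet came out
        else:
            result[key] = "partial"          # some fragments lost or changed
    return result


if __name__ == "__main__":
    ap, dc = "10.0.8.2", "10.0.1.1"
    openvpn_side = [(ap, dc, 1, 0, True), (ap, dc, 1, 185, False),
                    (ap, dc, 2, 0, True), (ap, dc, 2, 185, False)]
    lan_side = [(ap, dc, 1, 0, True), (ap, dc, 1, 185, False),
                (ap, dc, 2, 0, False)]
    for datagram, verdict in compare(openvpn_side, lan_side).items():
        print(datagram, verdict)
```

The sample records in the usage are invented, not taken from my captures; the point is just to separate "dropped" from "reassembled" from "passed through untouched" per datagram.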

-

Nice, that does sound like what you were hitting then.

When I've seen it before it didn't take much analysis. The packets were simply not being sent from the internal interface, which is where the RADIUS server is in this case, I'd guess.

I have no idea why that behaviour might have changed. If you confirm that was the issue we will have to look into it.

Steve

-

@stephenw10 This does seem to be the fix. I now have a packet capture of a working exchange with fragmented packets after adding the OpenVPN interface, and a failure before adding the interface. I can post the captures and full network topo if that would help, but I would rather share it privately if possible.

-

Really we would need to reproduce it here. Can you say how and where it was failing before assigning the interface?

Like packet fragments were arriving on the OpenVPN interface pcap but never leaving on the internal pcap? That's what I expect to see if anything.

Steve

-

@stephenw10 Sure. I dug in, and found this appears to be several things coming together to cause this failure.

In all cases, packets are fragmented at 1504 bytes inside the OpenVPN tunnel.

The twist: on my local (main) LAN, I have jumbo frames turned on (MTU of 9000 bytes). But not on the RADIUS server; I forgot, so it had the default MTU of 1500 bytes.

This... was not helping anything.

With 2.4.5 & no OpenVPN interface, the LAN interface appears to reassemble some packets as large as 1763 bytes on the LAN side. But some remain fragmented at 1566 bytes.

So the packets were passing through the LAN interface (yay), but were being dropped by the RADIUS server because its NIC didn't have jumbo frames enabled (boo). I bet if jumbo frames were enabled, it would have worked fine.

I would expect that packets should either consistently be re-assembled or consistently be left fragmented as they pass from OpenVPN loopback to the LAN interface. So there probably is some undesired behavior on pfSense 2.4.5 in this config. And this is probably where the difference between 2.4.4-p3 & 2.4.5 lies.

After assigning OpenVPN an interface on 2.4.5, fragments do not appear to be reassembled at all. This avoided the jumbo frame mis-match on the LAN, and everything works.
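The failure mode boils down to a size check at each hop, which can be sketched as below. This is a simplified illustration only: it compares a packet length against a per-hop MTU and ignores Ethernet framing overhead, the hop list is my own simplification of the LAN path, and the function name `first_drop` is made up. The 1763-byte size is the reassembled packet mentioned above; 9000 is the jumbo MTU on my LAN and 1500 is the standard Ethernet default.

```python
def first_drop(packet_len, hops):
    """Return the name of the first hop whose MTU is smaller than the packet, or None."""
    for name, mtu in hops:
        if packet_len > mtu:
            return name
    return None


if __name__ == "__main__":
    # Simplified LAN-side path: jumbo-enabled LAN, then a NIC left at the default MTU.
    lan_path = [("LAN (jumbo frames)", 9000), ("RADIUS server NIC", 1500)]
    print(first_drop(1763, lan_path))  # a reassembled packet larger than 1500
    print(first_drop(1500, lan_path))  # a packet that fits everywhere
```

So a reassembled packet only survives if every device on the LAN side accepts jumbo frames, while packets left at or under 1500 bytes fit everywhere.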

Let me know if you need any more information.

Edit: weirder and weirder. pfSense did not have anything entered for the MTU on the LAN interface (or any interface), so it should have been using the default MTU of 1500. In fact, it drops anything larger than 1500 coming in. So why in the world was it assembling packets with an MTU well in excess of that? I would say that is a bug as well.

-

Hmm, that is weird. What was it doing in 2.4.4-p3? Not reassembling the packets even without the interface assigned?

Let me see if I can find anything that might explain that in 2.4.5.

Steve

-

@stephenw10 Exactly. 2.4.4-p3 was not reassembling packets even without the interface assigned.

I still find it weird that 2.4.5 reassembles some packets, seemingly at random, without the interface assigned. And assembling packets that exceed the set MTU of the adapter, at that.

-

Hello,

I've been dealing with this issue for the past couple of days and stumbled upon this forum post. The two remote sites that I have updated to 2.4.5 no longer work with our RADIUS WPA2/AES SSID.

Has this been identified as a bug? Is there a fix in the works?

If we create an Interface for OpenVPN and assign it to the client, then our gateway group for failover will no longer be active.

Please assist. :)

Thanks,

Chris

-

The gateway group that the client is using? Or having the OpenVPN gateway as part of the group?

-

Yes, the gateway group that the OpenVPN client is using. It is currently set as a TRI WAN Failover.

If we create a new OpenVPN interface, do we have to associate it in any way with the OpenVPN client?

Maybe I'm misunderstanding the solution stated above.

What is the purpose of creating an interface for OpenVPN? What changed in version 2.4.5?

Thanks for your help!