Poor IPv6 performance through HAProxy


I am seeing terrible upstream performance when connecting to my global IPv6 address through HAProxy: about 0.02 Mbps vs. 40 Mbps. Only upstream performance is affected, and the IPv4 address works fine. HAProxy runs on pfSense and forwards to several Docker containers on my LAN based on hostname. I've run every test I can think of to try to make sense of this:

- Open the container's public IPv6 address through the firewall and connect directly - Good performance

- Port-forward port 8080 on the WAN IPv6 address (the same address HAProxy listens on), translating directly to the container's IPv6 address - Good performance

- Pick a new IPv6 address on the network, one off from the container's v6 address; create an alias for it and bind HAProxy to listen on it - Poor performance (0.02 Mbps)

- Configure HAProxy to proxy to the container's IPv6 address instead of its IPv4 address - Poor performance

- Change the port for HAProxy SSL termination from 443 to 8443 - Poor performance

- Try haproxy-devel 0.62_10 - Poor performance
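To make the comparisons above repeatable from a client, one option is a small upload probe that forces a single address family and times a large POST. This is a minimal sketch, not the tool used for the numbers above. It assumes the target accepts an HTTP POST of arbitrary size at a hypothetical path `/upload` and only replies after reading the whole body; the hostname in the usage note is also hypothetical.

```python
"""Rough upload-throughput probe over a forced IPv4 or IPv6 connection.

Assumptions (hypothetical, not from the original setup): the target accepts
an HTTP POST at UPLOAD_PATH and sends its response only after reading the
whole body. TLS is used because HAProxy terminates SSL in this setup.
"""
import socket
import ssl
import time

UPLOAD_PATH = "/upload"  # hypothetical endpoint path


def resolve(host: str, port: int, family: socket.AddressFamily) -> tuple:
    """Return the first sockaddr for host, restricted to one address family."""
    infos = socket.getaddrinfo(host, port, family, socket.SOCK_STREAM)
    return infos[0][4]


def mbps(num_bytes: int, seconds: float) -> float:
    """Convert bytes transferred over a duration into megabits per second."""
    return num_bytes * 8 / seconds / 1_000_000


def upload(host: str, port: int, family: socket.AddressFamily,
           size: int = 1_000_000) -> float:
    """POST `size` zero bytes to host over the given family; return Mbps."""
    addr = resolve(host, port, family)
    ctx = ssl.create_default_context()
    head = (f"POST {UPLOAD_PATH} HTTP/1.1\r\nHost: {host}\r\n"
            f"Content-Length: {size}\r\nConnection: close\r\n\r\n").encode()
    body = b"\0" * size
    with socket.socket(family, socket.SOCK_STREAM) as raw:
        raw.connect(addr)
        # The TLS handshake happens inside wrap_socket, so it is not timed.
        with ctx.wrap_socket(raw, server_hostname=host) as tls:
            start = time.monotonic()
            tls.sendall(head + body)
            tls.recv(1)  # wait for the first byte of the server's response
            elapsed = time.monotonic() - start
    return mbps(size, elapsed)
```

Calling `upload("speedtest.example.com", 443, socket.AF_INET)` and then the same call with `socket.AF_INET6` compares both address families through the same HAProxy frontend. At 0.02 Mbps the default 1 MB payload takes several minutes, so a smaller `size` may be more practical for the slow path.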

This is clearly not an ISP problem (Xfinity), since IPv6 performance directly to the container or through a port forward is good. The ISP also does not seem to be filtering port 443 specifically for IPv6 (IPv4 traffic to port 443 is fine), since switching to 8443 also gives poor performance.

I'm totally lost as to what to try next. The only ways I've gotten good performance are bypassing HAProxy for IPv6 or sticking to IPv4. I'm pretty certain HAProxy shouldn't even care whether it's listening on a v4 or a v6 address.

Running the haproxy package 0.61_7 on pfSense 2.6.0-RELEASE.

My HAProxy config file:

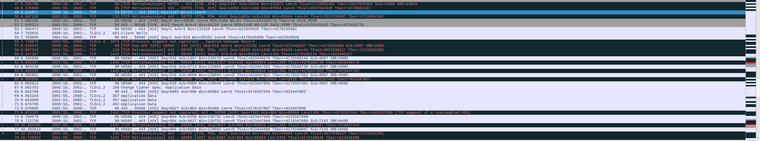

```
# Automaticaly generated, dont edit manually.
# Generated on: 2023-02-19 20:36
global
    maxconn                     10000
    stats socket /tmp/haproxy.socket level admin expose-fd listeners
    uid                         80
    gid                         80
    nbproc                      1
    nbthread                    1
    hard-stop-after             15m
    chroot                      /tmp/haproxy_chroot
    daemon
    tune.ssl.default-dh-param   2048
    log-send-hostname           haproxy
    server-state-file /tmp/haproxy_server_state

listen HAProxyLocalStats
    bind 127.0.0.1:2200 name localstats
    mode http
    stats enable
    stats refresh 10
    stats admin if TRUE
    stats show-legends
    stats uri /haproxy/haproxy_stats.php?haproxystats=1
    timeout client 5000
    timeout connect 5000
    timeout server 5000

resolvers globalresolvers
    nameserver local 127.0.0.1:53
    resolve_retries 20
    timeout retry 5s
    timeout resolve 10s

frontend Default-443-merged
    bind            <ipv4-ip>:443 name <ipv4-ip>:443 ssl crt-list /var/etc/haproxy/Default-443.crt_list
    bind            <ipv6-ip>:443 name <ipv6-ip>:443 ssl crt-list /var/etc/haproxy/Default-443.crt_list
    mode            http
    log             global
    option          httplog
    option          http-keep-alive
    option          forwardfor
    acl https ssl_fc
    http-request set-header X-Forwarded-Proto http if !https
    http-request set-header X-Forwarded-Proto https if https
    timeout client  30000
    acl             speedtest var(txn.txnhost) -m str -i speedtest.<redacted>
    use_backend speedtest_pool_ipvANY if speedtest

backend speedtest_pool_ipvANY
    mode            http
    id              105
    log             global
    http-response set-header Strict-Transport-Security max-age=31536000;
    rspirep ^(Set-Cookie:((?!;\ secure).)*)$ \1;\ secure if { ssl_fc }
    timeout connect 30000
    timeout server  30000
    retries         3
    option forwardfor
    server          speedtest speedtest.<docker>:80 id 101 check inter 20000 weight 100 resolvers globalresolvers
```