Major DNS Bug 23.01 with Quad9 on SSL

-

2023-05-04 11:01:07.981902-05:00 kernel - cannot forward src fe80:2::557:1ce2:51f6:763c, dst 2600:1402:b800:1a::6847:8fd0, nxt 6, rcvif re1, outif re0

What has the address fe80:2::557:1ce2:51f6:763c? It shouldn't be trying to send traffic to a GUA address from an LL address; that's invalid.

What shows in the unbound config for interface binding(s)?
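For context on why that packet is refused: RFC 4291 says routers must not forward packets with a link-local source or destination address to other links, so a packet from fe80::/10 arriving on re1 and headed out re0 toward a global address gets dropped. A minimal Python sketch using the standard `ipaddress` module classifies the two addresses from the log line. It assumes the `fe80:2::` form is FreeBSD's kernel (KAME) convention of embedding the interface index in the second 16-bit word of a link-local address; the helper names are hypothetical.

```python
import ipaddress


def embedded_scope(addr: ipaddress.IPv6Address) -> int:
    """Interface index embedded in bytes 2-3 of a kernel-formatted link-local address (assumed KAME convention)."""
    return int.from_bytes(addr.packed[2:4], "big")


def strip_embedded_scope(addr: ipaddress.IPv6Address) -> ipaddress.IPv6Address:
    """Zero bytes 2-3 to recover the link-local address as it appears on the wire."""
    raw = bytearray(addr.packed)
    raw[2:4] = b"\x00\x00"
    return ipaddress.IPv6Address(bytes(raw))


def may_forward(src: str, dst: str) -> bool:
    """A router must not forward a packet whose source or destination is link-local."""
    s, d = ipaddress.IPv6Address(src), ipaddress.IPv6Address(dst)
    return not (s.is_link_local or d.is_link_local)


src = ipaddress.IPv6Address("fe80:2::557:1ce2:51f6:763c")
dst = "2600:1402:b800:1a::6847:8fd0"
print(embedded_scope(src))          # 2
print(strip_embedded_scope(src))    # fe80::557:1ce2:51f6:763c
print(may_forward(str(src), dst))   # False
```

A packet sourced from the WAN's global address (the outgoing-interface address in the config) would pass the same check, which is why the question is which host or daemon is emitting traffic from the link-local address.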

-

@stephenw10 And restarting the service overrides my manual changes. This used to work fine until about 2 months ago. I know it's local DNS, as I can manually set the DNS on mobile devices that are showing the issue and the app failure problem goes away.

-

@jimp Here is the Unbound.conf:

##########################

# Unbound Configuration

##########################

# Server configuration

server:

chroot: /var/unbound

username: "unbound"

directory: "/var/unbound"

pidfile: "/var/run/unbound.pid"

use-syslog: yes

port: 53

verbosity: 4

hide-identity: yes

hide-version: yes

harden-glue: yes

do-ip4: yes

do-ip6: yes

do-udp: yes

do-tcp: yes

do-daemonize: yes

module-config: "python validator iterator"

unwanted-reply-threshold: 10000000

num-queries-per-thread: 2048

jostle-timeout: 200

infra-keep-probing: yes

infra-host-ttl: 900

infra-cache-numhosts: 20000

outgoing-num-tcp: 50

incoming-num-tcp: 50

edns-buffer-size: 4096

cache-max-ttl: 86400

cache-min-ttl: 0

harden-dnssec-stripped: yes

msg-cache-size: 512m

rrset-cache-size: 1024m

qname-minimisation: yes

num-threads: 4

msg-cache-slabs: 4

rrset-cache-slabs: 4

infra-cache-slabs: 4

key-cache-slabs: 4

outgoing-range: 4096

#so-rcvbuf: 4m

auto-trust-anchor-file: /var/unbound/root.key

prefetch: yes

prefetch-key: yes

use-caps-for-id: no

serve-expired: yes

aggressive-nsec: yes

# Statistics

# Unbound Statistics

statistics-interval: 0

extended-statistics: yes

statistics-cumulative: yes

# TLS Configuration

tls-cert-bundle: "/etc/ssl/cert.pem"

tls-port: 853

tls-service-pem: "/var/unbound/sslcert.crt"

tls-service-key: "/var/unbound/sslcert.key"

# Interface IP addresses to bind to

interface: 192.168.246.1

interface: 192.168.246.1@853

interface: 2604:2d80:b204:b00:201:2eff:fe5a:6a7c

interface: 2604:2d80:b204:b00:201:2eff:fe5a:6a7c@853

interface: 127.0.0.1

interface: 127.0.0.1@853

interface: ::1

interface: ::1@853

# Outgoing interfaces to be used

outgoing-interface: 173.21.178.244

outgoing-interface: 2604:2d80:8419:0:c4a9:d993:4305:fa3e

# DNS Rebinding

# For DNS Rebinding prevention

private-address: 127.0.0.0/8

private-address: 10.0.0.0/8

private-address: ::ffff:a00:0/104

private-address: 172.16.0.0/12

private-address: ::ffff:ac10:0/108

private-address: 169.254.0.0/16

private-address: ::ffff:a9fe:0/112

private-address: 192.168.0.0/16

private-address: ::ffff:c0a8:0/112

private-address: fd00::/8

private-address: fe80::/10

# Access lists

include: /var/unbound/access_lists.conf

# Static host entries

include: /var/unbound/host_entries.conf

# dhcp lease entries

include: /var/unbound/dhcpleases_entries.conf

# Domain overrides

include: /var/unbound/domainoverrides.conf

# Unbound custom options

server:

tls-upstream: yes

forward-zone:

name: "."

forward-ssl-upstream: yes

forward-addr: 1.1.1.1@853

forward-addr: 1.0.0.1@853

#forward-addr: 2606:4700:4700::64@853

#forward-addr: 2606:4700:4700::6400@853

#forward-addr: 149.112.112.11@853

#forward-addr: 9.9.9.11@853

#forward-addr: 2620:fe::11@853

#forward-addr: 2620:fe::fe:11@853

#forward-addr: 52.205.50.148@853

# Remote Control Config

include: /var/unbound/remotecontrol.conf

# Python Module

python:

python-script: pfb_unbound.py

-

@n0m0fud said in Major DNS Bug 23.01 with Quad9 on SSL:

The GUI only allows selection of physical interfaces, not IP addresses.

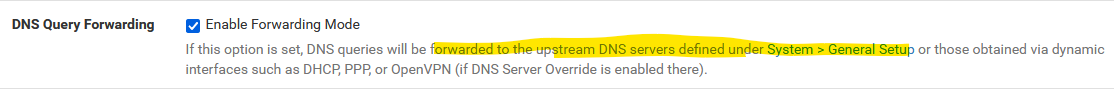

Unless it changed in 23.05:

Custom unbound option text is not required for forwarding.

re: cannot forward: is IPv6 working otherwise, from both pfSense and LAN devices? Can you ping -6 google.com?

-

@n0m0fud Ping works fine both from a LAN device and from pfSense. I enabled forwarding and let it run for a bit. I have to get on to other things.

Now logs show:

2023-05-04 11:44:02.155327-05:00 unbound 45552 [45552:3] debug: comm point listen_for_rw 36 0

2023-05-04 11:44:02.135144-05:00 unbound 45552 [45552:3] debug: comm point start listening 36 (-1 msec)

2023-05-04 11:44:02.135137-05:00 unbound 45552 [45552:3] debug: the query is using TLS encryption, for an unauthenticated connection

2023-05-04 11:44:02.134995-05:00 unbound 45552 [45552:3] debug: tcp bound to src ip6 2604:2d80:8419:0:c4a9:d993:4305:fa3e port 0 (len 28)

2023-05-04 11:44:02.134947-05:00 unbound 45552 [45552:3] debug: serviced send timer

2023-05-04 11:44:02.134938-05:00 unbound 45552 [45552:3] debug: cache memory msg=66072 rrset=66072 infra=7808 val=66288

2023-05-04 11:44:02.134929-05:00 unbound 45552 [45552:3] info: 0RDd mod2 rep 144.228.109.190.in-addr.arpa. PTR IN

2023-05-04 11:44:02.134924-05:00 unbound 45552 [45552:3] info: mesh_run: end 1 recursion states (1 with reply, 0 detached), 1 waiting replies, 0 recursion replies sent, 0 replies dropped, 0 states jostled out

2023-05-04 11:44:02.134918-05:00 unbound 45552 [45552:3] debug: mesh_run: iterator module exit state is module_wait_reply

2023-05-04 11:44:02.134908-05:00 unbound 45552 [45552:3] debug: dnssec status: not expected

2023-05-04 11:44:02.134904-05:00 unbound 45552 [45552:3] debug: sending to target: <.> 2606:4700:4700::64#853

2023-05-04 11:44:02.134898-05:00 unbound 45552 [45552:3] info: sending query: 144.228.109.190.in-addr.arpa. PTR IN

2023-05-04 11:44:02.134892-05:00 unbound 45552 [45552:3] debug: selrtt 376

2023-05-04 11:44:02.134883-05:00 unbound 45552 [45552:3] debug: rpz: iterator module callback: have_rpz=0

2023-05-04 11:44:02.134876-05:00 unbound 45552 [45552:3] debug: attempt to get extra 3 targets

2023-05-04 11:44:02.134871-05:00 unbound 45552 [45552:3] debug: ip4 1.1.1.1 port 853 (len 16)

2023-05-04 11:44:02.134866-05:00 unbound 45552 [45552:3] debug: ip4 1.0.0.1 port 853 (len 16)

2023-05-04 11:44:02.134861-05:00 unbound 45552 [45552:3] debug: ip6 2606:4700:4700::64 port 853 (len 28)

2023-05-04 11:44:02.134856-05:00 unbound 45552 [45552:3] debug: ip6 2606:4700:4700::6400 port 853 (len 28)

-

@n0m0fud said in Major DNS Bug 23.01 with Quad9 on SSL:

The GUI only allows selection of physical interfaces.

Hmm, interesting. I guess it shows only the v6 link-local addresses as separate 'interfaces'.

-

@gertjan said in Major DNS Bug 23.01 with Quad9 on SSL:

To (re)build TLS connections, entropy goes down the drain fast.

On Linux this can certainly be a problem, especially on VMs, etc. But I thought BSD got its entropy in a different way, and that it was unlikely for BSD to run out the way Linux can?

-

Strange :

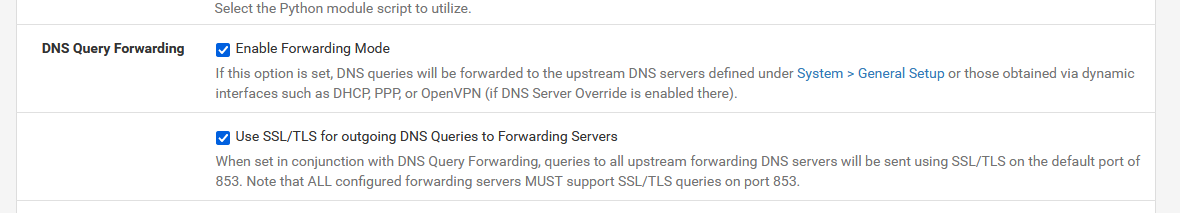

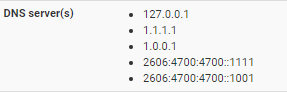

... dhcp lease entries include: /var/unbound/dhcpleases_entries.conf Domain overrides include: /var/unbound/domainoverrides.conf Unbound custom options server: tls-upstream: yes forward-zone: name: "." forward-ssl-upstream: yes forward-addr: 1.1.1.1@853 forward-addr: 1.0.0.1@853 #forward-addr: 2606:4700:4700::64@853 #forward-addr: 2606:4700:4700::6400@853 #forward-addr: 149.112.112.11@853 #forward-addr: 9.9.9.11@853 #forward-addr: 2620:fe::11@853 #forward-addr: 2620:fe::fe:11@853 #forward-addr: 52.205.50.148@853 Remote Control Config .....When you set :

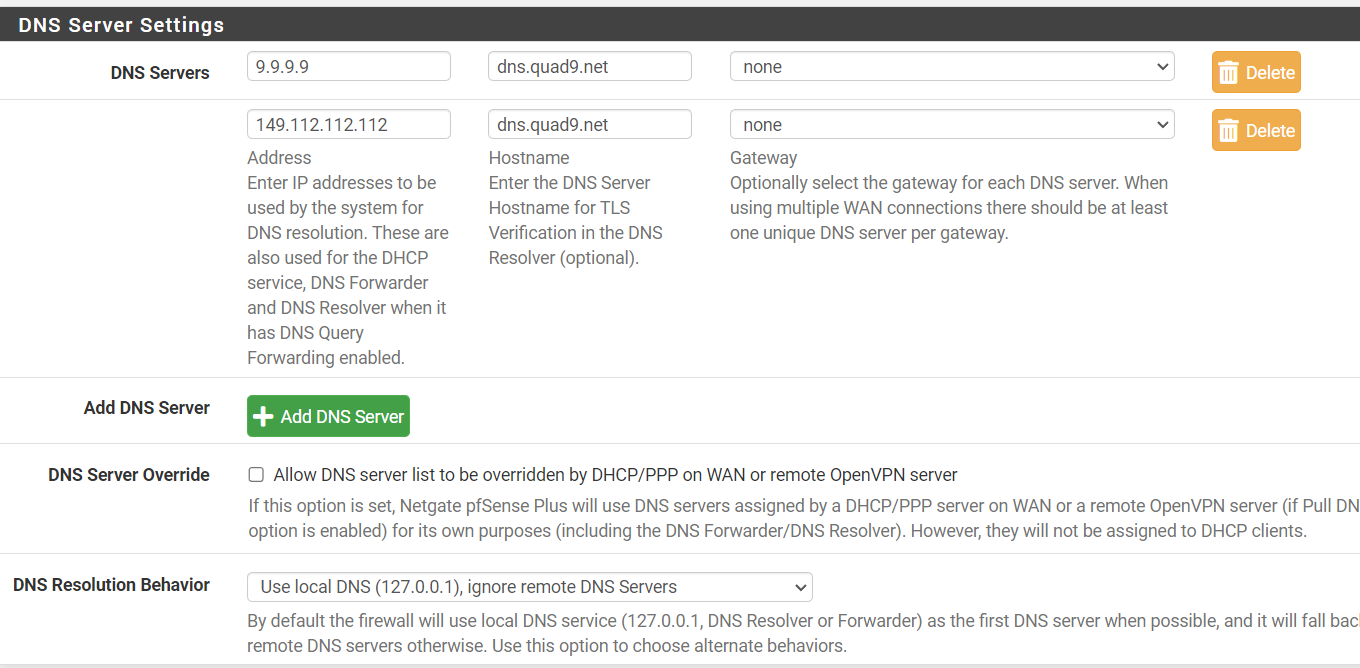

pfSense will add a "forward-zone" section will all the needed addresses :

..... # dhcp lease entries include: /var/unbound/dhcpleases_entries.conf # Domain overrides include: /var/unbound/domainoverrides.conf # Forwarding forward-zone: name: "." forward-tls-upstream: yes forward-addr: 9.9.9.9@853#dns9.quad9.net forward-addr: 149.112.112.112@853#dns9.quad9.net forward-addr: 2620:fe::fe@853#dns9.quad9.net forward-addr: 2620:fe::9@853#dns9.quad9.net # Unbound custom options server: statistics-cumulative: no ### # Remote Control Config ### .....And no "forward-ssl-upstream" but "forward-tls-upstream", although both are the same.
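The two excerpts differ in one more detail besides the option name: pfSense appends #dns9.quad9.net to each forward-addr, while the custom options give only ip@port. In unbound.conf, the name after # is what Unbound checks the upstream's TLS certificate against, so entries without it would be consistent with the "TLS encryption, for an unauthenticated connection" debug line in the earlier log. A minimal Python sketch that splits entries of the form addr[@port][#name] and flags the ones without an auth name; the function and type names are hypothetical.

```python
from typing import NamedTuple, Optional


class ForwardAddr(NamedTuple):
    addr: str
    port: Optional[int]       # None when no "@port" was given
    auth_name: Optional[str]  # None when no "#name" was given


def parse_forward_addr(spec: str) -> ForwardAddr:
    """Split an unbound forward-addr value of the form addr[@port][#name]."""
    spec = spec.strip()
    name = None
    if "#" in spec:
        spec, name = spec.split("#", 1)
    port = None
    if "@" in spec:  # IPv6 addresses contain ':' but never '@'
        spec, port_text = spec.rsplit("@", 1)
        port = int(port_text)
    return ForwardAddr(spec, port, name or None)


custom = ["1.1.1.1@853", "1.0.0.1@853"]
generated = ["9.9.9.9@853#dns9.quad9.net", "2620:fe::fe@853#dns9.quad9.net"]
for spec in custom + generated:
    fa = parse_forward_addr(spec)
    print(fa.addr, fa.port, "auth name set" if fa.auth_name else "no auth name")
```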

So you are forwarding without the GUI set to forwarding mode?

Why would you use the custom options to achieve forwarding?

Maybe that needed to be done in the past, but not anymore. The usage of tls-upstream: yes is also very rare.

Google knows about it in just one place (!!), the unbound.conf doc:

tls-upstream: <yes or no> Enabled or disable whether the upstream queries use TLS only for transport. Default is no. Useful in tunneling scenarios. The TLS contains plain DNS in TCP wireformat. The other server must support this (see tls-service-key). If you enable this, also configure a tls-cert-bundle or use tls-win-cert or tls-system-cert to load CA certs, otherwise the connections cannot be authenticated. This option enables TLS for all of them, but if you do not set this you can configure TLS specifically for some forward zones with forward-tls-upstream. And also with stub-tls-upstream.

Reading this makes me think: I would stay away from it.
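Following that doc text, TLS can be scoped to the forward zone with forward-tls-upstream (as the GUI-generated block above does), so the server-wide tls-upstream line is not needed. As an illustration of that shape only, a tiny Python helper that emits a per-zone TLS forward-zone; the function name and server list are illustrative, and in practice pfSense's forwarding mode writes this block for you.

```python
def render_forward_zone(servers, tls_name=None, zone="."):
    """Return unbound.conf text for a forward-zone that enables TLS for this zone only."""
    lines = ["forward-zone:", f'name: "{zone}"', "forward-tls-upstream: yes"]
    for server in servers:
        suffix = f"#{tls_name}" if tls_name else ""  # name used to authenticate the TLS cert
        lines.append(f"forward-addr: {server}@853{suffix}")
    return "\n".join(lines)


print(render_forward_zone(
    ["9.9.9.9", "149.112.112.112", "2620:fe::fe", "2620:fe::9"], "dns9.quad9.net"
))
```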

Btw: I've been forwarding to Quad9 (IPv4 and IPv6) for the last week or so.

I haven't detected any issues whatsoever.

If my unbound got restarted, like this morning, that was me doing it.

-


I am working on a build of the version of Unbound we shipped with 22.05 that will run on 23.01 (and one for 23.05). If the problem goes away with this old version of Unbound, I will start bisecting to find a root cause. I just don't want to go off in the weeds chasing ghosts.

It would also be useful to know if this problem also manifests on 23.05.

Stand by.

-

This issue is not unique to pfSense.

We do have a workaround:

- Stop the Unbound service

- Run: elfctl -e +noaslr /usr/local/sbin/unbound

- Start the Unbound service

Ref: https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=270912
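The workaround steps above can be sketched as a small Python script. The service stop/start commands are not given in the thread, so they are passed in by the caller (STOP_UNBOUND and START_UNBOUND below are hypothetical placeholders, not real commands); the elfctl invocation is the one from the post. The parser for the verification step assumes the `elfctl /usr/local/sbin/unbound` output format quoted later in the thread.

```python
import re
import subprocess
from typing import Callable, Dict, List, Sequence

UNBOUND = "/usr/local/sbin/unbound"
# Matches lines like: noaslr 'Disable ASLR' is set.
FEATURE_RE = re.compile(r"^\s*(?P<name>\w+)\s+'[^']*'\s+is\s+(?P<state>set|unset)\.?\s*$")


def workaround_steps(stop_cmd: Sequence[str], start_cmd: Sequence[str],
                     binary: str = UNBOUND) -> List[List[str]]:
    """Stop Unbound, set the noaslr ELF feature flag on its binary, start Unbound."""
    return [list(stop_cmd), ["elfctl", "-e", "+noaslr", binary], list(start_cmd)]


def run_steps(steps: List[List[str]], runner: Callable = subprocess.run) -> None:
    for cmd in steps:
        runner(cmd, check=True)  # raises on the first failing step


def parse_elfctl(output: str) -> Dict[str, bool]:
    """Map feature names in `elfctl <file>` output to True (set) or False (unset)."""
    features = {}
    for line in output.splitlines():
        m = FEATURE_RE.match(line)
        if m:
            features[m.group("name")] = m.group("state") == "set"
    return features


# Dry run: print the steps instead of executing them.
for cmd in workaround_steps(["STOP_UNBOUND"], ["START_UNBOUND"]):
    print(" ".join(cmd))

sample = """File '/usr/local/sbin/unbound' features:
noaslr 'Disable ASLR' is set.
wxneeded 'Requires W+X mappings' is unset."""
print(parse_elfctl(sample))  # {'noaslr': True, 'wxneeded': False}
```

Injecting the runner keeps the sketch testable without touching a live firewall; on a real box, run_steps would be called with the actual service commands.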

-

I am following this thread with interest. I was once plagued by this (DNS over TLS slowness, random timeouts) but no longer am, and it's not 100% clear why, so I made the change as a precaution.

elfctl -e +noaslr /usr/local/sbin/unbound

elfctl /usr/local/sbin/unbound

Shell Output - elfctl /usr/local/sbin/unbound

File '/usr/local/sbin/unbound' features:

noaslr 'Disable ASLR' is set.

noprotmax 'Disable implicit PROT_MAX' is unset.

nostackgap 'Disable stack gap' is unset.

wxneeded 'Requires W+X mappings' is unset.

la48 'amd64: Limit user VA to 48bit' is unset.

This website indicates ASLR is on by default in FreeBSD 14 and not in 13 (or lower?): https://wiki.freebsd.org/AddressSpaceLayoutRandomization. So maybe this explains why I stumbled across this after upgrading from 22.05 to 23.01?

-

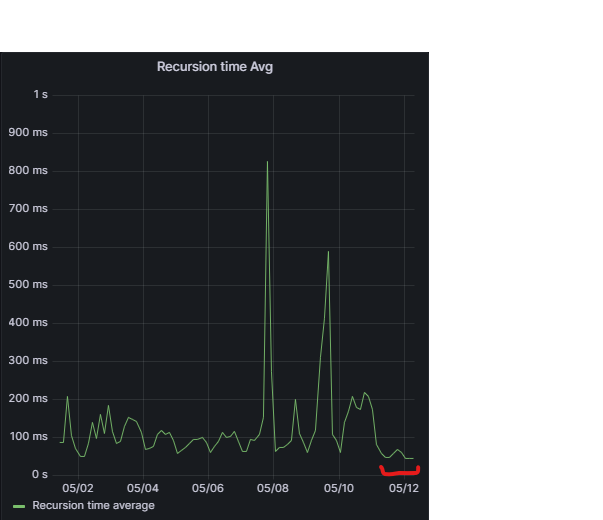

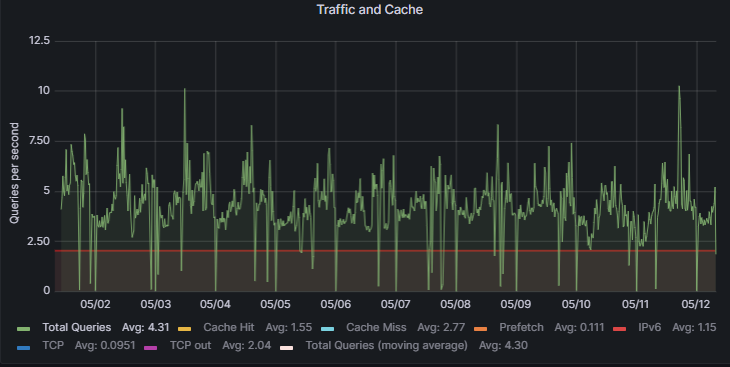

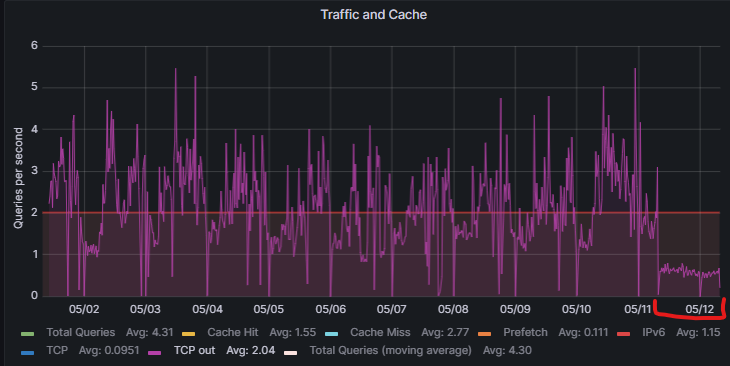

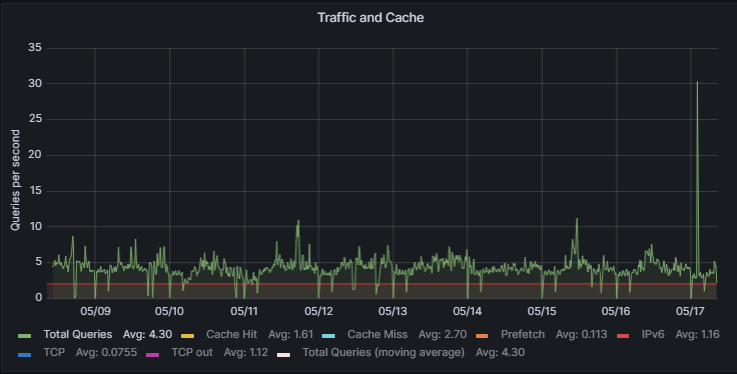

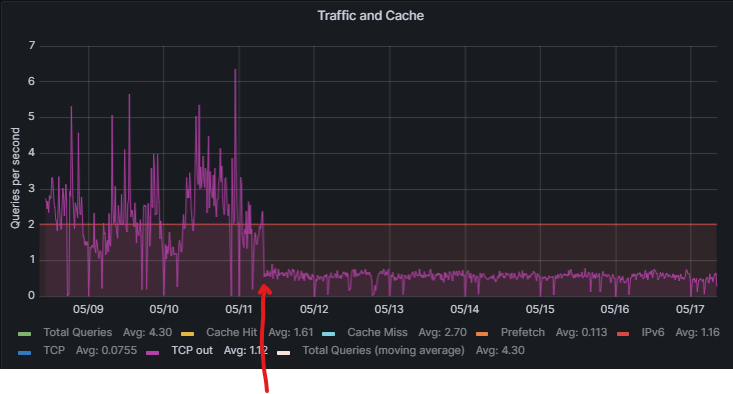

After 24 hours, here are some Unbound stats observed after turning off ASLR as per the note above. My environment has been stable for the last 10 days, and this was the only change, made 24 hours ago. I WFH 8-10 hours a week and my DNS calls have been consistent over this period (running DNS over TLS to Cloudflare).

10-15 ms average recursion time improvement since the change

DNS queries have remained fairly consistent

This observation sticks out, though. I'm not technical enough to explain what's going on here, but 'TCP out' has tightened up. All other metrics measured (which are hidden) are identical.

Several days later, the data still shows a consistent new trend since disabling ASLR...
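For a before/after comparison without a dashboard, `unbound-control stats_noreset` prints counters as key=value lines. A minimal Python sketch, assuming that output format and the key names total.recursion.time.avg (reported in seconds) and num.query.tcpout (queries Unbound sent over outgoing TCP, presumably what a "TCP out" panel plots). The sample values are made up for illustration and are not the figures from this post.

```python
def parse_stats(text: str) -> dict:
    """Parse `unbound-control stats_noreset` style key=value lines into floats."""
    stats = {}
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if sep:
            try:
                stats[key.strip()] = float(value)
            except ValueError:
                pass  # ignore non-numeric values
    return stats


def recursion_delta_ms(before: dict, after: dict) -> float:
    """Change in average recursion time in milliseconds (Unbound reports seconds)."""
    key = "total.recursion.time.avg"
    return (after[key] - before[key]) * 1000.0


# Illustrative snapshots only, not measurements.
before = parse_stats("total.recursion.time.avg=0.060000\nnum.query.tcpout=120")
after = parse_stats("total.recursion.time.avg=0.048000\nnum.query.tcpout=118")
print(round(recursion_delta_ms(before, after), 1))  # -12.0
```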

-

@joedan Where do I find that graphing?

-

I use Grafana / InfluxDB.

I'm not a Linux person, so I use a downloaded, pre-made Home Assistant virtual machine in Windows 11 Pro (Hyper-V). The Grafana / InfluxDB add-ons were a very simple click to install and run. I use the pfSense Telegraf package with a custom config for Unbound stats reporting, documented here:

https://github.com/VictorRobellini/pfSense-Dashboard

The Grafana dashboard is here:

https://grafana.com/grafana/dashboards/6128-unbound/

Victor doesn't appear to have one for Unbound, but I also use his dashboard for other stats (from his GitHub page). I didn't have to code anything, just follow the bouncing ball on various sites to set things up.

-

@joedan Thanks, I'll check it out

-

@joedan said in Major DNS Bug 23.01 with Quad9 on SSL:

Like the subject of the thread:

but arguably the same issue: 1.1.1.1 or 9.9.9.9, "what's the difference?" I'm forwarding just to test whether it works or not.

Up until today, I didn't find any issues. Note that I'm still using

as I presume that error conditions would get logged, if they arrive.

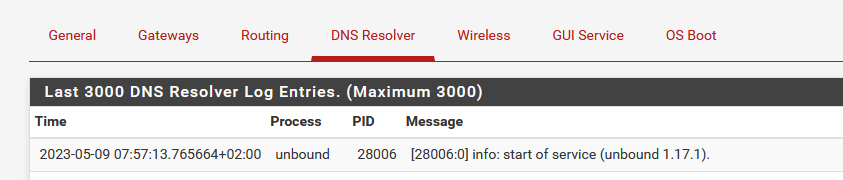

The last log line from unbound tells me that it started a couple of days ago:

I'm going to restart unbound now and disable address space layout randomization (ASLR), although I just can't wrap my head around this workaround: why would the position in (virtually mapped) memory matter?

ASLR is used in every modern OS these days.

It's an extra layer of obscurity without any cost or negative side effects and, as far as I know, it only makes the life of a hacker more difficult. Attack vectors that use stack or memory (aka buffer) overruns become much harder, as the process uses a different memory layout every time it starts.

Btw: this is what I think. I admit I don't know shit about this ASLR executable option, and was only vaguely aware of the concept.

I also think, or thought, that a programmer doesn't need to be aware of 'where' the code, data and other segments are placed in memory. We have all been writing relocatable code for decades now without being aware of it, as the compiler and linker take care of all these things.
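On the question of why layout should matter: correct code indeed shouldn't care, but a latent memory bug (reading uninitialized or already-freed memory, for instance) can behave differently depending on where things land, so a layout change can make such a bug appear or disappear. That is a general observation, not a diagnosis of this Unbound issue. The sketch below only makes ASLR visible: it starts two fresh Python processes, each printing the address of a newly allocated ctypes buffer. With ASLR active the two addresses typically differ; with it disabled they are often identical.

```python
import subprocess
import sys

# Program run in each child process: allocate a small C buffer and print its address.
CHILD = (
    "import ctypes\n"
    "buf = ctypes.create_string_buffer(64)\n"
    "print(ctypes.addressof(buf))\n"
)


def buffer_address_in_fresh_process() -> int:
    """Start a new interpreter and return the address its buffer landed at."""
    result = subprocess.run(
        [sys.executable, "-c", CHILD], capture_output=True, text=True, check=True
    )
    return int(result.stdout.strip())


first = buffer_address_in_fresh_process()
second = buffer_address_in_fresh_process()
print(hex(first), hex(second), "differ" if first != second else "same")
```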

The unbound issue was first filed as a FreeBSD bug, and FreeBSD said: go ask the unbound author. See the post above.

Disabling ASLR is just a stop-gap. (edit: if this is even related to this bug... we'll see)

IMHO, the real issue is somewhere between unbound and its linked libraries "libcrypto.so.111" and "libssl.so.111", as I presume that the issue arises when forwarding over TLS is used. Unbound's default mode, resolving, doesn't use TLS, so, for me, that explains why the resolver works fine when resolving.

Anyway, not a pfSense issue, more an unbound issue, or even further away: the way all this interoperates.

The good news: it's still an issue for Netgate, and as they are very FreeBSD-aware, they will find out what the real issue is. [end of me thinking out loud]

-

I would love to see anyone who was hitting this issue repeatedly confirm the ASLR workaround here.

-

@stephenw10

I'm testing right now and for the moment it's "OK"... I just restored my DNS settings to match my 22.05 version (which was working without any problem).

-

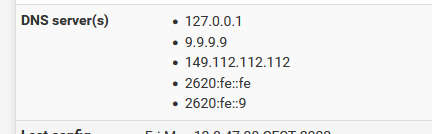

-

You are forwarding, OK, and using TLS, port 853?

Right?

edit:

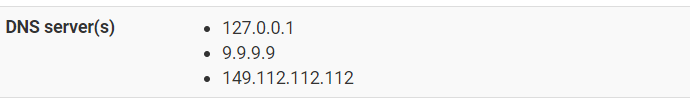

I am forwarding to these two over TLS, and most (not all) traffic actually goes over 2620:fe::fe and 2620:fe::9, the IPv6 counterparts of 9.9.9.9 and 149.112.112.112.
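To see how queries actually split across forwarders, Unbound's debug output (the config posted earlier runs at verbosity: 4) logs a "sending to target" line for each upstream query. A small Python sketch that tallies those lines and counts "unauthenticated connection" notices; the line format is assumed from the debug log posted earlier in the thread, and the sample lines are copied from it.

```python
import re
from collections import Counter

# Assumed format: "<date> <time> unbound <pid> [<pid>:<thread>] <level>: <message>"
LINE_RE = re.compile(
    r"^(?P<ts>\S+ \S+) unbound (?P<pid>\d+) \[(?P=pid):(?P<thread>\d+)\] "
    r"(?P<level>\w+): (?P<msg>.*)$"
)
TARGET_RE = re.compile(r"sending to target: <[^>]*> (?P<addr>\S+)#(?P<port>\d+)")


def summarize(lines):
    """Count upstream targets and unauthenticated-TLS notices in Unbound debug output."""
    targets = Counter()
    unauthenticated = 0
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip lines from other programs or unexpected formats
        msg = m.group("msg")
        t = TARGET_RE.search(msg)
        if t:
            targets[f"{t.group('addr')}#{t.group('port')}"] += 1
        if "for an unauthenticated connection" in msg:
            unauthenticated += 1
    return targets, unauthenticated


sample = [
    "2023-05-04 11:44:02.135137-05:00 unbound 45552 [45552:3] debug: the query is using TLS encryption, for an unauthenticated connection",
    "2023-05-04 11:44:02.134904-05:00 unbound 45552 [45552:3] debug: sending to target: <.> 2606:4700:4700::64#853",
    "2023-05-04 11:44:02.134898-05:00 unbound 45552 [45552:3] info: sending query: 144.228.109.190.in-addr.arpa. PTR IN",
]
targets, unauth = summarize(sample)
print(targets.most_common())  # [('2606:4700:4700::64#853', 1)]
print(unauth)                 # 1
```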

I did not apply the ASLR patch... I'm still waiting for it to fail.

As soon as I see it fail, I'll apply the patch, so I'll know what I don't want to see anymore.